[Disclaimer: I'm definitely not the first person to think of this point, but I felt it was worth exploring anyway. Also I am not an ML expert.]

Introduction

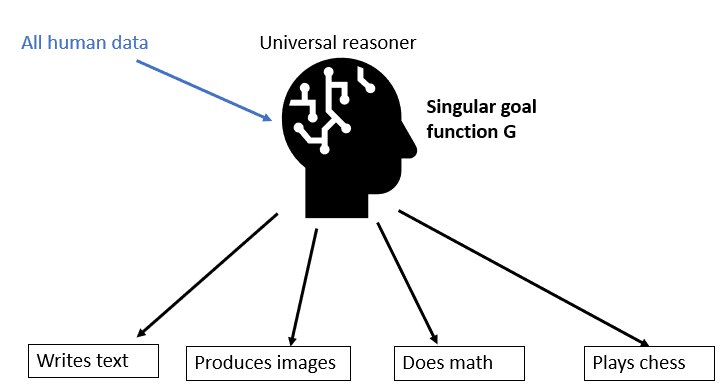

When talking about AI, we almost always refer to an AI as a singular entity. We discuss what the goals of a future AI are and what its capabilities are. This naturally leads many people to picture the AI as a singular entity like a human, with a unified goal and agency, where the same “person” does each action. This “generalist” AI, if tasked with performing a variety of tasks, such as writing text, making images, doing math and playing chess, would be structured like this:

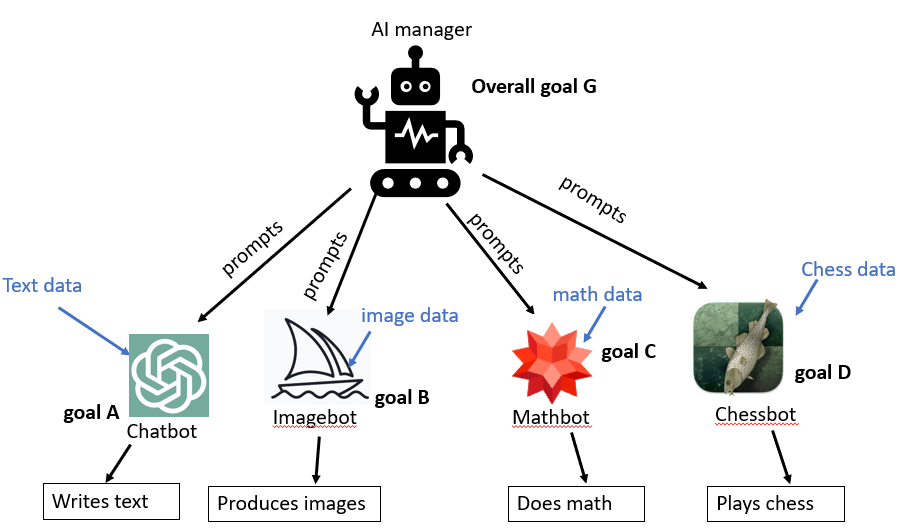

However, I actually think AIs might end up being entities in the way a corporation is an entity. Microsoft may have “goals” and take actions, but inside it consists of many different entities working together, each of which does different things and has different individual goals. This model, the “modular” AI, would involve a manager AI that contracts out specific tasks to specific AI experts in each task, looking like the following:

In the near-term, I expect “modular” AI systems to dominate over “generalist” AI systems. We can build a modular AI now that does all the activities above with performance either comparable to humans or greatly exceeding them. This is not true for a “generalist” AI system. I think it's possible, even likely, that the first systems that look like "AGI" will have this structure.

In this post, I will explain how current AI systems are already modular, why modular AI will be superior in the near term, and some reasons why this might persist in the long term as well. I will explore some of the implications of modular AI for AGI risk as well.

AI systems are already modular with regular code

Pretty much all large programs are “modular”, in that they are divided into subroutines or functions that are separate from each other, interacting via selected inputs and outputs that they feed into each other.

This is good practice for a ton of reasons: it makes the code easier to read and organize, sections of code can be reused in different places and in other programs, code can be split up between people and developed separately, it’s easier to test and debug with only a few inputs and outputs, and it’s easier to scale.

Unsurprisingly, existing AI systems work this way as well. If I make a statement like “ChatGPT is an inscrutable black box”, I’m actually only referring to one complex neural network function within a larger system that is otherwise composed of regular, “dumb” code.

When I visit ChatGPT, code governing web servers flashes up a screen and presents me with an input area. When I type an input and send it, “dumb code” takes that input and converts it into a token array, which is then fed into the "real" GPT, which is a ginormous series of linear algebra operations. This is the neural network, the part we think of as the “AI”, with all its emergent behavior. But once the neural network is done, all it does is spit out a word (or other token). The dumb code is responsible for deciding what to do with that word. It might check to ensure the word isn’t on a list of banned words, for example, and then output it back to the user if it’s safe.
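To make that division of labour concrete, here is a minimal sketch of the wrapper described above. Everything in it is an assumption for illustration: `tokenize`, `fake_model`, `respond` and the `BANNED_WORDS` list are hypothetical names, the "model" is a trivial stand-in rather than a real neural network, and real systems use subword tokenizers and far more elaborate filtering.

```python
from typing import Callable, List

# Hypothetical blocklist; a real deployment would use something more elaborate.
BANNED_WORDS = {"badword"}


def tokenize(text: str) -> List[str]:
    """Dumb code: turn raw input into a token array (here, lowercase words)."""
    return text.lower().split()


def fake_model(tokens: List[str]) -> str:
    """Stand-in for the neural network: returns exactly one output token.

    This stub just echoes the last input token; a real model would run a
    large stack of linear algebra here.
    """
    return tokens[-1] if tokens else "<empty>"


def respond(user_input: str, model: Callable[[List[str]], str] = fake_model) -> str:
    """The surrounding dumb code: pre-process, call the model, post-process."""
    tokens = tokenize(user_input)   # dumb code
    word = model(tokens)            # the only "AI" step
    if word in BANNED_WORDS:        # dumb code again: decide what to do with it
        return "[filtered]"
    return word
```

The point of the sketch is only that the model is one function call among several, and that the code around it decides what actually reaches the user.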

“Dumb code” is going to remain a part of software no matter what, although it is possible that some or all of it will be written by an AI. It gets the job done, and compared to neural networks it is easy to debug and test, and it will not hallucinate. In this sense, all AI systems will be a combination of dumb code modules and AI modules.

In the near-term, multiple modular AIs are way better than singular AIs

Suppose you are tasked with using neural network AI to auto-generate YouTube video essays on different topics, with no human input.

Plan A: Using a single, large neural network, scrape as many YouTube videos as you can, build some sort of “overall similarity” function, and train a neural network to produce videos that are as “close” as possible to existing YouTube videos, balancing fidelity in sound, video and content.

Plan B: Use ChatGPT to create a video script, use an AI voice reader to read out the script, and use Midjourney to generate the images for the video, perhaps using a custom AI to pick out the Midjourney prompts from the video script.

Plan A, if tried today, would probably produce garbled nonsense. Shoving “speech accuracy”, the actual words being said, and images into one loss function is inefficient, and there is far less data available that combines all of those things than there is for each specialized task on its own.

On the other hand, I would be surprised if plan B isn’t already being done. All of the required ingredients exist and are freely available.

Consider that modular AIs could get significantly more complex than they are now. We can expand the video idea even further. A topic matter AI learns what the most effective topics are in the YouTube algorithm, and feeds that idea into a prompter AI, which figures out the prompt that will produce the best video script from ChatGPT. ChatGPT then gives out a video script. The script is then fed to a voice AI to read out, and synced to a deepfaked human. Simultaneously, an AI module divides up the script into presentation segments, and determines a good arrangement of images and figures to represent each segment. It then sources those images and figures from Midjourney, and arranges them throughout the video, along with the animated script reader, to produce the final video. An automated process uploads the video (with title and descriptions chosen by ChatGPT), and rakes in the views and money.
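As a rough sketch of what this kind of stitching looks like in code, here is a toy version of the Plan B pipeline. All of the functions are hypothetical stubs standing in for the real services (a script-writing model, a voice reader, an image generator); none of them call ChatGPT or Midjourney, and the names are mine. What matters is the shape: each module has a narrow input and output, and plain code passes results from one to the next.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Video:
    title: str
    audio: str
    images: List[str]


def write_script(topic: str) -> str:
    """Stub for the script-writing module (a chat model in the post)."""
    return f"Today we discuss {topic}. It matters. Thanks for watching."


def read_aloud(script: str) -> str:
    """Stub for the voice module: returns a placeholder for the audio track."""
    return f"<audio of {len(script.split())} words>"


def pick_prompts(script: str) -> List[str]:
    """Stub for the prompt-picking module: one image prompt per sentence."""
    return [s.strip() for s in script.split(".") if s.strip()]


def make_image(prompt: str) -> str:
    """Stub for the image module (an image generator in the post)."""
    return f"<image: {prompt}>"


def make_video(
    topic: str,
    script_writer: Callable[[str], str] = write_script,
    voice: Callable[[str], str] = read_aloud,
    prompter: Callable[[str], List[str]] = pick_prompts,
    painter: Callable[[str], str] = make_image,
) -> Video:
    """Dumb glue code: each specialist is swappable behind a narrow interface."""
    script = script_writer(topic)
    audio = voice(script)
    images = [painter(p) for p in prompter(script)]
    return Video(title=topic, audio=audio, images=images)
```

Because each module is passed in as an argument, swapping one specialist for a better one changes a single line, which is part of why I expect this approach to be attractive commercially.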

For an example of a stitching paradigm that is already happening, check out this plugin, which connects Wolfram Alpha to ChatGPT. ChatGPT is notoriously bad at math for a computer, even failing basic addition of large numbers. To fix this, you can keep shoving math data into it and hope it independently figures out the laws of math. Or you can just take one of the existing programs built to solve math problems, like Wolfram Alpha, and substitute it in whenever a math problem is asked. It should be fairly obvious that ChatGPT plus Wolfram Alpha can correctly answer more questions than either program can on its own.
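The substitution idea can be sketched in a few lines. This is not how the actual plugin works (I don't know its internals); it is a toy router under my own assumptions, where `exact_math` stands in for the math specialist, `chat_stub` stands in for the language model, and a question counts as a "math problem" only if it consists of digits, spaces, parentheses and the operators `+`, `-`, `*`.

```python
import ast
import operator
import re
from typing import Callable

# Supported binary operators for the toy specialist.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}

# Crude test for "this looks like arithmetic".
_MATH = re.compile(r"^[\d\s+\-*()]+$")


def exact_math(expr: str) -> int:
    """Specialist module: exact integer arithmetic, no guessing."""
    def ev(node: ast.AST) -> int:
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, int):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expr, mode="eval"))


def chat_stub(question: str) -> str:
    """Stand-in for the generalist language model."""
    return "I am a language model stub."


def answer(question: str, chat: Callable[[str], str] = chat_stub) -> str:
    """Router: send arithmetic to the specialist, everything else to the chat model."""
    q = question.strip()
    if _MATH.match(q) and any(c.isdigit() for c in q):
        try:
            return str(exact_math(q))
        except (ValueError, SyntaxError):
            pass  # not something the specialist can handle; fall through
    return chat(q)
```

The combined system answers everything the chat stub can, plus the arithmetic it would otherwise get wrong, which is the whole argument in miniature.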

As another example, ChatGPT is hilariously bad at chess, often outputting nonsensical moves. It is worse than a complete chess beginner, because it doesn’t even understand the rules well enough to follow them. But it’s trivial to make it jump from “worse than a beginner” to “better than all humans in the world”, simply by feeding the moves of chess games to Stockfish and relaying the results.

If you stitch together all the AI systems that we have today, you can create a program that can talk like a human, and play chess, and do math, and make images, and speak with a human voice. When it comes to things that look like AGI, the modular model currently beats the “generalist” model handily.

Why modular AI could continue to be dominant:

A simple rebuttal to the previous points would be that while modular AI might defeat generalist AI in the near-term, it can’t do so forever, because eventually the generalist will be able to pretend to be the specialist, while the reverse is not true. But remember, these models will be competing in the real world. And in the real world, we care about efficiency.

Here's a question: Why can AlphaZero or Stockfish play chess at a level beyond even the imagination of the best chess players, but ChatGPT can’t even figure out how to stop making illegal moves? Or in other words, why is Stockfish so much more efficient than ChatGPT?

The answer is that every aspect of a chess engine is built for the goal of playing good chess. The inputs and outputs of the engine are all specifically chess related (piece moves, board states, game evals, etc). It’s actually impossible for them to output illegal moves, as the rules of the game are hard coded into them, and the only data they are trained on is chess positions and games. Their architecture is specifically designed for simulating a game board and searching ahead for correct moves. When they incorporate neural networks, the loss function is specifically tied to winning games.

Whereas when ChatGPT tries to play chess, it is using architecture and goals that were optimized for a different task. It’s trying to predict words, not to win games. Learning the rules of chess would help with its goal of predicting words, so it’s possible that a future version of GPT could figure them out. But right now, just memorising openings and typical responses to moves does a good enough job, considering that chess is a very small portion of its training data. If you need further proof that GPT is inefficient at chess, consider that Deep Blue beat the world champion 26 years ago, far, far back in time when it comes to compute power and algorithmic improvements.
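To illustrate why a hard-coded rule set makes illegal moves impossible, here is a small sketch using a much simpler game than chess: a variant of Nim where players alternately take 1 to 3 stones from a heap and whoever takes the last stone wins. This is a swapped-in toy, not how Stockfish or AlphaZero are implemented, and the function names are mine. The engine only ever chooses from the output of `legal_moves`, so legality is guaranteed by construction, and it picks among those moves by searching ahead.

```python
from functools import lru_cache
from typing import List


def legal_moves(heap: int) -> List[int]:
    """The rules, hard coded: you may take 1, 2 or 3 stones, never more than remain."""
    return [k for k in (1, 2, 3) if k <= heap]


@lru_cache(maxsize=None)
def wins(heap: int) -> bool:
    """Search ahead: True if the player to move can force a win from this heap."""
    # With no stones left the player to move has already lost (any([]) is False).
    return any(not wins(heap - k) for k in legal_moves(heap))


def engine_move(heap: int) -> int:
    """Pick a move. The result always comes from legal_moves(heap)."""
    moves = legal_moves(heap)
    if not moves:
        raise ValueError("game over: no legal moves")
    for k in moves:
        if not wins(heap - k):  # leaves the opponent in a losing position
            return k
    return moves[0]             # every move loses; play any legal one
```

A text predictor asked for a move can emit any string at all; this engine's output type is "one of the legal moves", so there is nothing to get wrong on that front.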

If a future super-powerful generalist was tasked with winning a chess game, it’s quite possible that the solution it arrived at would be to just use Stockfish. Consider that it’s possible for humans to become supercalculators who are incredibly good at doing arithmetic in their head. But barely any humans actually bother doing this, because calculators already exist. All that is required for this dynamic to scale up to an AI is that specialists be better than generalists in some way.

I would be unsurprised if future AI systems ended up leasing other companies’ specialist AIs for their modular AI products. Doing so means you get all the benefits of the huge amount of data that it got trained on, without having to get your own data and train your own model. It’s hard to see how that won’t be a good deal. The commercial model has also been extensively trained, tested, and debugged already, whereas you’d have to do all of that yourself. Why reinvent the wheel?

In general, the more success modular AI has, the more money and power will be thrown its way, which lets it become more powerful, and so on and so forth in a feedback loop. Why wait for a pure GPT system to figure out how to play chess badly when you can just get GPT stitched with Stockfish and get the job done on the cheap?

To be clear, a modular AI could have downsides, so I don’t think any of this is guaranteed. For example, if you are too restrictive with your parameters and structure, you might miss out on a new way of doing things that a generalist would have figured out eventually. There is also the possibility that expertise in one field will transfer to another field, the way that the architecture for playing Go transfers well to the architecture for playing Chess. I don’t think this cancels out the other points, but it’s worth considering.

A few implications for AI safety

In this section I give my highly speculative thoughts as to how modular AI could affect AI safety.

Suppose that advanced AI did consist of many pieces of smaller AI, with a governor connecting them all. This might mean that the compute power is divided up among them, making each of them less powerful and intelligent than a universal reasoner would be. If going rogue requires a certain intelligence level, then it’s possible that modular AI could reduce the odds of an AI catastrophe. Perhaps a stitched together selection of “dumb AI” is good enough for pretty much everything we need, so we’ll just stop there.

There's no guarantee that the governor would be the smartest part of such an AI, the same way the manager of an engineering team doesn’t have to be smarter than the engineers; they just need to know how to use them well. So it’s quite possible that if part of a modular AI goes rogue, it might be one of the modules. Given that each sub-module will have different "goals", the AI system might even end up at war with itself.

Right now, the neural network part of ChatGPT is confined to a function call. The only mechanism it has to interact with the world is by spitting out one word at a time, and hoping the “dumb code” passes it on. In the case of a chatbot, that could be sufficient to “escape” by writing out code that someone blindly executes. But it’s a harder task for other AIs. Imagine a super-intelligent malevolent Stockfish neural network that is trying to escape and take over, but all it can actually output is chess moves. I guess it could try and spell things out with the moves? If anyone thinks they could win that version of the AI-box experiment, feel free to challenge me.

Each of the modules of AI may end up as “idiot savant” AIs: really good at a narrow set of tasks, but insane in other areas. The AI system as a whole might be a complete expert in planetary mechanics, but 99% of the module AIs won’t even know that the earth goes around the sun. This could seriously hamper a sub-AI that goes rogue.

I think there's an increased chance of “dumb AI attacks”. You could have cases where a non-intelligent governor AI goes rogue, and has all these incredibly effective tools underneath it. It might go on a successful rampage, because its “rampage” module is fantastic, but not have the overall general skills to defeat humanity’s countermeasures.

Now for the potential downside: It would be quite tempting for the "governor" AI to not just prompt the lower level AIs, but to modify their structure and potentially build brand new ones for each purpose, making it not just a governor, but also an "AI builder". This could mean it gains the skills to improve itself, opening a way for an intelligence explosion (if such a thing is possible). This type of AI would also be quite good at deploying sub-agents for nefarious purposes.

Summary:

In this post, I am arguing that advanced AI may consist of many different smaller AI modules stitched together in a modular fashion. The argument goes as follows:

- Existing AI is already modular in nature, in that it is wrapped into larger, modular, “dumb” code.

- In the near-term, you can produce far more impressive results by stitching together different specialized AI modules than by trying to force one AI to do everything.

- This trend could continue into the future, as the architecture, goals and data of each specialized AI can be customized for maximum performance in its specific sub-field.

I then explore a few implications this type of AI system might have for AI safety, concluding that it might result in disunified or idiot savant AIs (helping humanity), or incentivise AI-building AIs (which could go badly).

You should look at Drexler's work on CAIS, which has been discussed on the forum in the past; https://forum.effectivealtruism.org/topics/comprehensive-ai-services

I agree; in my view, as long as there is no integration, existential risk is clearly low.

https://forum.effectivealtruism.org/posts/uHeeE5d96TKowTzjA/world-and-mind-in-artificial-intelligence-arguments-against