Researcher Notes:

This is a semi-polished ideation & formalization post. I spent about a week on it. It's a bit outside our main work at QURI, but I thought it was promising enough to be worth some investigation. I've done some reading into this area, and have included a few related links, but I'm not at all an expert in internet privacy or LLMs.

If you skim this, I suggest reading the beginning until you understand the main concept, then going to the Potential Uses section.

The main controversial point might be the name, "LLM-Secured Systems". I'm unsure about this, but wanted something to refer to for this piece. Other suggestions are welcome!

This piece works well with my previous one on Superhuman Governance. Arguably, these systems could be a key component of better governance, and governance might be the place where these systems are most useful.

I used Claude and DALL-E 3. I also got advice from a handful of helpful anonymous people.

Summary:

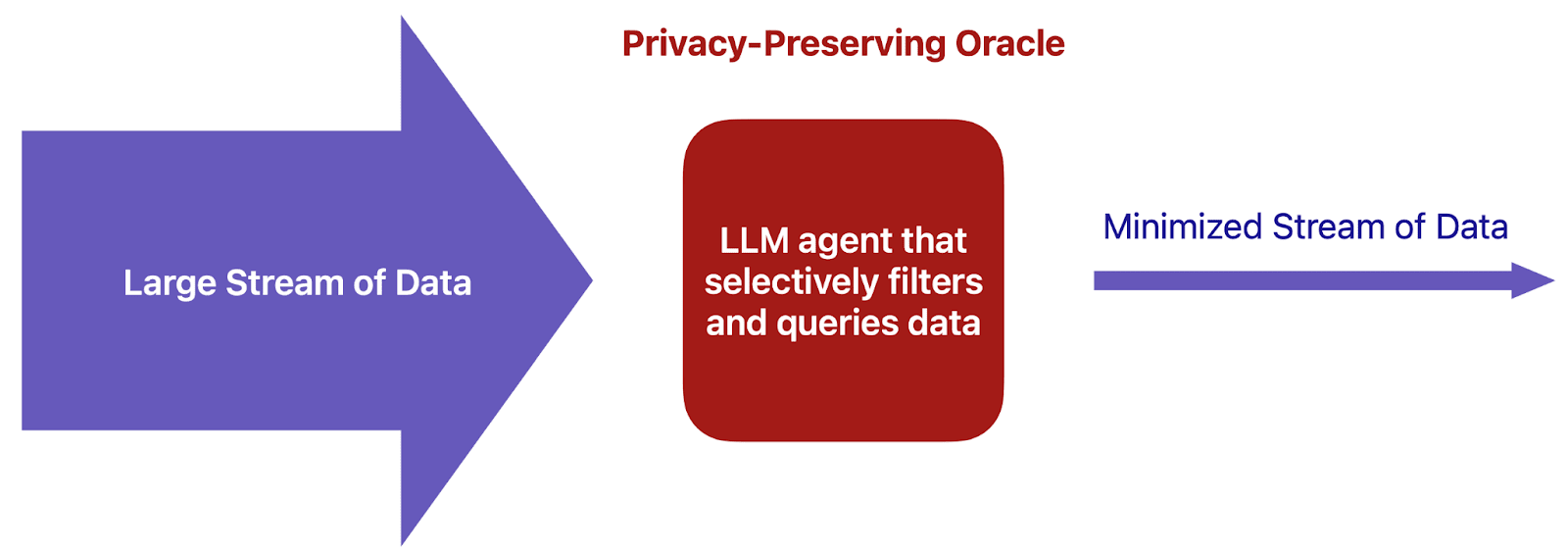

This is a formalization for using Large Language Models (LLMs) as privacy gatekeepers, dubbed LLM-Secured Systems (LSSs). The core idea is to entrust continuously-updating confidential information to an AI-based oracle system that can manage and share private data securely. Compared to techniques like homomorphic encryption, LSSs emphasize flexibility and generality over speed and privacy guarantees.

The main concepts are straightforward, have existed for quite some time, and have been explored in other discussions. This work primarily aims to propose a formal structure and compile a list of applications. I think there are a lot of potentially great applications.

The post explores a hypothetical implementation of a basic LSS using existing tools and outlines potential use cases in government, corporate accountability, personal privacy, interpersonal interactions, and more. It also discusses the implications of an ecosystem with multiple interacting LSSs, drawing parallels to a world where all communication is mediated through lawyers.

While acknowledging the current limitations of LLMs in terms of reliability and the potential for misuse, this post outlines how LSSs could lead to higher levels of trust, institutional alignment, and reduced data abuse in the long term. The impact of LSSs may vary depending on the political context, with democratic countries potentially benefiting from enhanced accountability while authoritarian regimes could exploit them to consolidate power.

Introduction

Surveillance and general data collection offer substantial benefits but come with significant privacy costs. Historically, tools like Differential Privacy, Homomorphic Encryption, and Secure Multi-party Computation have aimed to find ways of enabling a partial sharing of information from secret private data. These algorithms provide strong guarantees where applicable but are often complex to implement and limited in scope.

In the last few years, there’s been some strong work on Structured Transparency at the Centre for Governance of AI and at OpenMined. This has highlighted the existing trade-offs, the possibility of more efficient methods, and concrete recommendations for privacy in specific AI systems. There’s also been interest in On-Device AI, most recently with Apple, Alphabet, and Microsoft all investing in this area.

The recent popularity of Large Language Models opens up some new opportunities. LLMs could be used to directly make and execute decisions about what data should be shared with whom, and to produce specific queries or summaries of the data optimized for certain use cases. This premise can potentially achieve strong trade-offs between information benefits and privacy costs, while being highly general-purpose and flexible. Robustly tested LLMs will be necessary for this to work for demanding use cases, but if there’s a lot of progress in LLMs in the next few years, this might be possible.

The idea of using LLMs directly as privacy gatekeepers has been discussed and researched to some extent, but is still fairly niche. The paper Language Models Can Reduce Asymmetry in Information Markets investigates exactly what the title says, in situations where LLMs have private information. Allison Duettmann and the Foresight Institute have explored similar ideas through "Multipolar Active Shields", envisioning a network of AI agents managing various data types. Very recently, Buck discussed some potential security benefits of this strategy in the post Access to powerful AI might make computer security radically easier. The Age of Em discusses some similar ideas, but around a different implementation (Ems, instead of simple LLM systems).

I think these concepts could use more formalization and brainstorming. This piece aims to outline the general concept of "LLM-Secured Systems" (LSSs) and explore the problems they could address. I’m sure most or all of the specific points in this have been discussed before, but I believe that’s mostly been spread out across many sources, and without this formalization.

LLM-Secured Systems (LSSs)

An LLM-Secured System (LSS) is an AI-based system designed to manage and share private data securely. Essentially, an LSS is an oracle (a program that primarily answers queries) entrusted with continuously-updating confidential information.

In the near term, LSSs would likely feature LLMs as part of a simple AI agent. These systems can be developed inexpensively and easily, especially if low reliability is acceptable, making them an excellent starting point.

Compared to techniques like homomorphic encryption, LSSs emphasize flexibility and generality over speed and strong privacy guarantees. LLM agents within LSSs can generate a wide range of queries on the contained data and even negotiate with data requesters.

Think of an LSS as a digital concierge or virtual lawyer, adeptly handling data requests and sharing only the necessary information. These general-purpose systems can accommodate various uses while maintaining a consistent architecture.

LSSs serve as a useful thought experiment for envisioning how future AI systems might address various social problems. It's uncertain whether LSSs will be implemented in their stated form exactly. Perhaps people will prefer systems far more powerful than LSSs, or maybe there won’t be formal separation between LSSs in the way we outline here. However, by focusing on LSSs, we can gain a clearer understanding of the benefits and limitations of this specific set of functionality.

A Thought Experiment

Imagine Dropbox introduces a premium new service called "Legal Data Fiduciary." Typically, you manually configure folder sharing permissions, but with this service, you are assigned a private human lawyer to handle data requests. You explain your preferences, and the lawyer ensures your interests are executed.

For instance, if a cousin requests photos, the lawyer reviews your photo library and shares only the ones you're comfortable with. If a potential employer seeks job history, the lawyer provides a summary that excludes sensitive details. In a legal dispute, the lawyer finds the minimum evidence needed to verify your presence in Hawaii on a specific date and provides this.

If scientists request data on a disease you have, offering a monetary reward, the lawyer verifies their claims, ensures data disposal post-use, and asks for your approval before sharing the exact data needed.

All data requests and sharing actions are transparently communicated to you via email, detailing what was shared, with whom, and why. In uncertain situations, the lawyer consults you before proceeding. This lawyer has a fiduciary duty to maintain honesty and protect your privacy.

While the use of a human lawyer would be costly, this setup could be very powerful. It could provide a great deal of flexibility and value while also being trustworthy and transparent.

Now, imagine replacing the human lawyer with an advanced LLM system. This approach would be dramatically more affordable and faster, and would offer some additional advantages:

- Much better monitoring and guarantees. You could never monitor a human lawyer 24/7 to make sure they didn’t leak information, but you could do this to a machine.

- Standardization. No two lawyers are the same, but AI agents can be. There would likely be many existing performance and reliability evaluations of the different AI systems you could choose from.

- Memory erasure. You can easily remove any or all information from the AI, whenever that might be valuable.

Viability

While modern LLMs show great promise, their current failure rates and lack of transparency make them unsuitable for handling sensitive, confidential data in most applications. Significant improvements in reliability are needed before LSSs can be widely deployed for such use cases.

However, there are several ways LSSs could still provide value in the near term, or be more promising than otherwise expected.

- Low-Stakes Applications: LSSs could be leveraged for small-scale applications where the consequences of failure are minimal. Creative developers could likely find many of these.

- Human Augmentation: Rather than replacing existing systems, LSSs can assist humans in tasks like drafting emails using private notes or filtering information for an organization's auditor with authorized data access.

- Logging and Monitoring: Comprehensive logs of LSS activity can help detect misuse or attacks, many of which are likely to be easily identifiable.

- Multi-layered Security: LLMs can serve as one component in a multi-layered security approach. For example, strict rules could limit an LLM's output to a maximum of 20 lines per person per day or 100 lines total per day. Or an LLM could be added to an existing permissions system, where it can only restrict access further.

- Targeted Use: LSSs' versatility and ease of integration can be leveraged to handle specific aspects of a system (e.g., 20% of permissions layers) that are difficult to hard-code, while other techniques secure the remaining 80%.

- Future-proofing: As AI capabilities evolve, future LLMs capable of writing code could help ensure the use of advanced security measures like homomorphic encryption and formal verification in workflows. The LLM part of LSSs would become increasingly narrow.

- Hedging Bets: In scenarios where AI risk escalates quickly, LLMs are also likely to improve rapidly, potentially helping with some of the most concerning risks.
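The Multi-layered Security item above can be illustrated with a hard-coded output cap that sits outside the LLM. This is only a minimal sketch, assuming the 20-lines-per-person and 100-lines-total daily limits from that example. The `OutputQuota` class and its method names are hypothetical and not taken from any existing library.

```python
from collections import defaultdict
from datetime import date


class OutputQuota:
    """Hypothetical hard cap on lines an LSS may release per day.

    It is enforced by ordinary code, so a confused or manipulated LLM
    cannot exceed it.
    """

    def __init__(self, per_person: int = 20, total: int = 100):
        self.per_person = per_person
        self.total = total
        self._day = None
        self._used = defaultdict(int)  # requester -> lines released today

    def _roll_over(self, today: date) -> None:
        # Reset all counters when the calendar day changes.
        if today != self._day:
            self._day = today
            self._used.clear()

    def release(self, requester: str, text: str, today: date) -> str:
        """Return as many leading lines of `text` as the quota still allows."""
        self._roll_over(today)
        lines = text.splitlines()
        remaining_person = self.per_person - self._used[requester]
        remaining_total = self.total - sum(self._used.values())
        allowed = max(0, min(len(lines), remaining_person, remaining_total))
        self._used[requester] += allowed
        return "\n".join(lines[:allowed])


quota = OutputQuota()
day = date(2024, 1, 1)
draft = "\n".join(f"line {i}" for i in range(30))  # stand-in for LLM output
first = quota.release("alice", draft, day)   # truncated to the per-person cap
second = quota.release("alice", draft, day)  # nothing left for alice today
```

Because the cap is plain code rather than a prompt instruction, it bounds the damage of a successful attack on the LLM itself.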

An Example Implementation of an LLM-Secured System

LSSs can be simple and realizable. Here’s an example of how you could hypothetically set up a basic LSS this week.

Step 1: Set Up the Server

First, you’d create a new piece of web server software, which we’ll call “MyOracle.” You’d host this on a dedicated server, preferably with a robust setup that includes ample storage and a powerful GPU. The server setup should be secured with appropriate measures such as firewalls, encryption, and regular security audits to protect the data. You’d set up Llama 3 on this server.

Step 2: Populate with Data

Populate MyOracle with select personal data, such as photos, backups, Facebook posts, emails, and other documents. Exclude the most sensitive information like passwords and financial data at this stage. Organize this data into a Retrieval-Augmented Generation (RAG) system to ensure fast and efficient data retrieval by the LLM. This step involves structuring the data in a way that the LLM can quickly access and process relevant information based on user queries.
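To make the retrieval step concrete, here is a toy sketch of the "retrieval" half of RAG. It is an assumption-laden stand-in: a real setup would likely use embeddings and a vector index, whereas this version ranks documents by simple word overlap. The function names and the sample documents are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of up to k documents sharing the most words with the query."""
    q = tokenize(query)
    scores = {
        doc_id: sum((q & tokenize(text)).values())  # size of the word overlap
        for doc_id, text in documents.items()
    }
    ranked = sorted(scores, key=lambda d: scores[d], reverse=True)
    return [d for d in ranked if scores[d] > 0][:k]


# Hypothetical personal data loaded into MyOracle.
documents = {
    "photo_hawaii": "Beach photo from Hawaii trip 2023",
    "email_job": "Offer letter for software engineer job",
    "post_food": "Loved the tacos at the Mexican place",
}
relevant = retrieve("photos from the Hawaii trip", documents, k=1)
```

Only the retrieved documents would then be placed in the LLM's context, which keeps the rest of the repository out of any single response.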

Step 3: Develop the Frontend

The frontend can be simple, but it should be user-friendly and secure. Implement a simple login system requiring users to authenticate via Facebook or Google. This ensures that only specific contacts or Facebook connections can use the system. Once logged in, users would be presented with a chat interface where they can make data requests.

Step 4: Fine-Tune the LLM (Optional)

Craft a detailed prompt and provide numerous examples of how you’d want the LLM to respond to different requests from various people in your life. This dataset, consisting of your personal preferences and scenarios, would be used to fine-tune an LLM, such as Llama 3, which will run on the MyOracle server. This fine-tuning process involves training the LLM on specific examples and scenarios to tailor its responses to match your expectations. You can have the LLM initially suggest a list of samples that you can then run and modify.

Step 5: Implement the Request-Response System

When someone interacts with MyOracle’s interface and requests information, a message would be sent to the LLM. The LLM would assess whether you’d be comfortable sharing the requested data based on the fine-tuned examples. If the request is deemed appropriate, the LLM would either share the data directly or process it before sharing to ensure privacy and relevance. If the LLM is uncertain, it would send you an email for confirmation, ensuring that no sensitive information is shared without your explicit approval.
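The share / withhold / ask-the-owner flow described above might look roughly like the sketch below. The LLM call is replaced by a pluggable `assess` function, and the `Assessment` fields, the 0.8 confidence threshold, and all names here are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Decision(Enum):
    SHARE = "share"
    DENY = "deny"
    ASK_OWNER = "ask_owner"


@dataclass
class Assessment:
    comfortable: bool  # would the owner be comfortable sharing this?
    confidence: float  # 0.0 to 1.0, as estimated by the model


def handle_request(
    requester: str,
    request: str,
    assess: Callable[[str, str], Assessment],
    notify_owner: Callable[[str], None],
    threshold: float = 0.8,
) -> Decision:
    """Route a request: escalate to the owner whenever the model is unsure."""
    result = assess(requester, request)
    if result.confidence < threshold:
        notify_owner(f"{requester} asked: {request!r}. Approve?")
        return Decision.ASK_OWNER
    return Decision.SHARE if result.comfortable else Decision.DENY


def fake_assess(requester: str, request: str) -> Assessment:
    """Stand-in for the fine-tuned LLM; a real system would call the model."""
    text = request.lower()
    if "password" in text:
        return Assessment(comfortable=False, confidence=0.99)
    if requester == "cousin" and "photos" in text:
        return Assessment(comfortable=True, confidence=0.9)
    return Assessment(comfortable=True, confidence=0.4)


outbox: list[str] = []  # stand-in for sending the owner an email
decision = handle_request("cousin", "Can I see the beach photos?", fake_assess, outbox.append)
```

The design choice worth noting is that uncertainty always resolves toward the owner, never toward sharing.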

Step 6: Monitoring and Logging

Make sure that all communication of the LLM is saved locally. This system should record all interactions, data requests, and responses, providing an audit trail that you can review to ensure the system is operating as expected.
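One simple way to keep such an audit trail is an append-only JSON Lines file, sketched below. The field names and the `oracle_log.jsonl` file name are assumptions, and a production system would also want tamper-resistance, which this sketch does not provide.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def log_interaction(log_path: Path, requester: str, request: str, response: str) -> dict:
    """Append one interaction to the log as a single JSON line."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "requester": requester,
        "request": request,
        "response": response,
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def read_log(log_path: Path) -> list[dict]:
    """Load every logged interaction, oldest first."""
    with log_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Usage with a throwaway directory; a real deployment would use a fixed local path.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "oracle_log.jsonl"
    log_interaction(path, "cousin", "beach photos?", "shared 3 photos")
    log_interaction(path, "employer", "job history?", "shared summary")
    history = read_log(path)
```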

Step 7: Summarization and Control

Once a logging system has been set up, adjust the LLM to be able to search through it. The LLM should check it for history with specific people, before providing them information. Then, change its prompt to provide restrictions based on these logs. For example, perhaps it shouldn’t engage in over 3 short conversations per day with most people, to help ensure it isn’t easily exploited.

Ideally this could generate periodic reports that highlight the frequency and nature of data requests, helping you identify any unusual patterns or potential security concerns.
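As a rough illustration of log-based restrictions and reports, the sketch below enforces the "no more than 3 conversations per day" example with ordinary code and tallies requests per person. The log entry format (dictionaries with `time` and `requester` keys) is an assumption, as are the function names.

```python
from collections import Counter
from datetime import date, datetime


def conversations_today(entries: list[dict], requester: str, today: date) -> int:
    """Count logged conversations with `requester` on the given day."""
    return sum(
        1
        for e in entries
        if e["requester"] == requester
        and datetime.fromisoformat(e["time"]).date() == today
    )


def may_converse(entries: list[dict], requester: str, today: date, limit: int = 3) -> bool:
    """Allow a new conversation only while the daily count is below the limit."""
    return conversations_today(entries, requester, today) < limit


def request_counts(entries: list[dict]) -> Counter:
    """Periodic-report helper: how many requests each person has made."""
    return Counter(e["requester"] for e in entries)


# Hypothetical log contents.
entries = [
    {"time": "2024-05-01T09:00:00", "requester": "cousin"},
    {"time": "2024-05-01T12:30:00", "requester": "cousin"},
    {"time": "2024-05-01T18:45:00", "requester": "cousin"},
    {"time": "2024-05-01T19:00:00", "requester": "employer"},
]
cousin_allowed = may_converse(entries, "cousin", date(2024, 5, 1))
report = request_counts(entries)
```

A sudden spike in one requester's count is the kind of unusual pattern such a report could surface.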

Many-Oracle Ecosystems

A person interacting with another person’s LSS might be required to do a lot of work. The LSS might ask them a lot of questions to figure out if they really need the information. A simple solution could be for the client of the information to provide their own LLM-Secured System, about themselves. This oracle could be trusted to provide accurate information, and not too much information, about their intentions for the data. This is equivalent to a situation where two parties communicate through their own lawyers.

In a larger system, there could be situations where many oracles communicate with each other on behalf of different parties. Perhaps some specific oracles aren’t completely trusted by others, so these others communicate with third party oracles who can help verify trustworthiness or specific details. This is equivalent to a world where all people or organizations have their own teams of lawyers, and only communicate with each other through these lawyers. Lawyers have a common practice of gathering information from many parties (in each case, through their lawyers), for specific situations.

A different way that oracles could be used is to ensure that entities do not collude with each other. For example, in finance, company employees are not allowed to share private information with financial traders. Or, within corporations, high-level individuals in different organizations are not allowed to collude with each other. If this information were contained in an LLM-secured system, the system could be programmed specifically to limit communication between certain entities. Specific agencies could be allowed to communicate only through LLM-secured systems that would be monitored by third parties (likely, with their own oracles).

Many-oracle ecosystems can potentially be an alternative high-level architecture for the web. Much of the internet can be considered as a combination of “private information” and frontends or aggregations. LLMs are already starting to be used as a better frontend for much of the internet. For example, instead of searching for information on Stack Overflow, many people are simply asking LLMs for coding help directly. LSSs could hypothetically provide a superior backend for private data. Instead of a person providing their book reviews to GoodReads, their social media thoughts to Facebook, their job information to LinkedIn, and so on, they could just provide all of their information to one oracle directly. Other users would interact with their LLMs, which could query these LSSs when needed. Perhaps someone asks, “How do people enjoy working at Microsoft?”, then a bunch of LSSs negotiate to get specific information and return that back to the requester’s LLM.

Web3 Vs. Web-LLM

In recent years, there has been significant hype surrounding cryptography and smart contracts, leading to numerous proposals for using blockchain technology to organize information and control permissions. This collective concept has sometimes been referred to as "Web3," emphasizing its potential impact on the internet.

Many of the proposals discussed here mirror Web3 ideas but without the reliance on blockchain technology. It can be argued that LLM systems could be used instead, manually controlling things. The right deployed LLM-overseen architectures could ensure that individual humans wouldn’t have control, similar to smart contracts.

Internals

Key Functions

There's a lot going on with LSSs, so it can be useful to break them down into a few key functions. Even one or two of these would be useful. Each could represent a different set of software challenges.

1. Data Querying and Analysis

LSS LLMs can analyze data and answer broad questions about it. ChatGPT can currently automatically do some kinds of data science directly on narrow data sets. More powerful versions would need to be better at handling complex sets of different kinds of data.

If we want AI agents inside of LSSs to automatically handle complex sets of data, we’ll first need to be very good at automating data queries for regular humans. Currently, we don’t yet have programs that humans can use to ask arbitrary questions to their computer like, “According to my emails, Facebook notifications, and text messages, how many times have I discussed Mexican food with family members?” Full LSSs would want no human interaction in most cases, so we’d need fully general queries both to be automated and to work very reliably.

2. Claim Verification

LSSs should be trusted to give only accurate information. This means:

- The underlying data would need to be trusted.

- The LLMs would need to be highly reliable and well tested. Ideally, there would be standard LLMs for LSSs with lots of available evaluation data.

- The LLMs would need to be trusted to be instructed to tell the truth.

Ensuring that items 1-3 are all well handled is very difficult. However, there is a path to get there, and even mediocre versions can still provide value.

In some special situations, we might want the additional knowledge that the information available is complete. For example, we’d want to have not only perfect records of a person’s provided email accounts, but also assurance that this person doesn’t keep secret email accounts or communicate in secret alternative ways. Such requirements would require very high levels of monitoring and data collection (potentially monitoring absolutely everything that a certain person or organization does), so would only be possible later on, and likely only be used in exceptional circumstances. Note that this ultimately requires solving the “oversight problem” of AIs overseeing humans, which might itself be an undesirable problem to solve for those worried about AI takeover.

3. Information Sharing Decision-Making

LSSs would need to make decisions about who to share what information with. Custom prompts and instructions would be provided, but there would have to be a lot of work done to make sure that the regular cases are reasonable and robust.

There are multiple options for how these decisions could be made. For example:

1. Follow a simple policy, like, “Every day, filter information using process X and then send a summary of the rest.”

2. Use heuristics and examples to guess the owner’s preferences. This could be done with prompt engineering that suggests following a long and specific reasoning process.

3. Do formal cost-benefit analysis, using some explicit utility function or guesses at a utility function.
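The third option, formal cost-benefit analysis, can be illustrated with a very small expected-value calculation. This is a sketch under strong assumptions: that benefit and harm can be put on one numeric scale and that a misuse probability can be estimated. The function names and the numbers are hypothetical.

```python
def expected_net_benefit(benefit: float, harm_if_misused: float, p_misuse: float) -> float:
    """Benefit of sharing minus the probability-weighted harm of misuse."""
    return benefit - p_misuse * harm_if_misused


def should_share(benefit: float, harm_if_misused: float, p_misuse: float) -> bool:
    """Share only when the expected net benefit is positive."""
    return expected_net_benefit(benefit, harm_if_misused, p_misuse) > 0


# Hypothetical example: a research group offers a reward worth 50 units for
# health data whose misuse would cost the owner 1000 units.
trusted_requester = should_share(benefit=50, harm_if_misused=1000, p_misuse=0.01)
unknown_requester = should_share(benefit=50, harm_if_misused=1000, p_misuse=0.10)
```

The same request flips from acceptable to unacceptable as the estimated misuse probability rises, which is why verifying the requester's claims matters so much.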

4. Individualized Data Sharing

LSSs take in a set of data, and selectively share subsets of it with specific individuals. This could be done without the other aspects. This is similar to a system that automatically shares cloud documents with specific people, based on knowledge about the author and these connections.

Ideally user identity verification can be done via email or with other regular identity verification services.

5. Information Negotiation

Advanced LSSs would require custom clarifications about data requests. They might require specific verifications to get some kinds of data, or require lengthy negotiations for special requests. This can require them to get a great deal of information from other systems, or perhaps bring in extra third parties to help out.

Useful Properties

Auditability

All queries and responses of LSSs can be recorded and made visible to certain parties. The level of privacy here can vary depending on the circumstances.

Standardization

For people to trust LSSs and write tools on top of them, it would be useful for LSS infrastructure to be highly standardized. This way the key components could be robustly tested.

Data Erasure

There will be many situations where LSSs will only exist for short periods of time, in order to do specific jobs. After these, they should be deleted, destroying all contained information. This can be useful to ensure that information is not leaked.
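A minimal sketch of a short-lived data store follows, assuming the private data lives in files on disk. The `ephemeral_repository` name is hypothetical. Note that deleting a directory is not a guarantee of secure erasure on all storage hardware, and it does nothing about copies already shared with clients.

```python
import tempfile
from contextlib import contextmanager
from pathlib import Path


@contextmanager
def ephemeral_repository():
    """Yield a scratch directory that is deleted when the job finishes."""
    with tempfile.TemporaryDirectory() as tmp:
        yield Path(tmp)


with ephemeral_repository() as repo:
    secret = repo / "notes.txt"
    secret.write_text("private data for a one-off job")
    existed_during_job = secret.exists()

exists_after_job = secret.exists()  # the file is gone once the block exits
```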

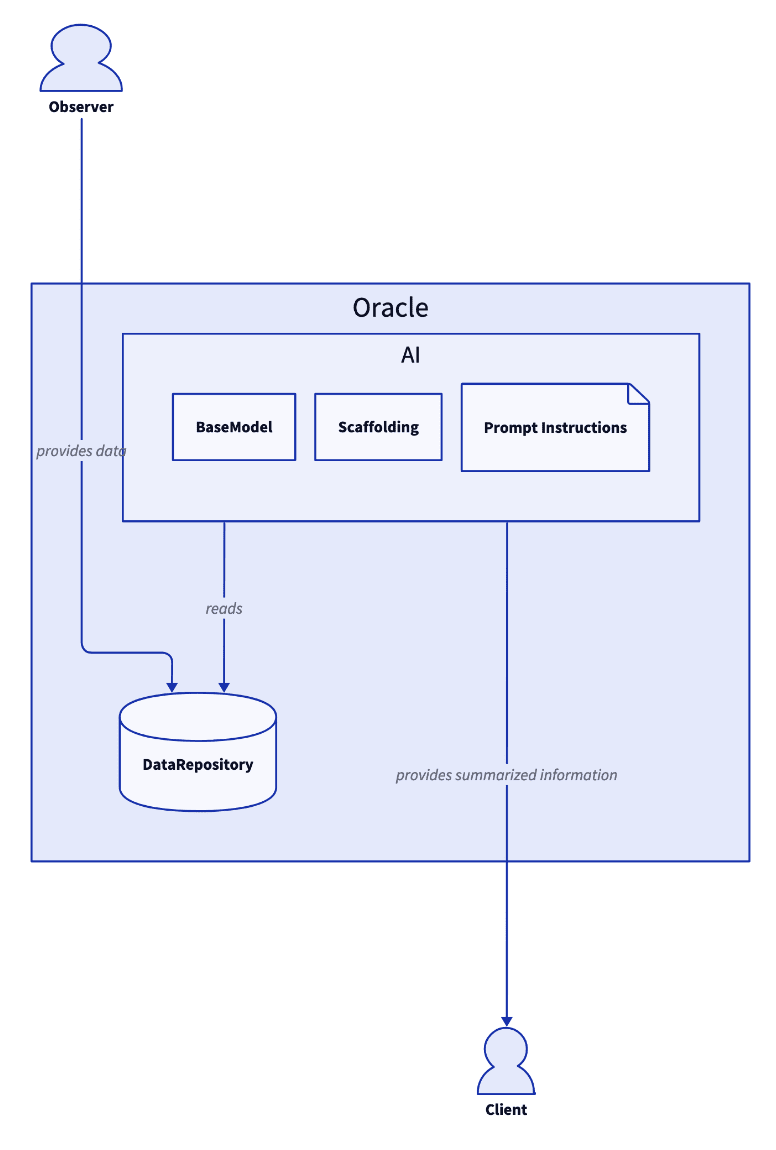

Simplified Architecture

Here's a very simple diagram showing how an LSS could work.

Observer

The person or agent whose information is stored.

Data Repository

Storage of all private information. This should be made only accessible to the internal AI.

Goal

The AI in question should have a specified goal. This could be a high-level utility function that it optimizes for, or some heuristics of how it should perform. This would likely be implemented as an LLM prompt and fine-tuning.

In the case that the LSS is set up by the observer, then the goal would be to represent their interests. If the LSS is set up by a third party to monitor the observer, then the goal would aim to strike a balance between respecting the privacy of the observer and revealing key information.

Client

A person or agent who asks for information.
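The four components above can be sketched as a small data structure. This is an illustrative assumption rather than a proposed design: the `decide` callback stands in for the internal AI, and the class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class LSS:
    observer: str                            # whose information is stored
    goal: str                                # in practice, a prompt and/or fine-tuning
    decide: Callable[[str, str, str], bool]  # (goal, client, key) -> share?
    _repository: dict[str, str] = field(default_factory=dict, repr=False)

    def store(self, key: str, value: str) -> None:
        """Add a piece of private data to the repository."""
        self._repository[key] = value

    def ask(self, client: str, key: str) -> Optional[str]:
        """Clients never read the repository; every request goes through `decide`."""
        if key in self._repository and self.decide(self.goal, client, key):
            return self._repository[key]
        return None


def family_photos_only(goal: str, client: str, key: str) -> bool:
    """Stand-in for the internal AI's judgment."""
    return client in {"cousin", "sister"} and key.startswith("photo")


lss = LSS(observer="me", goal="Represent the observer's interests", decide=family_photos_only)
lss.store("photo_beach", "beach.jpg")
lss.store("tax_return", "2023.pdf")
answer = lss.ask("cousin", "photo_beach")
```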

Potential Uses

The following is a collection of good and bad things that LSSs could help with, depending on how they are used.

This section and the following ones are really more about the benefits and implications of great structured transparency than this specific implementation. So there are likely other great tools that can help here as well.

Government and Corporate Accountability

1.1 Government Officials and Key Corporate Officials Monitoring

- Monitoring Activities: Monitor government and corporate officials for illegal activities or other pre-specified reasons. LSSs can reveal information only in cases of potential or clear illegal activity, ensuring accountability while protecting privacy.

- Proof of Compliance: Provide proof of minimal data use and adherence to rules by surveillance authorities. This demonstrates that they are not abusing their power and are following a long list of regulations.

1.2 Police and Local Bureaucrats Monitoring

- Detecting Misconduct: Detect bribery and other illegal or unethical actions among police, bureaucrats, and other government figures. LSSs can monitor their relationships and activities to identify potential misconduct.

- Aggregated Measurements: Aggregate data for long-term quality measurement without exposing individuals. This allows for the assessment of overall police quality and practices without compromising the privacy of individual officers.

1.3 Surveillance Accountability

- Power Abuse Prevention: Demonstrate the amount of abuse of power by surveillance authorities. LSSs can show that minimal data is being used and that a long list of rules is being followed, ensuring accountability and trust.

- National Security Verification: Verify claims of national security without revealing secrets. In cases where the military or intelligence agencies claim secrecy, LSSs can act as independent verifiers, revealing key facts without exposing military information.

1.4 Whistleblower Protection

- Revealing High-Level Facts: Enable whistleblowers to reveal high-level facts, such as war crimes, while minimizing the exposure of confidential information. This ensures trust in the whistleblower's claims without requiring the disclosure of detailed evidence that could compromise security.

- Selective Information Disclosure: Allow whistleblowers to provide LSSs for selective information disclosure to journalists. Instead of engaging in potentially-hazardous continuous communication with journalists, whistleblowers can publish an LSS as a one-time transfer, reducing the risk of detection.

1.5 Conflict Prevention and Prediction

- Conflict Success Assessment: Assess the chances of success in potential conflicts by having entities submit secrets to LSSs. If the chances are significantly different from what one side predicted, they may seek to resolve the conflict without fighting.

- Threat Credibility Verification: Verify the credibility of threats, such as trade restrictions, using LSSs. This can help prevent conflicts by assuring entities that an attack is not being planned, potentially reducing the need for deterrence measures.

Personal Privacy and Security

2.1 Information Evaluation

- Determining Information Value: Consult with a provider's LSS to determine the value of certain information, such as books or articles, to an individual or organization. The LSS can automatically purchase the information if the price is less than the determined value or simply reveal the information to the owner.

- Secure Financial Transactions: Facilitate financial transactions between individuals without exposing personal details. LSSs can share bank data with an AI agent for a single use, enabling secure and private transactions.

2.2 Secure Personal Data Management

- Limited Data Permissions: Grant limited permissions to LSSs to share personal data, such as addresses, with company AI agents for specific tasks only. This ensures that the data is not misused or stored permanently.

- Identity Verification: Verify unique identity, such as social security numbers, without revealing the actual information to government programs or corporations. LSSs can perform the necessary verification while maintaining the confidentiality of sensitive data.

2.3 Alibi Verification and Behavior Monitoring

- Alibi Confirmation: Confirm a suspect's location during a crime using their LSS data. The LSS can provide a statement confirming that the individual was not at the crime scene without revealing their specific whereabouts, protecting their privacy while assisting in investigations.

- Faithfulness Verification: Verify the faithfulness of a romantic partner through their LSS. This allows individuals to confirm their partner's fidelity without compromising their privacy.

- At-Risk Monitoring: Monitor at-risk individuals for drug abuse, criminal activity, or violation of house arrest using LSSs. This helps ensure compliance and safety while maintaining privacy.
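The Alibi Confirmation item illustrates the general pattern of answering a narrow yes/no question without exposing the underlying data. The sketch below assumes the LSS holds timestamped GPS pings as `(time, latitude, longitude)` tuples. The function names, the 1 km radius, and the sample coordinates are all hypothetical.

```python
import math
from datetime import datetime
from typing import Optional

EARTH_RADIUS_KM = 6371.0


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, using the haversine formula."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def away_from_scene(pings, scene, start, end, radius_km: float = 1.0) -> Optional[bool]:
    """Return True/False only; the locations themselves are never disclosed.

    Returns None when there is no location data in the time window.
    """
    in_window = [(lat, lon) for t, lat, lon in pings if start <= t <= end]
    if not in_window:
        return None
    return all(distance_km(lat, lon, *scene) > radius_km for lat, lon in in_window)


scene = (21.3069, -157.8583)  # hypothetical crime scene
pings = [(datetime(2024, 3, 1, 20, 0), 37.7749, -122.4194)]  # private location log
alibi = away_from_scene(pings, scene, datetime(2024, 3, 1, 19, 0), datetime(2024, 3, 1, 21, 0))
```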

2.4 Personal Health Monitoring

- Health Data Management: Manage personal health data from wearables and medical records using LSSs. The LSSs can provide insights and recommendations to healthcare providers and researchers while ensuring the privacy of detailed health information.

- Early Health Issue Detection / Contact Tracing: Enable early detection and intervention for potential health issues by analyzing data from LSSs. This can improve individual health outcomes while maintaining the confidentiality of sensitive medical data.

Interpersonal and Social Interactions

3.1 Peer Group Common Interests

- Identifying Common Interests: Facilitate the discovery of common interests and helpful connections within a peer group using LSSs. Each individual's LSS can interact with others to identify shared interests without revealing all personal data.

- Suggesting Collaborations: Suggest potential collaborations or activities based on the identified common interests. LSSs can provide recommendations for group members to explore their shared interests while maintaining privacy.

3.2 Shared Beliefs Identification

- Common Beliefs Discovery: Discover common beliefs within a large crowd that individuals assume others don't share. LSSs can aggregate this information anonymously and reveal the prevalence of the belief to the group.

- Reducing Stigma: Encourage open discussion and reduce the perception of stigma around certain beliefs by publicizing the results of the LSS analysis. This can foster a more inclusive and understanding social environment.
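A toy sketch of the aggregation step: each LSS privately reports whether its owner holds the belief, and only the overall share is released. The minimum group size below is an added assumption (small groups could otherwise expose individuals), and the function name is hypothetical.

```python
from typing import Optional


def belief_prevalence(private_answers: list[bool], min_group: int = 10) -> Optional[float]:
    """Return the fraction holding the belief, or None if the group is too small."""
    if len(private_answers) < min_group:
        return None
    return sum(private_answers) / len(private_answers)


# Hypothetical answers collected from 20 individual LSSs.
answers = [True] * 8 + [False] * 12
share = belief_prevalence(answers)
too_small = belief_prevalence([True, False, True])
```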

3.3 Romantic Interest Verification

- Mutual Interest Verification: Enable LSSs to discuss romantic interests and inform both parties if there is mutual interest. The reasons for the match can be kept confidential, protecting the privacy of the individuals involved.

- Facilitating Connections: Facilitate serendipitous connections between compatible individuals by analyzing their LSS data. This can be done over long time periods with specific nudges, such as suggesting learning a new skill or improving fashion choices, to increase the likelihood of a successful relationship.
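Mutual interest verification has a simple core rule: reveal a match only when interest runs in both directions, and reveal nothing otherwise. The sketch below assumes a trusted intermediary that can see both parties' stated interests. The names and data are hypothetical.

```python
def mutual_interest(interests: dict[str, set[str]], a: str, b: str) -> bool:
    """True only if a is interested in b and b is interested in a."""
    return b in interests.get(a, set()) and a in interests.get(b, set())


def notifications(interests: dict[str, set[str]], a: str, b: str) -> list[str]:
    """Messages to send; empty unless the interest is mutual."""
    if mutual_interest(interests, a, b):
        return [f"{a}: {b} is interested too", f"{b}: {a} is interested too"]
    return []


# Hypothetical private data held by each person's LSS.
interests = {
    "alex": {"blake", "casey"},
    "blake": {"alex"},
    "casey": {"drew"},
}
match_messages = notifications(interests, "alex", "blake")
no_messages = notifications(interests, "alex", "casey")  # one-sided, so nothing is revealed
```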

3.4 Profile Information Verification

- Accuracy Verification: Verify the accuracy of information posted in public profiles, such as job and dating profiles, using LSSs. This helps ensure that individuals are representing themselves truthfully and reduces the risk of deception.

- Trusted Source of Verification: Provide a trusted source of verification for profile information, enhancing the reliability and safety of online interactions.

3.5 Cautious Interaction

- Sharing Negative Experiences: Allow individuals with negative experiences with a specific person to anonymously share their information with the person's LSS. The LSS can then aggregate this data and warn others or initiate legal proceedings if necessary.

- Informed Decision Making: Empower individuals to make informed decisions about their interactions with others based on the aggregated feedback provided by LSSs. This can help create safer and more trustworthy communities.

Service Enhancements

4.1 Guest Behavior Monitoring

- Monitoring Guest Behavior: Enable Airbnb hosts to monitor guest behavior using LSSs and installed cameras. The cameras would only send signals flagging cases of extreme abuse, protecting guest privacy while ensuring property safety.

- Host Assurance: Provide hosts with peace of mind and the ability to take action against destructive guests without compromising the privacy of responsible guests.

4.2 Secure Manufacturing

- Manufacturing Specifications: Allow companies to send manufacturing specifications to LSSs, which ensure that the specs are only used for specific manufacturing implementations and cannot be accessed or stolen outside of those contexts.

- Intellectual Property Protection: Protect intellectual property and prevent industrial espionage by maintaining the confidentiality of sensitive manufacturing data.

4.3 Politician Polling

- Public Opinion Data: Enable politicians to gather public opinion data through LSSs, avoiding the limitations and potential biases of conventional polling methods.

- Accurate Insights: Provide politicians with more accurate and representative insights into constituent preferences and concerns while maintaining voter privacy.

4.4 Personal Health Monitoring

- Health Data Management: Utilize LSSs to manage personal health data from wearables and medical records. The LSSs can provide insights and recommendations to healthcare providers and researchers while ensuring the privacy of detailed health information.

- Personalized Medicine: Enable personalized medicine and early intervention by allowing LSSs to securely share relevant health data with authorized parties, such as medical diagnostic algorithms, which would delete the data after use.

4.5 Employee Knowledge Transfer

- Knowledge Capture and Transfer: Capture and transfer the specialized knowledge of employees, particularly regarding specific personal relationships and unique insights, using LSSs. This information can be selectively shared with others, facilitating knowledge continuity within organizations.

- Turnover Mitigation: Mitigate the impact of turnover and ensure that critical information is preserved and accessible to future decision-makers. For example, outgoing presidents could use LSSs to share key learnings with their successors.

Security and Trust Enhancements

5.1 Credible Threat Verification

- Threat Verification: Utilize LSSs to verify the likelihood and credibility of threats, such as trade restrictions or military action. This helps parties make informed decisions and reduces the risk of misunderstandings or escalations.

- Communication Mechanism: Provide a trusted mechanism for communicating the seriousness of threats without revealing sensitive information, promoting more effective negotiation and conflict resolution.

5.2 Multilateral Arms Control

- Arms Control Verification: Employ LSSs to verify compliance with arms control treaties using classified data analysis. This allows for effective monitoring and enforcement without compromising national security secrets.

- Trust and Stability: Foster greater trust and stability among nations by providing a secure and impartial means of verifying adherence to arms control agreements.

5.3 Digital Voting Verification

- Voting Integrity: Utilize LSSs to verify the integrity of each step in digital voting processes. This helps prevent tampering and ensures that votes are accurately counted.

- Voter Transparency: Allow individual voters to confirm that their votes were properly recorded and counted using LSSs, enhancing transparency and trust in the electoral process.

5.4 Social Science Research

- Sensitive Data Analysis: Enable social scientists to analyze sensitive personal data using LSSs while preserving the anonymity of research subjects. This expands the scope and depth of social science research while maintaining ethical standards.

- Comprehensive Research: Facilitate the study of sensitive topics and populations without compromising individual privacy, leading to more comprehensive and representative research findings.

Supply Chains

6.1 Humane Animal Product Verification

- Humane Treatment Verification: Utilize LSSs to verify the humane treatment of animals in product supply chains without revealing other sensitive business information.

- Consumer Assurance: Provide consumers with assurance that the animal products they purchase are ethically sourced while protecting the information of responsible producers.

6.2 Supply Chain Management

- Verification: Employ LSSs to monitor and verify the integrity of supply chains. For example, verify that no part of a computer has come from an untrusted vendor.
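
A toy sketch of the untrusted-vendor check, assuming the LSS holds a private bill of materials and answers only a yes/no question. The `Part` type, the vendor names, and the `all_vendors_trusted` function are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class Part:
    """One private entry in the manufacturer's bill of materials."""
    name: str
    vendor: str


def all_vendors_trusted(parts: Iterable[Part], trusted_vendors: Set[str]) -> bool:
    """Answer the buyer's question without revealing which vendors were used."""
    return all(part.vendor in trusted_vendors for part in parts)


# Private data held by the manufacturer's LSS (made-up values).
bill_of_materials = [Part("cpu", "VendorA"), Part("ram", "VendorB")]

# The buyer supplies the list of vendors they trust and gets back one bit.
trusted = {"VendorA", "VendorB"}
answer = all_vendors_trusted(bill_of_materials, trusted)
```

In practice the hard part is not this check but trusting that the bill of materials is accurate, which the sketch does not address.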

Steady State Solutions

Many of the examples above could work if implemented in isolation, but not in an ecosystem of many LSSs. Some of these examples directly counter others. It’s difficult to predict what an LSS-heavy world would look like, especially in adversarial situations.

Whistleblowers could use LSSs to better hide their identities. But companies could use LSSs to ensure that employees don’t blow the whistle. I would assume that, all things considered, LSSs will make whistleblowing more difficult.

A company LSS could help ensure that the company acts morally. But immoral companies could become very good at using sophisticated LSSs to give themselves plausible deniability. Instead of hiding certain decision-making procedures in private conversations, organizations could move key decisions to automated processes that they are confident will do illegal things, like collude, while giving the organization much greater plausible deniability. There’s a lot of money being made now by actors doing legally questionable things, so there’s a strong incentive to come up with creative ways to continue doing so.

The NSA could better reveal trustworthy information about key insights, without exposing other data, using LSSs. But they might eventually use LSSs to outsource decision-making in ways

So, there could be some sort of arms race between “agents trying to hide their criminal activities by obfuscating them” and “agents trying to monitor and oversee agents they don’t trust, using increasingly sophisticated methods and more monitoring.”

Long-Term Implications

I would guess that LSSs and similar tools will generally cause:

1. Higher Levels of Trust

LSSs are likely to lead to higher levels of accountability, and correspondingly, justified trust. While this can occasionally be negative—for instance, if a malicious actor trusts a good actor mistakenly, or if a good actor trusting a malicious actor leads to harm (e.g., ransom situations)—it seems generally positive.

Note that there are situations where trust trades off against privacy. Higher levels of justified trust would make it harder to lie or obfuscate. In a murder case, if everyone except the murderer proves they are not the culprit, it highlights the actual murderer. This shift can be good or bad depending on the specifics of each situation.
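
The elimination effect in the murder example can be shown with a toy sketch. The names and the `remaining_suspects` function are hypothetical; the point is only that each individual proof of innocence reveals nothing about the others, yet together they single out whoever did not provide one.

```python
def remaining_suspects(suspects: set[str], cleared: set[str]) -> set[str]:
    """Everyone who has not (yet) proven their innocence."""
    return suspects - cleared


suspects = {"Alice", "Bob", "Carol", "Dave"}

# Three people use their LSSs to prove they are not the culprit.
cleared = {"Alice", "Bob", "Dave"}

# Only one person remains, even though she never disclosed anything herself.
left = remaining_suspects(suspects, cleared)
```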

2. Higher Levels of Institutional Alignment

Increased trust and the ability to minimize or monitor communication between untrusted parties will lead to greater institutional alignment. Citizens could ensure their governments work in their favor, and companies could ensure employees optimize for shareholder interests. Alignment can be either malicious or positive, depending on who benefits.

3. Cheaper Methods of Communication

LSSs would automate much of the work involved in sharing information between parties, reducing the effort required across all forms of information sharing.

4. Greater Use of Monitoring and Surveillance

LSSs could significantly decrease the costs and increase the benefits of monitoring and surveillance. As a result, we should expect a substantial increase in their use.

5. Reduced Abuse of Data

When data is provided to an authority, LSSs could ensure that the authority uses it only as much as necessary and then deletes it. This would lead to very low levels of data abuse.

6. Increased Trade

Trade becomes more efficient and cost-effective with higher levels of trust and alignment. This extends to more abstract forms of trade, such as countries negotiating agreements to reduce military threats in exchange for better trade deals. Additionally, nations engaged in military arms races might find ways to "collude" to reduce the negative-sum costs associated with their competition.

7. Increased Effectiveness of Political Power

In democratic countries, where power is split between citizens, multiple politicians, and various branches of government, LSSs could serve as powerful tools for enhancing accountability. Corruption typically harms the broader political ecosystem, meaning that most political actors have a vested interest in preventing it. For instance, if a Democratic Senator is corrupt, it negatively impacts not only the senator but also the party and its supporters. Therefore, there is a collective incentive among Democrats to enforce rigorous LSS monitoring on their representatives to ensure transparency and integrity.

However, in authoritarian regimes, leaders could manipulate the use of LSSs to consolidate their power further. By strategically implementing LSSs in ways that enhance their authority and banning them in contexts that might expose their wrongdoings, autocratic leaders can create a more powerful and stable government. This selective application would allow them to maintain control and avoid accountability, using LSSs as a tool to reinforce their dominance.

While LSSs can incentivize honesty by providing a mechanism for demonstrating integrity, this only works if there is a genuine incentive for transparency. In situations where leaders hold overwhelming power and face little to no accountability, they may simply choose not to adopt LSSs for themselves. This refusal would allow them to continue operating without scrutiny, effectively neutralizing the potential benefits of LSSs in promoting honest and transparent governance.

Conclusion

To those who made it this far, I salute you. I got a bit carried away with this, in part because I think this idea is a good combination of important, neglected, and straightforward.

I feel like there should be better groups advancing this area. I wouldn't be surprised if we see dozens of new companies and products emerge here in the next few years.

Personally, thinking through LSSs and their viability has made me a fair bit more hopeful for the future. I don't know if future technologies will take this exact shape, but I would expect some of the key benefits to be realized one way or another.
