Summary

Currently, it is impossible to know where a post fits within the landscape of EA ideas just by glancing at a few values. I am suggesting a set of infographics that summarize 8 vectors which, at a glance, place a post within the complex EA space. These vectors describe a post's:

- Effects timeline (4D)

- Risk and wellness (2D)

- What types of reasoning are stimulated (5D)

- Breadth and depth (2D)

- Entertaining or serious (1D)

- Problem and solution system (6D)

- Longtermism detail (5D)

- Who recommends it (68 values, filterable)

I am looking for feedback on which categories can comprehensively describe important aspects of a post, how these can be visualized, and how the mathematics can be made intuitive as well as unbiased and unbiasing.

I thank Edo Arad for feedback on the framing.

Infographics and calculations

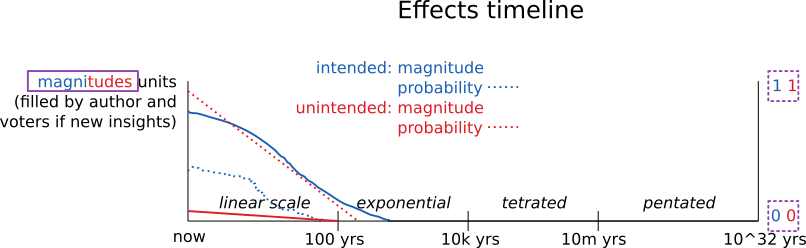

Effects timeline

Description

Line chart

x-axis: Time scale divided into segments, each using one more iteration of exponentiation than the previous one (the first segment is linear)

y-axis:

Intended effects:

- Magnitude (voters suggest units) (left scale)

- Probability (right scale)

Unintended effects:

- Magnitude (voters suggest units) (left scale)

- Probability (right scale)

Calculations

- Users draw curves or use mathematical input

- Curves are averaged (weighted by x)
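A minimal Python sketch of the averaging step, assuming every voter's curve has already been sampled at the same points of the time axis. How drawn curves would be digitized, and what x stands for, are not specified here, so the grid, the `average_curves` name, and the weights below are hypothetical. The same function would be run separately for each of the four series (magnitude and probability of intended and unintended effects).

```python
from typing import List, Sequence


def average_curves(curves: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Weighted pointwise average of voters' curves.

    curves[i][t] is voter i's value (a magnitude or a probability) at time-grid
    point t; every curve must be sampled on the same grid. weights[i] is voter
    i's weight x (hypothetical: x is not defined in this section).
    """
    if not curves or len(curves) != len(weights):
        raise ValueError("need at least one curve and exactly one weight per curve")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    n_points = len(curves[0])
    if any(len(curve) != n_points for curve in curves):
        raise ValueError("all curves must be sampled on the same time grid")
    return [
        sum(w * curve[t] for curve, w in zip(curves, weights)) / total_weight
        for t in range(n_points)
    ]


# Hypothetical example: intended-effect probability at 1, 10, 100 and 1000 years.
voter_a = [0.9, 0.5, 0.2, 0.1]
voter_b = [0.6, 0.4, 0.2, 0.0]
intended_probability = average_curves([voter_a, voter_b], weights=[1.0, 2.0])
```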

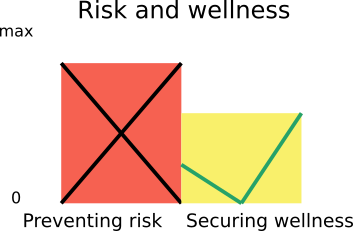

Risk and wellness

Description

Bar graph

Bars (from 0 to max):

- Preventing risk

- Securing wellness

Note: The two bars do not need to add up to 100%; a post can discuss both risk prevention and wellness advancement to a high extent, for example.

Calculations

- Voters have 100 points to allocate across all posts (once they have no points left, they must reduce their allocations to other posts to free some up)

- Points allocated to each bar are squared and then multiplied by x; results are summed for each bar

- The size of each bar is the ratio of this post's points to the maximum points any post currently receives for that bar
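The scoring rule can be sketched as below. This assumes that points placed on either bar count against the same 100-point budget and that x is a per-voter weight; the function names, post IDs, and weights are illustrative only.

```python
from typing import Dict, Iterable, Tuple

BUDGET = 100  # points each voter can spread across all posts

# allocations[(voter, post)] = {bar_name: points}; bar names are hypothetical.
Allocations = Dict[Tuple[str, str], Dict[str, float]]


def check_budgets(allocations: Allocations) -> None:
    """Raise if any voter has allocated more than BUDGET points in total."""
    spent: Dict[str, float] = {}
    for (voter, _post), bars in allocations.items():
        spent[voter] = spent.get(voter, 0.0) + sum(bars.values())
    for voter, total in spent.items():
        if total > BUDGET:
            raise ValueError(f"{voter} spent {total} points; reduce allocations on other posts")


def bar_scores(allocations: Allocations, weights: Dict[str, float]) -> Dict[str, Dict[str, float]]:
    """score[post][bar] = sum over voters of x * points**2."""
    scores: Dict[str, Dict[str, float]] = {}
    for (voter, post), bars in allocations.items():
        post_scores = scores.setdefault(post, {})
        for bar, points in bars.items():
            post_scores[bar] = post_scores.get(bar, 0.0) + weights[voter] * points ** 2
    return scores


def bar_sizes(scores: Dict[str, Dict[str, float]],
              bar_names: Iterable[str] = ("risk", "wellness")) -> Dict[str, Dict[str, float]]:
    """Size of each bar: this post's score divided by the top score any post has for that bar."""
    sizes: Dict[str, Dict[str, float]] = {post: {} for post in scores}
    for bar in bar_names:
        top = max((s.get(bar, 0.0) for s in scores.values()), default=0.0)
        for post, s in scores.items():
            sizes[post][bar] = s.get(bar, 0.0) / top if top > 0 else 0.0
    return sizes


allocations = {
    ("ann", "post-1"): {"risk": 10, "wellness": 5},
    ("bob", "post-1"): {"risk": 4},
    ("ann", "post-2"): {"wellness": 20},
}
weights = {"ann": 1.0, "bob": 2.0}
check_budgets(allocations)
sizes = bar_sizes(bar_scores(allocations, weights))
```

Squaring rewards concentrated allocations: 10 points on one bar contribute 100 (times x), whereas splitting them 5 and 5 across two posts contributes 25 + 25 = 50.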

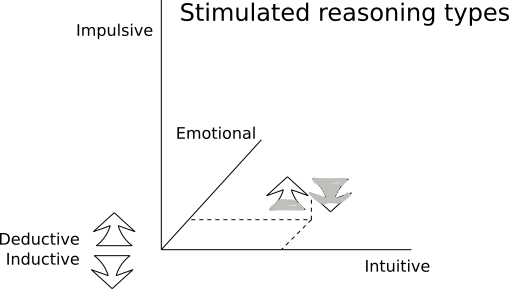

What types of reasoning are stimulated

Description

Two arrows in 3D space

x-axis: Intuitive

y-axis: Emotional

z-axis: Impulsive

Arrow up: Deductive

Arrow down: Inductive

Calculations

- Voters have a maximum of 100x points to allocate among the five categories

- Points in each category are summed

- The sum in each category is divided by the number of voters (weighted by x)

- The ratio is displayed as a percentage from 0 to 100%, either along each axis or as the fill level of an arrow (from empty to full)
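One way to read "divided by the number of voters (weighted by x)" is to divide by the sum of the voters' x values; because no voter may spend more than 100x points, each category then lands between 0 and 100. A sketch under that assumption (the function name and the ballots are hypothetical):

```python
from typing import Dict, List

CATEGORIES = ("intuitive", "emotional", "impulsive", "deductive", "inductive")


def reasoning_percentages(ballots: List[Dict[str, float]], weights: List[float]) -> Dict[str, float]:
    """Percentage (0-100) for each reasoning category.

    ballots[i] maps category -> points given by voter i; weights[i] is voter i's x.
    A voter may spend at most 100 * x points in total, so for every category
    sum(points) / sum(x) stays between 0 and 100.
    """
    if len(ballots) != len(weights):
        raise ValueError("need exactly one weight per ballot")
    for ballot, x in zip(ballots, weights):
        if not set(ballot) <= set(CATEGORIES):
            raise ValueError(f"unknown categories: {set(ballot) - set(CATEGORIES)}")
        if any(p < 0 for p in ballot.values()) or sum(ballot.values()) > 100 * x:
            raise ValueError("negative points or more than 100x points allocated")
    total_x = sum(weights)
    if total_x <= 0:
        return {c: 0.0 for c in CATEGORIES}
    return {c: sum(b.get(c, 0.0) for b in ballots) / total_x for c in CATEGORIES}


percentages = reasoning_percentages(
    [{"intuitive": 50, "deductive": 50}, {"intuitive": 100, "emotional": 100}],
    weights=[1.0, 2.0],
)
```

The category percentages need not sum to 100, since voters may leave part of their budget unspent. The breadth-and-depth calculation follows the same pattern with two categories instead of five.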

Breadth and depth

Description

Horizontal bar graph

Left-right bar: Comprehensiveness

Right-left bar: Detail

Calculations

- Voters have a maximum of 100x points to allocate between the two bars

- Votes for each bar are summed

- The ratio of the sum of votes for each bar to the number of voters (weighted by x) is shown as a percentage from 0 to 100%

Entertaining or serious

Description

Linear scale

Left: Entertaining

Right: Serious

Calculations

- Voters place a slider or enter a number from 0 (left end) to 100 (right end)

- An average position (weighted by x) is shown
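A sketch of the weighted average slider position, with a crude text rendering of the scale. x is again treated as a hypothetical per-voter weight, and `render_scale` only stands in for the real infographic.

```python
from typing import Sequence


def weighted_position(positions: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted mean slider position: 0 = entertaining, 100 = serious."""
    if not positions or len(positions) != len(weights):
        raise ValueError("need at least one position and exactly one weight per position")
    if any(not 0 <= p <= 100 for p in positions):
        raise ValueError("positions must lie between 0 and 100")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(p * w for p, w in zip(positions, weights)) / total


def render_scale(position: float, width: int = 21) -> str:
    """Text sketch of the linear scale with a marker at the average position."""
    index = round(position / 100 * (width - 1))
    return "Entertaining " + "-" * index + "|" + "-" * (width - 1 - index) + " Serious"


average = weighted_position([20, 80], weights=[1.0, 3.0])
scale = render_scale(average)
```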

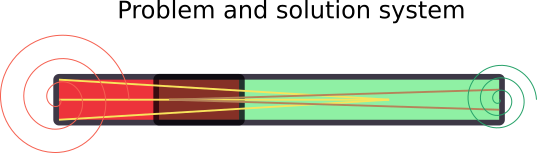

Problem and solution system

Description

Bar graph with spirals

Left-right full bar: Problem

Right-left full bar: Solution

Left-right three connecting lines: Connecting problems

Right-left two connecting lines: Connecting solutions

Left spiral: Ways to find problems

Right spiral: Ways to develop solutions

Calculations

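- Voters have a maximum of 100x points to allocate to each pair (problem and solution, connecting problems and connecting solutions, ways to find problems and ways to develop solutions)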

- Points in each of the six categories are summed

- The ratio of points allocated to each category over the number of voters voting for that pair (weighted by x) is displayed (as a size of a bar or a spiral from 0 to 100%)
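The per-pair denominator is the main difference from the earlier calculations. The sketch below assumes that the six categories pair up row by row (left with right), that a voter who leaves both sides of a pair at zero did not vote on it, and that x is a per-voter weight; all names are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed pairing: each left-hand element is paired with the right-hand element opposite it.
PAIRS: Tuple[Tuple[str, str], ...] = (
    ("problem", "solution"),
    ("connecting_problems", "connecting_solutions"),
    ("finding_problems", "developing_solutions"),
)


def pair_percentages(ballots: List[Dict[str, float]], weights: List[float]) -> Dict[str, float]:
    """0-100 score per category; each pair's denominator counts only voters who voted on that pair."""
    if len(ballots) != len(weights):
        raise ValueError("need exactly one weight per ballot")
    result: Dict[str, float] = {}
    for left, right in PAIRS:
        left_total = right_total = 0.0
        pair_weight = 0.0  # sum of x over voters who voted on this pair
        for ballot, x in zip(ballots, weights):
            left_pts, right_pts = ballot.get(left, 0.0), ballot.get(right, 0.0)
            if left_pts == 0 and right_pts == 0:
                continue  # treated as not voting on this pair (an assumption)
            if left_pts < 0 or right_pts < 0 or left_pts + right_pts > 100 * x:
                raise ValueError(f"invalid allocation for pair {left}/{right}")
            left_total += left_pts
            right_total += right_pts
            pair_weight += x
        result[left] = left_total / pair_weight if pair_weight else 0.0
        result[right] = right_total / pair_weight if pair_weight else 0.0
    return result


pair_scores = pair_percentages(
    [{"problem": 60, "solution": 40}, {"problem": 100, "connecting_problems": 30}],
    weights=[1.0, 1.0],
)
```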

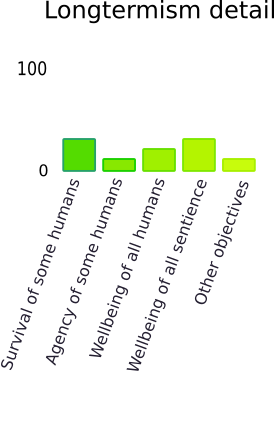

Longtermism detail

Description

Bar graph

Bars:

- Survival of some humans

- Agency of some humans

- Wellbeing of all humans

- Wellbeing of all sentience

- Other objectives

Calculations

- Users have exactly 100 points to allocate between the bars

- Points in each category are summed

- The sum in each category over the number of voters (weighted by x) is displayed (as a number between 0 and 100)
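Here each ballot totals exactly 100 points rather than up to 100x, so one plausible reading is that x enters as a weight on each ballot: a bar's score is sum(x_i * points_i) / sum(x_i). A sketch under that assumption, with hypothetical bar keys:

```python
from typing import Dict, List

LONGTERMISM_BARS = (
    "survival_of_some_humans",
    "agency_of_some_humans",
    "wellbeing_of_all_humans",
    "wellbeing_of_all_sentience",
    "other_objectives",
)


def longtermism_scores(ballots: List[Dict[str, float]], weights: List[float]) -> Dict[str, float]:
    """Weighted mean allocation per bar; every ballot must total exactly 100 points."""
    if not ballots or len(ballots) != len(weights):
        raise ValueError("need at least one ballot and exactly one weight per ballot")
    for ballot in ballots:
        if not set(ballot) <= set(LONGTERMISM_BARS):
            raise ValueError(f"unknown bars: {set(ballot) - set(LONGTERMISM_BARS)}")
        if any(p < 0 for p in ballot.values()) or abs(sum(ballot.values()) - 100) > 1e-9:
            raise ValueError("each ballot must allocate exactly 100 non-negative points")
    total_x = sum(weights)
    if total_x <= 0:
        raise ValueError("weights must sum to a positive number")
    return {
        bar: sum(x * b.get(bar, 0.0) for b, x in zip(ballots, weights)) / total_x
        for bar in LONGTERMISM_BARS
    }


longtermism = longtermism_scores(
    [{"survival_of_some_humans": 100},
     {"survival_of_some_humans": 50, "wellbeing_of_all_sentience": 50}],
    weights=[1.0, 1.0],
)
```

Because every ballot sums to 100, the weighted means also sum to 100, so the five bars can be read as shares.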

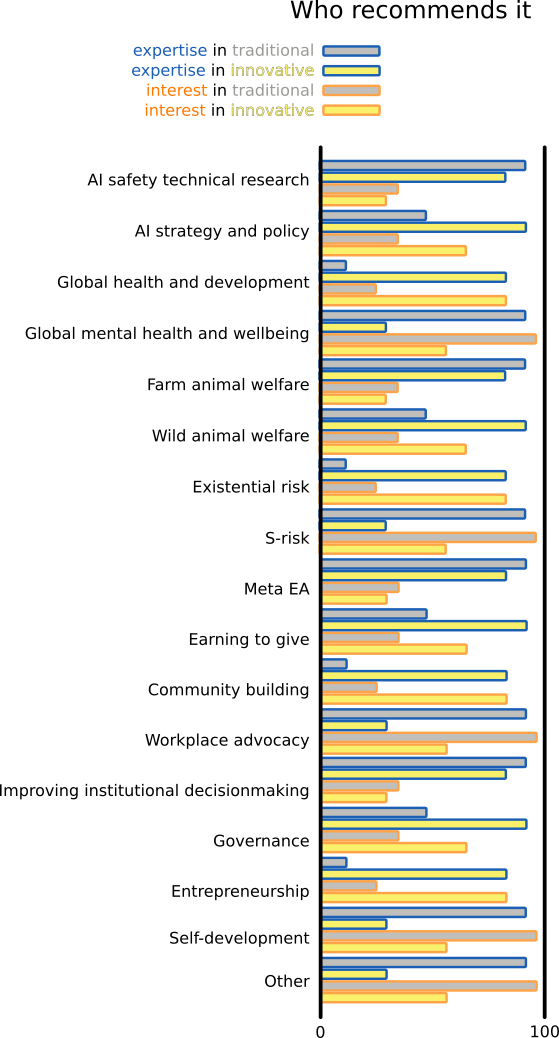

Who recommends it

Description

Bar graph

Bars: Recommendations for readers similar to the recommender, given by people with expertise or interest in a traditional or innovative approach to the following fields:

- AI safety technical research

- AI strategy and policy

- Global health and development

- Global mental health and wellbeing

- Farm animal welfare

- Wild animal welfare

- Existential risk

- S-risk

- Meta EA

- Earning to give

- Community building

- Workplace advocacy

- Improving institutional decision-making

- Governance

- Entrepreneurship

- Self-development

- Other

Calculations

- Voters use a slider or enter a number between 0 and 100 for each bar

- Average score (weighted by x) is displayed as a size of the bar
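The 17 fields listed, each split by expertise vs. interest and traditional vs. innovative approach, give 17 × 2 × 2 = 68 bars, which is one reading consistent with the "68 values" in the summary. Assuming that reading, and treating x as a per-voter weight and any bar a voter leaves blank as not voted on, the averaging and filtering could be sketched as follows (all names are hypothetical):

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

FIELDS = (
    "AI safety technical research",
    "AI strategy and policy",
    "Global health and development",
    "Global mental health and wellbeing",
    "Farm animal welfare",
    "Wild animal welfare",
    "Existential risk",
    "S-risk",
    "Meta EA",
    "Earning to give",
    "Community building",
    "Workplace advocacy",
    "Improving institutional decision-making",
    "Governance",
    "Entrepreneurship",
    "Self-development",
    "Other",
)
ROLES = ("expertise", "interest")
APPROACHES = ("traditional", "innovative")

Bar = Tuple[str, str, str]  # (field, role, approach)
BARS: List[Bar] = list(product(FIELDS, ROLES, APPROACHES))  # 17 * 2 * 2 = 68 bars
BAR_SET = set(BARS)


def bar_averages(ballots: List[Dict[Bar, float]], weights: List[float]) -> Dict[Bar, float]:
    """Weighted mean score (0-100) for every bar that received at least one score."""
    if len(ballots) != len(weights):
        raise ValueError("need exactly one weight per ballot")
    sums: Dict[Bar, float] = {}
    weight_sums: Dict[Bar, float] = {}
    for ballot, x in zip(ballots, weights):
        for bar, score in ballot.items():
            if bar not in BAR_SET:
                raise ValueError(f"unknown bar: {bar}")
            if not 0 <= score <= 100:
                raise ValueError("scores must lie between 0 and 100")
            sums[bar] = sums.get(bar, 0.0) + x * score
            weight_sums[bar] = weight_sums.get(bar, 0.0) + x
    return {bar: sums[bar] / weight_sums[bar] for bar in sums if weight_sums[bar] > 0}


def filter_bars(averages: Dict[Bar, float], field: Optional[str] = None,
                role: Optional[str] = None, approach: Optional[str] = None) -> Dict[Bar, float]:
    """Keep only the bars matching every filter that is not None."""
    return {
        bar: value
        for bar, value in averages.items()
        if (field is None or bar[0] == field)
        and (role is None or bar[1] == role)
        and (approach is None or bar[2] == approach)
    }


farm = ("Farm animal welfare", "expertise", "traditional")
meta = ("Meta EA", "interest", "innovative")
averages = bar_averages([{farm: 80}, {farm: 40, meta: 100}], weights=[1.0, 3.0])
```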

Conclusion

I have described 8 upvote categories that together can let users see at a glance where a post fits in the EA landscape. I am looking for feedback on how to further develop categorized upvoting on the EA Forum.

I can see that you’ve put a lot of effort into this, and I think that if there were some way of reliably automating it I’d say “go for it.” And perhaps there’s just something I’m missing about all this!

But I’ll be entirely honest: this feels entirely overwhelming and overcomplicated relative to the value that it might provide, especially since it tries going for 200% implementation before we’ve even tried the prototypical 20% version: 7 vectors with 25 dimensions plus another vector with “68 values”. That’s an enormous ask.

And it’s for the purpose of enabling “users to know where in the EA landscape” a post fits at a glance? 1) I don’t think it would accomplish that for most people; you’d still have to reason through where it fits in by thinking in your octo-vectorial space. 2) Does that really matter even if you do achieve it? 3) Is it not already possible to roughly understand where it fits—at least to the extent that such understanding would be valuable—by looking at the title, author, and tags? 4) I don’t think that objective rating will be as reliable/consistent as you hope—assuming people even try to provide all the metrics.

In contrast, I was expecting this article to talk about something like “the option to see narrower ratings such as ‘how interesting was this,’ ‘how clear was it’, ‘how valuable was it to the level that I understood it,’ etc.” That seems plausibly implementable and still directly valuable for users.

Sure. What about this 20% version: 1) encouraging users to write collections and summaries of posts they recommend (then, if I meet someone whose work or perspectives I like or would like to respond to, it is easier to learn and contribute if there is a summary); 2) tags under Longtermism: Human survival, Human agency, Human wellbeing, Sentience wellbeing, and Non-wellbeing objectives; and 3) 'red' tags, shown in grey, such as Repugnant Conclusion and Sadistic Conclusion?

Responding to your points:

1) Steep learning curve? Human minds are faster than you think?

2) No: by the time I achieve it, posts will avoid scoring poorly on these metrics, so it does not matter what the pictures show for any single post. It is, kind of, guidance on how to write good posts. Again, the human mind can synthesize these categories and optimize for overall great content, considering complementarity with other posts or the ability to score highly in a more unique way? Otherwise, users may optimize for attention...

3) Not the title: from the title you cannot tell, for example, whether the writing is trying to catch readers while providing valuable solution-oriented (or problem-oriented, or otherwise valuable) content, or whether it has a neutral title but content that motivates impulsive reasoning. The tags: also not really. If something is tagged 'Community infrastructure,' for example, you cannot be sure whether it is a scale-up write-up, an innovation, a problem, a solution, inspiration for synthesis, a directive recommendation, etc. If you are specifically looking for posts with the 'spirit' of 'I employed emotional reasoning to synthesize problems and am offering solutions that I am quite certain about in the long term and that are inclusive in wellbeing,' you cannot use tags. Can you look at the author? Not really either, because there are many people you do not know, and they may be presenting certain public-facing narratives, perhaps because their posts would otherwise be scored low? But sure, to some extent you can just glance at the preview and see what the post is about.

4) Hm, yes, that is a real risk: if something becomes defined as 'wellness' by the community, for instance, but entities are then suffering, it is challenging to change that (although I did pay attention to this in the math: users have to continuously reallocate scarce points). To take another example, if posts with a high 'Agency of some humans' score are later discovered to actually limit human agency, the high score can reduce users' ability to point this out, because 'no, the bar is high, so they safeguard it.' Still, even thinking about scoring these categories can be valuable, and the overall picture can be quite informative?

What do you mean? Something that enables users to become better writers by seeing an (imperfect) score, normalizes judging posts by their conformity to Western writing standards, and motivates rejecting some content because it 'did not go through'? No, I think this is not a good idea: users would optimize for conformity out of fear of being publicly shamed, which would limit creativity and innovation. But questions like 'Is there a concise and comprehensive summary?', 'How did I feel reading it?', 'Did I read it or skim it?', and 'Who should read it (what level of expertise in what field)?' judge the author less by arbitrary standards and instead motivate readers to engage with authors to whom they can provide valuable feedback, while letting others know how it is normal to engage with the post.

I'm not sure I follow how your 20% version relates to the original post/proposal about categorized voting: summaries seem reasonable/good but unrelated, and the two points about tagging just seem to be "it would be nice if we used/had more tags."

There are a lot of other points/responses I could address, but I think that it's probably better to step back and summarize my big-picture concerns rather than continue narrowing in:

I think the answer to (1) is "probably a lot":

I'm not going to go much deeper to cover (2), as I think the issue is fairly understandable, but I will just highlight that the time and quality are clearly proportional, and so skimping on time will make the quality suffer.

Ultimately, I do not see this metric being sufficiently valuable to be worth a daily commitment of >5 hours of EA time; I would much rather people spend that time creating new posts, commenting on existing posts, etc.

Hm, ok, maybe just more tags is the solution.

1. For anyone who opts in to switch to or add voting matrices: about 30 minutes to learn on their favorite post, and after that about the same time as one-score voting, multiplied by the number of categories/subcategories they want to vote on (if you intuitively assign an upvote, you would just intuitively assign maybe 3 upvotes by clicking on images).

2. Yes, depending on the learning curve, and assuming that people who would need too much time to learn would not opt in, this would be sufficiently accurate and quick. It would also provide aggregate data. However, it may be easier if experts who have seen many posts make the estimates. So, assuming that one or a few people keep track of posts and can assess what a person may like, someone like an EA Librarian could recommend the posts an individual would benefit from most. The recommendations could be of higher quality and more efficient. So you may be right: the quality/time ratio may be much worse than the best alternative.

Oh, yes, if there is a moderator who would have to digitize their perspective (and who would probably not capture the complexity of the post in these categories; the human brain is much better at this), a reminder note could work better. But if you currently upvote one post per week by clicking once, and you would instead upvote one post per week by clicking 4x4 times on average, it is still OK. Yes, the reallocation of points: users would be so affected that they would even stop paying attention to FB or other media, given these demands of upvoting... Yes, at least 10 similar perspectives could be taken as saturation, unless new perspectives emerge?

Hm, I guess you are not so convinced about the intuitive understanding of these infographics. In general, when people develop something, it is much easier for them to orient themselves in its summary (including an image), so somehow everyone would need to be involved in developing the scoring metrics.

I would much rather people regularly pause their posting and commenting to reflect on where their actions are leading, why they do what they do, whether they are missing something, whether solutions have already been developed, what some of the problems are, who in the community likes what, etc. This can improve epistemics and the efficiency of cooperation.

I may agree with you that categorized scoring metrics are not the only way to achieve this objective. There may be much better ways, such as expert recommendations of posts and cooperation opportunities.

Thank you very much for the reply.

Would more detailed tags help you with this?

I'm asking this because your proposals seem less like "something that people will disagree about" and more like "something that anyone can tag."

P.S.

If I were working on the Forum myself, I'd probably want to ask you further questions, like "OK, let's say all of this was ready: what posts would you personally search for now?"

Tags can work for Longtermism detail, although the nuance of how strongly each longtermism aspect applies would be lost (plus, there would be five tags just for one image).

Generally, tags are not scales, so, for example, if you want to know to what extent a post is entertaining or serious, you can only have the binary tag Humor (for very humorous posts).

You could have tags for the effects timeline as discrete steps (e.g., within 100, 1000, 10000, ... years), but to combine these with the certainty of effects in each of these time periods you would need some function relating the tags, which could be visually confusing, so just graphing seems better to me.

There are already tags that relate to risk and wellness, such as Global catastrophic risk and Global health and wellbeing, but these (especially the former) can be applied somewhat generously (if the post somewhat relates, why not tag it with this popular tag, for example), and they do not depict the ethical theory that the post implies (with just one picture you would know to what extent it is, e.g., negative prioritarianism or a system where the high wellbeing of a few is prioritized notwithstanding others' suffering). Thus, 'red' tags, such as 'Repugnant Conclusion,' 'Sadistic Conclusion,' etc., could be applied. But again, the nuance would be lost.

Stimulated reasoning is there for readers to think about the way they and others reason. Tags could be used, but having to graph this in 5D can motivate deeper meta-analysis of one's own cognition, which can be generally useful for coming up with unbiased ideas and understanding others' ways of thinking.

Breadth and depth really works better as a scale; otherwise you have: Is it broad? Is it deep? Well, that is a bit of an oversimplification. You could have specific tags for posts that are detailed, posts that are just broad overviews, posts that introduce examples and use deductive reasoning, posts that are broad overviews but motivate inductive reasoning based on logic, posts that are somewhat detailed, and posts that are info hazards because they are too detailed (maybe you could set a threshold that flags posts tagged with risk and scoring above a certain level of detail, ... just one use). Then you would have the issue that some tags categorize the post within one aspect (such as breadth and depth) and other tags denote a different category (such as reasoning type), yet they all look the same, so it takes more time to scan them and form a mental picture. If you want to keep readers rather confused about what this is all about, then this is a somewhat definitive argument against infographics. But I think that easy orientation, enabling users to see what they would most like, is beneficial.

The problem and solution system would also require too many tags, but here the scale is perhaps the least needed. Readers looking for problems could just go find them. Readers looking, for instance, for whether someone has already connected some solutions on a specific topic could just jump to the tag.

Who recommends it is probably the one to keep from all this. For example, suppose there are people who like to advance public-facing narratives in an attention-captivating manner that limits critical thinking and may motivate impulsive action (this is also perhaps why the reasoning type is included: you can see which posts motivate impulsive reasoning), and they recommend a post. A person who is just coming across EA, perhaps while seeking effective climate actions, may enjoy it, but the post will be identified as such for those who are less interested in public-facing narratives and impulsive reasoning and who may prefer, for example, connecting solutions. Upvoting by cause area and by innovative/traditional interest or expertise can be quite informative about who would benefit most from engaging with a post.

I am not sure what posts I would search for (haha, I sound like GPT-3), but perhaps, as reasoned in the paragraph above, since I am a more engaged community member, I would search for posts that

If I were actually reading or scoring posts, I would have a continuously updated mental model full of conditionalities, and I would intend to read certain content but then end up reading based on other factors, such as recommendations, highlights, or coincidence. So I would have to set up a filter to keep some focus.

Thank you for the questions.