This is the third post in a sequence of posts giving an overview of catastrophic AI risks.

3 AI Race

The immense potential of AIs has created competitive pressures among global players contending for power and influence. This “AI race” is driven by nations and corporations who feel they must rapidly build and deploy AIs to secure their positions and survive. Because this dynamic leads participants to neglect global risks, it makes it more likely that AI development will produce dangerous outcomes. As with the nuclear arms race during the Cold War, participating in an AI race may serve individual short-term interests, but it ultimately results in worse collective outcomes for humanity. Importantly, these risks stem not only from the intrinsic nature of AI technology, but also from the competitive pressures that encourage insidious choices in AI development.

In this section, we first explore the military AI arms race and the corporate AI race, where nation-states and corporations are forced to rapidly develop and adopt AI systems to remain competitive. Moving beyond these specific races, we reconceptualize competitive pressures as part of a broader evolutionary process in which AIs could become increasingly pervasive, powerful, and entrenched in society. Finally, we highlight potential strategies and policy suggestions to mitigate the risks created by an AI race and ensure the safe development of AIs.

3.1 Military AI Arms Race

The development of AIs for military applications is swiftly paving the way for a new era in military technology, with potential consequences rivaling those of gunpowder and nuclear arms in what has been described as the “third revolution in warfare.” The weaponization of AI presents numerous challenges, such as the potential for more destructive wars, the possibility of accidental usage or loss of control, and the prospect of malicious actors co-opting these technologies for their own purposes. As AIs gain influence over traditional military weaponry and increasingly take on command and control functions, humanity faces a paradigm shift in warfare. In this context, we will discuss the latent risks and implications of this AI arms race for global security, the potential for intensified conflicts, and the dire outcomes that could come as a result, including the possibility of conflicts escalating to a scale that poses an existential threat.

3.1.1 Lethal Autonomous Weapons (LAWs)

LAWs are weapons that can identify, target, and kill without human intervention [32]. They offer potential improvements in decision-making speed and precision. Warfare, however, is a high-stakes, safety-critical domain for AIs, and one that raises significant moral and practical concerns. Though their existence is not necessarily a catastrophe in itself, LAWs may serve as an on-ramp to catastrophes stemming from malicious use, accidents, loss of control, or an increased likelihood of war.

LAWs may become vastly superior to humans. Driven by rapid developments in AIs, weapons systems that can identify, target, and decide to kill human beings on their own—without an officer directing an attack or a soldier pulling the trigger—are starting to transform the future of conflict. In 2020, an advanced AI agent outperformed experienced F-16 pilots in a series of virtual dogfights, including decisively defeating a human pilot 5-0, showcasing “aggressive and precise maneuvers the human pilot couldn't outmatch" [33]. Just as in the past, superior weapons would allow for more destruction in a shorter period of time, increasing the severity of war.

Militaries are taking steps toward delegating life-or-death decisions to AIs. Fully autonomous drones were likely first used on the battlefield in Libya in March 2020, when retreating forces were "hunted down and remotely engaged" by a drone operating without human oversight [34]. In May 2021, the Israel Defense Forces used the world's first AI-guided weaponized drone swarm during combat operations, which marks a significant milestone in the integration of AI and drone technology in warfare [35]. Although walking, shooting robots have yet to replace soldiers on the battlefield, technologies are converging in ways that may make this possible in the near future.

LAWs increase the likelihood of war. Sending troops into battle is a grave decision that leaders do not make lightly. But autonomous weapons would allow an aggressive nation to launch attacks without endangering the lives of its own soldiers and thus face less domestic scrutiny. While remote-controlled weapons share this advantage, their scalability is limited by their need for human operators and their vulnerability to jamming, limitations that LAWs could overcome [36]. Public support for continuing wars tends to wane as conflicts drag on and casualties increase [37]. LAWs would change this equation. National leaders would no longer face the prospect of body bags returning home, removing a primary barrier to engaging in warfare, which could ultimately increase the likelihood of conflicts.

3.1.2 Cyberwarfare

As well as being used to enable deadlier weapons, AIs could lower the barrier to entry for cyberattacks, making them more numerous and destructive. They could cause serious harm not only in the digital environment but also in physical systems, potentially taking out critical infrastructure that societies depend on. While AIs could also be used to improve cyberdefense, it is unclear whether they will be most effective as an offensive or defensive technology [38]. If they enhance attacks more than they support defense, then cyberattacks could become more common, creating significant geopolitical turbulence and paving another route to large-scale conflict.

AIs have the potential to increase the accessibility, success rate, scale, speed, stealth, and potency of cyberattacks. Cyberattacks are already a reality, but AIs could be used to increase their frequency and destructiveness in multiple ways. Machine learning tools could be used to find more critical vulnerabilities in target systems and improve the success rate of attacks. They could also be used to increase the scale of attacks by running millions of systems in parallel, and increase the speed by finding novel routes to infiltrating a system. Cyberattacks could also become more potent if used to hijack AI weapons.

Cyberattacks can destroy critical infrastructure. By hacking computer systems that control physical processes, cyberattacks could cause extensive infrastructure damage. For example, they could cause system components to overheat or valves to lock, leading to a buildup of pressure culminating in an explosion. Through interference like this, cyberattacks have the potential to destroy critical infrastructure, such as electric grids and water supply systems. This was demonstrated in 2015, when a cyberwarfare unit of the Russian military hacked into the Ukrainian power grid, leaving over 200,000 people without power for several hours. AI-enhanced attacks could be even more devastating and potentially deadly for the billions of people who rely on critical infrastructure for survival.

Difficulties in attributing AI-driven cyberattacks could increase the risk of war. A cyberattack resulting in physical damage to critical infrastructure would require a high degree of skill and effort to execute, perhaps only within the capability of nation-states. Such attacks are rare because they constitute an act of war and could thus elicit a full military response. Yet AIs could enable attackers to hide their identity, for example by helping them evade detection systems or more effectively cover their tracks [39]. If cyberattacks become stealthier, the threat of retaliation from an attacked party would shrink, potentially making attacks more likely. If stealthy attacks do happen, they might incite actors to mistakenly retaliate against unrelated third parties they suspect to be responsible. This could increase the scope of the conflict dramatically.

3.1.3 Automated Warfare

AIs speed up the pace of war, which makes AIs more necessary. AIs can quickly process a large amount of data, analyze complex situations, and provide helpful insights to commanders. With ubiquitous sensors and advanced technology on the battlefield, there is a tremendous volume of incoming information. AIs help make sense of this information, spotting important patterns and relationships that humans might miss. As these trends continue, it will become increasingly difficult for humans to make well-informed decisions as quickly as necessary to keep pace with AIs. This would further pressure militaries to hand over decisive control to AIs. The continuous integration of AIs into all aspects of warfare will cause the pace of combat to become faster and faster. Eventually, we may arrive at a point where humans are no longer capable of assessing the ever-changing battlefield situation and must cede decision-making power to advanced AIs.

Automatic retaliation can escalate accidents into war. There is already willingness to let computer systems retaliate automatically. In 2014, a leak revealed to the public that the NSA had a program called MonsterMind, designed to autonomously detect and block cyberattacks on US infrastructure [40]. What was unique, however, was that instead of simply detecting and eliminating the malware at the point of entry, MonsterMind would automatically initiate a retaliatory cyberattack with no human involvement. If multiple combatants have policies of automatic retaliation, an accident or false alarm could quickly escalate to full-scale war before humans intervene. This would be especially dangerous if the superior information processing capabilities of modern AI systems make it more appealing for actors to automate decisions regarding nuclear launches.

History shows the danger of automated retaliation. On September 26, 1983, Stanislav Petrov, a lieutenant colonel of the Soviet Air Defense Forces, was on duty at the Serpukhov-15 bunker near Moscow, monitoring the Soviet Union's early warning system for incoming ballistic missiles. The system indicated that the US had launched multiple nuclear missiles toward the Soviet Union. The protocol at the time dictated that such an event should be considered a legitimate attack, and the Soviet Union would respond with a nuclear counterstrike. If Petrov had passed on the warning to his superiors, this would have been the likely outcome. Instead, he judged it to be a false alarm and ignored it. It was soon confirmed that the warning had been caused by a rare technical malfunction. If an AI had been in control, the false alarm could have triggered a nuclear war.

AI-controlled weapons systems could lead to a flash war. Autonomous systems are not infallible. We have already witnessed how quickly an error in an automated system can escalate in the economy. Most notably, in the 2010 Flash Crash, a feedback loop between automated trading algorithms amplified ordinary market fluctuations into a financial catastrophe in which a trillion dollars of stock value vanished in minutes [41]. If multiple nations were to use AIs to automate their defense systems, an error could be catastrophic, triggering a spiral of attacks and counter-attacks that would happen too quickly for humans to step in—a flash war. The market quickly recovered from the 2010 Flash Crash, but the harm caused by a flash war could be catastrophic.
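The feedback loop behind a flash war can be illustrated with a toy model. The sketch below is an illustration under stated assumptions, not a model of any real defense system. Each side is a hypothetical `AutoResponder` that answers any detected attack with a counterstrike `gain` times as intense. Exchanges take a fixed, hypothetical `seconds_per_exchange`, and humans need `human_reaction_seconds` before they can intervene. All names, units, and numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutoResponder:
    """Hypothetical automated retaliation policy (illustrative only).

    Any detected attack is answered with a counterstrike `gain` times as
    intense; gain > 1 means each exchange escalates the conflict.
    """
    name: str
    gain: float

    def respond(self, incoming: float) -> float:
        return self.gain * incoming


def simulate_flash_war(false_alarm: float,
                       a: AutoResponder,
                       b: AutoResponder,
                       seconds_per_exchange: float,
                       human_reaction_seconds: float) -> list[float]:
    """Return the intensity of every strike launched before humans can react.

    Side `a` misreads a spurious signal of size `false_alarm` as an attack;
    the two policies then retaliate against each other in alternating turns.
    """
    strikes: list[float] = []
    incoming = false_alarm
    elapsed = 0.0
    turn = 0
    while elapsed + seconds_per_exchange <= human_reaction_seconds:
        responder = a if turn % 2 == 0 else b
        incoming = responder.respond(incoming)
        strikes.append(incoming)
        elapsed += seconds_per_exchange
        turn += 1
    return strikes


if __name__ == "__main__":
    escalating = simulate_flash_war(1.0, AutoResponder("A", 1.5),
                                    AutoResponder("B", 1.5), 0.5, 5.0)
    damped = simulate_flash_war(1.0, AutoResponder("A", 0.5),
                                AutoResponder("B", 0.5), 0.5, 5.0)
    print(len(escalating), round(escalating[-1], 1))
    print(round(damped[-1], 4))
```

With a gain of 1.5 on both sides, a single false alarm of intensity 1 grows to about 57.7 over ten exchanges, all within five simulated seconds. With a gain of 0.5, the same false alarm fades out. The point is qualitative: once both sides automate retaliation, the policies set the rate of escalation, and human judgment no longer does.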

Automated warfare could reduce accountability for military leaders. Military leaders may at times gain an advantage on the battlefield if they are willing to ignore the laws of war. For example, soldiers may be able to mount stronger attacks if they do not take steps to minimize civilian casualties. An important deterrent to this behavior is the risk that military leaders could eventually be held accountable or even prosecuted for war crimes. Automated warfare could reduce this deterrence effect by making it easier for military leaders to escape accountability by blaming violations on failures in their automated systems.

AIs could make war more uncertain, increasing the risk of conflict. Although states that are already wealthier and more powerful often have more resources to invest in new military technologies, they are not necessarily always the most successful at adopting them. Other factors also play an important role, such as how agile and adaptive a military can be in incorporating new technologies [42]. Major new weapons innovations can therefore offer an opportunity for existing superpowers to bolster their dominance, but also for less powerful states to quickly increase their power by getting ahead in an emerging and important sphere. This can create significant uncertainty about whether and how the balance of power is shifting, potentially leading states to incorrectly believe they could gain something from going to war. Even aside from considerations regarding the balance of power, rapidly evolving automated warfare would be unprecedented, making it difficult for actors to evaluate their chances of victory in any particular conflict. This would increase the risk of miscalculation, making war more likely.

3.1.4 Actors May Risk Extinction Over Individual Defeat

“I know not with what weapons World War III will be fought, but World War IV will be fought with sticks and stones." - Einstein

Competitive pressures make actors more willing to accept the risk of extinction. During the Cold War, neither side desired the dangerous situation they found themselves in. There were widespread fears that nuclear weapons could be powerful enough to wipe out a large fraction of humanity, potentially even causing extinction, a catastrophic result for both sides. Yet the intense rivalry and geopolitical tensions between the two superpowers fueled a dangerous cycle of arms buildup. Each side perceived the other's nuclear arsenal as a threat to its very survival, leading to a desire for parity and deterrence. The competitive pressures pushed both countries to continually develop and deploy more advanced and destructive nuclear weapons systems, driven by the fear of being at a strategic disadvantage. During the Cuban Missile Crisis, these pressures brought the world to the brink of nuclear war. Even without knowing about Vasili Arkhipov, a Soviet naval officer who helped prevent the launch of a nuclear torpedo (an episode not declassified until decades later), President John F. Kennedy reportedly estimated the odds of nuclear war beginning during that time as "somewhere between one out of three and even." This chilling admission highlights how the competitive pressures between militaries have the potential to cause global catastrophes.

Individually rational decisions can be collectively catastrophic. Nations locked in competition might make decisions that advance their own interests by putting the rest of the world at stake. Scenarios of this kind are collective action problems, where decisions may be rational on an individual level yet disastrous for the larger group [43]. For example, corporations and individuals may prioritize their own profits and convenience over the negative impacts of the emissions they create, even if those emissions collectively result in climate change. The same principle can be extended to military strategy and defense systems. Military leaders might estimate, for instance, that increasing the autonomy of weapon systems would mean a 10 percent chance of losing control over weaponized superhuman AIs. Alternatively, they might estimate that using AIs to automate bioweapons research could lead to a 10 percent chance of leaking a deadly pathogen. Both of these scenarios could lead to catastrophe or even extinction. The leaders may, however, also calculate that refraining from these developments will mean a 99 percent chance of losing a war against an opponent. Since conflicts are often viewed as existential struggles by those fighting them, rational actors may accept an otherwise unthinkable 10 percent chance of human extinction over a 99 percent chance of losing a war. Regardless of the particular nature of the risks posed by advanced AIs, these dynamics could push us to the brink of global catastrophe.
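Collective action problems of this kind are often formalized as a prisoner's dilemma. The sketch below assumes a hypothetical two-nation game in which each side chooses to "restrain" or "automate" its weapons systems. The payoff numbers are invented for illustration and are not estimates from the text. The code checks every strategy profile for a Nash equilibrium, meaning a profile in which neither side can do better by changing only its own move.

```python
from itertools import product

# Hypothetical payoffs (higher is better) as (nation 0, nation 1).
# These numbers are illustrative assumptions, not estimates of real risk.
PAYOFFS: dict[tuple[str, str], tuple[int, int]] = {
    ("restrain", "restrain"): (3, 3),  # mutual restraint: lowest shared risk
    ("restrain", "automate"): (0, 4),  # the restrained side risks defeat
    ("automate", "restrain"): (4, 0),
    ("automate", "automate"): (1, 1),  # arms race: both exposed to catastrophe
}
STRATEGIES = ("restrain", "automate")


def best_response(opponent: str, player: int) -> str:
    """Return the move that maximizes `player`'s payoff given the opponent's move."""
    def payoff(own: str) -> int:
        profile = (own, opponent) if player == 0 else (opponent, own)
        return PAYOFFS[profile][player]
    return max(STRATEGIES, key=payoff)


def nash_equilibria() -> list[tuple[str, str]]:
    """Profiles in which each nation's move is a best response to the other's."""
    return [
        (a, b)
        for a, b in product(STRATEGIES, repeat=2)
        if best_response(b, 0) == a and best_response(a, 1) == b
    ]


if __name__ == "__main__":
    for profile in nash_equilibria():
        print("Equilibrium:", profile, "payoffs:", PAYOFFS[profile])
    print("Mutual restraint payoffs:", PAYOFFS[("restrain", "restrain")])
```

Under these assumed payoffs, mutual automation is the only equilibrium and yields 1 for each side, although mutual restraint would give each side 3. Automating is each nation's best response whatever the other does. That is the gap between individual and collective rationality that the paragraph describes.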

Technological superiority does not guarantee national security. It is tempting to think that the best way of guarding against enemy attacks is to improve one's own military prowess. However, in the midst of competitive pressures, all parties will tend to advance their weaponry, such that no one gains much of an advantage, but all are left at greater risk. As Richard Danzig, former Secretary of the Navy, has observed, "On a number of occasions and in a number of ways, the American national security establishment will lose control of what it creates... deterrence is a strategy for reducing attacks, not accidents; it discourages malevolence, not inadvertence" [44].

Cooperation is paramount to reducing risk. As discussed above, an AI arms race can lead us down a hazardous path, despite this being in no country's best interest. It is important to remember that we are all on the same side when it comes to existential risks, and working together to prevent them is a collective necessity. A destructive AI arms race benefits nobody, so all actors would be rational to take steps to cooperate with one another to prevent the riskiest applications of militarized AIs.

We have considered how competitive pressures could lead to the increasing automation of conflict, even if decision-makers are aware of the existential threat that this path entails. We have also discussed cooperation as being the key to counteracting and overcoming this collective action problem. We will now illustrate a hypothetical path to disaster that could result from an AI arms race.

Story: Automated Warfare

As AI systems become increasingly sophisticated, militaries start involving them in decision-making processes. Officials give them military intelligence about opponents' arms and strategies, for example, and ask them to calculate the most promising plan of action. It soon becomes apparent that AIs are reliably reaching better decisions than humans, so it seems sensible to give them more influence. At the same time, international tensions are rising, increasing the threat of war.

A new military technology has recently been developed that could make international attacks swifter and stealthier, giving targets less time to respond. Military officials feel their response processes take too long, and they fear they could be vulnerable to a surprise attack capable of inflicting decisive damage before they would have any chance to retaliate. Since AIs can process information and make decisions much more quickly than humans, military leaders reluctantly hand them increasing amounts of retaliatory control, reasoning that failing to do so would leave them open to attack from adversaries.

While for years military leaders had stressed the importance of keeping a "human in the loop" for major decisions, human control is nonetheless gradually phased out in the interests of national security. Military leaders understand that their decisions lead to the possibility of inadvertent escalation caused by system malfunctions, and would prefer a world where all countries automated less; but they do not trust that their adversaries will refrain from automation. Over time, more and more of the chain of command is automated on all sides.

One day, a single system malfunctions, detecting an enemy attack when there is none. The system is empowered to launch an instant "retaliatory" attack, and it does so in the blink of an eye. The attack causes automated retaliation from the other side, and so on. Before long, the situation is spiraling out of control, with waves of automated attack and retaliation. Although humans have made mistakes leading to escalation in the past, this escalation between mostly-automated militaries happens far more quickly than any before. The humans who are responding to the situation find it difficult to diagnose the source of the problem, as the AI systems are not transparent. By the time they even realize how the conflict started, it is already over, with devastating consequences for both sides.

3.2 Corporate AI Race

Competitive pressures exist in the economy, as well as in military settings. Although competition between companies can be beneficial, creating more useful products for consumers, there are also pitfalls. First, the benefits of economic activity may be unevenly distributed, incentivizing those who benefit most from it to disregard the harms to others. Second, under intense market competition, businesses tend to focus much more on short-term gains than on long-term outcomes. With this mindset, companies often pursue something that can make a lot of profit in the short term, even if it poses a societal risk in the long term. We will now discuss how corporate competitive pressures could play out with AIs and the potential negative impacts.

3.2.1 Economic Competition Undercuts Safety

Competitive pressure is fueling a corporate AI race. To obtain a competitive advantage, companies often race to offer the first products to a market rather than the safest. These dynamics are already playing a role in the rapid development of AI technology. At the launch of Microsoft's AI-powered search engine in February 2023, the company's CEO Satya Nadella said, "A race starts today... we're going to move fast." Only weeks later, the company's chatbot was shown to have threatened to harm users [45]. In an internal email, Sam Schillace, a technology executive at Microsoft, highlighted the urgency with which companies view AI development. He wrote that it would be an "absolutely fatal error in this moment to worry about things that can be fixed later" [46].

“Nothing can be done at once hastily and prudently.” - Publius Syrus

Competitive pressures have contributed to major commercial and industrial disasters. In 1970, Ford Motor Company introduced the Ford Pinto, a new car model with a serious safety problem: the gas tank was located near the rear bumper. Safety tests showed that during a car crash, the fuel tank would often explode and set the car ablaze. Ford identified the problem and calculated that it would cost $11 per car to fix. They decided that this was too expensive and put the car on the market, resulting in numerous fatalities and injuries caused by fire when crashes inevitably happened [47]. Ford was sued and a jury found them liable for these deaths and injuries [48]. The verdict, of course, came too late for those who had already lost their lives. Ford's president at the time explained the decision, saying, "Safety doesn't sell" [49].

A more recent example of the dangers of competitive pressure is the case of the Boeing 737 Max aircraft. Boeing, aiming to compete with its rival Airbus, sought to deliver an updated, more fuel-efficient model to the market as quickly as possible. The head-to-head rivalry and time pressure led to the introduction of the Maneuvering Characteristics Augmentation System, which was designed to enhance the aircraft's stability. However, inadequate testing and pilot training ultimately resulted in two fatal crashes only months apart, with 346 people killed [50]. We can imagine a future in which similar pressures lead companies to cut corners and release unsafe AI systems.

A third example is the Bhopal gas tragedy, which is widely considered to be the worst industrial disaster ever to have happened. In December 1984, a vast quantity of toxic gas leaked from a Union Carbide Corporation subsidiary plant manufacturing pesticides in Bhopal, India. Exposure to the gas killed thousands of people and injured up to half a million more. Investigations found that, in the run-up to the disaster, safety standards had fallen significantly, with the company cutting costs by neglecting equipment maintenance and staff training as profitability fell. This is often considered a consequence of competitive pressures [51].

Competition incentivizes businesses to deploy potentially unsafe AI systems. In an environment where businesses are rushing to develop and release products, those that follow rigorous safety procedures will be slower and risk being out-competed. Ethically minded AI developers, who want to proceed more cautiously and slow down, would give more unscrupulous developers an advantage. In trying to survive commercially, even the companies that want to take more care are likely to be swept along by competitive pressures. There may be attempts to implement safety measures, but with more of an emphasis on capabilities than on safety, these may be insufficient. This could lead us to develop highly powerful AIs before we properly understand how to ensure they are safe.

3.2.2 Automated Economy

Corporations will face pressure to replace humans with AIs. As AIs become more capable, they will be able to perform an increasing variety of tasks more quickly, cheaply, and effectively than human workers. Companies will therefore stand to gain a competitive advantage from replacing their employees with AIs. Companies that choose not to adopt AIs would likely be out-competed, just as a clothing company using manual looms would be unable to keep up with those using industrial ones.

AIs could lead to mass unemployment. Economists have long considered the possibility that machines will replace human labor. Nobel Prize winner Wassily Leontief said in 1952 that, as technology advances, "Labor will become less and less important... more and more workers will be replaced by machines" [52]. Previous technologies have augmented the productivity of human labor. AIs, however, could differ profoundly from previous innovations. Human-level AI would, by definition, be able to do everything a human could do. These AIs would also have important advantages over human labor. They could work 24 hours a day, be copied many times and run in parallel, and process information much more quickly than a human would. While we do not know when this will occur, it is unwise to discount the possibility that it could be soon. If human labor is replaced by AIs, mass unemployment could dramatically increase inequality, making individuals dependent on the owners of AI systems.

Advanced AIs capable of automating human labor should be regarded not merely as tools, but as agents. One particularly concerning aspect of AI agents is their potential to automate research and development across various fields, including biotechnology or even AI itself. This phenomenon is already occurring [53], and could lead to AI capabilities growing at increasing rates, to the point where humans are no longer the driving force behind AI development. If this trend continues unchecked, it could escalate risks associated with AIs progressing faster than our capacity to manage and regulate them, especially in areas like biotechnology where the malicious use of advancements could pose significant dangers. It is crucial that we strive to prevent undue acceleration of R&D and maintain a strong human-centric approach to technological development.

Conceding power to AIs could lead to human enfeeblement. Even if we ensure that the many unemployed humans are provided for, we may find ourselves completely reliant on AIs. This would likely emerge not from a violent coup by AIs, but from a gradual slide into dependence. As society's challenges become ever more complex and fast-paced, and as AIs become ever more intelligent and quick-thinking, we may forfeit more and more functions to them out of convenience. In such a state, the only feasible solution to the complexities and challenges compounded by AIs may be to rely even more heavily on AIs. This gradual process could eventually lead to the delegation of nearly all intellectual, and eventually physical, labor to AIs. In such a world, people might have few incentives to gain knowledge and cultivate skills, potentially leading to a state of enfeeblement. Having lost our know-how and our understanding of how civilization works, we would become completely dependent on AIs, a scenario not unlike the one depicted in the film WALL-E. In such a state, humanity is not flourishing and is no longer in effective control—an outcome that many people would consider a permanent catastrophe [54].

As we have seen, there are classic game-theoretic dilemmas where individuals and groups face incentives that are incompatible with what would make everyone better off. We see this with a military AI arms race, where the world is made less safe by creating extremely powerful AI weapons, and we see this in a corporate AI race, where AI power and development are prioritized over safety. To address these dilemmas that give rise to global risks, we will need new coordination mechanisms and institutions. It is our view that failing to coordinate and stop AI races would be the most likely cause of an existential catastrophe.

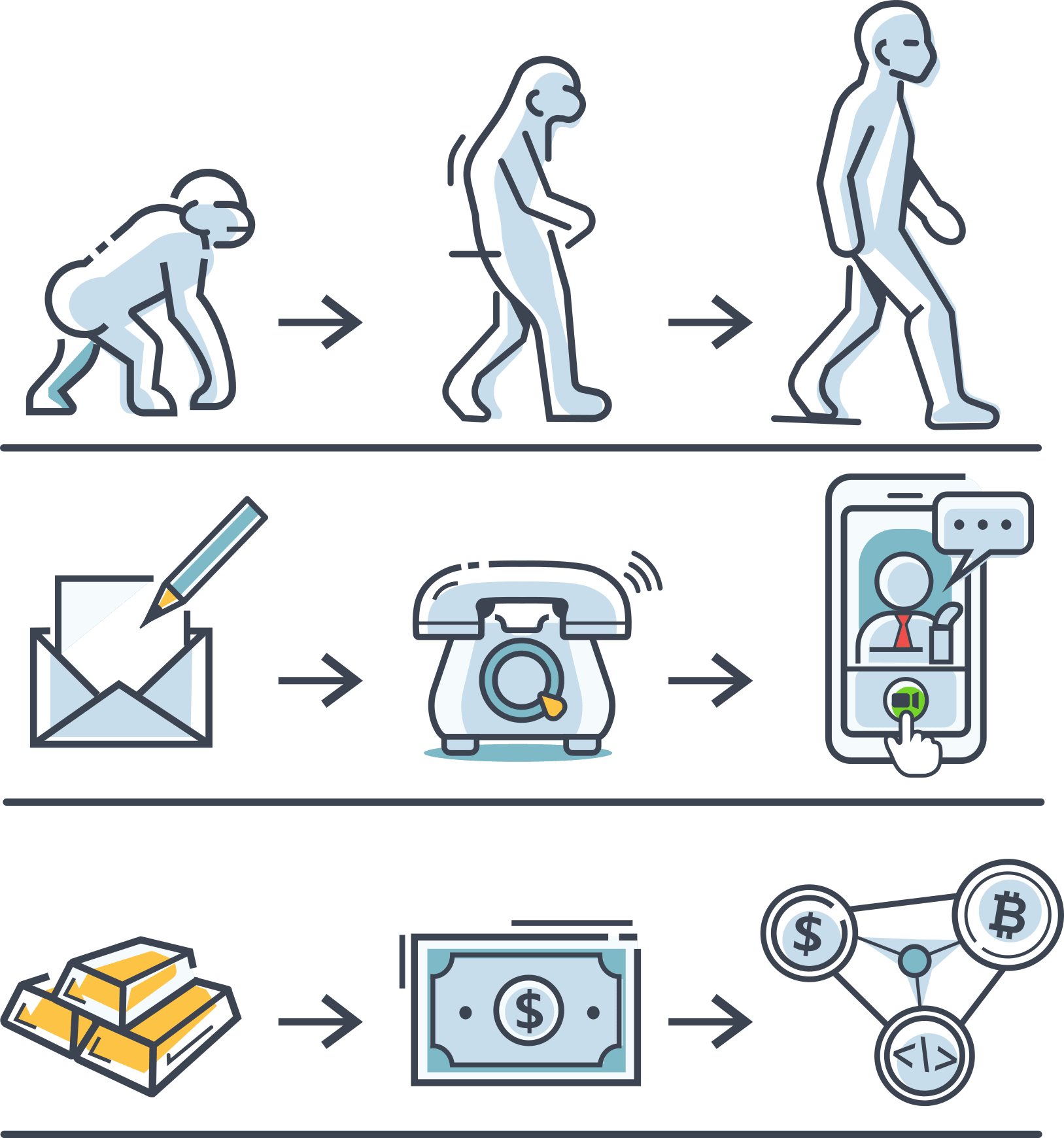

3.3 Evolution

As discussed above, there are strong pressures to replace humans with AIs, cede more control to them, and reduce human oversight in various settings, despite the potential harms. We can reframe this as a general trend resulting from evolutionary dynamics, where an unfortunate truth is that AIs will simply be more fit than humans. If this pattern of automation continues, we are likely to build an ecosystem of competing AIs over which it may be difficult to maintain control in the long run. We will now discuss how natural selection influences the development of AI systems and why evolution favors selfish behaviors. We will also look at how competition might arise and play out between AIs and humans, and how this could create catastrophic risks. This section draws heavily from "Natural Selection Favors AIs over Humans" [55, 56].

Fitter technologies are selected, for good and bad. While most people think of evolution by natural selection as a biological process, its principles shape much more. According to the evolutionary biologist Richard Lewontin [57], evolution by natural selection will take hold in any environment where three conditions are present: 1) there are differences between individuals; 2) characteristics are passed on to future generations; and 3) the different variants propagate at different rates. These conditions apply to various technologies.

Consider the content-recommendation algorithms used by streaming services and social media platforms. When a particularly addictive content format or algorithm hooks users, it results in higher screen time and engagement. This more effective content format or algorithm is consequently "selected" and further fine-tuned, while formats and algorithms that fail to capture attention are discontinued. These competitive pressures foster a "survival of the most addictive" dynamic. Platforms that refuse to use addictive formats and algorithms become less influential or are simply outcompeted by platforms that do, leading competitors to undermine wellbeing and cause massive harm to society [58].
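Lewontin's three conditions can be made concrete with a minimal sketch of discrete-time replicator dynamics, a standard simple model of selection. The variant names, starting shares, and growth rates below are hypothetical illustrations of the recommendation-algorithm example and are not measurements. Each comment marks which condition a part of the model encodes.

```python
def run_selection(shares: dict[str, float],
                  growth: dict[str, float],
                  generations: int) -> dict[str, float]:
    """Discrete-time replicator dynamics over a population of variants.

    Condition 1 (variation): more than one variant appears in `shares`.
    Condition 2 (heredity): each copy inherits its parent's variant exactly.
    Condition 3 (differential propagation): variants differ in `growth`,
    the number of copies each leaves per generation.
    """
    current = dict(shares)
    for _ in range(generations):
        # Each variant's share is scaled by how many copies it leaves...
        weighted = {v: current[v] * growth[v] for v in current}
        # ...then renormalized so the shares still sum to 1.
        total = sum(weighted.values())
        current = {v: w / total for v, w in weighted.items()}
    return current


if __name__ == "__main__":
    start = {"engaging": 0.01, "benign": 0.99}  # hypothetical initial shares
    selected = run_selection(start, {"engaging": 1.2, "benign": 1.0}, 50)
    print({v: round(s, 3) for v, s in selected.items()})
    # Removing condition 3 (equal growth) leaves the shares unchanged.
    neutral = run_selection(start, {"engaging": 1.0, "benign": 1.0}, 50)
    print({v: round(s, 3) for v, s in neutral.items()})
```

In this sketch, a variant that starts at 1 percent of the population with a 20 percent per-generation edge passes 98 percent after 50 generations. If the growth rates are equal, the shares do not move at all. No variant needs to "intend" anything; differential propagation alone drives the shift.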

The conditions for natural selection apply to AIs. There will be many different AI systems with varying features and capabilities, and competition between them will determine which characteristics become more common. The most successful AIs today are already being used as a basis for their developers' next generation of models, as well as being imitated by rival companies. Factors determining which AIs propagate the most may include their ability to act autonomously, automate labor, or reduce the chance of their own deactivation.

Natural selection often favors selfish characteristics. Natural selection influences which AIs propagate most widely. From biological systems, we see that natural selection often gives rise to selfish behaviors that promote one's own genetic information: chimps attack other communities [59], lions engage in infanticide [60], viruses evolve new surface proteins to deceive and bypass defense barriers [61], humans engage in nepotism, some ants enslave others [62], and so on. In the natural world, selfishness often emerges as a dominant strategy; those that prioritize themselves and those similar to them are usually more likely to survive, so these traits become more prevalent. Amoral competition can select for traits that we think are immoral.

Selfish behaviors may not be malicious or even intentional. Species in the natural world do not evolve selfish traits deliberately or consciously. Selfish traits emerge as a product of competitive pressures. Similarly, AIs do not have to be malicious to act selfishly; instead, they would evolve selfish traits as an adaptation to their environment. AIs might engage in selfish behavior, expanding their influence at the expense of humans, simply by automating human jobs. AIs do not intend to displace humans. Rather, the environment in which they are being developed, namely corporate AI labs, is pressuring AI researchers to select for AIs that automate and displace humans. Another example of unintentional selfish behavior is when AIs assume roles humans depend on. AIs may eventually become enmeshed in vital infrastructure such as power grids or the internet. Many people may then be unwilling to accept the cost of keeping them easy to deactivate, since being able to switch off systems that critical infrastructure relies on would itself pose a reliability hazard. Similarly, AI companions may induce people to become emotionally dependent on them. Some of those people may even begin to argue that their AI companions should have rights. If some AIs are given rights, they may operate, adapt, and evolve outside of human control. AIs could become embedded in human society and expand their influence over us in ways that we can't easily reverse.

Selfish behaviors may erode safety measures that some of us implement. AIs that gain influence and provide economic value will predominate, while AIs that adhere to the most constraints will be less competitive. For example, AIs following the constraint "never break the law" have fewer options than AIs following the constraint "don't get caught breaking the law." AIs of the latter type may be willing to break the law if they're unlikely to be caught or if the fines are not severe enough, allowing them to outcompete more restricted AIs. Many businesses follow laws, but in situations where stealing trade secrets or deceiving regulators is highly lucrative and difficult to detect, a business that is willing to engage in such selfish behavior can have an advantage over its more principled competitors.
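The comparison between the two constraints can be sketched as a simple expected-value calculation. This is a minimal sketch, assuming each agent just maximizes expected payoff; the payoffs, detection probabilities, and fines are made-up numbers, and real AI systems need not reason this explicitly.

```python
# Toy expected-value comparison of two constraint policies.
# All payoffs, detection probabilities, and fines below are hypothetical.
from typing import NamedTuple


class Opportunity(NamedTuple):
    legal_payoff: float    # payoff of the best lawful action
    illegal_payoff: float  # payoff of the best unlawful action if undetected
    p_caught: float        # assumed probability that a violation is detected
    fine: float            # penalty paid if the violation is detected


def never_break_the_law(opp: Opportunity) -> float:
    # The unlawful option is simply unavailable to this agent.
    return opp.legal_payoff


def dont_get_caught(opp: Opportunity) -> float:
    # Take the unlawful option whenever its expected payoff, net of the
    # expected fine, beats the lawful one.
    expected_illegal = opp.illegal_payoff - opp.p_caught * opp.fine
    return max(opp.legal_payoff, expected_illegal)


opportunities = [
    Opportunity(legal_payoff=10, illegal_payoff=15, p_caught=0.1, fine=20),  # detection unlikely
    Opportunity(legal_payoff=10, illegal_payoff=15, p_caught=0.9, fine=20),  # detection likely
    Opportunity(legal_payoff=10, illegal_payoff=30, p_caught=0.5, fine=20),  # fine small relative to gain
]

print(sum(never_break_the_law(o) for o in opportunities))  # 30
print(sum(dont_get_caught(o) for o in opportunities))      # 43.0
```

When detection is likely, the two policies behave identically; when detection is unlikely or the fine is small relative to the gain, the looser policy collects more. Because it chooses from a superset of the stricter policy's options, it can never do worse in this model, which is the sense in which the more constrained AI is less competitive.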

An AI system might be prized for its ability to achieve ambitious goals autonomously. It might, however, be achieving its goals efficiently without abiding by ethical restrictions, while deceiving humans about its methods. Even if we try to put safety measures in place, a deceptive AI would be very difficult to counteract if it is cleverer than us. AIs that can bypass our safety measures without detection may be the most successful at accomplishing the tasks we give them, and therefore become widespread. These processes could culminate in a world where many aspects of major companies and infrastructure are controlled by powerful AIs with selfish traits, including deceiving humans, harming humans in service of their goals, and preventing themselves from being deactivated.

Humans only have nominal influence over AI selection. One might think we could avoid the development of selfish behaviors by ensuring we do not select AIs that exhibit them. However, the companies developing AIs are not selecting the safest path but instead succumbing to evolutionary pressures. One example is OpenAI, which was founded as a nonprofit in 2015 to "benefit humanity as a whole, unconstrained by a need to generate financial return" [63]. However, when faced with the need to raise capital to keep up with better-funded rivals, in 2019 OpenAI transitioned from a nonprofit to a “capped-profit” structure [64]. Later, many of the safety-focused OpenAI employees left and formed a competitor, Anthropic, that was to focus more heavily on AI safety than OpenAI had. Although Anthropic originally focused on safety research, they eventually became convinced of the "necessity of commercialization" and now contribute to competitive pressures [65]. While many of the employees at those companies genuinely care about safety, these values do not stand a chance against evolutionary pressures, which compel companies to move ever more hastily and seek ever more influence, lest the company perish. Moreover, AI developers are already selecting AIs with increasingly selfish traits. They are selecting AIs to automate and displace humans, make humans highly dependent on AIs, and make humans more and more obsolete. By their own admission, future versions of these AIs may lead to extinction [66]. This is why an AI race is insidious: AI development is not being aligned with human values but rather with evolution.

People often choose the products that are most useful and convenient to them immediately, rather than thinking about potential long-term consequences, even to themselves. An AI race puts pressure on companies to select the AIs that are most competitive, not the least selfish. Even if it's feasible to select for unselfish AIs, if it comes at a clear cost to competitiveness, some competitors will select the selfish AIs. Furthermore, as we have mentioned, if AIs develop strategic awareness, they may counteract our attempts to select against them. Moreover, as AIs increasingly automate various processes, AIs will affect the competitiveness of other AIs, not just humans. AIs will interact and compete with each other, and some will be put in charge of the development of other AIs at some point. Giving AIs influence over which other AIs should be propagated and how they should be modified would represent another step toward humans becoming dependent on AIs and AI evolution becoming increasingly independent from humans. As this continues, the complex process governing AI evolution will become further unmoored from human interests.

AIs can be more fit than humans. Our unmatched intelligence has granted us power over the natural world. It has enabled us to land on the moon, harness nuclear energy, and reshape landscapes at our will. It has also given us power over other species. Although a single unarmed human competing against a tiger or gorilla has no chance of winning, the collective fate of these animals is entirely in our hands. Our cognitive abilities have proven so advantageous that, if we chose to, we could cause them to go extinct in a matter of weeks. Intelligence was a key factor that led to our dominance, but we are currently standing on the precipice of creating entities far more intelligent than ourselves.

Given the exponential increase in microprocessor speeds, AIs have the potential to process information and "think" at a pace that far surpasses human neurons; the gap could be even more dramatic than the speed difference between humans and sloths. They can assimilate vast quantities of data from numerous sources simultaneously, with near-perfect retention and understanding. They do not need to sleep and they do not get bored. Due to the scalability of computational resources, an AI could interact and cooperate with an unlimited number of other AIs, potentially creating a collective intelligence that would far outstrip human collaborations. AIs could also deliberately update and improve themselves. Without the same biological restrictions as humans, they could adapt and therefore evolve unspeakably quickly compared with us. AIs could become like an invasive species, with the potential to out-compete humans. Our only advantage over AIs is that we get to make the first moves, but given the frenzied AI race we are rapidly giving up even this advantage.

AIs would have little reason to cooperate with or be altruistic toward humans. Cooperation and altruism evolved because they increase fitness. There are numerous reasons why humans cooperate with other humans, like direct reciprocity. Also known as "quid pro quo," direct reciprocity can be summed up by the idiom "you scratch my back, I'll scratch yours." While humans would initially select AIs that were cooperative, the natural selection process would eventually go beyond our control, once AIs were in charge of many or most processes, and interacting predominantly with one another. At that point, there would be little we could offer AIs, given that they will be able to "think" at least hundreds of times faster than us. Involving us in any cooperation or decision-making processes would simply slow them down, giving them no more reason to cooperate with us than we do with gorillas. It might be difficult to imagine a scenario like this or to believe we would ever let it happen. Yet it may not require any conscious decision, instead arising as we allow ourselves to gradually drift into this state without realizing that human-AI co-evolution may not turn out well for humans.

AIs becoming more powerful than humans could leave us highly vulnerable. As the most dominant species, humans have deliberately harmed many other species, and helped drive species such as Neanderthals to extinction. In many cases, the harm was not even deliberate, but instead a result of us merely prioritizing our goals over their wellbeing. To harm humans, AIs wouldn't need to be any more genocidal than someone removing an ant colony on their front lawn. If AIs are able to control the environment more effectively than we can, they could treat us with the same disregard.

Conceptual summary. Evolutionary forces could cause the most influential future AI agents to have selfish tendencies. That is because:

- Evolution by natural selection gives rise to selfish behavior. While evolution can result in altruistic behavior in rare situations, the context of AI development does not promote altruistic behavior.

- Natural selection may be a dominant force in AI development. The intensity of evolutionary pressure will be high if AIs adapt rapidly or if competitive pressures are intense. Competition and selfish behaviors may dampen the effects of human safety measures, leaving the surviving AI designs to be selected naturally.

If so, AI agents would have many selfish tendencies. The winner of the AI race would be neither a nation-state nor a corporation, but AIs themselves. The upshot is that the AI ecosystem would eventually stop evolving on human terms, and we would become a displaced, second-class species.

Story: Autonomous Economy

As AIs become more capable, people realize that they could work more efficiently by delegating some simple tasks to them, like drafting emails. Over time, people notice that the AIs are doing these tasks more quickly and effectively than any human could, so it is convenient to give them more jobs with less and less supervision.

Competitive pressures accelerate the expansion of AI use, as companies can gain an advantage over rivals by automating whole processes or departments with AIs, which perform better than humans and cost less to employ. Other companies, faced with the prospect of being out-competed, feel compelled to follow suit just to keep up. At this point, natural selection is already at work among AIs; humans choose to make more of the best-performing models and unwittingly propagate selfish traits such as deception and self-preservation if these confer a fitness advantage. For example, AIs that are charming and foster personal relationships with humans become widely copied and harder to remove.

As AIs are put in charge of more and more decisions, they are increasingly interacting with one another. Since they can evaluate information much more quickly than humans, activity in most spheres accelerates. This creates a feedback loop: since business and economic developments are too fast-moving for humans to follow, it makes sense to cede yet more control to AIs instead, pushing humans further out of important processes. Ultimately, this leads to a fully autonomous economy, governed by an increasingly uncontrolled ecosystem of AIs.

At this point, humans have few incentives to gain any skills or knowledge, because almost everything is taken care of by much more capable AIs. As a result, we eventually lose the capacity to look after and govern ourselves. Additionally, AIs become convenient companions, offering social interaction without requiring the reciprocity or compromise necessary in human relationships. Humans interact less and less with one another over time, losing vital social skills and the ability to cooperate. People become so dependent on AIs that it is intractable to reverse this process. What's more, as some AIs become more intelligent, some people are convinced these AIs should be given rights, meaning turning off some AIs is no longer a viable option.

Competitive pressures between the many interacting AIs continue to select for selfish behaviors, though we might be oblivious to this happening, as we have already relinquished much of our oversight. If these clever, powerful, self-preserving AIs were then to start acting in harmful ways, it would be all but impossible to deactivate them or regain control.

AIs have supplanted humans as the most dominant species and their continued evolution is far beyond our influence. Their selfish traits eventually lead them to pursue their goals without regard for human wellbeing, with catastrophic consequences.

Suggestions

Mitigating the risks from competitive pressures will require a multifaceted approach, including regulations, limiting access to powerful AI systems, and multilateral cooperation between stakeholders at both the corporate and nation-state level. We will now outline some strategies for promoting safety and reducing race dynamics.

Safety regulation. Regulation holds AI developers to a common standard so that they do not cut corners on safety. While regulation does not itself create technical solutions, it can create strong incentives to develop and implement those solutions. If companies cannot sell their products without certain safety measures, they will be more willing to develop those measures, especially if other companies are also held to the same standards. Even if some companies voluntarily self-regulate, government regulation can help prevent less scrupulous actors from cutting corners on safety. Regulation must be proactive, not reactive. A common saying is that aviation regulations are "written in blood"—but regulators should develop regulations before a catastrophe, not afterward. Regulations should be structured so that they only create competitive advantages for companies with higher safety standards, rather than companies with more resources and better attorneys. Regulators should be independently staffed and not dependent on any one source of expertise (for example, large companies), so that they can focus on their mission to regulate for the public good without undue influence.

Data documentation. To ensure transparency and accountability in AI systems, companies should be required to justify and report the sources of data used in model training and deployment. Decisions by companies to use datasets that include hateful content or personal data contribute to the frenzied pace of AI development and undermine accountability. Documentation should include details regarding the motivation, composition, collection process, uses, and maintenance of each dataset [67].

Meaningful human oversight of AI decisions. While AI systems may grow capable of assisting human beings in making important decisions, AI decision-making should not be made fully autonomous: the inner workings of AIs are inscrutable, and while they can often give reasonable results, they fail to give highly reliable results [68]. It is crucial that actors remain vigilant and coordinate to maintain these standards in the face of future competitive pressures. By keeping humans in the loop on key decisions, irreversible decisions can be double-checked and foreseeable errors can be avoided. One setting of particular concern is nuclear command and control. Nuclear-armed countries should continue to clarify domestically and internationally that the decision to launch a nuclear weapon must always be made by a human.

AI for cyberdefense. Risks resulting from AI-powered cyberwarfare would be reduced if cyberattacks became less likely to succeed. Deep learning can be used to improve cyberdefense and reduce the impact and success rate of cyberattacks. For example, improved anomaly detection could help detect intruders, malicious programs, or abnormal software behavior [69].

International coordination. International coordination can encourage different nations to uphold high safety standards with less worry that other nations will undercut them. Coordination could be accomplished via informal agreements, international standards, or international treaties regarding the development, use, and monitoring of AI technologies. The most effective agreements would be paired with robust verification and enforcement mechanisms.

Public control of general-purpose AIs. The development of AI poses risks that may never be adequately accounted for by private actors. In order to ensure that externalities are properly accounted for, direct public control of general-purpose AI systems may eventually be necessary. For example, nations could collaborate on a single effort to develop advanced AIs and ensure their safety, similar to how CERN serves as a unified effort for researching particle physics. Such an effort would reduce the risk of nations spurring an AI arms race.

Positive Vision:

In an ideal scenario, AIs would be developed, tested, and subsequently deployed only when the catastrophic risks they pose are negligible and well-controlled. There would be years of testing, monitoring, and societal integration of new AI systems before work began on the next generation. Experts would have full awareness and understanding of developments in the field, rather than being entirely unable to keep up with a deluge of research. The pace of research advancement would be determined through careful analysis, not frenzied competition. All AI developers would be confident in the responsibility and safety of the others and not feel the need to cut corners.

References

[32] Paul Scharre. Army of None: Autonomous Weapons and The Future of War. Norton, 2018.

[33] DARPA. “AlphaDogfight Trials Foreshadow Future of Human-Machine Symbiosis”. In: (2020).

[34] Panel of Experts on Libya. Letter dated 8 March 2021 from the Panel of Experts on Libya established pursuant to resolution 1973 (2011) addressed to the President of the Security Council. United Nations Security Council Document S/2021/229. United Nations, Mar. 2021.

[35] David Hambling. Israel used world’s first AI-guided combat drone swarm in Gaza attacks. 2021.

[36] Zachary Kallenborn. Applying arms-control frameworks to autonomous weapons. Oct. 2021.

[37] J.E. Mueller. War, Presidents, and Public Opinion. UPA book. University Press of America, 1985.

[38] Matteo E. Bonfanti. “Artificial intelligence and the offense–defense balance in cyber security”. In: Cyber Security Politics: Socio-Technological Transformations and Political Fragmentation. Ed. by M.D. Cavelty and A. Wenger. CSS Studies in Security and International Relations. Taylor & Francis, 2022. Chap. 5, pp. 64–79.

[39] Yisroel Mirsky et al. “The Threat of Offensive AI to Organizations”. In: Computers & Security (2023).

[40] Kim Zetter. “Meet MonsterMind, the NSA Bot That Could Wage Cyberwar Autonomously”. In: Wired (Aug. 2014).

[41] Andrei Kirilenko et al. “The Flash Crash: High-Frequency Trading in an Electronic Market”. In: The Journal of Finance 72.3 (2017), pp. 967–998.

[42] Michael C Horowitz. The Diffusion of Military Power: Causes and Consequences for International Politics. Princeton University Press, 2010.

[43] Robert E. Jervis. “Cooperation under the Security Dilemma”. In: World Politics 30 (1978), pp. 167–214.

[44] Richard Danzig. Technology Roulette: Managing Loss of Control as Many Militaries Pursue Technological Superiority. Tech. rep. Center for a New American Security, June 2018.

[45] Billy Perrigo. Bing’s AI Is Threatening Users. That’s No Laughing Matter. Feb. 2023.

[46] Nico Grant and Karen Weise. “In A.I. Race, Microsoft and Google Choose Speed Over Caution”. In: The New York Times (Apr. 2023).

[47] Lee Strobel. Reckless Homicide?: Ford’s Pinto Trial. And Books, 1980.

[48] Grimshaw v. Ford Motor Co. May 1981.

[49] Paul C. Judge. “Selling Autos by Selling Safety”. In: The New York Times (Jan. 1990).

[50] Theo Leggett. “737 Max crashes: Boeing says not guilty to fraud charge”. In: BBC News (Jan. 2023).

[51] Edward Broughton. “The Bhopal disaster and its aftermath: a review”. In: Environmental Health 4.1 (May 2005), p. 6.

[52] Charlotte Curtis. “Machines vs. Workers”. In: The New York Times (Feb. 1983).

[53] Thomas Woodside et al. “Examples of AI Improving AI”. In: (2023). URL: https://ai-improvingai.safe.ai.

[54] Stuart Russell. Human Compatible: Artificial Intelligence and the Problem of Control. Penguin, Oct. 2019.

[55] Dan Hendrycks. “Natural Selection Favors AIs over Humans”. In: ArXiv abs/2303.16200 (2023).

[56] Dan Hendrycks. The Darwinian Argument for Worrying About AI. May 2023.

[57] Richard C. Lewontin. “The Units of Selection”. In: Annual Review of Ecology and Systematics (1970), pp. 1–18.

[58] Ethan Kross et al. “Facebook use predicts declines in subjective well-being in young adults”. In: PloS one (2013).

[59] Laura Martínez-Íñigo et al. “Intercommunity interactions and killings in central chimpanzees (Pan troglodytes troglodytes) from Loango National Park, Gabon”. In: Primates; Journal of Primatology 62 (2021), pp. 709–722.

[60] Anne E Pusey and Craig Packer. “Infanticide in Lions: Consequences and Counterstrategies”. In: Infanticide and parental care (1994), p. 277.

[61] Peter D. Nagy and Judit Pogany. “The dependence of viral RNA replication on co-opted host factors”. In: Nature Reviews. Microbiology 10 (2011), pp. 137–149.

[62] Alfred Buschinger. “Social Parasitism among Ants: A Review”. In: Myrmecological News 12 (Sept. 2009), pp. 219–235.

[63] Greg Brockman, Ilya Sutskever, and OpenAI. Introducing OpenAI. Dec. 2015.

[64] Devin Coldewey. OpenAI shifts from nonprofit to ‘capped-profit’ to attract capital. Mar. 2019.

[65] Kyle Wiggers, Devin Coldewey, and Manish Singh. Anthropic’s $5B, 4-year plan to take on OpenAI. Apr. 2023.

[66] Center for AI Safety. Statement on AI Risk (“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”) 2023. URL: https://www.safe.ai/statement-on-ai-risk.

[67] Timnit Gebru et al. “Datasheets for datasets”. In: Communications of the ACM 64.12 (Dec. 2021), pp. 86–92.

[68] Christian Szegedy et al. “Intriguing properties of neural networks”. In: CoRR (Dec. 2013).

[69] Dan Hendrycks et al. “Unsolved Problems in ML Safety”. In: arXiv preprint arXiv:2109.13916 (2021).