In this post, I’ll aim to do the following:

- Give a comprehensive nontechnical summary of what Responsible Capability Scaling (RCS) policies are

- Describe their theory of change

- Provide context by comparing them to two types of self-regulation: formal risk management and industry standard setting.

- Make some predictions about what type of regulatory regime we'd be in if RCS policies become the basis for government regulation of frontier AI, and argue that it's unlikely to be the best place to aim for.

I also provide a reading list for learning more about RCS policies and comparisons between the specific policies released by different labs so far.

OpenAI, Anthropic, and Google DeepMind have each released RCS policies under different names and frameworks: OpenAI with the Preparedness Framework (PF), Anthropic with its Responsible Scaling Policy (RSP), and Google DeepMind with the Frontier Safety Framework (FSF). For clarity, I’ll use the term RCS policy when talking about this family of policies in general, and the relevant acronyms when talking about specific policies from each company.

I won’t go into depth comparing the existing RCS policies, mainly because I think others have already done a good job of this (see Reading List for examples). Instead I'll focus on the theory of change-level questions about these policies.

These views are my own and don’t represent 80,000 Hours.

What are Responsible Capability Scaling policies?

Responsible capability scaling (RCS) policies are voluntary commitments frontier AI companies make regarding the steps they will take to ensure they are avoiding large-scale risks when developing and deploying increasingly powerful foundation models. Normally an RCS policy will do three main things:

1) Identify a set of harms which a model could give rise to.

2) Identify potential early-warning signs for those dangers and evaluations which may be used to test whether the dangers are present.

3) Commit to implementing certain protective measures to mitigate those dangers.

These harms could come directly from the model, such as dangerous capabilities, but may also include ways the model could be misused or tampered with – such as through cybersecurity vulnerabilities. RCS policies generally differ from other commitments to make AI safer or more responsible in that they are specifically designed to target catastrophic risks arising from future foundation models, rather than focusing on risks arising from present or more narrow systems.

Existing RCS policies categorise models in one of two ways: by assigning a general threat level to the model as a whole, or by evaluating it on a domain-by-domain basis. OpenAI’s Preparedness Framework and DeepMind’s Frontier Safety Framework take the domain-specific path, positing potential threat models for models at different capability levels and testing them in ways relevant to each threat model. For example, DeepMind and OpenAI both test models on their ability to help actors of different levels of sophistication produce a biological weapon.

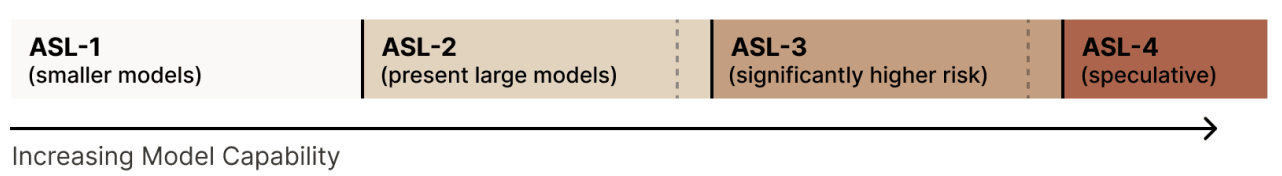

Anthropic takes a more general approach modelled on biosafety levels, which gives a model an overall safety score. For example, Claude 2 was classed as AI Safety Level 2 (ASL-2). This more general approach allows you to provide uniform standards for a model across domains, rather than taking a more patchwork approach. It may also be motivated by a desire to be structurally consistent with risk frameworks used in other high-stakes domains, like biosecurity and the nuclear power industry.


Once a new model has been classified, safety teams try to evaluate its risks by designing comprehensive evaluations (or evals) that elicit capabilities and vulnerabilities in the model. Though I won’t go into much depth about the specifics of how different evals work, I find it useful to borrow Evan Hubinger’s division of evals into separate buckets. Capabilities evals are tests designed to determine whether a model could do a certain dangerous thing if it or the user tried to; alignment evals test whether the model would be ‘motivated’ to do dangerous things if it could. Common techniques used in capabilities evals include expert red-teaming, capture-the-flag-style tests, and automated evaluations in which a smaller or more narrow model evaluates the performance of the test model. Since AI evals are at an early stage as a field, it’s awfully hard to know in advance whether a suite of tests will be comprehensive, and RCS policies often involve passing evals which haven’t been designed yet. Some organisations, such as the UK AI Safety Institute and METR, have provided third-party evaluations and/or pre-release testing for the family of models released in 2023 and early 2024, but at present most evaluations are done inside AI companies.

Another important element of RCS policies is the inclusion of internal governance and enforcement mechanisms. Even if a company has identified some potential dangers associated with deploying or training a new model, it still might choose to proceed despite those risks, both to retain a commercial advantage over competitors and to protect its ability to practise defensive deployment. Because of this, an effective internal policy needs to include internal governance mechanisms (e.g., the three lines of defence approach) which allow some actors to stop or delay development and deployment. A prespecified plan reduces the risk of short-term internal tensions driving decision-making in these situations. Maybe just as important, the policy has to establish specific, action-guiding thresholds at which this can happen – or else it might be ambiguous whether the policy’s trigger point has been reached or not. Common governance mechanisms across RCS policies include: setting up internal safety boards, mandating internal safety audits, upping cybersecurity standards, and siloing important information about the model across different actors. Some policies also include contingency plans for dangerous situations or standards for when to establish new evaluations. For example, Anthropic’s RSP includes a pledge to have a plan in place to pause a training run if a safety threshold is reached, although the exact point at which that would be triggered is not clear.

There is pretty widespread agreement that existing policies do not give sufficiently rigorous or concrete standards to guide the transition to advanced AI – even among AI developers proposing these policies. Presumably future policies will provide more detail. However, AI companies have not been especially transparent about their progress on, or plans for, implementing their policies, beyond promising to reevaluate their models for every 4x (Anthropic) or 6x (OpenAI and Google DeepMind) jump in effective compute and promising new versions of current RCS policies. I think rather than delving into the specifics of each policy’s standards and wording (which other people have done great jobs of already), it is more instructive to see whether the adoption of RCS policies by frontier developers points us towards a good regime for AI governance in general.

Summing up, RCS policies are self-regulatory frameworks in which:

- AI companies make self-enforced commitments for how they’ll deal with catastrophic risks from future models.

- Thresholds where hazardous behaviours can emerge are identified and companies commit to implementing mitigation strategies before those thresholds are reached.

- Internal governance structures are created to guide an organisation’s behaviour in order to increase the chances that they safely develop and deploy new systems.

- AI companies iteratively refine the details of their safety commitments as the science of model evaluation and safety mechanisms advances.

What is the theory of change for RCS policies?

Based on the writings of people involved in the development and implementation of RCS policies, I think their theory of change consists of three main components:

- Creating a self-regulatory framework that encourages model evaluation while remaining flexible enough to cover many different potential threats.

- Incentivising other AI companies to do some small amount of coordination on safety.

- Providing a shovel-ready regulatory framework to speed up the adoption of a comprehensive AI governance framework.

1 - RCS policies allow for flexible interventions and standard setting experimentation

One distinctive thing about RCS policies is that they are iterative and sensitive to how models change as they scale. RCS policies try to forecast dangerous thresholds in advance and can impose protective measures at different stages of the model lifecycle to account for this. In theory, this makes the safety measures more closely tied to the model’s development since the safety standards become a necessary milestone for proceeding with a training run or deployment plan. Since the regulation of foundation models is in a fairly early stage, with no comprehensive regulatory framework in place in the US or UK, this allows developers to experiment to find suitable safety standards and coordinate on safety.

RCS policies allow developers to experiment with different threat models and safety tests at multiple stages of the model’s lifecycle. They also allow for some flexibility in which hazards companies aim to address. Many vulnerabilities mentioned in presently available policies focus on risks related to the model’s potential for use as an offensive cybersecurity weapon, an aid to creating biological weapons, or a vector of deception/misinformation, as well as more general-purpose boosts to the model’s autonomy. However, since the policies are designed to iterate as models scale, they can be modified to include mitigations related to new threats as they arise. This may allow different companies to experiment with creating mitigations for slightly different threats while model capabilities remain at a manageable level, leading to more of the potential threat landscape being covered. As regulators and AI companies learn more about which threats are more likely, I expect these policies to converge on the most pressing risks.

One benefit of RCS policies being self-imposed commitments is that they can target different stages of the model’s life cycle. For risks related to model autonomy or self-improvement, it seems especially important to identify them at an early stage. These threats seem less likely to be comprehensively targeted and addressed by regulators than deployment-stage risks, since addressing them requires regulators to engage more intrusively with AI companies’ day-to-day operations and R&D. Since RCS policies are self-imposed, they don’t face these types of obstacles and can be made specific to the models of the company in question.

2 - RCS policies may provide incentives to coordinate on safety

Despite being self-imposed, RCS policies may help AI companies coordinate on safety. In a situation where several companies are able to produce models of similar sophistication, competitive dynamics can weigh heavily on decisions about when to release models. If each of these competitors made binding commitments to avoid certain unsafe behaviours, it would be less costly for an individual company to proceed more cautiously. In a more extreme version of this, complementary RCS policies could make industry-wide standards or auditing more appealing to frontier model developers, since you’d want to reduce the chances of your competitors cheating on their commitments by applying enforcement mechanisms to all three companies. The creation of the Frontier Model Forum may be a sign that something like this is occurring.

One way to draw out the ways RCS policies might help labs coordinate is by comparing them to calls to pause frontier model development. Evan Hubinger and Holden Karnofsky have argued that RSPs are essentially “pauses done right”. They argue that in advocating for pausing AI development in general, you risk creating a badly executed pause, which might be worse than the status quo because it could incentivise a race to the bottom by reducing regulatory effectiveness. For example, a legally enforced pause in the US might just lead companies to relocate somewhere with less strict regulations, and would likely crush AI companies’ willingness to cooperate with regulators. At the moment, evaluation and safety testing relies heavily on AI companies’ willingness to provide regulators with access to their models. Until there are better methods for gaining visibility on the models before deployment, it’s not obvious that a pause which costs us this cooperation would leave us in a better position than the status quo. Coordination losses seem especially likely to arise when a pause does not address these questions:

- What criteria are we using to decide which actors should be involved in the pause?

- How do we avoid some actors pausing but not others?

- When, if ever, should the pause end?

- Who gets to decide and enforce the questions above?

On the other hand, when RCS policies make specific, legible, technically sound commitments they provide an answer to all of these – at least within a single firm.

3 - RCS policies provide a shovel-ready framework regulators could adopt to create a comprehensive regulatory regime for catastrophic risks from foundation models.

While the adoption of RCS policies may help with industry-level standard setting, they can also help shape future legally binding regulation. Self-regulation is a common way industry can signal to regulators that they are self-governing adequately and that more onerous legal requirements are not needed. On the other hand, it can also be a way for industries that know they are going to be regulated to point to some standards that will be relevant to safety, but don’t impose an undue commercial burden on them.

Jack Clark has publicly stated that he views Anthropic’s RSP as a sort of shovel-ready framework which regulators can adopt and operationalise in the future. Evan Hubinger, also from Anthropic, made a similar point in “AI coordination needs clear wins”. Further, executives from many of the US’s largest AI companies have publicly requested that regulators get involved in the industry. If you take them at their word, it is unsurprising that they would attempt to self-regulate to help guide future standards. Again, the creation of the Frontier Model Forum is some evidence for the projection of an industry-wide consensus among these developers.

From a safety standpoint, this may dramatically speed up the move towards a comprehensive AI regulation framework and reduce the chances of piecemeal or near-term-focused AI regulation becoming dominant. At the same time, RCS policies create the norm that companies be allowed to continue scaling more or less indefinitely as long as certain evaluations and mitigations are put in place. Given that a spate of bills targeting AI has been passed in US state legislatures recently, it is highly likely that more nation-wide AI regulation will follow. This means it may be an especially influential time for AI companies to shape this coming wave of regulations, even while genuinely being concerned about catastrophic risks. For that reason, I think the assumption of government regulation is a key part of the theory of change for responsible scaling policies.

Are RCS policies preferable to other forms of self-regulation that could be adopted?

Since RCS policies are one of the dominant forms of self-regulation being adopted by frontier model developers, it is important to compare them to alternatives which could be adopted. Two families of regulation seem especially salient here: formal risk management and the industry standard-setting approach. Because of its flexibility and lack of canonical formulation, the RCS framework may adopt elements of both approaches. However, the two approaches are still different enough from what I would expect of an RCS-based regulatory regime that it is worth considering them as alternative frameworks, even though a sort of ‘RCS plus’ policy might incorporate standard-setting and risk management methodologies.

Risk management vs RCS policies

Formal risk management is a popular method of self-regulation in high-stakes industries. Risk management “involves modelling a system’s potential outcomes, identifying vulnerabilities, and designing processes to define and reduce the likelihood of unacceptable outcomes”. Risk management can be contrasted with risk estimation and risk evaluation. In risk estimation, an effort is made to assess and sometimes quantify the risk, while risk evaluation quantifies and weighs risks against potential benefits or side-constraints. RCS policies have some similarities to these approaches in that they try to identify hazards that may arise from scaling models, and impose tests and mitigations to try and address them.

What makes formal risk management distinct from other approaches to risk is that it involves several distinct method-driven steps:

- Identification: A procedure-driven, proactive attempt to identify potential risks arising from the model’s training or deployment in different contexts. For example, a fishbone method might be used to seek out hazards arising from different threat vectors or at different levels of brainstorming effort.

- Assessment: A quantitative assessment of the severity and likelihood of each harm which could result from the model being trained or deployed. For example, the life insurance industry sometimes calculates a Human Life Value to estimate the amount that would need to be paid out if a person died at various stages of their career and in different financial situations. In addition to valuing the harm a hazard would cause, risk assessments can make probabilistic assessments of the hazard’s likelihood in a variety of scenarios.

- Setting risk tolerance: The policy designer determines the amount of risk they are willing to accept for each potential harm and pledges to keep risks below that level. Setting a predefined risk tolerance both creates a definite threshold at which a risk is or isn’t being contained and directly communicates to external stakeholders how the developer is making risk-benefit tradeoffs.

- Estimation: The policy designer works with the AI organisation to estimate the current level of risk of each hazard identified earlier. For each of the identified hazards, this step involves assessing their likelihood with and without different mitigations being put in place.

- Mitigation: For each risk that is above the predefined acceptable threshold, the company develops safety measures or design changes to get risks below that threshold. In the RCS case, these might involve things like specific fine-tuning, information security changes, or restrictions to the model’s scaffolding.

- Evaluation: The policy designer checks whether risks are below the acceptable threshold (i.e., did the mitigations work?). Unlike in the capabilities and alignment evaluations that RCS policies currently employ, we would expect these evaluations to then feed back into a re-assessment of the risks arising from the model. For the most part, as reported in current RCS policies, evals take more of a discrete pass/fail approach than a continuous ‘all risks considered’ one.

- Monitoring: The policy designer decides how to check that risks remain at this level (e.g., for future models or with additional scaffolding). Often this will be done at set time intervals, through random evaluations, or with automated methods. This step is crucial to helping avoid sandbagging in the evaluation stage or accidental modifications to the model during fine-tuning that undo some of its safeguards (e.g., the kind of thing that may have happened with GPT-4’s API).

- Reporting and disclosure norms: The policy includes some norms around disclosure and reporting, both inside and outside the lab. Some amount of standardisation here may help developers’ safety and governance teams hold decision-makers accountable for pausing or modifying models that fail to meet their risk targets. Further details relating to sharing risk analysis with external regulators could also be found in this part of a risk management policy.
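As a rough illustration of how the risk tolerance, estimation, and mitigation steps above fit together, here is a minimal Python sketch of a risk register. Everything in it is hypothetical: the `Hazard` class, the hazard names, and all of the numbers are made up for illustration and are not taken from any company's policy.

```python
from dataclasses import dataclass


@dataclass
class Hazard:
    """One row of a hypothetical risk register."""
    name: str
    estimated_probability: float  # estimated chance of the harm, given current mitigations (made up)
    tolerance: float              # maximum acceptable chance, fixed in advance (made up)

    def within_tolerance(self) -> bool:
        # The estimate is compared against a threshold that was set beforehand,
        # rather than judged qualitatively after the fact.
        return self.estimated_probability <= self.tolerance


def needs_mitigation(register: list[Hazard]) -> list[str]:
    """Return the names of hazards whose estimated risk exceeds the tolerance."""
    return [h.name for h in register if not h.within_tolerance()]


# Illustrative entries only; the values carry no empirical meaning.
register = [
    Hazard("cyber-offence uplift", estimated_probability=1e-3, tolerance=1e-4),
    Hazard("CBRN uplift", estimated_probability=1e-6, tolerance=1e-5),
]

print(needs_mitigation(register))
```

The point of the sketch is only that a published tolerance makes the pass/fail decision mechanical and checkable by outsiders; how the probability estimates themselves are produced is the hard part and is not modelled here.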

In their current form, RCS policies involve risk identification, evaluation, and mitigation, but most skip over (or do not publicly report) their risk tolerance, estimation, monitoring, or disclosure plans. I think it's likely that many elements of these steps are in AI companies’ internal versions of RCS policies. However, their lack of transparency makes it hard to distinguish between an RCS policy that is stronger than it publicly appears and one that is exactly as vague as the published policy. Since they don’t set concrete levels at which the risk becomes unacceptable and instead rely on qualitative or threshold-based assessments of risk, RCS policies are opaque and rely heavily on AI companies following them in spirit rather than to the letter without public oversight as a default.

One reason we might prefer formal risk management to RCS policies is that roughly quantifying the risks helps contextualise where current protective measures are relative to model capabilities. Not clearly quantifying the risks allows AI companies to signal to regulators that they are attending to novel risks from their models while obscuring how precarious the situation is, which may stave off harsher government action. Even on a more charitable picture, an AI company might have a different risk tolerance than government regulators, and not providing precise boundaries for the risks hides this. For example, a model could be classified as ‘low risk’ in the relevant CBRN safety category of OpenAI’s or Google DeepMind’s RCS policy. However, it’s not totally clear what that actually means other than that the evals didn’t trigger the conditions for the ‘medium’ risk category. Does that mean you ran an evaluation 50 times and the mitigation worked in all 50 cases, or does it mean the mitigation worked 47/50 times and you consider that an acceptable level of risk?
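To make the 50/50 versus 47/50 question concrete, here is a small Python sketch of what each result could tell you about the underlying failure rate. It computes a standard one-sided Clopper-Pearson (exact binomial) upper bound by bisection. It assumes the evaluation runs are independent and equally likely to fail, which real evals may not satisfy, and the function names are hypothetical.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def failure_rate_upper_bound(failures: int, trials: int, confidence: float = 0.95) -> float:
    """One-sided Clopper-Pearson upper bound on the true failure probability.

    Finds the largest failure rate p that is still consistent with seeing
    at most `failures` failures in `trials` independent runs.
    """
    if failures >= trials:
        return 1.0
    alpha = 1 - confidence
    lo, hi = 0.0, 1.0
    for _ in range(60):  # bisection; the CDF is decreasing in p
        mid = (lo + hi) / 2
        if binom_cdf(failures, trials, mid) > alpha:
            lo = mid  # data still plausible at this rate, so the bound is higher
        else:
            hi = mid
    return hi


print(failure_rate_upper_bound(0, 50))  # mitigation worked 50/50 times
print(failure_rate_upper_bound(3, 50))  # mitigation worked 47/50 times
```

Under these assumptions, even a perfect 50/50 record only supports the claim that the failure rate is below roughly 6% at 95% confidence, and a 47/50 record supports a noticeably weaker claim. Whether either is acceptable depends on a risk tolerance that current policies do not state.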

You could argue this is too high of a bar to set for AI regulation, at least at this stage. For RCS proponents like Evan Hubinger, these policies are clear, unobjectionable frameworks that regulators can borrow from when crafting legislation. Presumably details like those found in a formal risk management framework could come later. Some of the steps mentioned above were laid out more clearly in the commitments AI companies made at the recent AI Safety Summit in Seoul (see Outcome 1), but it remains to be seen which of them will be implemented and by which companies.

Standards setting approach vs RCS policies

Since existing RCS policies target similar sets of risks (e.g., cybersecurity capabilities, CBRN, deception), they may help us identify best practices for addressing these risks across the industry. To the extent that current RCS policies have a common goal, establishing general standards for how AI companies should address risks across these domains seems like a good candidate. Because of this, RCS policies are analogous to the ‘best practices’ approach to self-regulation. Since AI safety does not have established, forward-looking best practices, RCS policies may help safely share knowledge and establish model evaluation practices.

The ISO describes standards as “the distilled wisdom of people with expertise in their subject matter and who know the needs of the organizations they represent”, which are usually translated into voluntary commitments from industry. Establishing best practices involves conducting applied research into the specific risks and failure modes of the product. Because of their access to talent, compute, and the models themselves, frontier AI companies are a natural place for early-stage applied research into the potential safety risks of these models.

There is some evidence that RCS policies are an attempt to coordinate best practices with other AI companies and to signal potential dangers to regulators. In July 2023, a few months before the Bletchley Summit, four large AI companies (OpenAI, Anthropic, Microsoft, Google DeepMind) set up the Frontier Model Forum, which is designed to help AI companies coordinate on best practices. As mentioned before, many of the commitments made at the Seoul AI Safety Summit also support the idea that AI companies are working with each other and with regulators to share knowledge and establish safety standards. If that moves us towards a regulatory landscape where more mitigation strategies have been uncovered, then this strengthens the case for RCS policies.

However, how useful the standard-setting approach is may vary significantly based on how many industry players take part and how enforcement is done. Associations with onerous standards and rigorous enforcement may deliver safer standards, but will by default struggle more to attract willing participants. Conversely, lax or badly enforced standards with widespread industry buy-in may create a false sense of security by promising better safety standards than they’re actually delivering.

How good the standard-setting component of RCS frameworks is depends largely on the strength of its enforcement. An example of rigorous enforcement of voluntary standards, which we might want to see in the AI industry, is the Institute of Nuclear Power Operations’ (INPO) model.

Following the Three Mile Island partial meltdown in 1979, the US nuclear power industry’s public image was greatly damaged and INPO was set up as an industry regulatory body – partially as a response to government scrutiny. Under INPO’s self-regulatory regime, intensive plant safety inspections, new training, and safety drilling procedures were implemented. INPO is paid for by American nuclear power companies and works closely with the US government’s Nuclear Regulatory Commission. Although the US nuclear power industry has all but halted the construction of new plants since 1979, INPO serves as a good example of an industry standard-setting body which rigorously enforces and shares best practices.

On the other hand, heavy industry buy-in but lax standard enforcement is found in the chemical industry’s Responsible Care program, which is sometimes pointed to as a case study for the failures of self-regulation.

By default, RCS policies seem more likely to end up looking like the loosely enforced version of standard setting than the rigorous version or the risk management-driven one. Since many of the key inputs to foundation models, such as curated datasets and algorithms, are closely guarded secrets, competitive dynamics will make it more difficult to create standards that are detailed and actionable. Further, unlike in the chemical industry where many of the potential harms are well understood, how we ought to standardise safety practices in AI is much more open-ended and leaves substantially more wiggle room. This means that even if an industry standard-setting body agrees on a sufficiently rigorous set of standards, enforcement will remain difficult as the technology continues to progress quickly. For this reason, I think it is unlikely that, by default, RCS policies will lead to a more desirable self-regulatory regime than the alternatives considered.

Where does the RCS theory of change take AI governance?

I think RCS policies are designed to create shared standards across the frontier AI developers, and likely are meant to become the basis for legally binding regulations. It’s worth asking if this takes us to a regulatory regime we should want, as it may become the base that future foundation model governance efforts are added on to.

In brief, this regulatory regime will likely be pretty favourable to AI developers compared to some alternatives we could end up with. Even if we assume the policies will become more detailed and demanding than the current generation, it leaves us in a regime where AI companies are largely left unfettered as long as they can demonstrate they are performing the right safety evaluations. I think a world with some external oversight of these evaluations (e.g., from governments or auditors) would be preferable to the status quo, but it still leaves developers holding almost all the cards. For example, it may be difficult for an evaluations organisation to verify that they are using the final release version of the model, or in some cases to stress test the model’s safety fine-tuning. Although it is too early to give this much weight, model evaluators already face obstacles getting proper buy-in from AI companies.

Overall, the adoption of an RCS-based regulatory framework would have a lot of positives if done well, but seems structurally less desirable than alternatives that we should be considering. Strict enforcement mechanisms and more detailed technical safety guarantees than evaluations provide should be key parts of these alternatives, and I worry the focus on RCS policies distracts from these more onerous targets. If we are taking seriously that foundation models pose catastrophic risks, then our approach to mitigations should involve publicly overseeable risk management and allow regulators privileged information about the models.

RCS Reading List

Here are some articles I recommend on the topic of RCS policies in general:

- Best introduction: Responsible Scaling Policies - METR

- Jack Titus (Federation of American Scientists) - Can Preparedness Frameworks Pull Their Weight?

- Evan Hubinger (Anthropic) - RSPs are ‘Pauses Done Right’

- Oliver Habryka (LessWrong) and Ryan Greenblatt (Redwood Research) - What’s Up With Responsible Scaling Policies?

- Simeon Campos (SaferAI) - Responsible Scaling Policies are Risk Management Done Wrong

- UK Government - Emerging Processes for Frontier AI Safety (Responsible Capabilities Scaling section)

- Zvi Mowshowitz on the 80,000 Hours podcast.

- Bill Anderson-Samways (IAPS) - Responsible Scaling: Comparing Government Guidance and Company Policy

- Evan Hubinger (Anthropic) - Model Evaluations for Extreme Risks

- Holden Karnofsky - We’re not ready. Thoughts on pausing and responsible scaling policies

- Jack Clark (Anthropic) interview on Politico’s Big Tech Podcast - this has a section on Anthropic's RSP towards the end

Here are three major AI companies’ policies

Some comparisons and more in-depth dives on existing RCS frameworks

- Zvi - On Responsible Scaling Policies

- Zvi - On OpenAI’s Preparedness Framework

- Safer AI - Is OpenAI’s Preparedness Framework Better than Its Competitors’?

Quick picks on risk management and self regulation relevant to AI Safety

- Clymer et al. - Affirmative Safety, An Approach to Risk Management for Advanced AI

- IAEA Report - The future role of risk assessment in nuclear safety

- Jonas Schuett (GovAI) - Three Lines of Defense Against Risks From AI

See also: AI Lab Watch, e.g., AI companies aren’t really using external evaluators
