Intro: Epistemic Maps

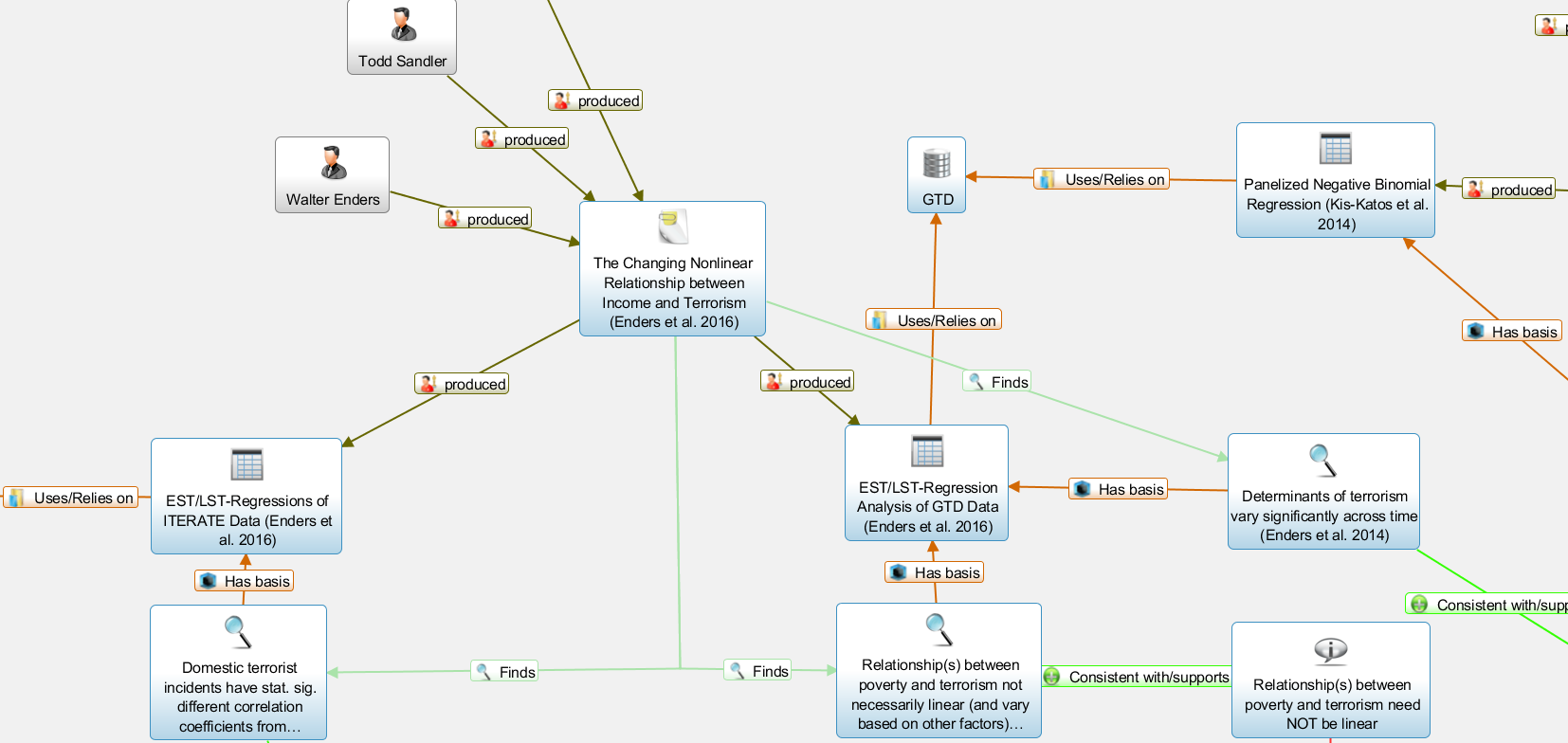

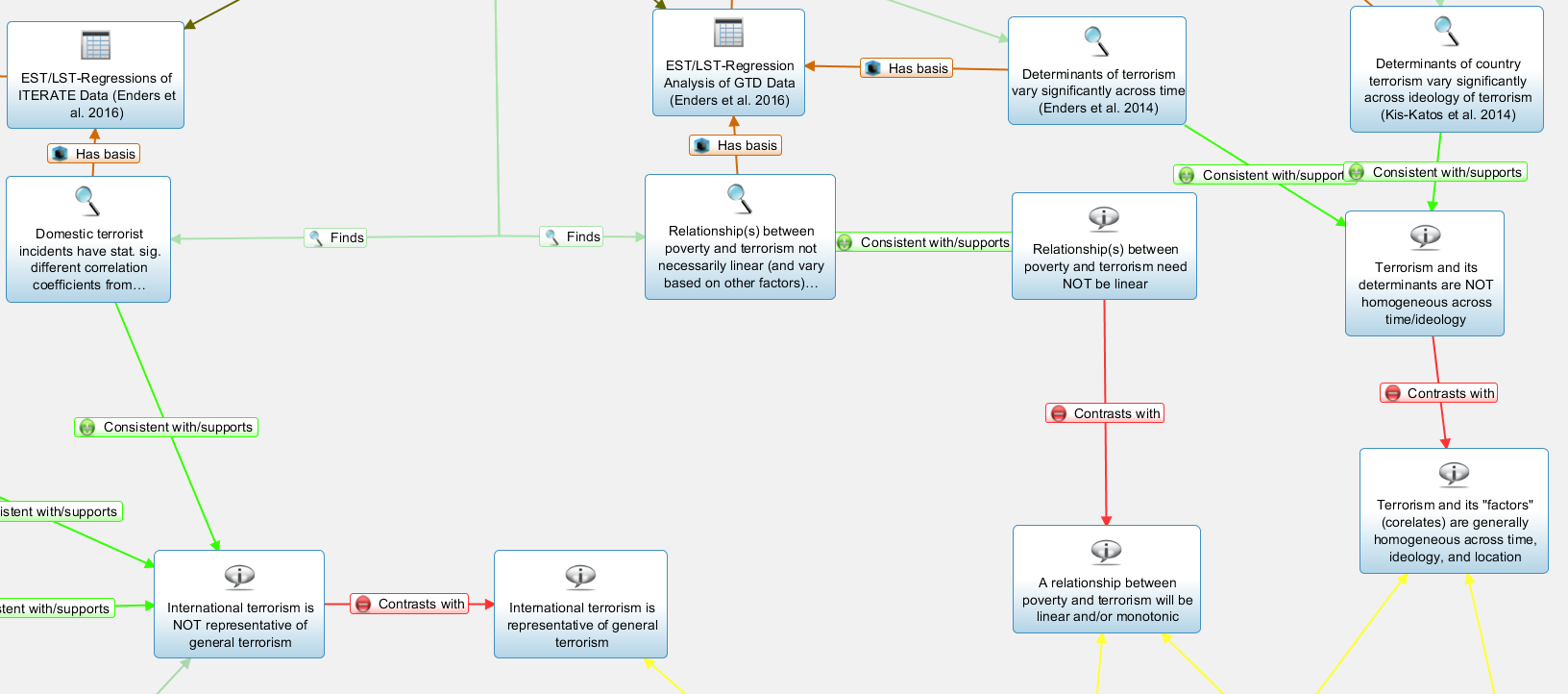

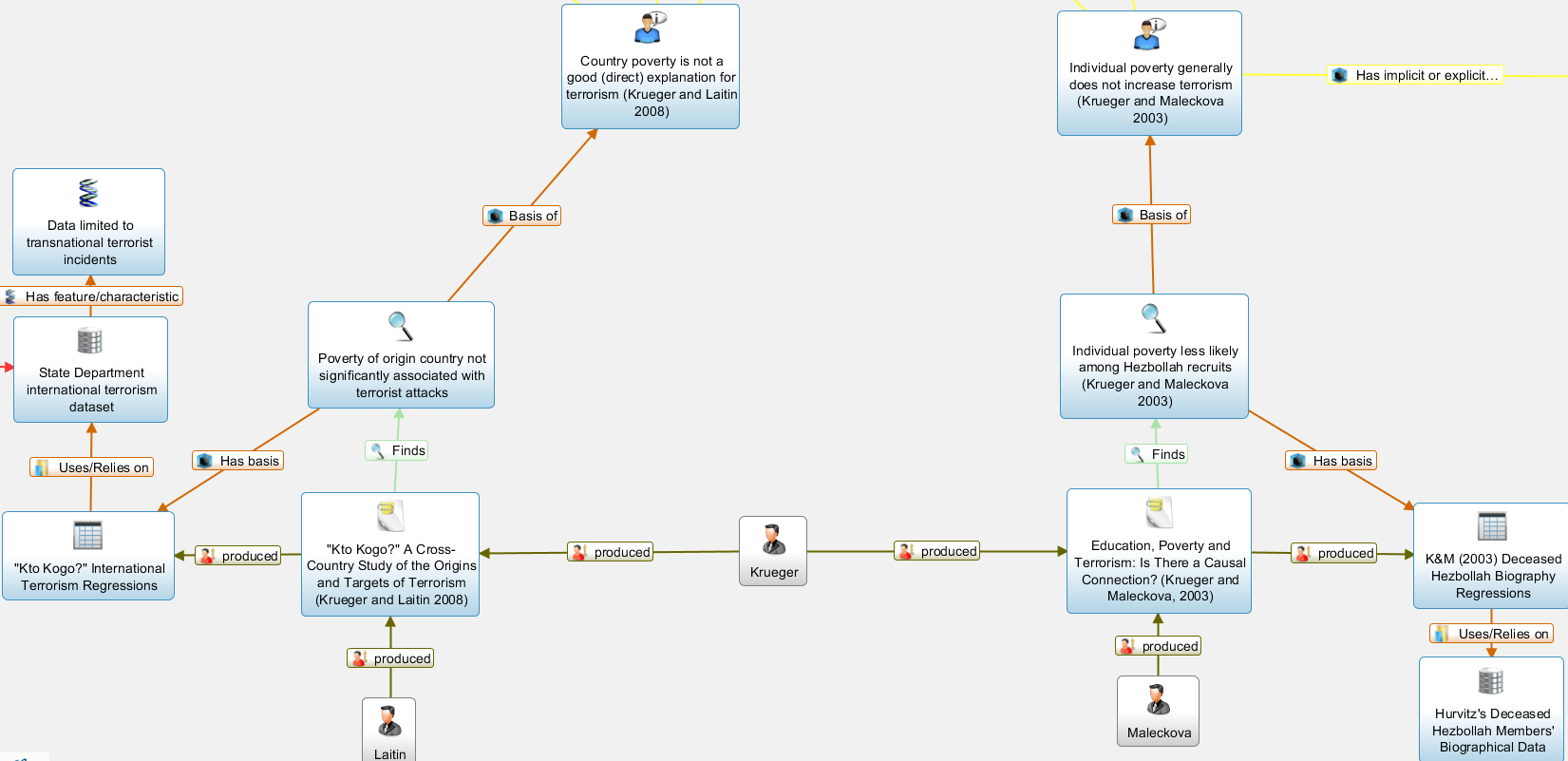

For a while I have been interested in an idea I’ve been calling an epistemic map (or "epistemap", for lack of a better name). The core idea is a virtual space for diagramming concepts, entities, and links, with an emphasis on semantically rich[1] relationships. For example: claim X relies on assumption Y; claim X conflicts or contrasts with claim Y; study X relied on dataset Y and methodology Z to make claim W; and so on. Beyond this, there are many possible variations on what it might look like, but I am particularly interested in collaborative or semi-collaborative work, as I discuss a bit further below. The images above are snapshots from a toy model I made for an internship project, in which I mainly diagrammed some of the published literature on the debated link between poverty and terrorism.

Since coming up with this concept, I’ve searched largely in vain for an example implementation, or even a theoretical description, of what I have in mind. As I said, I don't even know exactly what to call it; I’ve seen related things like mind mapping, “computer-based collaborative argumentation”, and literature mapping in general, but I don’t think I’ve seen anything that really captures what I’m getting at. [The following list was added in an update months later] In particular, some of the key features I have in mind include:

1. A node-and-link system with a free, open structure rather than a mandatory origin node or a similar hierarchical structure. (Many mind-mapping tools I've looked at fail this by forcing you into a hierarchical structure.)

2. Links that are labeled to describe or codify the relationship, rather than generic, unlabeled links that just denote "related". (I have found many programs that fail this basic criterion, and I am generally skeptical of the value of analytical measures that rely on such unlabeled-link data to go beyond some rudimentary claims about the data.)

3. An emphasis on "entities" (or just "nodes") in a very broad sense that includes claims, observations, datasets, studies, people (e.g., authors), etc., rather than just concepts (as in many mind maps I've seen).

4. Some degree of logic functionality (e.g., basic if/then coding) to automate certain things, such as: "Claims X, Y, and Z all depend on assumption W; Study Q found that W is false / assume that W is false; => flag claims X, Y, and Z as 'needs review'; => flag any claims that depend on claims X, Y, or Z...".

5. The ability to collaborate, or at least to share your maps and integrate others'. This opens a rather large can of worms regarding implementation, as I describe later, but one key point is harmonizing contributions across many researchers by promoting universal identifiers for "entities", including at least datasets, studies, and people (see point 3). This should make it easier, and more beneficial, for two researchers to combine their personal epistemaps, which might each include different references to the Global Terrorism Database (GTD), for example.

6. (Preferably, some capability for polyadic relationships rather than strictly dyadic ones, although I recognize this may not be as easy or neat as I imagine, given that I don't think I've seen it done smoothly in any program, let alone in concept-mapping tools.)
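To make the labeled links and the if/then flagging described in the list above a bit more concrete, here is a minimal Python sketch. It is only an illustration under my own assumptions, not a design: entities are plain string IDs, links are (source, relation, target) triples, and the relation labels `relies_on` and `refutes`, as well as the class and method names, are all hypothetical. The one rule it implements is the example from the list: when a study refutes an entity, everything that relies on that entity, directly or transitively, gets flagged "needs review".

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Link:
    """A labeled, directed relationship between two entities."""
    source: str    # e.g. a claim ID
    relation: str  # e.g. "relies_on", "conflicts_with", "refutes" (hypothetical labels)
    target: str    # e.g. an assumption, study, or dataset ID


@dataclass
class Entity:
    kind: str  # "claim", "assumption", "study", "dataset", "person", ...
    flags: set = field(default_factory=set)


class EpistemicMap:
    """Free-form graph: no root node; any entity may link to any other."""

    def __init__(self):
        self.entities: dict[str, Entity] = {}
        self.links: list[Link] = []

    def add_entity(self, eid: str, kind: str) -> None:
        self.entities[eid] = Entity(kind)

    def link(self, source: str, relation: str, target: str) -> None:
        self.links.append(Link(source, relation, target))

    def dependents(self, eid: str, relation: str = "relies_on") -> list[str]:
        """Entities that have a `relation` link pointing at `eid`."""
        return [lk.source for lk in self.links if lk.relation == relation and lk.target == eid]

    def flag_dependents(self, eid: str, flag: str = "needs review") -> set[str]:
        """Breadth-first: flag everything that relies on `eid`, directly or transitively."""
        flagged: set[str] = set()
        queue = deque([eid])
        while queue:
            for dep in self.dependents(queue.popleft()):
                if dep not in flagged:  # the `flagged` set also guards against cycles
                    flagged.add(dep)
                    self.entities[dep].flags.add(flag)
                    queue.append(dep)
        return flagged

    def apply_rules(self) -> set[str]:
        """Hypothetical rule: if a study refutes an entity, flag whatever relies on it."""
        flagged: set[str] = set()
        for lk in self.links:
            if lk.relation == "refutes":
                flagged |= self.flag_dependents(lk.target)
        return flagged


# Usage mirroring the example: X, Y, Z rely on W; V relies on X; study Q refutes W.
m = EpistemicMap()
for eid, kind in [("W", "assumption"), ("X", "claim"), ("Y", "claim"),
                  ("Z", "claim"), ("V", "claim"), ("Q", "study")]:
    m.add_entity(eid, kind)
for claim in ("X", "Y", "Z"):
    m.link(claim, "relies_on", "W")
m.link("V", "relies_on", "X")
m.link("Q", "refutes", "W")

flagged = m.apply_rules()
print(sorted(flagged))  # ['V', 'X', 'Y', 'Z']
```

The linear scan in `dependents` is fine for a toy map; a real tool would presumably index links by target so that propagation stays cheap as the map grows.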

Overall, I don't think I've seen any programs or tools that meet even criteria 1 through 4 (let alone 5 and 6), although a few seem to handle 1, 2, and 3 decently, even though they don't seem to be designed for the purpose I have in mind. (In particular, these include Semantica Pro, Palantir, Cytoscape, Carnegie Mellon's ORA, and a few others.)

I’m really interested in whether a project like this could be useful for working through complicated issues that draw on a variety of loose concepts and findings, rather than on siloed expertise and/or easily transferable equations and scientific laws (e.g., in engineering). I recognize that transferring an overall argument or finding (e.g., the effect of poverty on opportunity cost, which relates to willingness to engage in terrorism) will not be as easy, objective/defensible, or conceptually pure as copying and pasting the melting point of some rubidium alloy as determined by experimental studies (for example). However, I find it hard to believe that we can't substantially improve on our current methods in policy analysis, social science, etc., and I have some reasons to think that maybe, just maybe, methods similar to what I have in mind could help.

Unfortunately, I’m not exactly sure where to begin, and I have some skeptical priors along the lines of “If this were a good idea, it’d already have been done.” (I have a few responses to such reasoning, e.g., “this probably requires internet connectivity/usage, computing, and GUIs on a level really only seen in the past 10-20 years in order to be efficient” and “this probably requires a dedicated community motivated to collaborate on a problem in order to reach a critical mass where adoption becomes worthwhile more broadly”, but I’m still skeptical.)

Potential benefits for AI debates (and other issues in general)

Although I am not involved in AI research, I occasionally read some of the less technical discussions and analyses, and there clearly seems to be a lot of disagreement and uncertainty on big questions like the probability of a fast takeoff and the prospects for AI alignment. Thus, it seems quite plausible that a system like the one I am describing could help improve community understanding by:

- Breaking down big questions by looking at smaller, related questions (e.g., “when will small-sample learning be effective”, “how strong will market incentives vs. market failures (e.g., nonexcludability of findings) be for R&D/investment”, “how feasible and useful will neural mapping be”);

- Sharing and aggregating individual ideas in a compact format, and making it easier to find those ideas when looking for, e.g., “what are the counterarguments to claim X” or “what are the criticisms of study Y”; and

- Highlighting the logical relationships and dependencies between studies, arguments, assumptions, etc. In particular, this could be valuable:

  - When some study or dataset is found to be flawed in some way, since any claims marked as relying on that study or dataset could then be flagged;

  - For identifying some of the most widespread assumptions or disputed claims in a field (which might be useful for deciding where to focus one's research).
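A rough Python sketch of that last use, with invented data: given labeled link triples, rank assumptions by how many claims rely on them (directly or through other claims), and rank claims by how many `conflicts_with` links touch them. The entity IDs, the `assumption:` prefix convention, and the relation labels are all placeholders of my own, not part of any existing tool.

```python
from collections import Counter, defaultdict

# Invented example data: (source, relation, target) triples.
LINKS = [
    ("claim:A", "relies_on", "assumption:rational_actor"),
    ("claim:B", "relies_on", "assumption:rational_actor"),
    ("claim:C", "relies_on", "claim:A"),
    ("claim:C", "relies_on", "assumption:data_representative"),
    ("claim:D", "conflicts_with", "claim:A"),
    ("claim:E", "conflicts_with", "claim:A"),
    ("claim:E", "conflicts_with", "claim:B"),
]


def transitive_dependents(links, relation="relies_on"):
    """Map each entity to every entity that relies on it, directly or indirectly."""
    direct = defaultdict(set)
    for src, rel, tgt in links:
        if rel == relation:
            direct[tgt].add(src)
    result = {}
    for node in list(direct):
        seen, stack = set(), [node]
        while stack:  # depth-first walk over "who relies on this?"
            for dep in direct.get(stack.pop(), ()):
                if dep not in seen:
                    seen.add(dep)
                    stack.append(dep)
        result[node] = seen
    return result


def most_relied_on(links, prefix="assumption:", top=3):
    """Entities with the given ID prefix, ranked by number of (transitive) dependents."""
    ranked = [(node, len(deps)) for node, deps in transitive_dependents(links).items()
              if node.startswith(prefix)]
    return sorted(ranked, key=lambda pair: (-pair[1], pair[0]))[:top]


def most_disputed(links, top=3):
    """Entities ranked by how many conflicts_with links touch them (ties broken by ID)."""
    counts = Counter()
    for src, rel, tgt in links:
        if rel == "conflicts_with":
            counts[src] += 1
            counts[tgt] += 1
    return sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))[:top]


print(most_relied_on(LINKS))
# [('assumption:rational_actor', 3), ('assumption:data_representative', 1)]
print(most_disputed(LINKS))
# [('claim:A', 2), ('claim:E', 2), ('claim:B', 1)]
```

Counting transitive dependents means an assumption underneath a heavily used intermediate claim inherits that claim's weight; whether that is the right notion of "widespread" is itself a judgment call.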

(I have a few other arguments, but for now I’ll leave it at those points)

Implementation (basically: I have a few idea threads but am really uncertain)

In terms of “who creates/edits/etc. the map”, the short answer is that I have a few ideas, such as a very decentralized system akin to a library or buffet, where people make contributions in “layers” that others can choose to download and use, with the option for organizations to "curate" sets of layers they find particularly valuable or insightful. However, I have not yet spent much time thinking about exactly how this would work, especially since I want to probe the idea for other issues first and avoid overcomplicating this question-post. This leads me to my conclusion and questions:
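One way the "layers" idea might fit together with the universal identifiers mentioned earlier, sketched in Python purely as an assumption-laden illustration: each contributor publishes a layer of links using their own local labels plus an optional mapping from those labels to shared IDs, a curator publishes a list of layers it endorses, and a reader merges only the curated layers. Everything here (the `Layer` structure, the `dataset:GTD` ID scheme, the contributor and curator names) is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    author: str
    # local label -> universal ID; labels missing from this dict have no shared ID
    ids: dict = field(default_factory=dict)
    # (local_source, relation, local_target) triples
    links: list = field(default_factory=list)


def resolve(layer: Layer, local_id: str) -> str:
    """Use the universal ID if the author supplied one; otherwise namespace by author."""
    return layer.ids.get(local_id) or f"{layer.author}/{local_id}"


def merge_layers(layers) -> set:
    """Union of all links, with entities from different layers unified by universal ID."""
    return {
        (resolve(layer, src), rel, resolve(layer, tgt))
        for layer in layers
        for src, rel, tgt in layer.links
    }


# A "library" of contributed layers (all hypothetical).
library = {
    "alice": Layer("alice", {"gtd": "dataset:GTD"}, [("c1", "relies_on", "gtd")]),
    "bob": Layer("bob", {"GlobalTerrorismDB": "dataset:GTD", "s1": "study:hypothetical-1"},
                 [("s1", "uses", "GlobalTerrorismDB")]),
    "carol": Layer("carol", {}, [("c9", "conflicts_with", "c1")]),
}
# A curator's endorsed set of layers; a reader might load this instead of everything.
curations = {"hypothetical-curator": ["alice", "bob"]}

merged = merge_layers(library[name] for name in curations["hypothetical-curator"])
# Both links now point at the same "dataset:GTD" node despite different local labels.
for link in sorted(merged):
    print(link)
```

Namespacing unmatched labels by author avoids accidentally merging two people's unrelated "c1" nodes (carol's "c1" stays `carol/c1`); the cost is that duplicates without shared IDs remain separate until someone maps them.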

Questions

Ultimately, I can go into more detail if people would like; I have some other thoughts scattered across various documents and notes, but I’ve found it a bit daunting to collect it all in one place (i.e., I’ve been procrastinating on writing a post about this for months now). I’ve also worried that it might all be a bit much for the reader. So I figured it might be best to just throw out the broad, fuzzy idea and get some initial feedback, especially on the following questions:

- Does something like this exist and/or what is the closest concept that you can think of?

- (With the note that I have not spent all that much time thinking about specific implementation details) Does this sound like a feasible/useful concept in general? E.g., would there be enough interest/participation to make it worthwhile? Would the complexity outweigh/restrict the potential benefits?

- Does it sound like it could be useful for problems/questions in AI specifically?

- Are there any other problem areas (especially those relevant to EA) where it seems like this might be particularly helpful?

- (Do you have alternative name suggestions?)

- (Any other feedback you’d like to provide!)

[1] I.e., conceptual or labeled; see the illustrations provided.

What you describe there is probably one of the most similar concepts I've seen thus far, but I think a potentially important difference is that I am particularly interested in a system that allows/emphasizes semantically-richer relationships between concepts and things. From what I saw in that post, it looks like the relationships in the project you describe are largely just "X influences Y" or "X relates to/informs us about Y", whereas the system I have in mind would allow identifying relationships like "X and Y are inconsistent claims," "Z study ha...