Dear EAs,

After the interview with Tristan Harris, Rob asked EAs to do their own research on aligning recommender systems / short-term AI risks. A few weeks ago I posted a short question about donating against these risks. Since then I have listened to the 2.5-hour podcast on the topic and read several articles about it on this forum (https://forum.effectivealtruism.org/posts/E4gfMSqmznDwMrv9q/are-social-media-algorithms-an-existential-risk, https://forum.effectivealtruism.org/posts/xzjQvqDYahigHcwgQ/aligning-recommender-systems-as-cause-area, https://forum.effectivealtruism.org/posts/ptrY5McTdQfDy8o23/short-term-ai-alignment-as-a-priority-cause).

I would love to collaborate with others on collecting more in-depth arguments, including some back-of-the-envelope calculations / Fermi estimates of the possible scale of the problem. I've spent some hours creating a structure, including some next steps. In particular, we should focus on the first argument, since I feel the mental health one is very shaky in light of recent research.

I haven't started reading the papers mentioned below (beyond the abstracts). We can define multiple work streams and divide them among collaborators. My expertise is mostly in back-of-the-envelope calculations / Fermi estimates, given my background as a management consultant. I am especially looking for people who like, or are good at, assessing the value of scientific papers.

Please drop a message below or send an e-mail to jan-willem@effectiefaltruisme.nl if you want to participate.

Arguments in favour of aligning recommender systems as cause area

Social media causes political polarization

- Polarization causes less international collaboration

- Proved by paper?

- Brexit: What are the odds that social media was decisive in the outcome?

- https://journals.sagepub.com/doi/pdf/10.1177/0894439317734157

- https://comprop.oii.ox.ac.uk/wp-content/uploads/sites/93/2017/12/Russia-and-Brexit-v27.pdf

- https://www.theguardian.com/commentisfree/2020/jul/21/russian-meddling-brexit-referendum-tories-russia-report-government

- Trump: What are the odds that social media was decisive in the outcome?

- Less appetite for EU

- Brexit: What are the odds that social media was decisive in the outcome?

- This increases risks for other (X-)risks:

- Extreme Climate change scenarios

- Estimate Trump’s impact on climate (What is the chance that an event like this causes certain amplifiers of climate change to push us towards extreme scenarios?)

- Direct

- Indirect through other countries doing less

- Show that more instead of less international collaboration is needed if you want to decrease the probability of extreme scenarios

- Nuclear war (increasing Sino-American tensions)

- AI safety risks from misuse (through Sino-American tensions)

- (Engineered) Pandemics

- Can we already calculate extra deaths caused through misinformation?

- Increased chances of biowarfare

- Lower economic growth because of trade barriers / protectionist measures

- Calculate the extra economic growth attributable to the EU to estimate what it would cost if the EU falls apart?

- Convert to possible additional QALYs?

Next steps here:

- Find papers for all the relevant claims

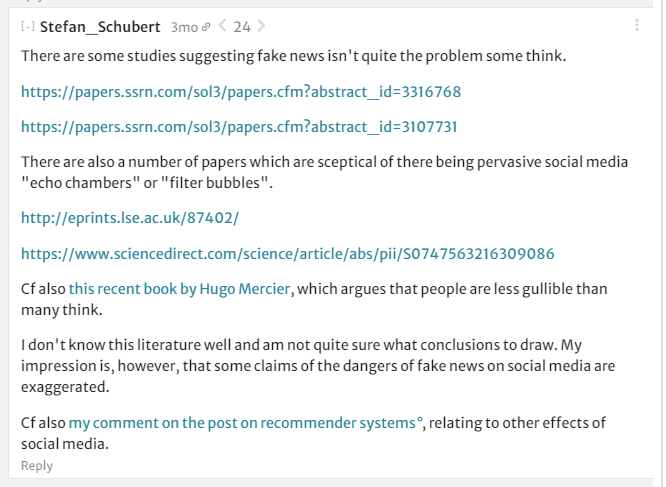

- Look at Stefan_Schubert's counterarguments in the comments on https://forum.effectivealtruism.org/posts/E4gfMSqmznDwMrv9q/are-social-media-algorithms-an-existential-risk

- Synthesize findings from papers on whether social media causes political polarisation

- Find papers showing that political polarisation has led to less international cooperation

- Look for cases (Trump / Brexit) where social media is plausibly to blame. Estimate the chance that social media actually flipped the outcome there

- Make back-of-the-envelope calculations / Fermi estimates for all relevant negative consequences
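As a starting point for those Fermi estimates, here is a minimal sketch of how a chain of uncertain probabilities could be multiplied together. It is only an illustration of the method: the function names, the three steps in the chain, and above all the numeric ranges are placeholders I made up, not estimates from any of the papers above. Each range should be replaced once we have reviewed the literature.

```python
import math
import random


def sample_loguniform(low, high, rng):
    """Draw one value from a log-uniform distribution on [low, high]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def fermi_chain(ranges, n_samples=10_000, seed=0):
    """Multiply one sample per step, many times, and summarise the products."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_samples):
        product = 1.0
        for low, high in ranges.values():
            product *= sample_loguniform(low, high, rng)
        results.append(product)
    results.sort()
    return {
        "p5": results[int(0.05 * n_samples)],
        "median": results[n_samples // 2],
        "p95": results[int(0.95 * n_samples)],
    }


# PLACEHOLDER ranges (low, high): hypothetical values for illustration only,
# not findings. Replace each one after reviewing the relevant papers.
placeholder_ranges = {
    "p_social_media_decisive_in_vote": (0.01, 0.5),
    "p_outcome_reduces_cooperation": (0.1, 0.9),
    "p_reduced_cooperation_worsens_risk": (0.01, 0.3),
}

summary = fermi_chain(placeholder_ranges)
print(summary)
```

Sampling ranges instead of multiplying point estimates keeps the uncertainty visible: the gap between the 5th and 95th percentile shows how much the conclusion depends on the steps we know least about.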

Social media causes declining mental health

- Doesn’t seem to be the case after doing a short review

- https://journals.sagepub.com/doi/10.1177/2167702618812727

- https://lydiadenworth.com/articles/social-media-has-not-destroyed-a-generation/

- https://www.sciencedirect.com/science/article/abs/pii/S0747563219303723

This one looks interesting as well: https://docs.google.com/document/d/1w-HOfseF2wF9YIpXwUUtP65-olnkPyWcgF5BiAtBEy0/edit#

Next steps here:

- Countercheck these papers

- If we find proof that it causes mental health to decline: calculate lost QALYs (see https://forum.effectivealtruism.org/posts/xzjQvqDYahigHcwgQ/aligning-recommender-systems-as-cause-area#Scale for a first estimate)

Aligning recommender systems is “training practice” for larger AI alignment problems

Next step here:

- Should we expand this argument?

The problem is solvable (but we need more capacity for research)

See e.g. https://www.turing.ac.uk/sites/default/files/2020-10/epistemic-security-report_final.pdf

Next step here:

- Collect additional papers on solutions

- What kind of research is interesting and would be worthwhile investing in?

Thanks for this! I've sent you an email. Especially regarding caveat #2, I believe you can help with relatively little time and resources.