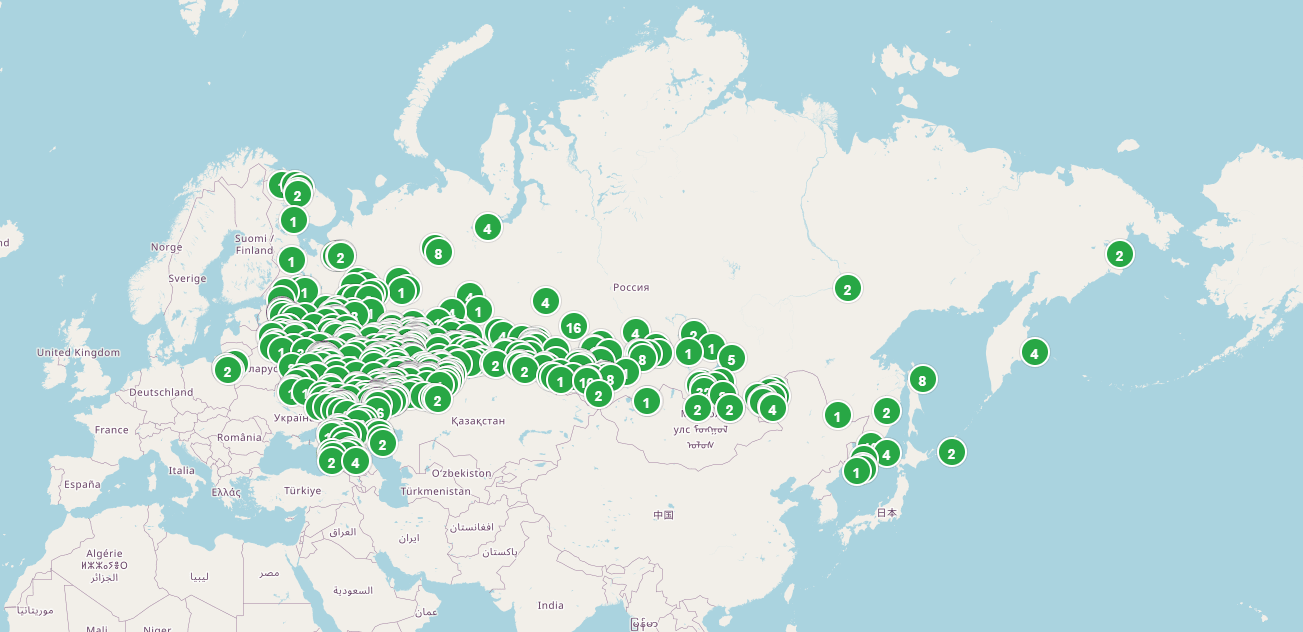

We have contact details for, and can email, 1500 students and former students who received hardcover copies of HPMOR (and possibly Human Compatible and/or The Precipice) for winning international or Russian olympiads in maths, computer science, physics, biology, or chemistry.

This includes over 60 IMO and IOI medalists.

This is a pool of potentially extremely talented people, many of whom have read HPMOR.

I don't have the time to do anything with them, and people in the Russian-speaking EA community are all busy with other things.

The only outreach so far has been an email about the Atlas Fellowship sent to some kids still in high school; a couple of them became fellows.

I think it could be very valuable to alignment-pill these people. For most researchers who understand AI x-risk well enough and can speak Russian, I think that even manually going through the IMO and IOI medalists, sending the more promising ones a tl;dr of x-risk, and offering to schedule a call would be a marginally better use of their time than most of the technical alignment research they could otherwise be doing, because it plausibly adds highly capable researchers.

If you understand AI x-risk, are smart, have good epistemics, speak Russian, and want to pick up this ball, please DM me on LW or elsewhere.

To everyone else, feel free to make suggestions.

Speaking as an IMO medalist who partially got into AI safety because of reading HPMOR 10 years ago, I think this plan is extremely reasonable.