Scott Alexander's review of What We Owe The Future seems to imply (as I read it) that the standard person-affecting view solves the Repugnant Conclusion. This is true for the standard way the Repugnant Conclusion is told, but a simple modification makes this no longer the case. I've heard this point made a lot, so I figured I'd write a short post addressing it.

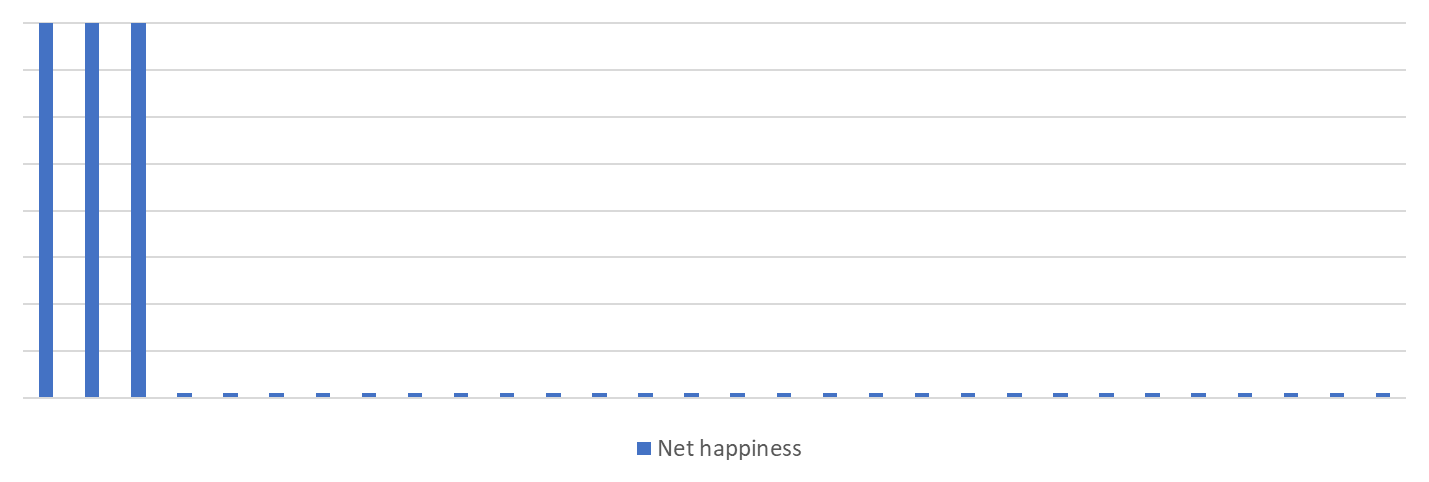

The standard Repugnant Conclusion, told to discredit total utilitarianism, is as follows. You have some population, each starting out at a reasonable net happiness:

Then you add a bunch of people with much lower but still positive happiness, which under total utilitarianism is a net benefit:

Then you impose equality while adding a little bit of happiness to each person to make it a net benefit under utilitarianism:

And here you end up with a society preferable under utilitarianism relative to the first but maybe intuitively worse. This can also be repeated infinitely until you have people just above the "net zero happiness" threshold.
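For concreteness, the two steps above can be sketched with made-up numbers. This is only an illustration: the group sizes, happiness levels, the bonus, and the helper names (`add_people`, `equalize`, `one_round`) are all assumptions, not figures from the review or from the original diagrams.

```python
from fractions import Fraction

# A world is a list of (group_size, happiness_per_person) pairs.
# All sizes and happiness levels here are hypothetical.

def population(world):
    """Number of people in the world."""
    return sum(size for size, _ in world)

def total(world):
    """Total utilitarian value: happiness summed over everyone."""
    return sum(size * h for size, h in world)

def add_people(world, size, happiness):
    """Step 1: add a new group at a lower but still positive happiness."""
    return world + [(size, happiness)]

def equalize(world, bonus):
    """Step 2: set everyone to the average happiness plus a small bonus."""
    n = population(world)
    return [(n, total(world) / n + bonus)]

def one_round(world, bonus):
    """Apply both steps to a single-group world: double the population
    at a tenth of the current level, then equalize."""
    n, level = world[0]
    return equalize(add_people(world, n, level / 10), bonus)

world_a = [(100, Fraction(10))]                   # starting population
world_b = add_people(world_a, 100, Fraction(2))   # add worse-off people
world_c = equalize(world_b, Fraction(1, 10))      # equalize plus bonus

print(total(world_a), total(world_b), total(world_c))  # 1000 1200 1220

# Repeating the two steps keeps raising the total while the
# per-person level keeps falling.
world = world_a
for _ in range(20):
    world = one_round(world, Fraction(1, 100))
print(population(world), float(world[0][1]))
```

Each step raises the total, yet everyone in the last world sits far below the starting level of 10. With a fixed bonus the level in this sketch settles a little above zero rather than reaching it.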

The person-affecting view normally offered to avoid this problem is neutral with respect to creating or removing future happy people (or even, as Scott writes, slightly negative about creating them), but is in favor of making existing people happier. So the first trade would not be taken.

But a simple modification shows that this doesn't actually solve anything. We just have to make the first trade add a small amount of happiness. Or, if you accept Scott's "slightly negative" approach, an amount of happiness slightly more than (# of new lives * negative margin). So this first world (axes modified from above):

Becomes this second world:

Which becomes this third world:

If you accept the "slightly negative" argument with, say, margin = 0.1, this prevents you from extending the argument to preferring a world with many people at 0.001 happiness and instead limits you to 0.101. I disagree with this threshold argument (which has been made before more explicitly), but regardless, it doesn't actually get around the meat of the Repugnant Conclusion.
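Here is a toy version of the modified trades. The scoring rule is one reading of the view described above (existing people count by their change in happiness, each new life counts as minus the margin), and every number and name in it is a hypothetical chosen for illustration.

```python
from fractions import Fraction

# A world maps a group label to (group_size, happiness_per_person).
# All numbers below are hypothetical.

def person_affecting_change(before, after, margin):
    """Value of moving from `before` to `after` under the rule sketched here.

    People present in both worlds count by how much their happiness changes.
    Each newly created life counts as -margin, whatever its happiness.
    Assumes nobody in `before` is removed.
    """
    value = Fraction(0)
    for label, (size, h_after) in after.items():
        if label in before:
            value += size * (h_after - before[label][1])
        else:
            value -= size * margin
    return value

margin = Fraction(1, 10)

first = {"original": (100, Fraction(10))}
# Modified first trade: 100 new lives at happiness 2, and each original
# person gains 0.2, so the total gain (20) exceeds 100 * margin (10).
second = {"original": (100, Fraction(102, 10)), "new": (100, Fraction(2))}
# Second trade: equalize at the average (6.1) plus a bonus of 0.1 each.
third = {"original": (100, Fraction(62, 10)), "new": (100, Fraction(62, 10))}

step_one = person_affecting_change(first, second, margin)  # 20 - 10
step_two = person_affecting_change(second, third, margin)  # -400 + 420
direct = person_affecting_change(first, third, margin)     # -380 - 10

print(float(step_one), float(step_two), float(direct))  # 10.0 20.0 -390.0
```

With these numbers each trade taken on its own comes out positive (10, then 20), while the original people end up at 6.2 instead of 10. The direct move from the first world to the third scores -390 under the same rule, so in this sketch the result depends on taking the trades one at a time.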

Scott has some more ethical commentary that suggests he might not care if individually ethical decisions lead to cumulatively terrible results. Regardless, I just wanted to address this one point. The standard person-affecting view does not escape the Repugnant Conclusion.

My puzzlement about population ethics is why we should give any serious weight at all to our evolved moral intuitions, when we're thinking about long-term global-scale population issues.

Our moral intuitions about human welfare, reproduction, inequality, redistribution, intergenerational justice, etc. all evolved in Pleistocene tribal conditions, to address various specific adaptive challenges in mate choice, parenting, reciprocity, kinship, group competition, etc. We rarely had to think beyond the scale of 100 to 1,000 people, and rarely beyond two or three generations.

And insofar as those moral intuitions were domain-specific, adapted to solve different kinds of problems that have their own adaptive tradeoffs and game-theoretic challenges, there's no reason whatsoever to expect those moral intuitions to be logically consistent with each other. (Indeed, the inner conflict we often feel about moral issues testifies to this domain-specificity.)

So, I'm just baffled about why moral philosophers who appreciate the small-scale evolutionary origins of our moral intuitions would expect those intuitions to 'feel happy' with any logically consistent population ethics principles that can scale up to billions of people across thousands of generations.

I was pretty aggravated by this part of the review. It's my impression that Alexander wasn't even endorsing the person-affecting view, but rather some sort of averagism (which admittedly does outright escape the repugnant conclusion). The issue is that I think he's misunderstanding the petition on the repugnant conclusion. The authors were not endorsing the statement "the repugnant conclusion is correct" (though some signatories believe it) but rather "a theory implying the repugnant conclusion is not a reason to reject it outright". One of the main motivators of this is that, not as a matter of speculation but as a matter of provable necessity, any formal view in this area has some implication people don't like. He sort of alludes to this with the eyeball-pecking asides, but I don't think he internalizes the spirit of it properly. You don't reject repugnancy in population ethics by picking a theory that doesn't imply this particular conclusion; you do it by not endorsing your favored theory under its worst edge cases, whatever that theory is.

Given this, there just doesn't seem to be any reason to take the principled step he does towards averagism, and arguably averagism is the theory that is least on the table on its principled merits. I am not aware of anyone in the field who endorses averagism, and I was recently at an EA NYC talk on population ethics with Timothy Campbell in which he basically said outright that the field has, for philosophy, an unusually strong consensus that averagism is simply off the table. Averagism can purchase the non-existence of worthwhile lives at the cost of some happiness of those who exist in both scenarios. The value people contribute to the world is highly extrinsic to any particular person's welfare, to the point where whether a life is good or bad on net can have no relation to whether that life is good or bad for anyone in the world, whether the person themself or any of the other people who were already there. Its repugnant implications seem to be deeper than just the standard extremes of principled consistency.

Yes, I also thought that the view that Scott seemed to suggest in the review was a clear non-starter. Depending on what exactly the proposal is, it inherits fatal problems from either negative utilitarianism or averagism. One would arguably be better off just endorsing a critical level view instead, but then one has stopped going beyond what's in WWOTF. (Though, to be clear, it would be possible to go beyond WWOTF by discussing some of the more recent and more complex views in population ethics that have been developed, such as attempts to improve upon standard views by relaxing properties of the axiological 'better than' relation.) See also here.

I think this is the same as Huemer's Benign Addition argument from https://philpapers.org/rec/HUEIDO

For what it's worth, there's more than one person-affecting view. The kinds philosophers endorse would probably usually avoid the Repugnant Conclusion, even in response to your argument. They often don't just extend pairwise comparisons to overall transitive rankings, and may instead reject the independence of irrelevant alternatives and be transitive within each option set. See my comment here: https://forum.effectivealtruism.org/posts/DCZhan8phEMRHuewk/person-affecting-intuitions-can-often-be-money-pumped?commentId=ZadcAxa2oBo3zQLuQ

That's a good point!

I would say "creating happy people is neutral; creating unhappy people is bad" is the CliffsNotes version of (asymmetric) person-affecting views, but there are further things to work out to make the view palatable. There are various attempts to do this (e.g. Meacham introduces "saturating counterpart relations"). My attempt is here.

In short, I think person-affecting views can be framed as "preference utilitarianism for existing people/beings, low-demanding contractualism (i.e., 'don't be a jerk') for possible people/beings."

"Low-demanding contractualism" comes with principles like:

See also the discussion in this comment thread.

I'm not a philosopher, but with my "person-affecting intuitions hat" on, I think that when you bring the extra people into existence, you also bring into existence obligations for the previously existing people (i.e. if they can sacrifice a little of their welfare for a lot of everyone else's, they should). To count the "real" effect on their welfare, you need to factor in the obligations, and in your example the total sum comes out negative for them (otherwise, after all the transformations, they wouldn't be worse off, assuming that the transformations are actually realisable and the assumed moral theory actually does create obligations).