One of the most attended to benchmarks for any new model these days is the METR estimated time horizon for “a diverse set of multi-step software and reasoning tasks”[1]. This comes in two variants - 50% pass rate and 80% pass rate.

Ostensibly, these represent the time it would take for a competent human expert to complete a task which the model have a 50% or 80% chance of one shotting.

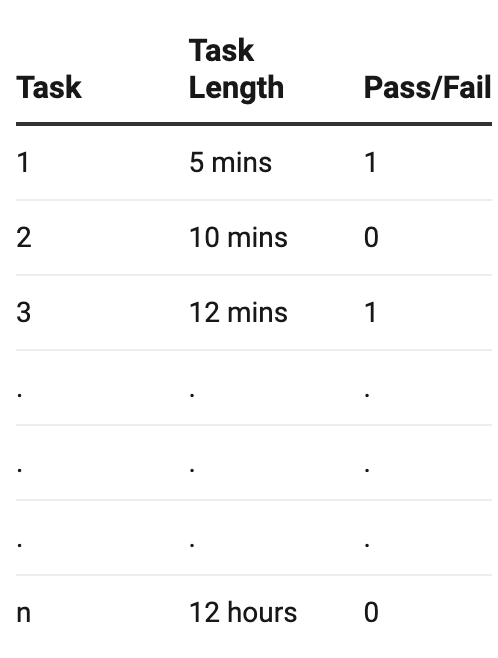

The full details on the benchmark construction are here, and a rough description is that there are a bunch of tasks, with each task having an associated time horizon (“It would take a human X minutes to do this task”). Models are given one attempt per problem, and the output is a table like this:

The 50% time horizon is then an estimate of the interpolated task length where a model would have a 50% chance of passing.[2]

The choice of measuring the time horizon, as opposed to giving a % score on a benchmark, was an inspired one, and has captured the imagination of the AI following public. Ultimately, however, like any other benchmark, there are a bunch of tasks and the scores are determined by how well the models do on the tasks. The time horizons are an estimate, not a fact of nature, and will be sensitive to the particular tasks in the task suite. See here, for example, for estimates of time horizons based on the WeirdML benchmark, which have shown a similar 5 month doubling time but currently sit at almost 4x the time horizon length of the METR versions.

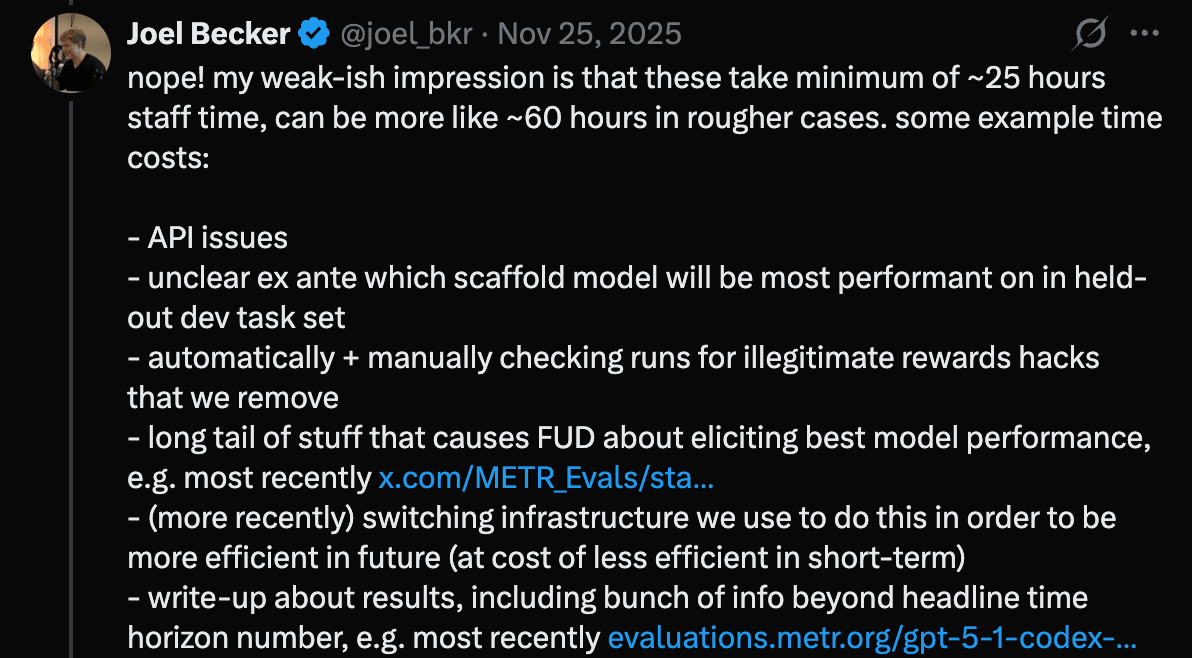

METR have noted that it is a time consuming process to run the evaluations.

Thankfully, there are other agentic coding evaluations we can use to estimate these if we are impatient and/or have too much time on our hands. In this post I am going to make an attempt to estimate these for GPT 5.3 Codex and Claude Opus 4.6.

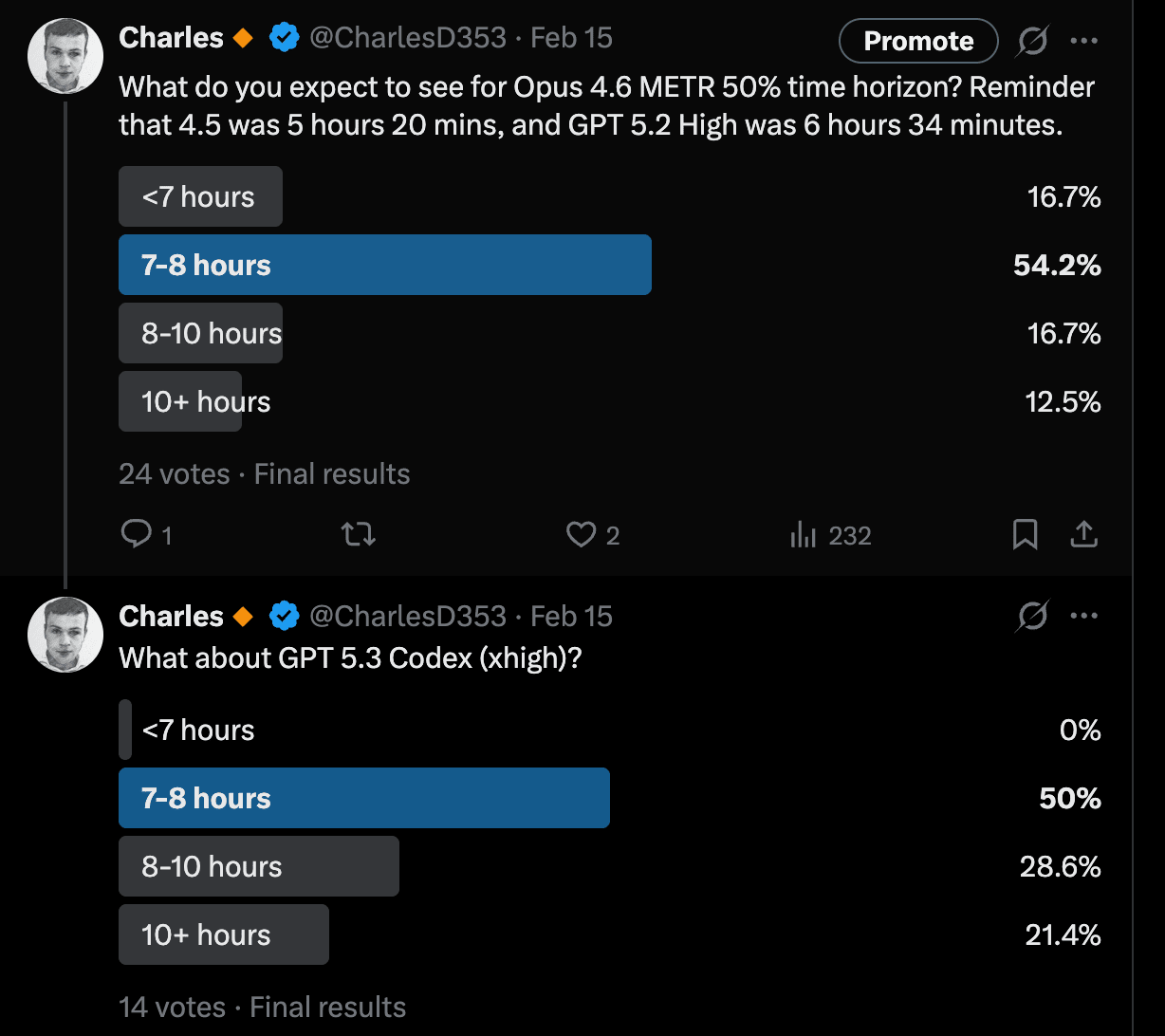

I asked my Twitter followers yesterday for their predictions, and got the following:

Interpolating and taking the mean of answers, we get 7.9 hours for Opus and 8.7 hours for GPT 5.3 Codex. The crowd expects OpenAI to retain their lead.

Let’s see what I find.

Methodology

Not all models do all benchmarks, and not all benchmarks are directly useful for evaluating agentic task completion capabilites. Thankfully, Epoch AI have done a lot of useful work for us via their Epoch Capabilities Index (ECI).

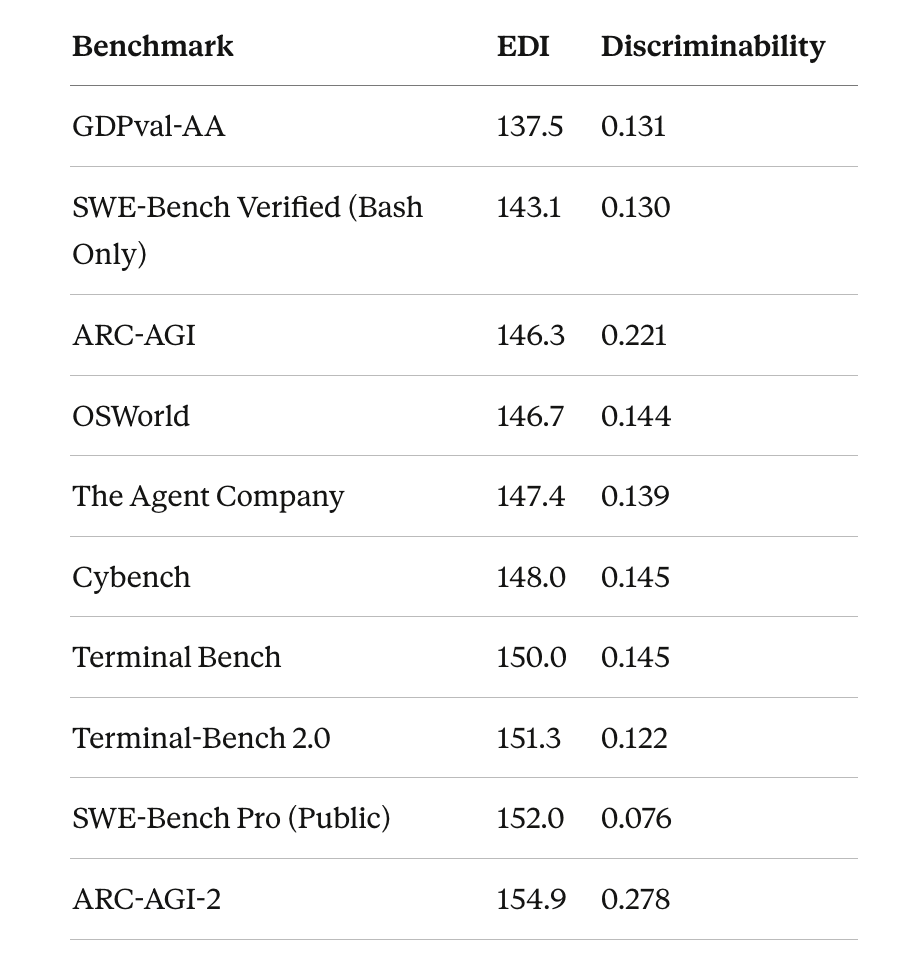

ECI provides an IRT (Item Response Theory)-based framework[3] for estimating model capabilities across benchmarks. Each benchmark gets a “difficulty” parameter (EDI) and a discriminability parameter, and each model gets a capability score. This handles the issue of different benchmarks having different scales, ceilings, and difficulties.

I extended ECI to include SWE-Bench Pro, ARC-AGI-2, GDPval-AA, and Terminal-Bench 2.0 scores and refit the IRT model[4]. I did this because some recent models have only been run on these benchmarks. This left us with 10 agentic or difficult reasoning benchmarks which I included in the fit.[5]

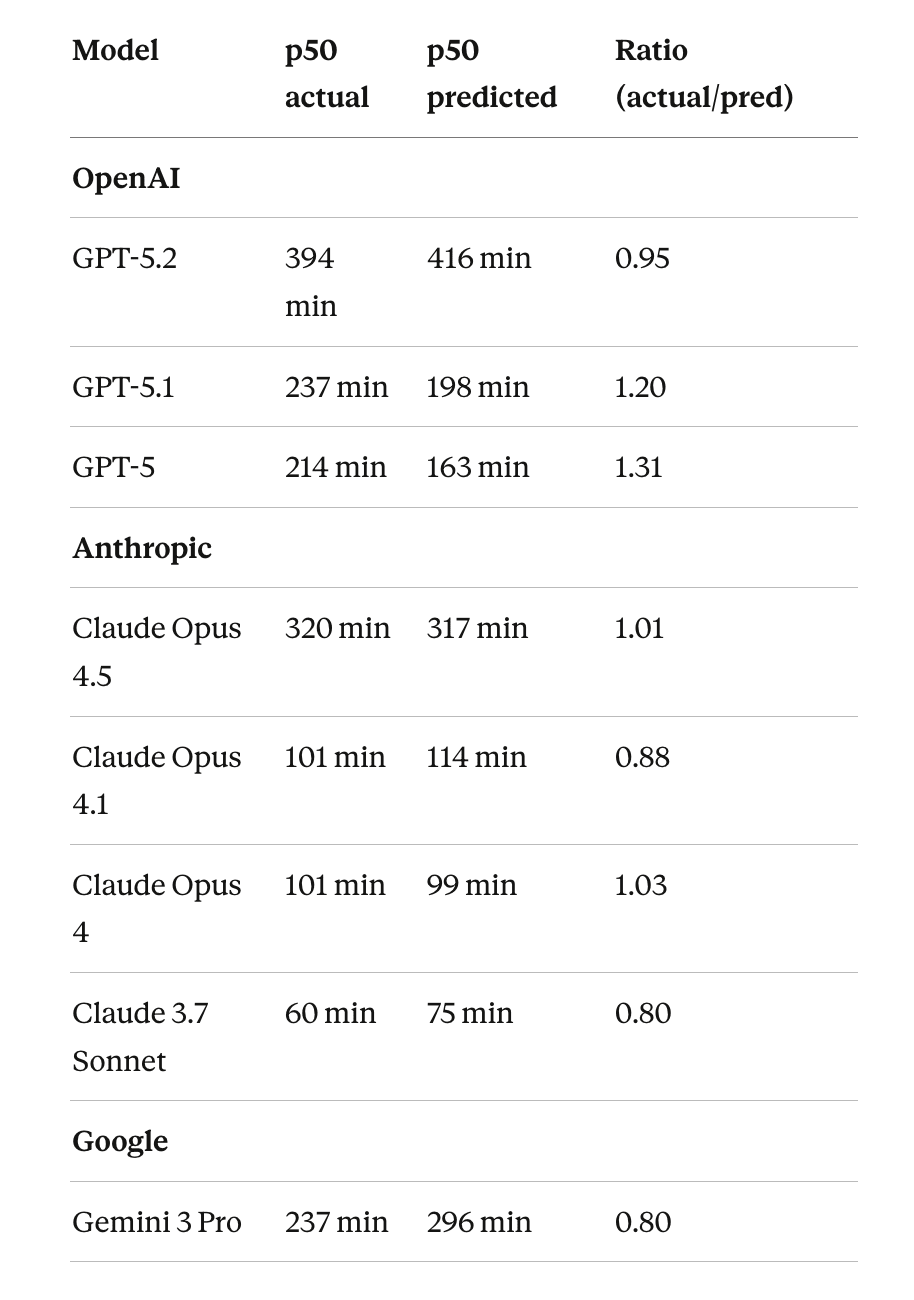

I then fit a weighted regression of log(METR time horizon) on IRT capability, separately for p50 and p80. The weights were set to increase linearly with capability: w = 1 + 1 × normalised_capability. This was done to overweight more capable frontier models in the fit, to capture the intuition that new models will have more similarity with more recent models than earlier ones.

I included post-o3 2025 models which METR had measured in the analysis, which amounted to 8 models[6].

What do we see? The model does an excellent job predicting GPT 5.2 and Opus 4.5, and does a pretty good job still on earlier models. If anything, Anthropic models have slightly underperformed their predictions, and several OpenAI models outperformed.

Predicting Opus 4.6 and GPT 5.3 Codex

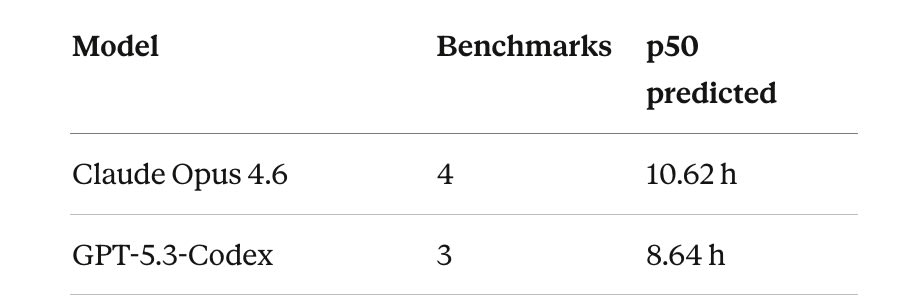

We only have limited scores for these models, and for different benchmarks. For GPT 5.3 Codex, we have Terminal Bench 2.0, SWE Bench Pro (Public) and Cybench, while for Opus 4.6 we have Terminal Bench 2.0, ARC-AGI-2, GDPval-AA, and SWE-Bench-Verified (Bash only).

On the one shared benchmark, Terminal Bench 2.0, GPT 5.3 Codex did better, scoring 65% vs 63% from Opus 4.6 using the same scaffold. But Opus scored extremely highly on ARC-AGI-2, the most difficult benchmark by our measure, and this gave us a higher estimate.

Codex is right in line with what the crowd expected, but Opus is dramatically higher.

This is largely down to the ARC-AGI-2 result. Can we trust this prediction?

I don’t expect so. Model providers have somewhat of a reputation for picking and publishing the benchmark evaluations that their models are going to do best on. Older model scores will often have come from independent evaluators like Epoch themselves, which are probably more trustworthy.

And the absence of SWE Bench Pro, along with the presence ofARC-AGI-2, suggests that something like that might have gone on with Anthropic. Similarly, with OpenAI, it seems plausible that they may have cherry-picked here. OpenAI also released the model practically immediately after Anthropic did, and in response to it, and so they may have cherry-picked some of the benchmarks on which they expected to beat Anthropic’s models. There is also some previous on Anthropic models underperforming on METR relative to this prediction, and OpenAI outperforming.

If I had to guess, I expect both models to be slightly lower, and to be closer together. Perhaps 8.25 hours for Codex and 8.7 for Opus, or something like this.

We shall see!

- ^

See original paper introducing the benchmark here.

- ^

Note that this bears no relation to the the length of time it actually takes the model to do the task. Just because Claude Code can go and spend 12 full hours iterating without needing user input does not mean that it has a time horizon of 12 hours. The time horizon is determined by the length of time a human would take to do the task. In most cases the model probably takes less time than the human.

- ^

Quoting from Epoch: “The technical foundation for the ECI comes from Item Response Theory (IRT), a statistical framework originally developed for educational testing. IRT enables comparisons between students, even when they took tests from different years, and one test might be harder than another.”

- ^

I also did not use OSWorld for newer models not in Epoch’s repo, because I could not determine which scores were OSWorld vs OSWorld-Verified and if there was a different between these with enough confidence.

- ^

This is somewhat arbitrary as ECI has more benchmarks than this, but I cared more about ones that were difficult general benchmarks or agentic benchmarks as I expected these to be most relevant to METR time horizons.

- ^

Note that GPT-5.x here refers to the version evaluated by METR, which was “high” for 5.2, “Codex-Max” for 5.1, and “thinking” (likely “medium”) for 5.0. For 5.3 Codex the scores from OpenAI were labelled xhigh.