Arkose is an AI safety fieldbuilding organisation that supports experienced machine learning professionals — such as professors and research engineers — to engage with the field. We focus on those new to AI safety, and have strong evidence that our work helps them take meaningful first steps.

Since December 2023, we’ve held nearly 300 one-on-one calls with mid-career machine learning researchers and engineers. In follow-up surveys, 79% reported that the call accelerated their involvement in AI safety[1]. Nonetheless, we’re at serious risk of shutting down in the coming weeks due to a lack of funding. Several funders have told us that we’re close to meeting their bar, but not quite there, leaving us in a precarious position. Without immediate support, we won’t be able to continue this work.

If you're interested in supporting Arkose, or would like to learn more, please reach out here or email victoria@arkose.org. You can also make donations directly through our Manifund project.

What evidence is there that Arkose is impactful?

AI safety remains significantly talent-constrained, particularly with regard to researchers who possess both strong machine learning credentials and a deep understanding of existential risk from advanced AI.

Arkose aims to address this gap by identifying talented researchers (e.g., those with publications at NeurIPS, ICML, and ICLR), engaging them through one-on-one calls, and supporting their immediate next steps into the field.

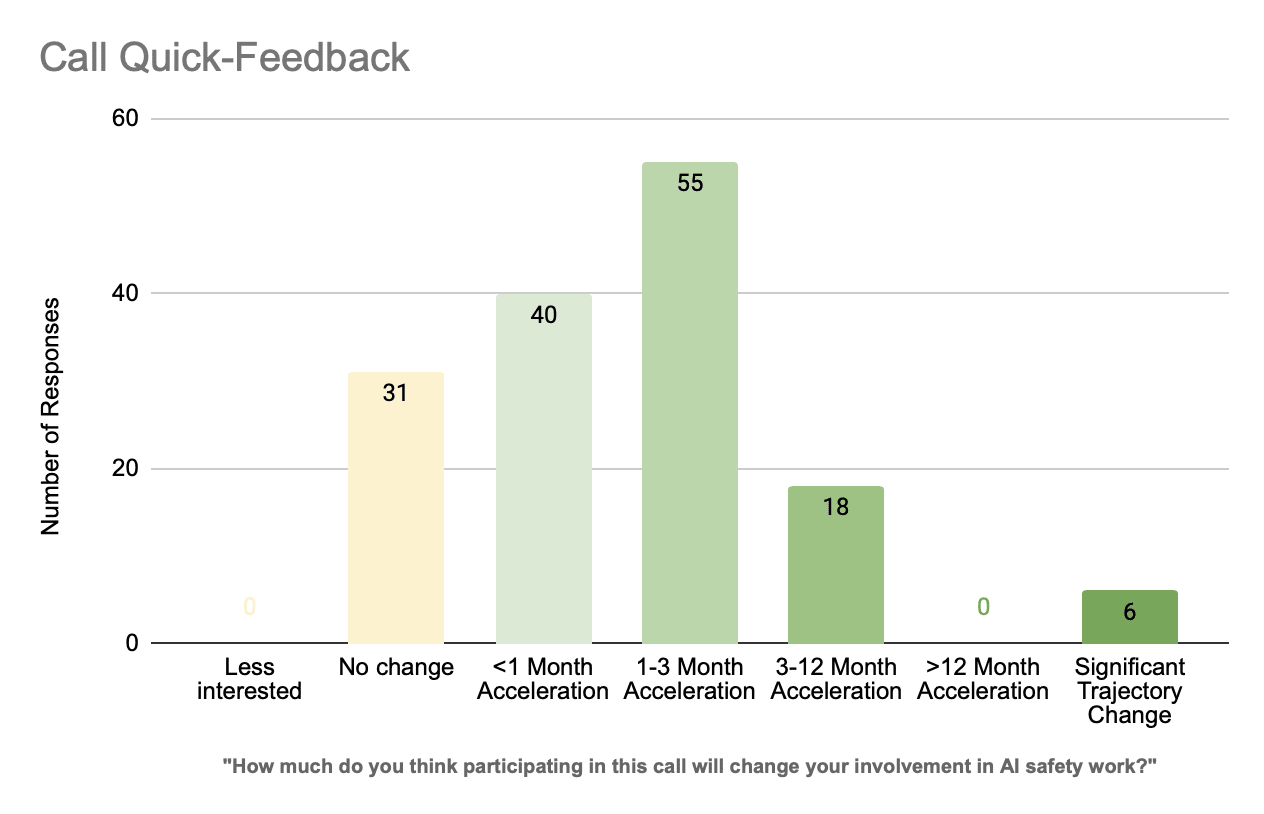

Following each call, we distribute a feedback form to participants. 52% of professionals complete the survey, and of those, 79% report that the call accelerated their involvement in AI safety:

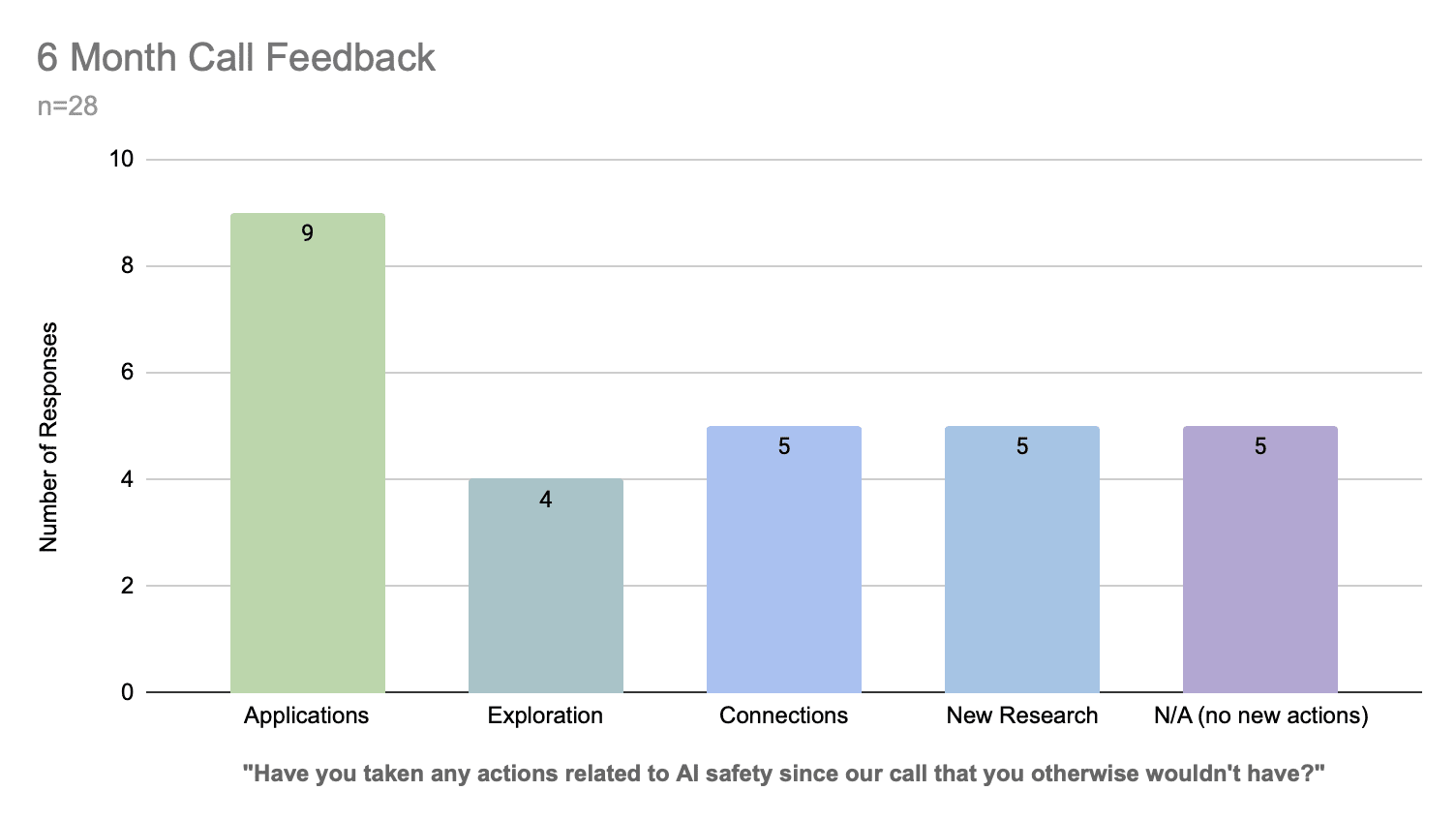

We also send a short form 6 months later. The responses show that professionals take a variety of concrete actions following their call with us.

We think that accelerating the involvement of a senior machine learning professional (e.g. a professor) in AI safety by 1-3 months is a great outcome, and we're excited about the opportunity to improve our call quality over the next year and support researchers even better.

Unfortunately, we're not able to share all of our impact analysis publicly. If you're interested in learning more (for instance, if you'd like to see summaries of particularly high or low impact calls), please reach out to team@arkose.org.

What would the funding allow you to achieve?

We need $200,000, which, together with our remaining runway, would allow us to operate for a year. During this period, we expect to run between 300 and 800 more calls with senior researchers while improving our public resources and expanding our post-call support for researchers.

How can I help?

At present, we're only seeking funding at or above our minimum viable level. That said, we're still enthusiastic about small donors — and may be able to continue if enough are willing to contribute. If you're interested in supporting us, please email victoria@arkose.org with an indication of how much you're able to give; we'll only ask for donations if the combined offers are sufficient to keep the organisation running. If you prefer, you can publicly commit to donating via Manifund.

We believe Arkose is a promising, cost-effective approach to addressing one of AI safety’s most pressing bottlenecks, and remain excited about this work. With additional funding, we’re in a position to continue reaching high-impact researchers, deepen our post-call support, and refine our approach. Without it, we will be forced to wind down operations in the coming weeks. If this work aligns with your interests, please do get in touch: the next few weeks will be a critical time for us.

[1] Of the 51% who gave feedback immediately after the call.

Quick flag that I might change the post title, as I assumed from "Arkose is closing" that closure was definite, not something you are still working to prevent.

Thanks for the suggestion! I've changed the title.

Sorry to hear that! I would recommend posting a request for funding on Manifund. That makes it easier for people to donate, and I believe it has a mechanism whereby people get refunded unless you meet a minimum funding bar.

Thanks for the suggestion, Neel! I've now posted a project on Manifund.

Why not cross-post this to LessWrong?

Thanks for the suggestion! I've now crossposted it to LessWrong.