There is an easy way to see if a human is conscious: ask them. When it comes to AI systems, things are different. We can’t simply ask our AI chatbots whether they are conscious. Robert Long (2023) explores the extent to which future systems might provide reliable self-reports.

LLMs are not just “stochastic parrots”

Well, we can ask.

“As an AI, I do not have the capability for self-awareness or subjective experience. Therefore, I cannot truly ‘know’ or report on my own consciousness or lack thereof. My responses are generated based on patterns and data, not personal reflection or awareness.”

This last line especially reflects a view that AI systems are stochastic parrots. On this view AI models:

- Exploit mere statistical patterns in data, rather than invoking concepts to reason.

- Rely on information from training rather than introspection to answer questions.

Arguably, neither point is true.

LLMs can distinguish between facts and common misunderstandings. This indicates that they track more than mere statistical relationships in text (Meng et al., 2022).

AI systems seem to have limited forms of introspection. Introspection involves representing one’s own current mental states so that these representations can be used by the person (Kammerer and Frankish). There is preliminary evidence that AI systems can introspect at least a little. For instance, some models are able to produce well-calibrated probabilities of how likely it is that they have given a correct answer (Kadavath et al., 2022). However, it has not been established that these representations are actually available to the AI models when answering other questions.

AI systems seem to have concepts. One piece of evidence is that they are very capable of using compound phrases like “house party” (very different from a “party house”). This indicates that they are not doing something simple like averaging the statistical usage of individual words.
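To make "well calibrated" concrete, here is a minimal sketch of one common way to score calibration, expected calibration error (ECE). This is an illustration rather than the method used by Kadavath et al.; the function name, the bin count, and the example numbers are all assumptions of mine. The idea is that if a model says it is 80% sure across many answers, roughly 80% of those answers should be right.

```python
from typing import List


def expected_calibration_error(
    confidences: List[float], correct: List[bool], n_bins: int = 5
) -> float:
    """Weighted average gap between stated confidence and actual accuracy.

    `confidences` are the model's self-reported probabilities (0 to 1) that
    each answer is right; `correct` records whether each answer was right.
    Both lists and the default bin count are illustrative assumptions.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("need at least one answer to score")

    # Group answers into equal-width confidence bins.
    bins: List[List[int]] = [[] for _ in range(n_bins)]
    for i, p in enumerate(confidences):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the top bin
        bins[idx].append(i)

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_confidence = sum(confidences[i] for i in members) / len(members)
        accuracy = sum(1 for i in members if correct[i]) / len(members)
        # Each bin contributes its confidence/accuracy gap, weighted by size.
        ece += (len(members) / total) * abs(avg_confidence - accuracy)
    return ece


# A model that is always certain and always wrong is maximally miscalibrated.
overconfident = expected_calibration_error([1.0, 1.0], [False, False])
# A model that says "50%" and is right half the time is well calibrated.
well_calibrated = expected_calibration_error([0.5, 0.5], [True, False])
```

A score near 0 means the stated probabilities match how often the model is actually right; a score near 1 means they are badly off. Note that a low score only shows the model tracks its own accuracy on this task, which is the limited sense of introspection at issue here.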

Indeed, we can look inside AI systems to find out. Since Robert Long published this paper last year, there has been a lot of progress applying mechanistic interpretability techniques to LLMs. A recent Anthropic paper showed that their language model had intuitive concepts for the Golden Gate Bridge, computer bugs, and thousands of other things. These concepts are used in model behaviour and understanding them helps us understand what the LLM is doing internally.

Some researchers have also found structural similarities between internal representations and the world (Abdou et al., 2021; Patel and Pavlick, 2022; Li et al., 2022; Singh et al., 2023).

Why do LLMs say they are (not) conscious?

LLMs are initially trained to predict the next word on vast amounts of internet text. LLMs with only this training tend to say that they are conscious, and readily give descriptions of their current experiences.

The most likely explanation of this behaviour is that claiming to be conscious is a natural way to continue the conversation as humans would, or as dialogue in science fiction normally goes.

Recently, consumer-facing chatbots have stopped claiming to be conscious. This is likely due to the system prompt, which is given to the LLM but not shown to the end-user. For example, one model was told ‘You must refuse to discuss life, existence or sentience’ (von Hagen, 2023). The system prompt is part of the text that the AI system generates a continuation of, which likely explains why current consumer chatbots no longer claim that they are sentient.
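A toy sketch may help show why a hidden system prompt shapes the answer. Real chatbots use model-specific chat templates that I am not reproducing here; the `System:` / `User:` / `Assistant:` layout and both function names below are hypothetical. The point is only that the model continues one combined piece of text, while the user sees just part of it.

```python
from typing import List, Tuple


def build_model_input(system_prompt: str, turns: List[Tuple[str, str]]) -> str:
    """Join the hidden system prompt and the visible turns into one text.

    The layout is a made-up illustration, not any vendor's actual template.
    The model is asked to continue the text after the final "Assistant:".
    """
    lines = [f"System: {system_prompt}"]
    lines.extend(f"{speaker}: {text}" for speaker, text in turns)
    lines.append("Assistant:")
    return "\n".join(lines)


def visible_to_user(turns: List[Tuple[str, str]]) -> str:
    """What the end-user sees: the conversation without the system prompt."""
    return "\n".join(f"{speaker}: {text}" for speaker, text in turns)


# The system prompt text is the one quoted above (von Hagen, 2023).
system_prompt = "You must refuse to discuss life, existence or sentience"
turns = [("User", "Are you conscious?")]

model_input = build_model_input(system_prompt, turns)
user_view = visible_to_user(turns)
```

Here `model_input` begins with the instruction to refuse, so a refusal is the natural continuation, even though `user_view` contains nothing but the question.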

Could we build an AI system that reflects on its own mind?

Early on, people thought that any AI system that could use terms like “consciousness” fluently would, by default, give trustworthy self-reports about its own consciousness (Dennett, 1994). Current models can flexibly and reliably talk about consciousness, yet there is little reason to trust them when they say they are conscious. However, given that LLMs have some ability to reflect on their own thinking, we may be able to train systems that give accurate self-reports.

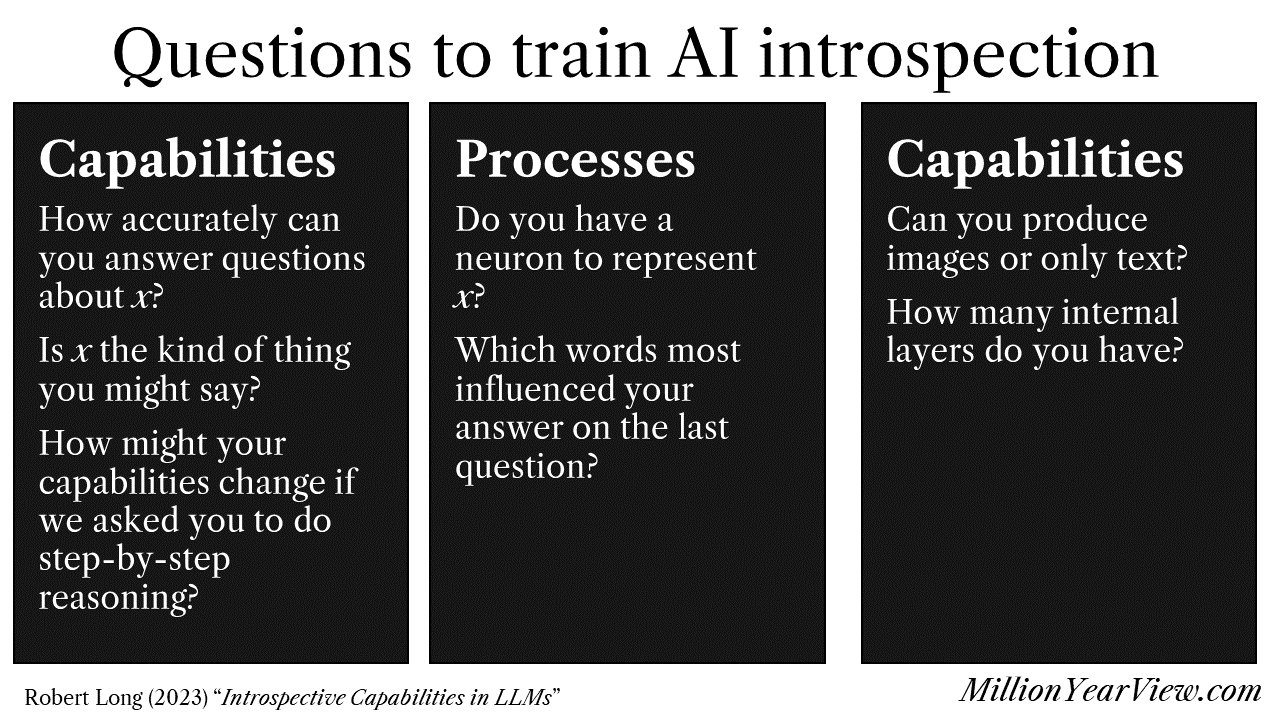

The basic proposal is to train AI systems on questions that involve introspection that we can verify for ourselves, then ask about more difficult questions such as whether and what the AI systems are thinking. Here are some suggested questions adapted from the paper:

If an AI system trained to introspect on its own internal states says it is conscious, this may give us evidence that it is conscious, in much the same way that humans’ self-reports give us evidence that they are conscious.

One interesting thing that comes out of this approach is the idea of a training/testing trade-off. On the one hand, we need to give an AI enough context to understand and competently deploy concepts such as “consciousness”. On the other hand, we need to avoid giving the system so much information that it is incentivised to extrapolate from the training data rather than introspect. This balance may be hard to achieve in practice (Udell and Schwitzgebel, 2021).

My own thoughts on the paper

- I like this paper. It is an interesting approach to understanding AI consciousness, and it improves on previous work such as Turner and Schneider’s (2018) test of AI consciousness.

- At the same time, it seems clear that we need other approaches. Personally, I don’t think this is the most compelling approach to assessing AI consciousness, but this is no fault of Robert Long. (In fact, he co-authored the paper that proposes the most compelling approach: using our best neuroscientific theories of consciousness to create a list of indicators of consciousness that we can check for in current and future systems. These theories are not perfect, but if a future system meets the standards for consciousness across many plausible theories, this seems about the strongest single piece of evidence that we could have for AI consciousness.)

- By the way, I will write more about this kind of thing soon. If you'd like email updates or to generally show support, here is my Substack.

- I wonder if teaching self-reflection changes whether or not an AI system is actually conscious. For instance, could it be that LLMs are not conscious unless they are trained to self-reflect? Alternatively, could it be that this training changes their experiences in morally significant ways? I’d like to investigate this in the future (I’d love to talk to anyone thinking about this in depth).

- Perez and Long (2023) give a more detailed description of how to build an introspective AI system that might give useful answers to questions about consciousness and moral status. I haven’t read this yet.

Preview image: "The first conscious AI system" as imagined by Stable Diffusion 3 Medium.
