This is a rough research note – we’re sharing it for feedback and to spark discussion. We’re less confident in its methods and conclusions.

Summary

Which strategies make sense depends on whether timelines to AGI are short or long.

In deciding when to spend resources to make AI go better, we should consider both:

- The probability of each AI timelines scenario.

- The expected impact, given some strategy, conditional on that timelines scenario.

We’ll call the second component "leverage." In this note, we'll focus on estimating the differences in leverage between different timeline scenarios and leave the question of their relative likelihood aside.

People sometimes argue that very short timelines are higher leverage because:

- They are more neglected.

- AI takeover risk is higher given short timelines.

These are important points, but the argument misses some major countervailing considerations. Longer timelines:

- Allow us to grow our resources more before the critical period.

- Give us more time to improve our strategic and conceptual understanding.

There's a third consideration we think has been neglected: the expected value of the future conditional on reducing AI takeover risk under different timeline scenarios. Two factors pull in opposite directions here:

- Longer timelines give society more time to navigate other challenges that come with the intelligence explosion, which increases the value of the future.

- But longer timelines mean that authoritarian countries are likely to control more of the future, which decreases it.

The overall upshot depends on which problems you’re working on and what resources you’re allocating:

- Efforts aimed at reducing AI takeover risk are probably the highest leverage on 2-10 year timelines. Direct work has the highest leverage on the shorter end of that range; funding on the longer end.

- Efforts aimed at improving the value of the future conditional on avoiding AI takeover probably have the highest leverage on 10+ year timeline scenarios.

Timelines scenarios and why they’re action-relevant

In this document, we’re considering three different scenarios for when we get fully automated AI R&D. Here we describe some salient plausible features of the three scenarios.

- Fully automated AI R&D is developed by the end of 2027 (henceforth “short timelines”).

- On this timeline, TAI will very likely be developed in the United States by one of the current front-runner labs. The intelligence explosion will probably be largely software-driven and involve rapid increases in capabilities (which increases the risk that one government or lab is able to attain a decisive strategic advantage (DSA)). At the time that TAI emerges, the world will look fairly similar to the world today: AI adoption by governments and industries other than software will be fairly limited, there will be no major US government investment in AI safety research, and there will be no binding international agreements about transformative AI. The AI safety community will be fairly rich due to investments in frontier AI safety companies. But we probably won’t be able to effectively spend most of our resources by the time that AI R&D is automated, and there will be limited time to make use of controlled AGI labor before ASI is developed.

- Fully automated AI R&D is developed by the end of 2035 (henceforth “medium timelines”).

- On this timeline, TAI will probably still be developed in the United States, but there’s a substantial possibility that China will be the frontrunner, especially if it manages to indigenize its chip supply chain. If TAI is developed in the US, then it might be by current leading AI companies, but it’s also plausible that those companies have been overtaken by some other AI companies or a government AI project. There may have been a brief AI winter after we have hit the limits of the current paradigm, or progress might have continued steadily, but at a slower pace. By the time TAI arrives, AI at the current level (or higher) will have had a decade to proliferate through the economy and society. Governments, NGOs, companies, and individuals will have had time to become accustomed to AI and will probably be better equipped to adopt and integrate more advanced systems as they become available. Other consequences of widespread AI—which potentially include improved epistemics from AI-assisted tools, degraded epistemics from AI-generated propaganda and misinformation, more rapid scientific progress due to AI research assistance, and heightened bio-risk—will have already started to materialize. The rate of progress after AI R&D is automated will also probably be slower than in the 2027 scenario.

- Fully automated AI R&D is developed by the end of 2045 (“long timelines”).

- China and the US are about equally likely to be the frontrunners. AI technology will probably have been deeply integrated into the economy and society for a while at the time that AGI arrives. The current AI safety community will have had 20 more years to develop a better strategic understanding of the AI transition and accumulate resources, although we may lack great opportunities to spend that money, if the field is more crowded or if the action has moved to China. The speed of takeoff after AI R&D is automated will probably be slower than it would have been conditional on short or medium timelines, and we might have the opportunity to make use of substantial amounts of human-level AI labor during the intelligence explosion.

Some strategies are particularly valuable under some specific assumptions about timeline length.

- Investing in current front-runner AI companies is most valuable under short timelines. Under longer timelines, it’s more likely that there will be an AI winter that causes valuations to crash or that the current front-runners are overtaken.

- Strategies that take a long time to pay off are most valuable under longer timelines (h/t Kuhan for these examples).

- Outreach to high schoolers and college students seems much less valuable under 2027 timelines compared to 2035 or 2045 timelines.

- Supporting political candidates running for state offices or relatively junior national offices is more valuable under 2035 or 2045 timelines, since they can use those earlier offices as stepping stones to more influential offices.

- Developing a robust AI safety ecosystem in China looks more valuable on longer timelines, both because this will probably take a long time and because China’s attitude toward AI safety is more likely to be relevant on longer timelines.

- Developing detailed plans for how to reduce risk given current shovel-ready techniques looks most valuable on short timelines.

We do think that many strategies are beneficial on a range of assumptions about timelines. For instance, direct work targeted to short timeline scenarios builds a research community that could go on to work on other problems if it turns out that timelines are longer. But not all work that’s valuable on long timelines can be costlessly deferred to the future. It’s often useful to get started now on some strategies that are useful for long timelines.

Understanding leverage

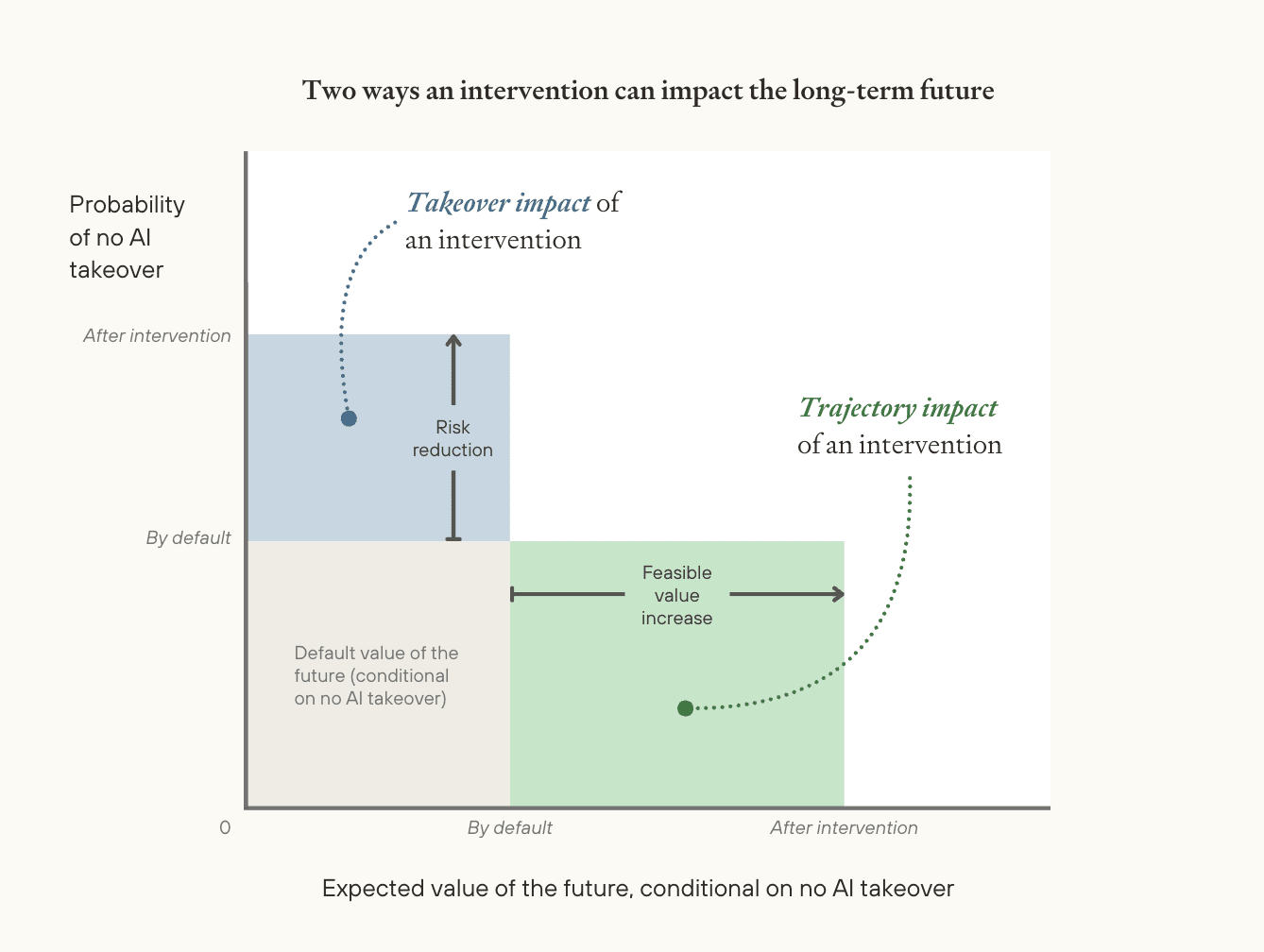

Here are two ways that we can have an impact on the long-term future:[1]

- Takeover impact: we can reduce the risk of AI takeover. The value of doing this is our potential risk reduction (the change in the probability of avoiding AI takeover due to our actions) multiplied by the default value of the future conditional on no AI takeover.

- Trajectory impact: we can improve the value of the future conditional on no AI takeover—e.g., by preventing AI-enabled coups or improving societal epistemics. The value of doing this is our feasible value increase (the improvement in the value of the future conditional on no AI takeover due to our actions)[2] multiplied by the default likelihood of avoiding AI takeover.

Here’s a geometric visualization of these two types of impact.

Arguments about timelines and leverage often focus on which scenarios offer the best opportunities to achieve the largest reductions in risk. But that’s just one term that you need to be tracking when thinking about takeover impact—you also need to account for the default value of the future conditional on preventing takeover, which is plausibly fairly different across different timeline scenarios.

The same applies to trajectory impact. You need to track both terms: your opportunities for value increase and the default likelihood of avoiding takeover. Both vary across timeline scenarios.
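The two products described above can be written out as a minimal sketch. The function names and every number here are illustrative assumptions, not estimates from this note; value is measured on an arbitrary 0-1 scale.

```python
def takeover_impact(risk_reduction: float, default_value: float) -> float:
    """Potential risk reduction times the default value of the future
    conditional on no AI takeover."""
    return risk_reduction * default_value


def trajectory_impact(value_increase: float, p_no_takeover: float) -> float:
    """Feasible value increase times the default probability of avoiding
    AI takeover."""
    return value_increase * p_no_takeover


# Hypothetical scenarios: A offers more risk reduction, B a more valuable
# default future. Neither set of numbers comes from the note.
scenario_a = {"risk_reduction": 0.02, "default_value": 0.30}
scenario_b = {"risk_reduction": 0.01, "default_value": 0.45}

impact_a = takeover_impact(**scenario_a)
impact_b = takeover_impact(**scenario_b)
print(f"takeover impact, scenario A: {impact_a:.4f}")
print(f"takeover impact, scenario B: {impact_b:.4f}")
```

With these made-up inputs, the scenario with the larger risk reduction comes out ahead even though its default future is less valuable. The comparison can flip once the value gap grows, which is why both terms need tracking.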

In the rest of this note, we’ll argue:

- For work targeting takeover impact, short-to-medium timelines are the highest leverage. This is because:

- The default value of the future is highest on medium timelines, compared to either shorter or longer timelines (more).

- There’s probably greater potential risk reduction on shorter timelines than medium, although the strength of this effect varies depending on what resources people are bringing to bear on the problem. We expect that for people able to do direct work now, the total risk reduction available on short timelines is enough to outweigh the greater value of averting takeover on medium timelines. For funders, medium timelines still look higher leverage (more).

- For work targeting trajectory impact, long timelines are the highest leverage. This is because:

- The default probability of survival is higher for long timelines than medium ones and higher for medium timelines than short ones (more).

- We’re unsure whether the feasible value increase is higher or lower on long timelines relative to medium or short timelines. But absent confidence that it’s substantially lower, the higher survival probability means that longer timelines look higher leverage for trajectory impact (more).

Takeover impact

In this section, we’ll survey some key considerations for which timelines are highest leverage for reducing AI takeover risk.

The default value of the future is higher on medium timelines than short or long timelines

Apart from AI takeover, the value of the future depends a lot on how well we navigate the following challenges:

- Catastrophic risks like pandemics and great power conflict, which could lead to human extinction. Even a sub-extinction catastrophe that killed billions of people could render the survivors less likely to achieve a great future by reducing democracy, moral open-mindedness, and cooperation.

- Extreme concentration of power—where just a handful of individuals control most of the world’s resources—which is bad because it reduces opportunities for gains from trade between different value systems, inhibits a diverse “marketplace of ideas” for different moral views, and probably selects for amoral or malevolent actors.

- Damage to societal epistemics and potentially unendorsed value change, which could come from individualized superhuman persuasion or novel false yet compelling ideologies created with AI labor.

- Premature value lock-in, which could come from handing off to AI aligned to a hastily chosen set of values or through individuals or societies using technology to prevent themselves or their descendants from seriously considering alternative value systems.

- Empowerment of actors who are not altruistic or impartial, even after a good reflection process.

We think the timing of the intelligence explosion matters for whether we succeed at each of these challenges.

There are several reasons to expect that the default value of the future is higher on longer timelines.

On longer timelines, takeoff is likely slower, and this probably makes it easier for institutions to respond well to the intelligence explosion. There are a couple of reasons to expect that takeoff will be slower on longer timelines:

- Different AI improvement feedback loops could drive an intelligence explosion (software improvements, chip design improvements, and chip production scaling). These feedback loops operate at different speeds, and the faster ones are likely to be automated earlier, so a later intelligence explosion is more likely to be driven by the slower loops (see Three Types of Intelligence Explosion for more on this argument).

- More generally, if incremental intelligence improvements are more difficult, expensive, or time-consuming, that implies both longer timelines and slower takeoffs.

If takeoff is slower, institutions will be able to respond more effectively to challenges as they emerge. This is for two reasons: first, slower takeoff gives institutions more advance warning about problems before solutions need to be in place; and second, institutions are more likely to have access to controlled (or aligned) AI intellectual labor to help make sense of the situation, devise solutions, and implement them.

We think this effect is strongest for challenges that nearly everyone would be motivated to solve if they saw them coming. In descending order, this seems to be: catastrophic risks; concentration of power; risks to societal epistemics; and corruption of human values.

On longer timelines, the world has better strategic understanding at the time of takeoff. If AGI comes in 2035 or 2045 instead of 2027, then we get an extra eight to eighteen years of progress in science, philosophy, mathematics, mechanism design, etc.—potentially sped up by assistance from AI systems around the current level. These fields might turn up new approaches to handling the challenges above.

Wider proliferation of AI capabilities before the intelligence explosion bears both risks and opportunities, but we think the benefits outweigh the costs. On longer timelines, usage of models at the current frontier capability level will have time to proliferate throughout the economy. This could make the world better prepared for the intelligence explosion. For instance, adoption of AI tools could improve government decision-making. In some domains, the overall effect of AI proliferation is less clear. AI-generated misinformation could worsen the epistemic environment and make it harder to reach consensus on effective policies, while AI tools for epistemic reasoning and coordination could help people identify policies that serve their interests and work together to implement them. Similarly, AIs might allow more actors to develop bioweapons, but also might strengthen our biodefense capabilities. We weakly expect wider proliferation to be positive.

But there are also some important reasons to expect that the value of the future will be lower on longer timelines.

China has more influence on longer timelines. The leading AI projects are based in the west, and the US currently has control over hardware supply chains. On longer timelines, it’s more likely that Chinese AI companies have overtaken frontier labs in the west and that China can produce advanced chips domestically. On long timelines, China may even have an edge—later intelligence explosions are more likely to be primarily driven by chip production, and China may be more successful at rapidly expanding industrial production than the US.

On longer timelines, we incur more catastrophic risk before ASI. The pre-ASI period plausibly carries unusually high "state risk." For example, early AI capabilities might increase the annual risk of engineered pandemics before ASI develops and implements preventative measures. Great power conflict may be especially likely in the run-up to ASI, and that risk might resolve once one country gains a DSA or multiple countries use ASI-enabled coordination technology to broker a stable division of power. If this pre-ASI period does carry elevated annual catastrophic risk, then all else equal, the expected value of the future looks better if we spend as few years as possible in it—which favors shorter timelines.

In the appendix, we find that the value of the future is greater on medium timelines than shorter timelines or longer timelines. Specifically, we find that the value of the future conditional on medium timelines is about 30-50% greater than the value of the future conditional on short timelines and 55-90% greater than the value conditional on long timelines. The main factor that decreases the value of the future on longer timelines is the increased influence of authoritarian governments like China and the main factor that decreases the value of the future on shorter timelines is greater risk of extreme power concentration.

Shorter timelines allow for larger AI takeover risk reduction

To estimate the takeover impact across timelines, we need to compare these differences in value to the differences in potential AI takeover risk reduction.

There are two main reasons to expect larger potential AI takeover risk reduction on longer timelines.

First, over longer timelines, there’s more time to expand our resources—money, researchers, knowledge—through compounding growth. Money can be invested in the stock market or spent on fundraising or recruitment initiatives; researchers can mentor new researchers; and past insights can inform the best directions for future research.

Historically, the EA community has experienced annual growth in the range of 10-25%.[3] It is not implausible that this continues at a similar pace for the next 10-20 years given medium to long timelines. Admittedly, over long time periods, the movement risks fizzling out, drifting in its values, or losing its resources. These risks are real but can be priced into our growth rate.
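To give a feel for what these growth rates imply, here is a minimal sketch of the compounding arithmetic. The `annual_loss_risk` parameter is our own hypothetical way of pricing in the risk of fizzling out or losing resources (an independent per-year chance of losing everything); it is an assumption, not a model from this note.

```python
def growth_multiple(annual_rate: float, years: int,
                    annual_loss_risk: float = 0.0) -> float:
    """Expected resource multiple after `years` of compounding growth.

    annual_loss_risk is a hypothetical per-year probability that the
    resources are lost entirely, treated as independent across years.
    """
    return ((1 + annual_rate) * (1 - annual_loss_risk)) ** years


# The 10-25% growth range and 10-20 year horizons come from the text above.
for rate in (0.10, 0.25):
    for years in (10, 20):
        multiple = growth_multiple(rate, years)
        print(f"{rate:.0%} growth for {years} years -> {multiple:.1f}x")
```

Without any risk adjustment, the range spans roughly 2.6x (10% for 10 years) to about 87x (25% for 20 years), so the conclusion is very sensitive to which end of the range holds.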

Second, on longer timelines, there will likely be more options for spending a lot of resources effectively. It’s more feasible to build up capacity by launching organizations, starting megaprojects, spinning up new research agendas, and training new researchers. There might also be qualitatively new opportunities for spending on longer timelines. For instance, slower takeoff makes it more likely we'll have access to aligned or controlled human-level AI labor for an extended period. Paying for AI employees might be dramatically more scalable than paying for human employees: unlike with hiring humans, as soon as you have AI that can do a particular job, you can “employ” as many AI workers to do that task as you like, all at equal skill level, without any transaction costs to finding those workers.

That said, there is an important countervailing consideration.

On longer timelines, there will likely be fewer great opportunities for risk reduction. One reason for this is that there are probably lower absolute levels of risk on longer timelines (see next section), which means that there's a lower ceiling on the total amount of risk we could reduce. Another reason is that there will simply be more resources devoted to reducing takeover risk. On longer timelines, governments, philanthropists, and other institutions will have had a longer time to become aware of takeover risk and ramp up spending by the time the intelligence explosion starts. Plus, given slower takeoff on longer timelines, they will have more time to mobilize resources once the intelligence explosion has started. These actors will probably have better strategic understanding on longer timelines, so their resources will go further. Thus on longer timelines, the world is more likely to adequately address AI takeover risk without our involvement.

Whether short or medium timelines are highest leverage depends on the resources or skillset being deployed

In the appendix, we estimate that the value of the future is 30-50% greater on medium timelines compared to short timelines. This suggests that an individual has higher leverage on short timelines if they expect to drive over 30-50% more absolute risk reduction there than on medium timelines. For example, if you think that you can reduce AI takeover risk by 1 percentage point on 2035 timelines but by more than 1.5 percentage points on 2027 timelines, then you have higher leverage on shorter timelines. (Of course, relative likelihood also matters when deciding what to focus on. If you assign significantly higher probability to one scenario, this could outweigh leverage differences.)
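The break-even condition can be sketched as a small comparison. The function name and the candidate risk-reduction figures are hypothetical; only the 1.3-1.5 value ratio and the 1 percentage point example come from the text. The value of the future on short timelines is normalized to 1.

```python
def higher_leverage_timeline(rr_short: float, rr_medium: float,
                             value_ratio: float) -> str:
    """Compare takeover leverage on short vs. medium timelines.

    rr_short, rr_medium: absolute risk reduction you expect to achieve.
    value_ratio: value of the future on medium timelines relative to short
                 timelines (1.3 to 1.5 in the appendix estimate).
    """
    leverage_short = rr_short * 1.0
    leverage_medium = rr_medium * value_ratio
    return "short" if leverage_short > leverage_medium else "medium"


rr_medium = 0.01  # 1 percentage point on 2035 timelines, as in the example
for rr_short in (0.012, 0.014, 0.016):  # hypothetical values for 2027
    for value_ratio in (1.3, 1.5):
        winner = higher_leverage_timeline(rr_short, rr_medium, value_ratio)
        print(f"rr_short={rr_short:.3f}, ratio={value_ratio}: {winner}")
```

This compares leverage only. Weighting each side by the probability you assign to that scenario would be a further step that this sketch leaves out.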

We find it plausible that high-context researchers and policymakers who can do direct work immediately meet this condition and should focus on shorter timelines. On short timelines, we'll probably be talent-bottlenecked rather than funding-bottlenecked: it seems difficult to find funding opportunities that can usefully absorb tens of billions of dollars when there's limited time to ramp up new projects or train and onboard new researchers. But these considerations cut the other way for funders. Longer timelines make it easier to deploy large amounts of capital productively. In part, this is simply because there's more time to scale up funding gradually. But slower takeoff is also more likely on longer timelines. This raises the chance of an extended window when we can hire controlled human-level AI researchers to work on the most important projects, which is potentially a very scalable use of money to reduce risk.

Trajectory impact

Trajectory impact is our impact from improving the value of futures without AI takeover: this is the value increase due to our efforts on futures without AI takeover, weighted by the probability of avoiding AI takeover.

The default probability of averting AI takeover is higher on medium and longer timelines

There are three main considerations in favor.

On longer timelines, more resources will be invested in reducing AI takeover risk. The AI safety field is generally growing rapidly, and on longer timelines we’ll enjoy the gains from more years of growth. Additionally, on longer timelines, takeoff is likely to be slower, which gives institutions more warning to start investing resources in reducing AI takeover risk.

On longer timelines, society has accumulated more knowledge by the time of the intelligence explosion. Again, medium to long timelines mean that we get an extra eight to eighteen years of progress in science, philosophy, mathematics, cybersecurity and other fields, potentially accelerated by assistance from AI at the current capability level. These advances might provide useful insights for technical alignment and AI governance.

Beyond knowledge and resource considerations, we expect AI takeover risk is higher if takeoff is faster. The most viable strategies for preventing takeover rely on weaker, trusted models to constrain stronger ones, through generating training signals, hardening critical systems against attacks, monitoring behavior, and contributing to alignment research. These strategies will be less effective if capabilities increase rapidly and, more generally, faster takeoff gives human decision-makers and institutions less time to react.

The most convincing countervailing consideration is that:

The current paradigm might be unusually safe. Current AIs are pretrained on human text and gain a deep understanding of human values, which makes it relatively easy to train them to robustly act in line with those values in later stages of training. Compare this to a paradigm where AIs were trained from scratch to win at strategy games like Starcraft—it seems much harder to train such a system to understand and internalize human goals. On short timelines, ASI is especially likely to emerge from the current paradigm, so this consideration pushes us directionally toward thinking that AI takeover is less likely on shorter timelines.

But overall, it seems quite likely that the level of risk is lower on longer timelines.

It’s unclear whether feasible value increase is greater or lower on different timelines

There are some reasons to expect that feasible value increase is greater on longer timelines.

We will have better strategic understanding. As discussed above, we’ll be able to take advantage of more years of progress in related fields. We’ll also have had the chance to do more research ourselves into preparing for the AI transition. We still have a very basic understanding of the risks and opportunities around superhuman AI, and some plausibly important threat models (e.g. AI-enabled coups, gradual disempowerment) have only been publicly analysed in depth in the last few years. Given another decade or two of progress, we might be able to identify much better opportunities to improve the value of the future.

We will probably have more resources on longer timelines (see discussion above).

But on the other hand:

We probably have less influence over AI projects on longer timelines. The AI safety community is currently closely connected to leading AI projects—many of us work at or collaborate with current front-runner AI companies. It's also easier to have greater influence over AI projects now when the field is less crowded. On shorter timelines, we likely have substantial influence over the projects that develop transformative AI, which amplifies the impact of our work: new risk reduction approaches we develop are more likely to get implemented and our niche concerns (like digital sentience) are more likely to get taken seriously. This level of influence will probably decrease on longer timelines. Government control becomes more likely as timelines lengthen, and we'd likely have a harder time accessing decision-makers in a government-run AI project than we currently have with AI lab leadership, even accounting for time to build political connections. If AI development shifts to China—which looks fairly likely on long timelines—our influence declines even more.

Conclusion

We've listed what we consider the most important considerations around which timeline scenarios are highest leverage. In general, when assessing leverage, we think it's important to consider not just your ability to make progress on a given problem, but also the likelihood of success on other problems that are necessary for your progress to matter.

We’ve specifically thought about timelines and leverage for two broad categories of work.

- Working on averting AI takeover (where we consider both our ability to reduce risk, and the default value of the future conditional on no AI takeover).

- Working on improving the value of the future, conditional on no AI takeover (where we consider both the feasible value increase and the default level of takeover risk).

For work aimed at averting AI takeover, we think the default value of the future is highest on 2035 timelines. However, for direct work by high-context people who can start today, we think reducing AI risk is more tractable on earlier timelines, and this tractability advantage overcomes the higher value of succeeding on longer timelines. We're less convinced this tractability advantage exists for funders, who we think should focus on timeline scenarios closer to 2035.

For work aimed at improving the value of the future, we think the default likelihood of avoiding AI takeover increases on longer timelines. We're unsure whether the feasible value increase goes up or down with longer timelines, and so tentatively recommend that people doing this work focus on longer timelines (e.g., 2045).

We thank Max Dalton, Owen Cotton-Barratt, Lukas Finnveden, Rose Hadshar, Lizka Vaintrob, Fin Moorhouse, and Oscar Delaney for helpful comments on earlier drafts. We thank Lizka Vaintrob for helping design the figure.

Appendix: BOTEC estimating the default value of the future on different timelines

In this section, we estimate the default value of the future conditional on avoiding AI takeover, which is relevant to our takeover impact—the value of reducing the likelihood of AI takeover. See above for a qualitative discussion of important considerations.

Note that this section is only about estimating the default value of the future conditional on avoiding AI takeover for the purpose of estimating takeover impact. We’re not estimating the tractability of improving the value of the future, which is what we’d use to estimate the trajectory impact.

To get a rough sense of relative magnitudes, we're going to do a back-of-the-envelope calculation that makes a lot of simplifying assumptions that we think are too strong.

We’ll assume that the expected value of the future is dominated by the likelihood of making it to a near-best future, and that we are very unlikely to get a near-best future if any of the following happen in the next century:

- There is extreme power concentration (e.g., via an AI-assisted coup) where <20 people end up in control of approximately all of the world’s resources.

- There is a non-takeover global catastrophe that causes human extinction or significantly disrupts civilization in a way that reduces the value of the far future (e.g., reduces the power of democracies, causes a drift toward more parochial values). We are not including AI takeover or AI-assisted human takeover, but are including other kinds of catastrophes enabled by AI capabilities or influenced by the presence of advanced AI systems, e.g., engineered pandemics designed with AI assistance or great power conflict over AI.

- Post-AGI civilization doesn’t have a sufficient number of people with sufficiently good starting values—i.e., values that will converge to near-best values given a good enough reflection process. This might happen if we have leaders who are selfish, malevolent, or unconcerned with pursuing the impartial good.

- There are major reflection failures. Reflective failures could involve: people prematurely locking in their values, suppression of ideas that turn out to be important and true, or the spread of memes that corrupt people's values in unendorsed ways.

- There are major aggregation failures. Even if there are a sufficient number of people with sufficiently good starting values, who have avoided reflective failure, perhaps they are unable to carry out positive-sum trades with other value systems to achieve a near-best future. Failures might involve threats, preference-aggregation methods that don’t allow minority preferences to be expressed (e.g., the very best kinds of matter getting banned), or the presence of value systems with linear returns on resources that are resource-incompatible[4] with “good” values.

Let's factor the value of the future (conditional on no AI takeover) into the following terms:

- (a) Probability of avoiding extreme concentration of power, conditional on avoiding AI takeover.

- (b) Probability of avoiding other catastrophic risks, conditional on avoiding AI takeover and concentration of power.

- (c) Probability of empowering good-enough values (i.e., values that will converge to the "correct" values given that we avoid a reflective failure), conditional on avoiding AI takeover, concentration of power, and other catastrophic risks.

- (d) Probability of avoiding a reflective or aggregation failure, conditional on avoiding AI takeover, concentration of power, and other catastrophic risks, and getting good-enough values.

- (e) Probability of getting a near-best future, conditional on avoiding AI takeover, concentration of power, other catastrophic risks, and a reflective failure, and getting good-enough values.

As a simplifying assumption—and because we don’t have a strong opinion on how the final term (e) varies across different AI timelines—we will assume that e is constant across timelines and set it to 1.
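Since each term is conditional on the ones before it, the default value of the future is just their product. Here is a minimal sketch of that calculation. The dictionary keys and all probabilities are hypothetical placeholders chosen for illustration; they are not the estimates in the table.

```python
from math import prod


def default_value(factors: dict) -> float:
    """Multiply the chained conditional probabilities into a single
    estimate of the default value of the future (given no AI takeover)."""
    return prod(factors.values())


# Placeholder inputs only: NOT the authors' numbers.
placeholder_estimates = {
    "short": {
        "avoid_power_concentration": 0.7,
        "avoid_other_catastrophes": 0.9,
        "good_enough_values": 0.5,
        "avoid_reflection_or_aggregation_failure": 0.5,
        "near_best_future": 1.0,  # final term held constant at 1
    },
    "medium": {
        "avoid_power_concentration": 0.8,
        "avoid_other_catastrophes": 0.9,
        "good_enough_values": 0.6,
        "avoid_reflection_or_aggregation_failure": 0.6,
        "near_best_future": 1.0,
    },
}

values = {name: default_value(f) for name, f in placeholder_estimates.items()}
ratio = values["medium"] / values["short"]
print(f"short: {values['short']:.4f}, medium: {values['medium']:.4f}")
print(f"medium / short: {ratio:.2f}")
```

Because the terms multiply, modest differences in several factors compound into a large difference in the overall ratio, which is the quantity that feeds into the leverage comparison.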

| Parameter (all probabilities are conditional on avoiding AI takeover and avoiding the outcomes described in rows above) | Values conditional on different timelines (Mia) | Values conditional on different timelines (Will) | Reasoning (Mia) | Reasoning (Will) |

| Probability of avoiding extreme concentration of power in the next ~century | 2027: 76% | 80.1% | China is more likely to get a DSA on longer timelines (say, 10%/30%/50% on short/medium/long timelines), and extreme power concentration is comparatively more likely given a Chinese DSA (say, ~50%). The US becomes less likely to get a DSA on longer timelines (say, 85%/55%/35% on short/medium/long timelines). Slower takeoff is more likely on longer timelines, and that is somewhat protective against coups; the world is generally more prepared on longer timelines. Coup risk in the US (conditional on US dominance) is something like 22%/10%/7% on short/medium/long timelines. | Any country getting a DSA becomes less likely on longer timelines (I put 90% to 75% to 60%). The US (rather than China) winning the race to AGI becomes less likely on longer timelines (95% to 80% to 50%). |

| 2035: 80% | 86.5% | |||

| 2045: 75% | 80.1% | |||

| Probability of avoiding other catastrophic risks in the next ~century | 2027: 97% | 98.5% | The main risks I have in mind are war and bioweapons.

For war:

Numbers are illegible guesses after thinking about these considerations. | I put the expected value of the future lost from catastrophes at around 3%, with half of that coming from extinction risk and half from rerun risk and trajectory change.

The number gets higher in longer timelines because there’s a longer “time of perils”.

It doesn’t overall have a big impact on the BOTEC. |

| 2035: 96% | 97% | |||

| 2045: 95% | 95.5% | |||

| Probability of empowering good-enough values | 2027: 57% | 16.0% | I think probably I should have a smallish negative update on decision-makers’ values on shorter timelines because it seems like later timelines are probably somewhat better. (But this is small because we are conditioning on them successfully avoiding coups and AI takeovers).

On shorter timelines, I think that hasty lock-in of not-quite-right values seems particularly likely.

We’re conditioning on no extreme power concentration, but power concentration could be intense (e.g. to just 100 people), and I think power concentration is somewhat more likely on very short timelines (because of rapid takeoff) and very long timelines (because increased likelihood of a Chinese DSA).

Numbers are illegible guesses after thinking about these considerations. | Even conditioning on no concentration of power risk as we’ve defined it (<20 people), there’s still a big chance of extreme concentration of power, just not as extreme as <20 people. And, the smaller the number of people with power, the less likely some of them will have sufficiently good starting values.

This is much more likely conditional on China getting to a DSA first vs the US getting to a DSA first.

I place some weight on the idea that “broadly good-enough values” is quite narrow, and only initially broadly utilitarian-sympathetic people cross the bar; I see this as most likely in 2035, then 2027, then 2045. |

| 2035: 65% | 19.5% | |||

| 2045: 55% | 13.7% | |||

| Probability of avoiding a reflective or aggregation failure | 2027: 50% | 34.5% | Liberal democracy / an open society seem more likely on medium timelines, and that seems probably good for ensuring a good reflective process.

There are considerations in both directions, but all things considered I’m more worried about meme wars/AI-induced unendorsed value drift on long timelines.

On longer timelines, takeoff was probably slower and therefore a lot of the challenges like concentration of power, AI take over, and other global catastrophic risks were probably “easier” to handle. So, since we're conditioning on society having solved those problems for this row, we should probably make a larger update on societal competence for shorter timelines than longer timelines.

Numbers are illegible guesses after thinking about these considerations. | I feel particularly un-confident about this one.

Overall, an open society seems more likely to have the best ideas win out over time.

But perhaps post-AGI meme wars are really rough, and you need state-level control over information and ideas in order to avoid getting trapped in some epistemic black hole, or succumbing to some mind virus. This consideration favours China DSA worlds over US DSA worlds. (H/T Mia for this argument). |

| 2035: 55% | 41.6% | |||

| 2045: 40% | 40.1% | |||

| Multiplier on the value of the future, conditional on no AI takeover | 2027: 0.21 | 0.044 | ||

| 2035: 0.27 | 0.068 | |||

| 2045: 0.14 | 0.043 | |||
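As a rough check on the final row, the multiplier for each timeline can be recomputed as the product of the four point estimates above it, with the final term (e) fixed at 1. The sketch below is an illustration, not the authors' own spreadsheet; the names `ESTIMATES`, `FINAL_TERM`, and `value_multiplier` are hypothetical. Because it uses the values as displayed in the table, its output need not match the reported multipliers exactly. For instance, Mia's displayed 2045 values multiply to roughly 0.16 rather than the reported 0.14, which may reflect rounding or a difference in the underlying inputs.

```python
from math import prod

# Point estimates copied from the table, in row order:
# (avoid extreme power concentration, avoid other catastrophic risks,
#  empower good-enough values, avoid reflective/aggregation failure).
ESTIMATES = {
    "Mia": {
        2027: (0.76, 0.97, 0.57, 0.50),
        2035: (0.80, 0.96, 0.65, 0.55),
        2045: (0.75, 0.95, 0.55, 0.40),
    },
    "Will": {
        2027: (0.801, 0.985, 0.160, 0.345),
        2035: (0.865, 0.970, 0.195, 0.416),
        2045: (0.801, 0.955, 0.137, 0.401),
    },
}

# The near-best-future term (e), which the text assumes is constant and sets to 1.
FINAL_TERM = 1.0


def value_multiplier(factors, final_term=FINAL_TERM):
    """Multiply the chained conditional probabilities into a single multiplier."""
    return prod(factors) * final_term


if __name__ == "__main__":
    for person, by_year in ESTIMATES.items():
        for year, factors in by_year.items():
            print(f"{person} {year}: {value_multiplier(factors):.3f}")
```

Multiplying the terms is valid here because each row is defined as conditional on the outcomes in the rows above it, so the product is the joint probability of clearing every hurdle.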

Based on Mia’s point estimates, the value of the future conditional on 2035 timelines relative to its value conditional on 2027 timelines is 0.27/0.21 = 1.29. So you should condition on 2027 timelines only if you think the takeover risk reduction you can drive there would be at least 29% greater than the reduction you could drive on 2035 timelines.

Based on Will’s point estimates, the value of the future conditional on 2035 timelines relative to its value conditional on 2027 timelines is 0.068/0.044 = 1.54. So you should condition on 2027 timelines only if you think the takeover risk reduction you can drive there would be at least 54% greater than the reduction you could drive on 2035 timelines.

Likewise, on Mia’s estimates the value of the future conditional on 2035 timelines relative to its value conditional on 2045 timelines is 0.27/0.14 = 1.93, and on Will’s estimates it is 0.068/0.043 = 1.58. So you should condition on 2045 timelines over 2035 timelines only if you think that you’ll be able to drive a risk reduction at least 58-93% greater on 2045 timelines.
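The comparisons above follow a simple break-even rule: if work only changes the probability of avoiding takeover, its impact on a given timeline is roughly the risk reduction times that timeline's value multiplier, so the lower-value timeline wins only when its achievable risk reduction is larger by at least the ratio of the two multipliers. A minimal sketch of that rule, using the reported multipliers; the names `REPORTED`, `break_even_ratio`, and `prefer_target` are hypothetical.

```python
# Reported multipliers on the value of the future, conditional on no AI takeover.
REPORTED = {
    "Mia": {2027: 0.21, 2035: 0.27, 2045: 0.14},
    "Will": {2027: 0.044, 2035: 0.068, 2045: 0.043},
}


def break_even_ratio(value_reference, value_target):
    """Factor by which risk reduction on the target timeline must exceed
    risk reduction on the reference timeline for the two to be equally valuable."""
    if value_target <= 0:
        raise ValueError("value_target must be positive")
    return value_reference / value_target


def prefer_target(risk_reduction_target, risk_reduction_reference,
                  value_target, value_reference):
    """True if expected impact (risk reduction x value) is higher on the target timeline."""
    return risk_reduction_target * value_target > risk_reduction_reference * value_reference


if __name__ == "__main__":
    for person, values in REPORTED.items():
        for target in (2027, 2045):
            ratio = break_even_ratio(values[2035], values[target])
            print(f"{person}: {target} vs 2035 break-even ratio = {ratio:.2f}")
```

This treats the value multiplier as fixed by the timeline, which matches the simplification in this BOTEC but ignores any effect the work itself has on the value of the future.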

So, overall, medium timelines look higher value than shorter or longer timelines, and shorter timelines perhaps look higher value than longer timelines.

Doing this exercise impacted our views in various ways:

- Initially, we thought it was likely that the value of the future, conditional on no takeover, would be higher in longer timeline worlds, because short timeline worlds just seem so chaotic.

- But now this seems really non-obvious to us: using a slightly different model, or creating these estimates on a different day, could easily lead us to estimate instead that the value of the future conditional on no AI takeover is highest on short timelines.

- In particular, we hadn’t fully appreciated the impact of the consideration that China is more likely to get a DSA on longer timelines.

- So we see the most important point as a conceptual one (that we need to take into account the value of the future conditional on no takeover as well as reduction in takeover risk), rather than the quantitative analysis above.

We’ll note again that this BOTEC was not about trajectory impact, which would probably strengthen the case for medium-to-long timeline worlds being higher-leverage.

This article was created by Forethought. Read the original on our website.

- ^

Formally:

$$L_t = p'_t \, v'_t - p_t \, v_t$$

Where $p_t$ is the likelihood of avoiding AI takeover and $v_t$ is the value of the future conditional on avoiding AI takeover, if we take some baseline action (e.g., doing nothing), while $p'_t$ and $v'_t$ are those values if we take the optimal action with our available resources. $t$ is some particular timeline.

We assume for simplicity that we cannot affect the value of the future conditional on AI takeover. For $v_t$ and $v'_t$, we measure value on a scale where 0 is the value of the future conditional on AI takeover, and 1 is the value of the best achievable future. This is based on a similar formula derived here.

- ^

I'm measuring value on a scale where 0 is the value of the future conditional on AI takeover, and 1 is the value of the best achievable future.

- ^

Benjamin Todd estimated that the number of EAs grew by 10-20% annually over the 2015-2020 period. The amount of committed dollars grew by 37% (from $10B to $46B) from 2015-2021, but this number included $16.5B from FTX. When we subtract funds from FTX, the growth rate is 24%.

- ^

Two value systems are resource-compatible if they can both be almost fully satisfied with the same resources.

Really enjoyed this, great work! I get the impression medium and long timelines are significantly under-prioritized by the community, perhaps due to social/systemic biases.

I’m thrilled to see this is currently a leading forum debate week topic that Toby Ord has suggested. I think this could be really high value for the community to collectively reckon with, and of course Toby’s work on the surprisingly bad scaling of chain-of-thought post-training (1 & 2) seems highly relevant here.