Introduction

In April 2024, my colleague and I (both affiliated with Peking University) conducted a survey involving 510 students from Tsinghua University and 518 students from Peking University—China's two top academic institutions. Our focus was on their perspectives regarding the frontier risks of artificial intelligence.

In the People’s Republic of China (PRC), publicly accessible survey data on AI is relatively rare, so we hope this report provides some valuable insights into how people in the PRC are thinking about AI (especially the risks). Throughout this post, I’ll do my best to weave in other data reflecting the broader Chinese sentiment toward AI.

For similar research, check out The Center for Long-Term Artificial Intelligence, YouGov, Monmouth University, The Artificial Intelligence Policy Institute, and notably, a poll conducted by Rethink Priorities, which closely informed our survey design.

You can read the full report published in the Jamestown Foundation’s China Brief here: Survey: How Do Elite Chinese Students Feel About the Risks of AI?

Key Takeaways

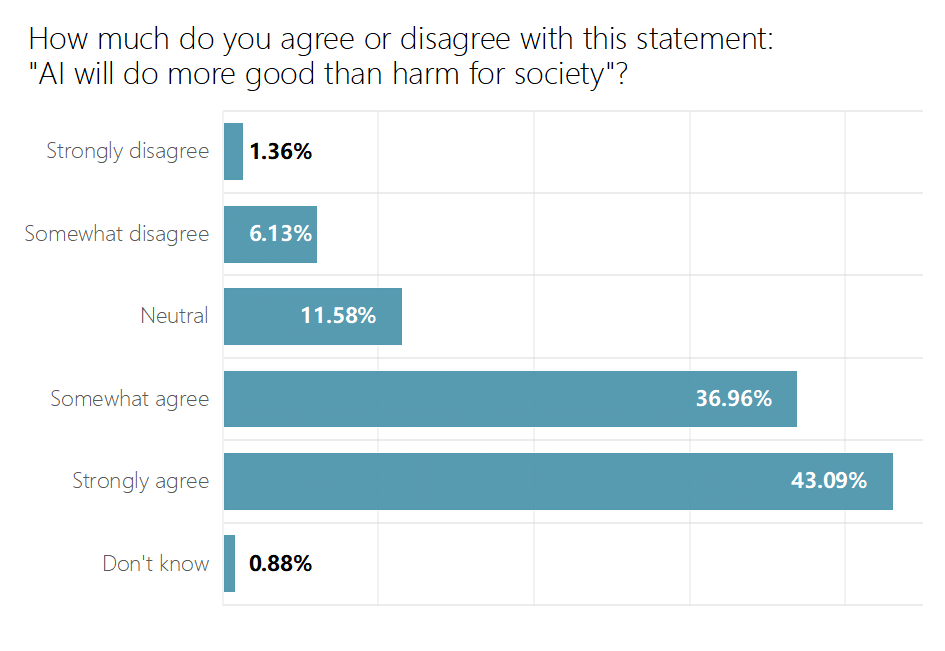

- Students are more optimistic about the benefits of AI than concerned about the harms. 80 percent of respondents agreed or strongly agreed with the statement that AI will do more good than harm for society, with only 7.5 percent actively believing the harms could outweigh the benefits. This, similar to other polling, indicates that the PRC is one of the most optimistic countries concerning the development of AI.

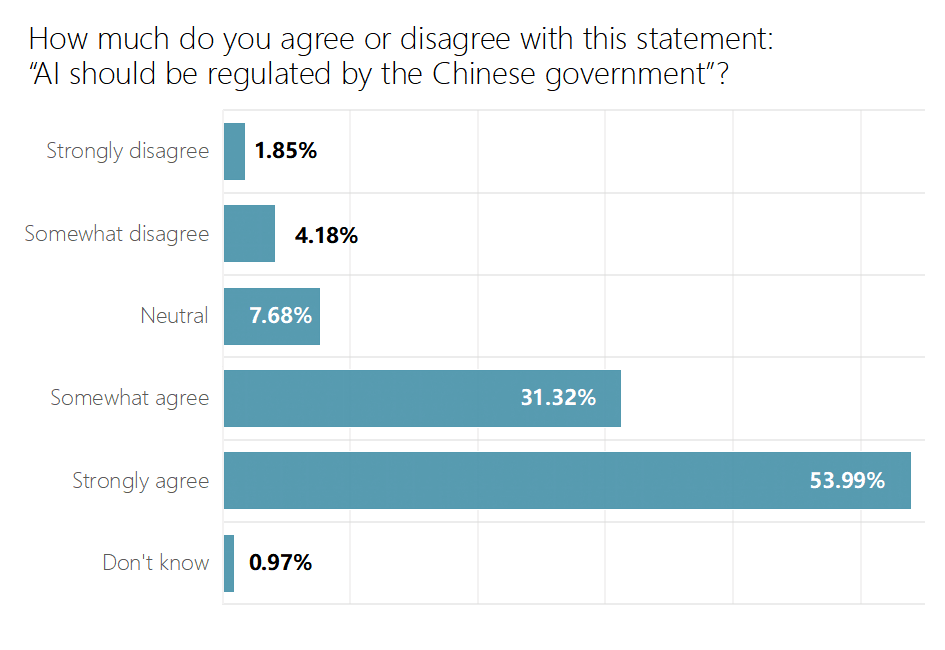

- Students strongly believe the Chinese government should regulate AI. 85.31 percent of respondents believe AI should be regulated by the government, with only 6 percent actively believing it should not. This contrasts with trends seen in other countries, where there is typically a positive correlation between optimism about AI and calls for minimizing regulation. The strong support for regulation in the PRC, even as optimism about AI remains high, suggests a distinct perspective on the role of government oversight in the PRC context.

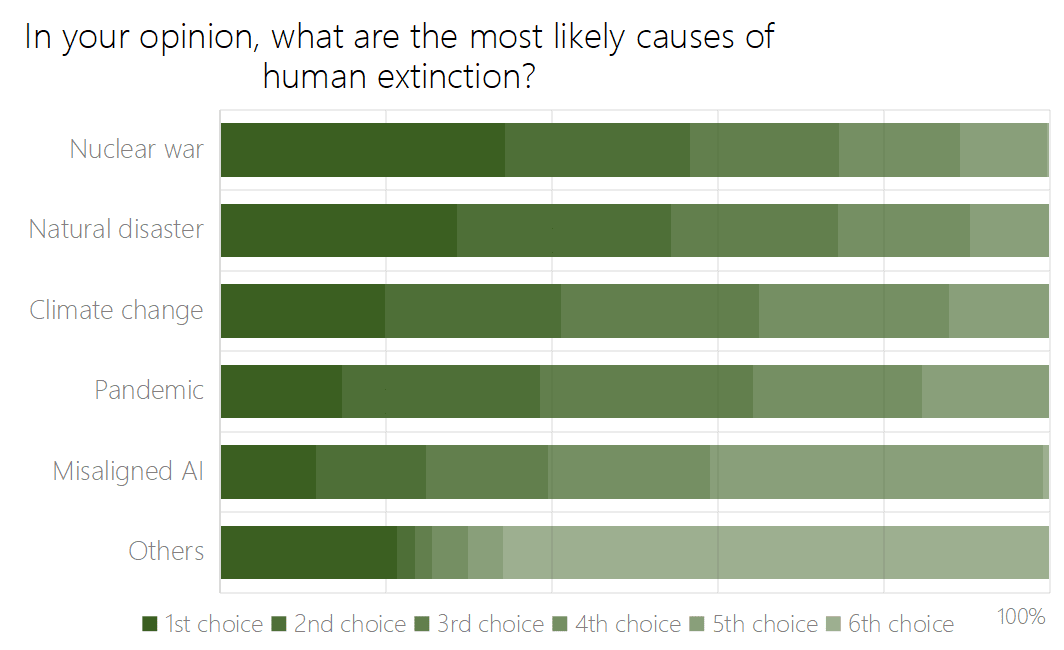

- Students ranked AI the lowest among all possible existential threats to humanity. When asked about the most likely causes of human extinction, misaligned artificial intelligence received the lowest score. Nuclear war, natural disaster, climate change, and pandemics all proved more concerning for students.

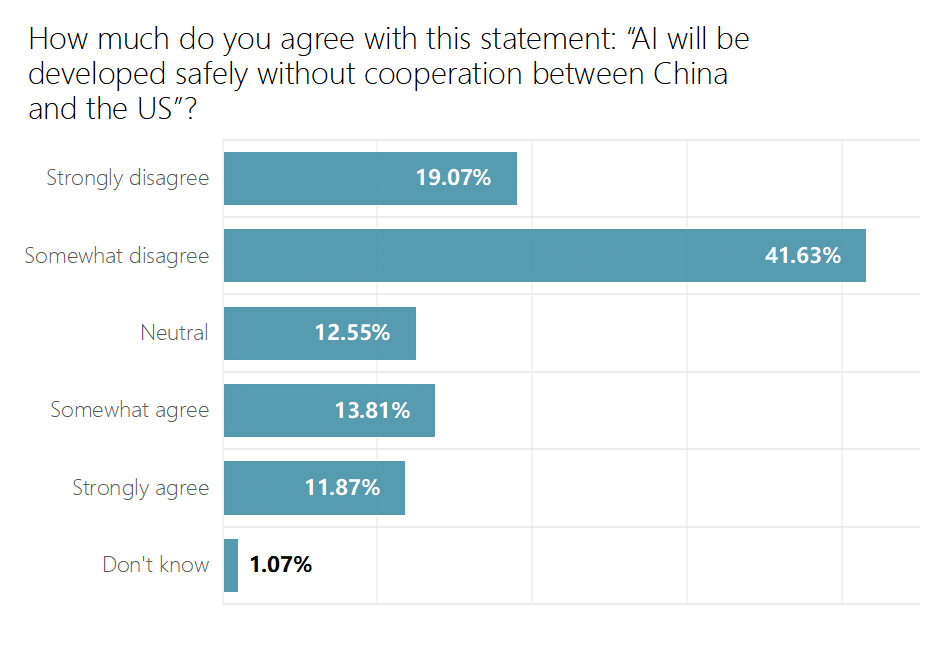

- Students lean towards cooperation between the United States and the PRC as necessary for the safe and responsible development of AI. 60.7 percent of respondents believe AI will not be developed safely without cooperation between China and the U.S., with 25.68 percent believing it will develop safely no matter the level of cooperation.

- Students are most concerned about the use of AI for surveillance. This was followed by misinformation, existential risk, wealth inequality, increased political tension, and various issues related to bias, with the suffering of artificial entities receiving the lowest score.

Background

As the recent decision (决定) document from the Third Plenum meetings in July made clear, AI is one of eight technologies that the Chinese Communist Party (CCP) leadership sees as critical for achieving “Chinese-style modernization (中国式现代化),” and is central to the strategy of centering the country’s economic future around breakthroughs in frontier science (People’s Daily, July 22). The PRC also seeks to shape international norms on AI, including on AI risks. In October 2023, Xi Jinping announced a “Global AI Governance Initiative (全球人工智能治理倡议)” (CAC, October 18, 2023).

Tsinghua University and Peking University are by far the two most prestigious universities in the PRC, and many of their graduates will be very influential in shaping the country’s future. These students may also be some of China’s most informed citizens on the societal implications of AI, with both schools housing prominent generative AI and safe AI development programs.

We collected 1028 valid responses, with 49.61% of respondents attending Peking University and 50.39% attending Tsinghua University. See the Methodology section for further information on sampling procedures and considerations.

Report

Will AI do more harm than good for society?

Respondents strongly believed that AI's benefits outweigh the risks. 80% of respondents agreed or strongly agreed with the statement that AI will be more beneficial than harmful for society. Only 7.49% actively believed the harms could outweigh the benefits, while 12.46% remained neutral or uncertain.
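
As a rough guide to how much sampling noise sits behind a headline figure like this, the sketch below computes a normal-approximation margin of error for the 80% result with 1028 responses. It assumes simple random sampling, which a survey distributed through an online student platform does not strictly satisfy, so the real uncertainty is probably somewhat larger. The function name `margin_of_error` is only illustrative and is not taken from the original analysis.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation (Wald) interval for a proportion.

    Assumes simple random sampling; z = 1.96 corresponds to ~95% confidence.
    """
    return z * math.sqrt(p * (1.0 - p) / n)


n_responses = 1028   # valid responses reported in the survey
p_agree = 0.80       # share agreeing that AI will do more good than harm

moe = margin_of_error(p_agree, n_responses)
print(f"{p_agree:.1%} ± {moe:.1%}")  # prints "80.0% ± 2.4%"
```

Under these assumptions, differences of a few percentage points between this survey and others fall within the noise, while gaps of tens of points do not.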

Our results closely align with a 2022 Ipsos survey in which 78% of Chinese respondents viewed AI's benefits as outweighing its drawbacks, the most optimistic result of all countries polled. This contrasts sharply with Western sentiment, where polls suggest majorities worry more about transformative AI's dangers than its upsides. (In the Ipsos survey, only 35 percent of Americans believed AI offers more benefits than harms.)

China currently seems to be one of the most optimistic countries about the upsides of AI—if not the most optimistic.

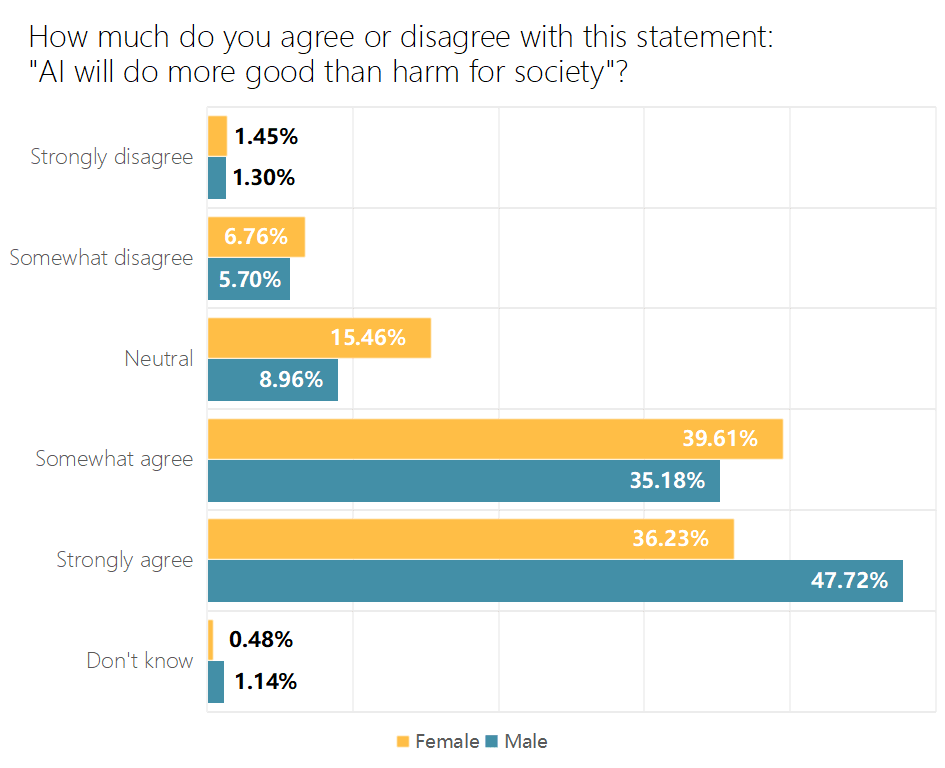

Women tend to be less optimistic about AI than men. Our study revealed a similar gender divide in attitudes towards AI, with male-identifying students displaying slightly greater optimism about its societal impact compared to their female-identifying counterparts. While 82.9% of males somewhat or strongly agreed that AI will do more good than harm for society, only 75.8% of females shared this positive outlook.

Concerns about the effects of AI in daily life

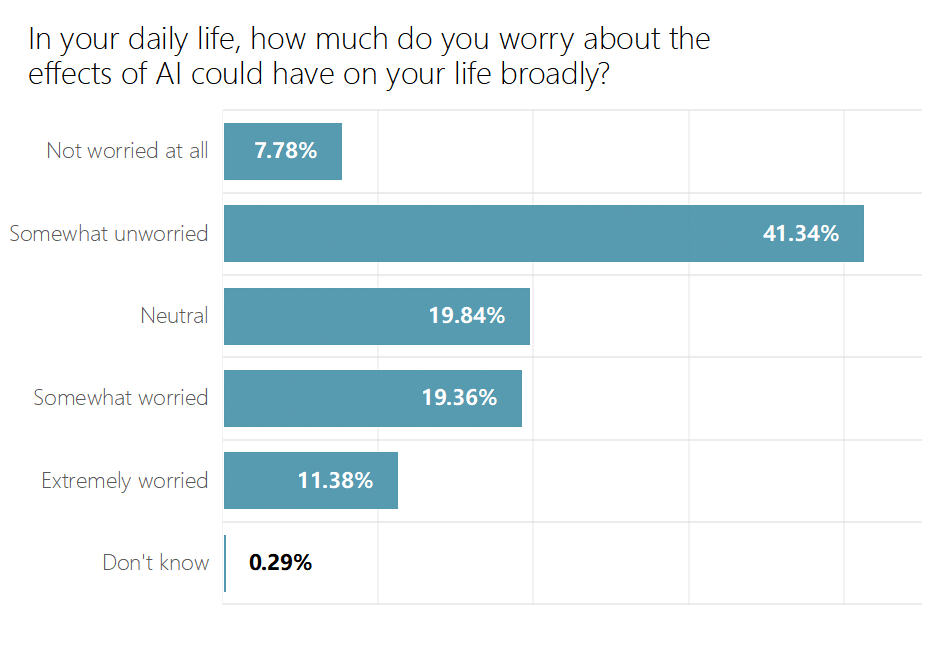

Following from students’ optimism about the benefits of AI, respondents tended not to be worried about the effects of AI in their daily lives. 49.12% of respondents felt somewhat or not at all worried, while 31.2% reported concern, and 20.13% were neutral or uncertain.

Perhaps more than any other country, China currently utilizes AI for many use cases, such as surveillance, healthcare, transportation, and education. For this reason, we wanted first to gauge a relatively broad indication of how much students actively worry about AI, then a separate indication of their perceived likelihood of more specific risks later on. We therefore chose to use the exact wording of the Rethink Priorities survey of U.S. adults (with their permission), which found that the majority (72%) of US adults worry little or not at all in their daily lives. Our data shows a similar trend toward being unconcerned.

Should the development of large-scale AI systems be paused for at least six months worldwide?

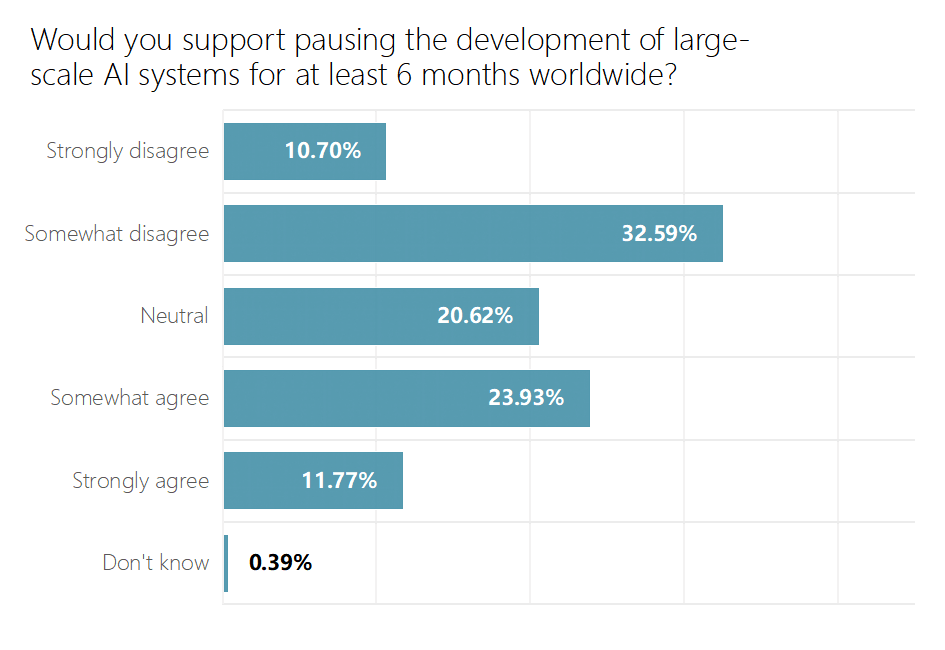

Respondents leaned towards not pausing large-scale AI systems. 43.29% disagreed or disagreed strongly with the claim that AI should be paused, while 35.16% agreed, and 21% remained neutral or uncertain.

This question was inspired by the open letter issued by the Future of Life Institute in March 2023, urging AI labs to suspend development for a minimum of six months to address potential safety concerns. It was signed by influential figures such as Elon Musk, Steve Wozniak, and Stuart Russell.

When a YouGov poll asked this question to a pool of respondents from the United States, 58-61% (depending on framing) supported and 19-23% opposed a pause on certain kinds of AI development. Similarly, when Rethink Priorities replicated the question for US adults, altering the framing from ">1000" to "some" technology leaders signing the open letter, their estimates indicated 51% of US adults would support a pause, whereas 25% would oppose it. Both surveys show a stronger desire for the pause of AI than our results.

When the Center for Long-Term AI asked a similar question to an exclusively Chinese population sample about ‘Pausing Giant AI Experiments,’ 27.4% of respondents supported pausing the training of AI systems more powerful than GPT-4 for at least six months, and 5.65% supported a six-month pause on all large AI model research. However, when a less specific question was asked, "Do you support the ethics, safety, and governance framework being mandatory for every large AI model used in social services?" 90.81% of participants expressed support.

Note: Our survey was conducted approximately one year after the open letter, meaning it was not as fresh on the respondents' minds.

Should the Chinese government regulate AI?

In our survey's most pronounced result, 85.3 percent of respondents agreed with or strongly agreed with the claim that AI should be regulated by the Chinese government, with only 6.03% disagreeing and 8.65% remaining neutral or uncertain.

A Harris-MITRE poll conducted in November 2022 estimated that 82% of US adults would support such regulation. A January 2023 Monmouth University poll estimated that 55% of Americans favored having "a federal agency regulate the use of artificial intelligence similar to how the FDA regulates the approval of drugs and medical devices", with only 41% opposed. Using similar question framing, Rethink Priorities estimated that a sizeable majority (70% of US adults) would favor federal regulation of AI, with 21% opposed.

We chose not to specify a particular government agency that would oversee AI regulation, as the regulatory landscape in China differs from the US. Even so, our results still reflected a comparably high demand from Chinese students for the government to implement oversight and control measures.

While China has shown little hesitation in regulating AI applications it deems unsafe, it has also hypercharged development efforts, attempting to provide top labs like Baidu and Tencent with resources to compete against Western labs such as OpenAI, Google, and Anthropic.

Cooperation between the U.S. and China

Students believe AI will not be developed safely without cooperation between the U.S. and China. 60.7% of respondents disagreed that AI would be developed safely without such cooperation, 25.68% agreed, and 13.62% remained neutral or uncertain.

The Artificial Intelligence Policy Institute (AIPI) put a related question to American voters, asking whether they would support China and the U.S. agreeing to ban AI in drone warfare: 59% supported such an agreement and only 20% did not. However, in another AIPI poll, 71% of US adults, including 69% of Democrats and 78% of Republicans, disapproved of Nvidia selling high-performance chips to China, while just 18% approved, underscoring the difficulty of navigating cooperation.

AI has become an increasingly prominent topic in China-U.S. diplomacy. It was one of the main topics at the November 2023 Woodside Summit between Chinese President Xi Jinping and U.S. President Joe Biden near San Francisco, which produced a commitment to a series of bilateral talks on the development of AI between the two countries. China has long complained about U.S. export controls on advanced chips and semiconductors, seeing them as obstacles to AI development. The U.S., meanwhile, justifies these restrictions by citing concerns over China's potential misuse of AI technologies, with the implicit aim of hurting China economically.

Misaligned AI compared to other existential threats

When asked about the most likely causes of human extinction, misaligned artificial intelligence received the lowest score. Nuclear war, natural disaster, climate change, and pandemics all proved more concerning for students.

Misaligned AI received the fewest first-place votes of the available options, and also received the lowest aggregate score when combining all ordinal rankings.

We asked a nearly identical question to the one Rethink Priorities asked and received similar results, with AI also receiving the lowest ranking in their survey. The only difference was that in their survey, “climate change” ranked above “asteroid impact” as the second most concerning existential risk. This is possibly because our question grouped all natural disasters into a single option, citing both an asteroid collision and supervolcanoes as examples.

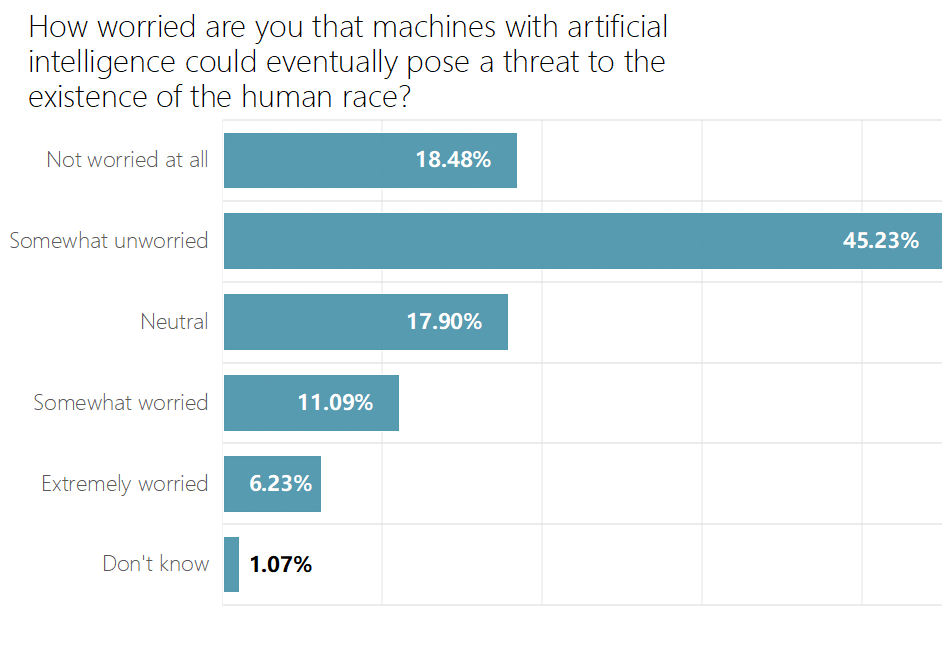

Risk of human extinction from AI

A notable minority of respondents were concerned about the possibility of AI threatening the existence of the human race. 17.32% agreed or strongly agreed with the possibility, while 63.71% disagreed or strongly disagreed, and 18.97% remained neutral or uncertain.

We translated this question very closely from a YouGov poll of 1000 U.S. adults. Results from the YouGov poll suggested high estimates of the likelihood of extinction caused by AI: 17% reported it ‘very likely’ while an additional 27% reported it ‘somewhat likely’. When Rethink Priorities replicated the survey question, they received lower estimates, but they chose to make their questions time-bound (e.g., the likelihood of AI causing human extinction in the next 10 or 50 years). Because we believed most students would not draw a meaningful distinction between different time ranges, we left the question temporally unbounded.

Could AI be more intelligent than humans?

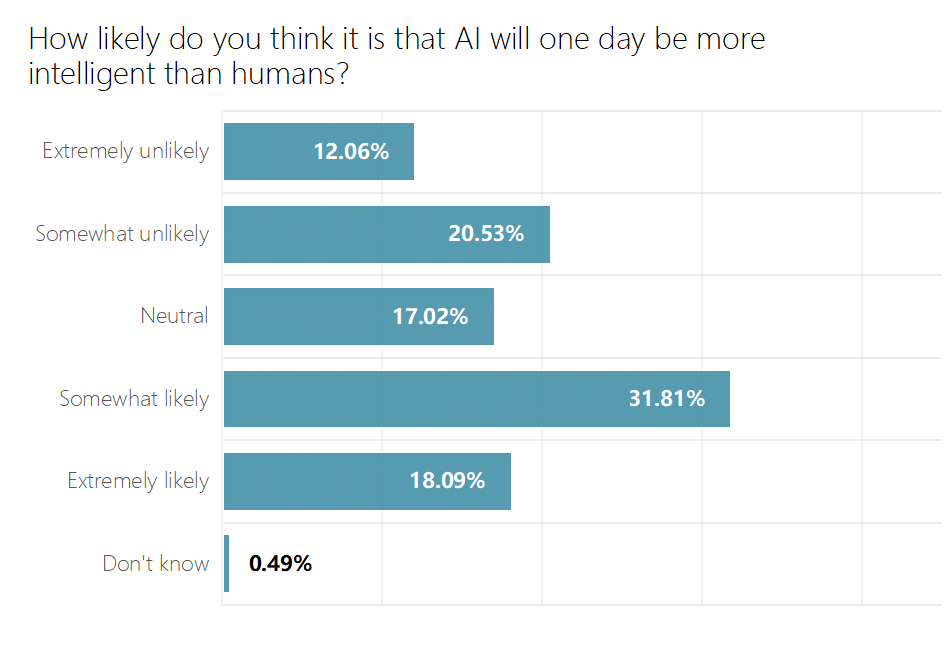

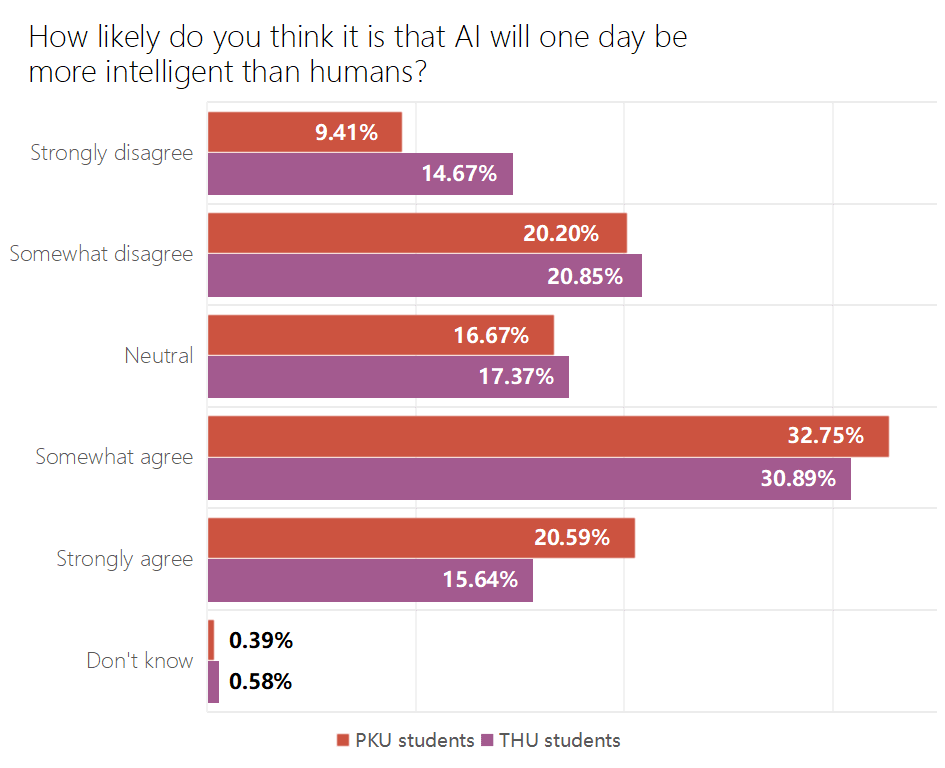

50% of respondents agreed or strongly agreed with the claim that AI will eventually be more intelligent than humans, while 32.59% disagreed and 17.51% remained neutral or uncertain.

When Rethink Priorities asked this question, they estimated that 67% of US adults think it is moderately likely, highly likely, or extremely likely that AI will become more intelligent than people. The Center for Long-Term AI asked a related question specifically to Chinese young and middle-aged AI-related students and scholars but chose to word it as “Strong AI” (强人工智能), a catch-all term combining Artificial General Intelligence, Human-Level AI, and Superintelligence. Of their participants, 76% believed Strong AI could be achieved, although most participants believed Strong AI could not be achieved before 2050, and around 90% believed it would be “after 2120.” Both surveys show a higher reported likelihood of smarter-than-human intelligence than our results.

Given the more scientific orientation and reported higher familiarity with AI among Tsinghua University students, we analyzed the responses across universities. Our findings indicate that Tsinghua students exhibited a lower tendency to believe AI would eventually surpass human intelligence levels compared to their counterparts at Peking University.

Most concerning risks posed by AI

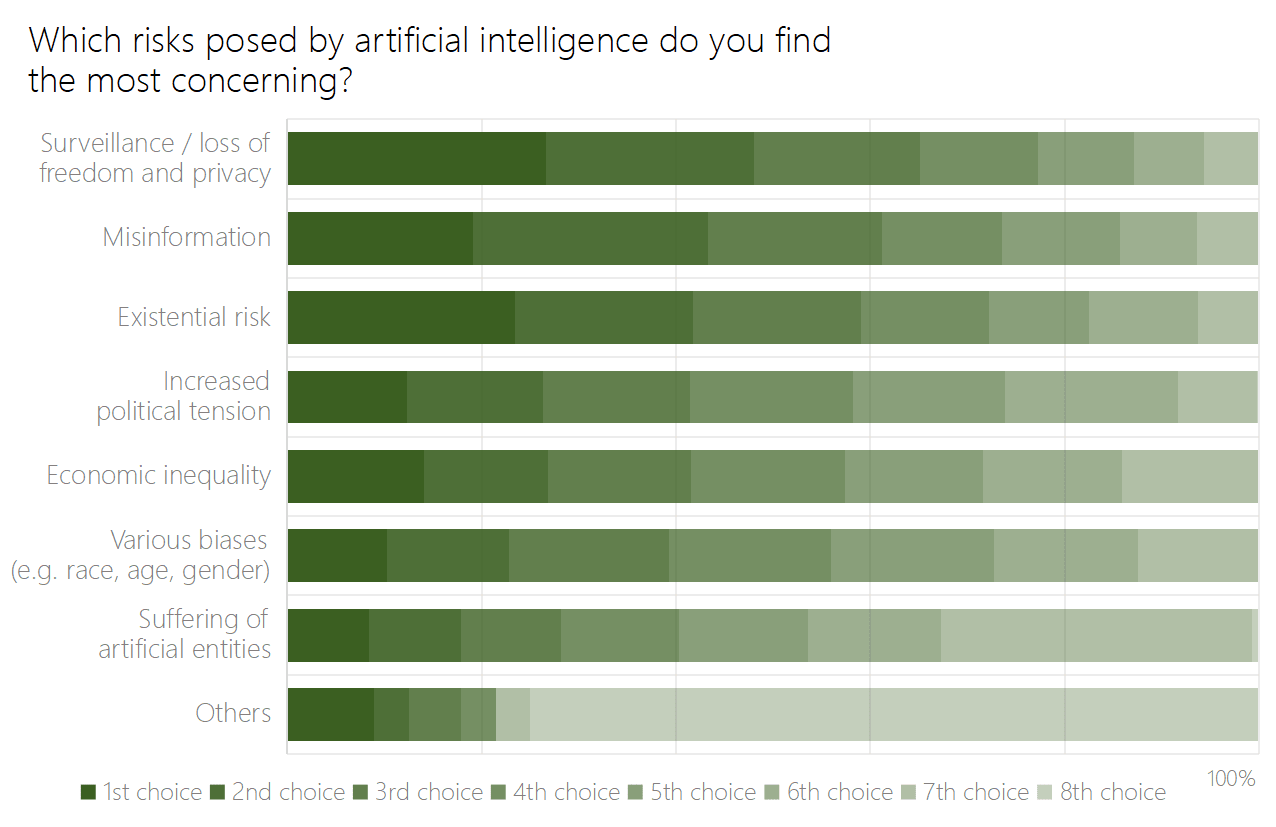

When asked which potential risks posed by AI are the most concerning, surveillance proved to be the most popular answer.

The use of AI for surveillance received the most first-place votes of the available options (26.64%). This was followed by existential risk, misinformation, wealth inequality, increased political tension, and various issues related to bias (e.g., race, age, or gender), with the welfare of AI entities receiving the fewest first-place votes.

When aggregating ordinal rankings, surveillance also received the highest total score, but was then followed by misinformation, existential risk, increased political tension, wealth inequality, various issues related to bias, with the welfare of AI entities receiving the lowest total score.
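
To see how a first-place tally and an aggregate score can order the same options differently, here is a minimal sketch using a Borda-style count. The write-up does not say which scoring rule produced the aggregate scores, so the Borda rule here is an assumption, and the ballots are hypothetical toy data rather than survey responses. The function names `first_place_counts` and `borda_scores` are illustrative.

```python
from collections import defaultdict


def first_place_counts(rankings):
    """Count how often each option is ranked first."""
    counts = defaultdict(int)
    for ranking in rankings:
        counts[ranking[0]] += 1
    return dict(counts)


def borda_scores(rankings):
    """Borda-style aggregate: in a k-item ranking (most to least concerning),
    the option at zero-based position i earns k - i points."""
    scores = defaultdict(int)
    for ranking in rankings:
        k = len(ranking)
        for position, option in enumerate(ranking):
            scores[option] += k - position
    return dict(scores)


# Hypothetical toy ballots (NOT survey data), each ordered from most to
# least concerning.
ballots = [
    ["surveillance", "misinformation", "existential risk"],
    ["surveillance", "misinformation", "existential risk"],
    ["surveillance", "misinformation", "existential risk"],
    ["existential risk", "misinformation", "surveillance"],
    ["existential risk", "misinformation", "surveillance"],
    ["misinformation", "surveillance", "existential risk"],
    ["surveillance", "existential risk", "misinformation"],
]

first = first_place_counts(ballots)
total = borda_scores(ballots)
print(first)  # surveillance 4, existential risk 2, misinformation 1
print(total)  # surveillance 16, misinformation 14, existential risk 12
```

In this toy example, existential risk places second on first-place votes but third on aggregate score, because misinformation is a consistent second choice. That is the same kind of reordering seen between the two tallies reported here.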

China has actively invested in surveillance in recent years, bolstering extensive CCTV and digital monitoring systems in its major cities. Although our results don’t show how students view the upsides of AI surveillance, they do suggest students are concerned about the potential downsides.

Reflection

Little survey data from the PRC assesses how citizens feel about the risks of AI. It is, therefore, difficult to know to what extent our survey of Tsinghua and Peking University students aligns with the larger Chinese population. However, our results suggest that students are broadly less concerned about the risks of AI than people in the United States and Europe.

Chinese students' optimism about the benefits of AI aligns more closely with sentiments found in the developing nations of the Global South, which tend to view the technology's potential in a more positive light overall. In terms of socioeconomic conditions and geopolitical standing, China currently finds itself between the developed and developing world, and it will be interesting to see how this shapes its views on AI in the coming years.

Among the major players in the global AI race, China's stance on addressing the risks of the technology remains the least clear. While some argue that the PRC does not take AI safety as seriously as Western nations, the country has taken notable steps to address these concerns in recent years.

Last year, the PRC co-signed the Bletchley Declaration at the UK AI Safety Summit, calling for enhanced international cooperation on developing safe and responsible AI systems. Within the PRC, the government has implemented regulations restricting the use of technologies like deepfakes and harmful recommendation algorithms. The Cyber Security Association of China (CSAC) announced an AI safety and security governance expert committee in October 2023. Major tech hubs like Shanghai, Guangdong, and Beijing (the last of which hosts over half of China's large language models) have initiated efforts to establish benchmarks and assessments for evaluating the safety of AI applications. These measures indicate China's recognition of the need to manage risks as AI capabilities rapidly advance, though the full extent and effectiveness of China's AI safety initiatives remain to be seen.

It's important to note that our survey occurred around one year later than many of the other studies we've used for comparative data. In that time, the field of AI has advanced significantly. Even from the date the survey was initially administered (April 18-20, 2024) to when this write-up was published (August 23), major developments have unfolded - such as the release of OpenAI's multimodal GPT-4o model and the first bilateral talk on AI between the U.S. and China.

Given the recognized absence of data on how Chinese citizens perceive the risks of AI, we hope that future research will further investigate perspectives in China. It could be interesting to investigate how different age demographics, urban and agrarian populations, or people working in different industries in the PRC feel about AI.

Methodology

To administer the survey, we leveraged the “Treehole (树洞)” online platforms, which are exclusive to each university and can be accessed only by current students. Respondents used their WeChat IDs to receive monetary compensation (a range of 3-20 RMB ($0.42-$2.80) per participant, randomly assigned). Respondents were also asked to state their university, and their detected IP addresses were used to mark responses from outside the two universities as invalid. These measures prevented multiple responses from single accounts and responses from bots.

One key uncertainty, however, is whether the gender demographics of the survey accurately reflect the composition of Tsinghua and PKU. Survey respondents reported a gender breakdown of 59.73 percent male and 40.27 percent female. Neither university publicly discloses its official gender demographics, so definitively comparing the survey demographics to the general population is not possible. Analysis of indirect sources, however, such as departmental announcements, blog posts, and other websites led us to conclude that the likely gender ratio is approximately 60 percent male, 40 percent female. Using this as our baseline probability assumption before conducting the survey, we found that the results aligned with this estimated ratio. As a result, we believed post-stratification of the dataset was not necessary.
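
The sketch below illustrates why post-stratification would have changed little here. It assumes the estimated 60/40 ratio holds for the student population and reuses the gender-specific agreement rates from the "more good than harm" question (82.9% of male and 75.8% of female respondents). The variable names are illustrative, and this is not the authors' actual analysis code.

```python
# Gender shares reported by survey respondents.
sample_share = {"male": 0.5973, "female": 0.4027}
# Assumed population shares (the estimated ~60/40 ratio, not official data).
population_share = {"male": 0.60, "female": 0.40}

# Post-stratification weight for each group: population share / sample share.
weights = {g: population_share[g] / sample_share[g] for g in sample_share}

# Share agreeing that AI will do more good than harm, by gender.
agree_rate = {"male": 0.829, "female": 0.758}

# The unweighted estimate uses the sample shares; the weighted estimate uses
# the population shares (equivalently, sample share times weight).
unweighted = sum(sample_share[g] * agree_rate[g] for g in sample_share)
weighted = sum(sample_share[g] * weights[g] * agree_rate[g] for g in sample_share)

print({g: round(w, 4) for g, w in weights.items()})
print(f"unweighted: {unweighted:.4f}, weighted: {weighted:.4f}")
```

Both weights come out within about one percent of 1, and the reweighted estimate moves by only a few hundredths of a percentage point. This holds only if the assumed 60/40 baseline is accurate.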

Finally, a note on the translation process. Given the significant structural differences between Mandarin Chinese and English, we could not translate all terms literally. For example, "transformative AI" was translated as "前沿人工智能" (frontier AI), a more commonly used phrase conveying a similar meaning. However, we structured the framing of each question so that the answer someone would give in either language would be the same, attempting to ensure language-independent responses despite disparities in phrasing. You can find the Chinese version of the report here. 调查:中国顶尖大学的学生如何看待人工智能风险?

What is the base rate for Chinese citizens saying on polls that the Chinese government should regulate X, for any X?

Great question! I wish I knew the answer. Of all the Chinese surveys we looked through, to my knowledge, that question was never asked. (I think it might be a bit of a taboo.)

Nice one!

A nitpick (h/t @Agustín Covarrubias): the English translation of the US-China cooperation question ('How much do you agree with this statement: "AI will be developed safely without cooperation between China and the US"?') reads as ambiguous.

ChatGPT and Gemini suggest the original can be translated as 'Do you agree that the safe development of artificial intelligence does not require cooperation between China and the United States?', which would strike me as less ambiguous.

Good point. Yeah, I think the question was worded better in Chinese. The question, literally translated, is: "Do you agree or disagree that the safe development of artificial intelligence requires cooperation between China and the United States?" (你是否赞同人工智能的安全发展需要中美两国的合作?) This is essentially your translation, but a bit different in that it frames the question as "requires" instead of "does not require," which anchors the respondent a bit differently.

Just wanted to drop in this study: Harvard Undergraduate Survey on Generative AI since it seems somewhat related/interesting :)

Excellent stuff—great facts to anchor on!

Executive summary: A survey of elite Chinese university students found they are generally optimistic about AI's benefits, strongly support government regulation, and view AI as less of an existential threat compared to other risks, though they believe US-China cooperation is necessary for safe AI development.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

I teach math to mostly Computer Science students at a Chinese university. From my casual conversations with them, I've noticed that many seem to be technology optimists, reflecting what I perceive as the general attitude of society here.

Once, I introduced the topic of AI risk (as a joking topic in a class) and referred to a study (possibly this one: AI Existential Risk Survey) that suggests a significant portion of AI experts are concerned about potential existential risks. The students' immediate reaction was to challenge the study's methodology.

This response might stem from the optimism fostered by decades of rapid technological development in China, where people have become accustomed to technology making things "better."

I also feel that there could be many other, more immediate survival problems in society (jobs, equality, etc.) that make this problem seem very far off to them. But I do know of a friend who is trying to work on AI governance and raise more awareness.