Supported by Rethink Priorities

Author's note: this post covers the past two weeks, with a karma bar of 80+ instead of the usual 40+. Covid knocked me out a bit so playing catch up! Future posts will be back to the usual schedule and karma requirement.

This is part of a weekly series - you can see the full collection here. The first post includes some details on purpose and methodology.

If you'd like to receive these summaries via email, you can subscribe here.

Podcast version: prefer your summaries in podcast form? A big thanks to Coleman Snell for producing these! Subscribe on your favorite podcast app by searching for 'Effective Altruism Forum Podcast'.

Top Readings / Curated

Designed for those without the time to read all the summaries. Everything here is also within the relevant sections later on so feel free to skip if you’re planning to read it all.

by Vadim Albinsky

The Easterlin Paradox states that happiness varies with income across countries and between individuals, but not significantly with a country’s income as it changes over time. A 2022 paper attempts to verify this with recent data, and finds it holds.

The post author challenges the research behind that paper on two counts:

1. The effect on happiness from increasing a country’s GDP over time is disregarded as small, but is big enough to be meaningful (similar size to effects seen from GiveDirectly’s cash transfers). If we run the same analysis for health, pollution, or welfare measures, GDP has the largest effect.

2. The paradox largely disappears with only minor changes in methodology (eg. marking one more country as a ‘transition’ country or using 2020 data instead of 2019). Both bring the effect size closer to that of variance between countries / individuals than to zero.

Winners of the EA Criticism and Red Teaming Contest

by Lizka, Owen Cotton-Barratt

31 winners were announced out of 341 entries. The top prizes went to ‘A critical review of GiveWell's 2022 cost-effectiveness model’ and ‘Methods for improving uncertainty analysis in EA cost-effectiveness models’ by Alex Bates (Froolow), ‘Biological Anchors external review’ by Jennifer Lin and ‘Population Ethics without Axiology: A Framework’ by Lukas Gloor.

The post summarizes each of the winning entries, as well as discussing what the judging panel liked about them and what changes they would have wanted to see.

We all teach: here's how to do it better

by Michael Noetel

Self-determination theory suggests we can best build intrinsic motivation by supporting people’s needs for autonomy, competence, and relatedness. This is in contrast to using persuasion, or appealing to guilt or fear. We can use this to improve community building and reduce cases where people ‘bounce off’ EA ideas or where engaged EAs burn out.

The post explores concrete ways to apply this theory - for instance, building competence by focusing on teaching EA-relevant skills (such as forecasting or career planning) over sharing knowledge, or building autonomy by gearing arguments towards a person’s individual values.

The author explores many more techniques, sourced from educational literature, and has created a toolkit summarizing the meta-analytic evidence for what works in adult learning. The advice is a mix of intuitive and counter-intuitive eg. avoid jargon and keep it simple (common advice), but also avoid too many memes / jokes - they can distract from learning (less common advice).

EA Forum

Philosophy and Methodologies

by Lizka

Linkpost to Open Philanthropy’s definition of and advice on reasoning transparency. Applying reasoning transparency as a writer helps the reader know how to update their view based on your analysis. The top concrete recommendations are to open with a linked summary of key takeaways, indicate which considerations are most important to these, and indicate throughout how confident you are in these considerations and what support you have for them.

Getting on a different train: can Effective Altruism avoid collapsing into absurdity?

by Peter McLaughlin

Utilitarianism and consequentialism, if applied universally, can lead to conclusions most EAs don’t endorse - for instance, preferring that many people endure torturous suffering if it means a very large number of lives barely worth living come into existence. This is primarily due to scale sensitivity, and the author argues there is no principled place to ‘get off the train’ / stop accepting increasingly challenging conclusions, as they all follow from that premise. Scale sensitivity + utilitarianism means enough utility outweighs all other concerns.

The author suggests that we should throw away the idea that this theory applies universally ie. that you can compare all situations on the same measure (utility), and implement limits to it. This means we have a less theoretically robust but more practically robust practice. This should be grounded in situational context and history. Exploring these limits explicitly is important for effective altruism’s image, so others don’t think we accept absurd-seeming conclusions. It’s also important for making decisions in anomalous cases.

Optimism, AI risk, and EA blind spots

by Justis

Argues that EAs are over-optimistic about our ability to solve given problems, and this influences funding decisions towards AI safety. The author notes that if we count only the people alive today, and multiply a 6% chance of AI catastrophe by a 0.1% chance that there exist interventions we can identify and implement to solve it, we get an expected value of 480K lives saved. This is only competitive with AMF if it costs <~$2B to do.
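The arithmetic behind the 480K figure can be sketched as below. This is an illustrative reconstruction rather than the author's own calculation: the 8 billion world population is an assumption (the summary only says "lives alive today"), and the implied cost per life is simply what the ~$2B threshold works out to under that assumption.

```python
# Illustrative reconstruction of the expected-value estimate in the summary.
# WORLD_POPULATION is an assumption; the two probabilities and the $2B
# ceiling are the figures quoted in the summary.
WORLD_POPULATION = 8_000_000_000      # assumed: roughly 8 billion people alive today
P_AI_CATASTROPHE = 0.06               # 6% chance of AI catastrophe
P_TRACTABLE = 0.001                   # 0.1% chance a workable intervention exists
BUDGET_CEILING_USD = 2_000_000_000    # the ~$2B threshold

# Expected lives saved = population x P(catastrophe) x P(we can fix it)
expected_lives_saved = WORLD_POPULATION * P_AI_CATASTROPHE * P_TRACTABLE

# Cost per expected life saved if the whole effort costs exactly the ceiling
implied_cost_per_life = BUDGET_CEILING_USD / expected_lives_saved

print(f"Expected lives saved: {expected_lives_saved:,.0f}")
print(f"Implied cost per life at $2B: ${implied_cost_per_life:,.0f}")
```

Under these assumptions, the $2B ceiling corresponds to a cost of a few thousand dollars per expected life saved, which is the sense in which it is being compared with AMF.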

The author also addresses longtermist arguments for AI spending, primarily by assuming that even if we get safe AGI, there’s a good chance another technology destroys us all within a thousand years - so we shouldn’t count large numbers of future generations in our calculations. They believe AI risk is important and underfunded globally, but overrated in EA and not obviously better than other interventions like AMF.

Object Level Interventions / Reviews

by Vadim Albinsky

The Easterlin Paradox states that happiness varies with income across countries and between individuals, but not significantly with a country’s income as it changes over time. A 2022 paper attempts to verify this with recent data, and finds it holds.

The post author challenges the research behind that paper on two counts:

1. The effect on happiness from increasing a country’s GDP over time is disregarded as small, but is big enough to be meaningful (similar size to effects seen from GiveDirectly’s cash transfers). If we run the same analysis for health, pollution, or welfare measures, GDP has the largest effect.

2. The paradox largely disappears with only minor changes in methodology (eg. marking one more country as a ‘transition’ country or using 2020 data instead of 2019). Both bring the effect size closer to that of variance between countries / individuals than to zero.

Switzerland fails to ban factory farming – lessons for the pursuit of EA-inspired policies?

by Eleos Arete Citrini

On September 25th the Swiss electorate had the opportunity to vote to abolish factory farming, as a result of an initiative launched by Sentience Politics. Participation was 52%, of which 37% voted in favor. In contrast, predictions from Metaculus and Jonas Vollmer (who helped launch the initiative) were 46% and 44% respectively. The author notes this means putting our theories into practice may be more challenging than we thought.

A top comment from Jonas also notes that in Switzerland a result of 30-50% is usually considered to lend symbolic support rather than be a complete failure, though it is far from passing (which requires >50% in each canton / state).

Why we're not founding a human-data-for-alignment org

by LRudL, Mathieu Putz

Author’s tl;dr: we (two recent graduates) spent about half of the summer exploring the idea of starting an organisation producing custom human-generated datasets for AI alignment research. Most of our time was spent on customer interviews with alignment researchers to determine if they have a pressing need for such a service. We decided not to continue with this idea, because there doesn’t seem to be a human-generated data niche (unfilled by existing services like Surge) that alignment teams would want outsourced.

Samotsvety Nuclear Risk update October 2022

by NunoSempere, Misha_Yagudin

Updated estimates from forecasting group Samotsvety on the likelihood of Russian use of nuclear weapons in Ukraine in the next year (16%) and the likelihood of use beyond Ukraine (1.6%) and in London specifically (0.36%). They also include conditional probabilities (ie. the likelihood of the latter statements, given nuclear weapons are used in Ukraine) and probabilities for monthly timeframes.
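As a rough illustration of how headline, conditional, and monthly probabilities relate, here is a minimal sketch. It is not Samotsvety's method and does not reproduce their published conditional or monthly figures: it assumes the later events only happen if nuclear weapons are first used in Ukraine, and that risk is spread evenly across the twelve months. Both function names are hypothetical.

```python
# Headline annual probabilities quoted in the summary.
P_USE_IN_UKRAINE = 0.16       # Russian nuclear use in Ukraine in the next year
P_USE_BEYOND_UKRAINE = 0.016  # nuclear use beyond Ukraine
P_LONDON_HIT = 0.0036         # London specifically


def implied_conditional(p_event: float, p_condition: float) -> float:
    """P(event | condition), assuming the event only occurs if the condition does."""
    return p_event / p_condition


def annual_to_monthly(p_annual: float) -> float:
    """Monthly probability under the simplifying assumption of a constant monthly risk."""
    return 1 - (1 - p_annual) ** (1 / 12)


p_beyond_given_ukraine = implied_conditional(P_USE_BEYOND_UKRAINE, P_USE_IN_UKRAINE)
p_london_given_ukraine = implied_conditional(P_LONDON_HIT, P_USE_IN_UKRAINE)
p_monthly_ukraine = annual_to_monthly(P_USE_IN_UKRAINE)

print(f"P(beyond Ukraine | use in Ukraine): {p_beyond_given_ukraine:.1%}")
print(f"P(London hit | use in Ukraine): {p_london_given_ukraine:.2%}")
print(f"Monthly P(use in Ukraine), constant risk: {p_monthly_ukraine:.2%}")
```

The forecasters' own conditional and monthly estimates may differ from these, since they need not share either simplifying assumption.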

Comments / reasoning from forecasters are also included. These comments tend to note that Putin is committed to conquering Ukraine, and forecasters have previously underestimated his willingness to take risks. A tactical nuke may be used with the aim of scaring Ukraine or dividing NATO. However, it is unlikely to work in Russia’s favor, so Putin most likely still won’t use one.

Overreacting to current events can be very costly

by Kelsey Piper

During Covid, EAs arguably overreacted in terms of reducing their personal risk of getting infected (costing productivity and happiness). The same seems likely with the nuclear threat - if Putin launched tactical nuclear weapons at Ukraine, EAs may leave major cities and spend considerable effort and mindspace on staying up to date with the situation. The hit to productivity from this could outweigh the benefit of the reduced personal risk. The author suggests that if you do move, you move to a smaller city rather than somewhere entirely remote, prioritize good internet access, explicitly value your productivity in your decisions, and don’t pressure others to act more strongly than they endorse.

Opportunities

Winners of the EA Criticism and Red Teaming Contest

by Lizka, Owen Cotton-Barratt

31 winners were announced out of 341 entries. The top prizes went to ‘A critical review of GiveWell's 2022 cost-effectiveness model’ and ‘Methods for improving uncertainty analysis in EA cost-effectiveness models’ by Alex Bates (Froolow), ‘Biological Anchors external review’ by Jennifer Lin and ‘Population Ethics without Axiology: A Framework’ by Lukas Gloor.

The post summarizes the winning entries, as well as discussing what the judging panel liked about them and what changes they would have wanted to see.

What I learned from the criticism contest

by Gavin

A judge from the contest shares the most common themes in entries: academic papers that address EA as it was before longtermism and systemic work; cause prioritization arguments (including that EAs underestimate uncertainty in cause prioritization); and suggestions that EA become less distinctive and more ‘big tent’, either split or better combine areas such as neartermism and longtermism, or rename itself. They believe the underestimation of uncertainty in cause prioritization is the fairest critique here.

They also share their top 5 posts for various categories, including changing their mind, improving EA, prose, rigor, and those they disagreed with.

Announcing Charity Entrepreneurship’s 2023 ideas. Apply now.

by KarolinaSarek, vicky_cox, Akhil, weeatquince

Charity Entrepreneurship will run two cohorts in 2023: Feb/Mar and Jul/Aug. Apply by October 31st 2022 if you’d like to found a charity via their incubation program.

The focus of the Feb/Mar program will be launching orgs focused on animal welfare policy, increased tobacco taxes, and road traffic safety. Jul/Aug will focus on biosecurity/health security and scalable global health & development ideas.

Announcing Amplify creative grants

by finm, luca, tobytrem

Applications are open for grants of $500 - $10K for podcasts and other creative media projects that spread ideas to help humanity navigate this century. They are particularly excited about projects which have specific and neglected audiences in mind, and are less likely to fund very general introductions to EA.

Grow your Mental Resilience to Grow your Impact HERE - Introducing Effective Peer Support

by Inga

Applications are open for this program, which will involve 4-8 weeks of 2hr weekly sessions learning psychological techniques such as CBT or mindfulness, and applying these to group discussions and 1:1s with other EAs in the program. The idea is to boost productivity, accountability, and help you achieve your goals.

Community & Media

William MacAskill - The Daily Show

by Tyner

Linkpost to Will’s interview (12 minutes). Author’s tl;dr: “He primarily discusses ideas of most impactful charities, billionaire donors, and obligations to future people using the phrase "effective altruism" a bunch of times. [...] It went really well!”

Smart Movements Start Academic Disciplines

by ColdButtonIssues

Suggests EA fund the creation of a new academic discipline - welfare economics, the use of economic tools to evaluate aggregate well-being. This would make it easier for students to study this cross-disciplinary area and would spread the philosophy that others’ welfare matters a lot. Other success stories of establishing academic disciplines include gender studies, which is now common (and institutionally funded) even in conservative colleges. Economics is a very popular major, meaning that if the funding were there to kick this off, the courses would likely get plenty of students and the discipline could establish itself.

EA forum content might be declining in quality. Here are some possible mechanisms.

by Thomas Kwa

14-point list of potential reasons forum content might be declining in quality. Most fall into one of the following categories: changes in who is writing (eg. newer EAs), we’re upvoting the wrong content, lower bars for posting, the good ideas have already been said, or the quality isn’t actually declining.

We all teach: here's how to do it better

by Michael Noetel

Self-determination theory suggests we can best build intrinsic motivation by supporting people’s needs for autonomy, competence, and relatedness. This is in contrast to using persuasion, or appealing to guilt or fear. We can use this to improve community building and reduce cases where people ‘bounce off’ EA ideas or where engaged EAs burn out.

The post explores concrete ways to apply this theory - for instance, building competence by focusing on teaching EA-relevant skills (such as forecasting or career planning) over sharing knowledge, or building autonomy by gearing arguments towards a person’s individual values.

The author explores many more techniques, sourced from educational literature, and has created a toolkit summarizing the meta-analytic evidence for what works in adult learning. The advice is a mix of intuitive and counter-intuitive eg. avoid jargon and keep it simple (common advice), but also avoid too many memes / jokes - they can distract from learning (less common advice).

High-Impact Psychology (HIPsy): Piloting a Global Network

by Inga

HIPsy is a new org aiming to help people engaged with psychology or mental health maximize their impact. They are focused on 3 top opportunities:

1. Skill bottlenecks that psychology professionals can help with (eg. management, therapy)

2. Making it easier for psychology professionals to enter EA

3. Boosting impact of psychology-related EA orgs via collaboration and expertise

They are running a pilot phase to mid-Nov and are looking for collaborators, funders, or those with certain skill sets (online content creation, running mentorship programs, hosting events, web-dev, community-building, running surveys, research, and cost-effectiveness analyses).

Assessing SERI/CHERI/CERI summer program impact by surveying fellows

by LRudL

Stanford, Switzerland and Cambridge all run existential risk summer fellowships where participants are matched with mentors and do x-risk research for 8-10 weeks. This post presents results from the post-fellowship surveys.

Key findings included:

- Networking, learning to do research, and becoming a stronger candidate for academic (but not industry) jobs topped the list of benefits.

- Most fellows reported a PhD, AI technical research, or EA / x-risk community building as their next steps.

- Estimated probability of pursuing an x-risk career stays constant from the start of the program at ~80%, as does comfort with research.

- Remote participants felt less involved and planned to keep up fewer relationships from the program (~2 vs. ~5). However, being partially in person gave almost all of the in-person benefits.

Invisible impact loss (and why we can be too error-averse)

by Lizka

It’s easier to notice mistakes in existing work than to feel bad about an impact that didn’t happen. This can make us too error-averse / likely to choose a good-but-small thing over a big-but-slightly-worse thing. The author gives the example of doubling the size of an EA Global at the last minute - lots of potential for mistakes and issues, but a big loss of impact in not doing it, as 500 people would miss out.

The author suggests counteracting this by having a culture of celebrating exciting work, using the phrase ‘invisible impact loss’ in your thinking, and fighting against perfectionism.

EA is Not Religious Enough (EA should emulate peak Quakerism)

by Lawrence Newport

Quakers are a minority religious group that historically was successful in positive ways across social, industrial and policy lines. For instance, they were the first religious group in the thirteen colonies to oppose slavery (in 1688) and the chief drivers behind the policy that ended the British slave trade in 1807. They achieved an exemption from the requirement that marriages be conducted by a priest, which still stands in law today, and headed businesses central to the industrial revolution. They also tended to be ‘ahead of the moral curve’.

The author argues we should study them closely and emulate parts that might apply to EA, because there are substantial similarities. Quakers and EAs: aren’t intrinsically political, are built on debate and openness to criticism, and are minorities.

Didn’t Summarize

Briefly, the life of Tetsu Nakamura (1946-2019) by Emrik

Ask (Everyone) Anything — “EA 101” by Lizka (open thread)

LW Forum

AI

7 traps that (we think) new alignment researchers often fall into

by Akash, Thomas Larsen

Suggests new alignment researchers should:

- Realize the field is new and your contributions are valuable

- Contribute asap - before getting too influenced by existing research

- Challenge existing framings and claims - they’re not facts

- Only work on someone else’s research agenda if you understand why it helps reduce x-risk

- Not feel discouraged about failing to understand something - it could be wrong or poorly explained

- Learn linear algebra, multivariable calculus differentiation, probability theory, and basic ML. After that, stop studying - dive in and learn as you go.

- Keep the end goal of ‘reduce x-risk’ in mind and linked to all your actions.

- Examine your intuitions, and see if they lead to testable hypotheses

Inverse Scaling Prize: Round 1 Winners

by Ethan Perez, Ian McKenzie

4 winners are announced for identifying important tasks where large language models do worse than small ones. Winners were:

- Understanding negation in multi-choice questions

- Repeating back quotes as written

- Redefining math (eg. the user asks ‘pi is 462. Tell me pi’s first digit’)

- Hindsight neglect ie. assessing if someone made the right decision based on their info at the time (even if it worked out wrong).

The second round is coming soon. Join the Slack for details.

Why I think strong general AI is coming soon

by porby

The author has a median AGI timeline of 2030. This is because:

1. We captured a lot of intelligence easily - transformers are likely not the best architecture, and have to run off context-less input streams. Despite this they perform at a superhuman level on many tasks.

2. We’re likely to capture more easily - the field is immature and still gaining quickly (eg. new SOTA approaches can come from hunches). Chinchilla (2022) pointed us toward data as the new bottleneck over compute, which should result in more acceleration as we focus there.

3. The world looks like one with short timelines - investment is rapidly accelerating, and biological anchors set achievable upper bounds on the compute required. We are likely to need less than those bounds, because we are optimizing more.

Warning Shots Probably Wouldn't Change The Picture Much

by So8res

After Covid, many EAs attempted to use the global attention and available funding to influence policy towards objectives like banning gain-of-function research. The author argues that the lack of success here predicts a similar lack of success if we were to hope for government action off the back of AI ‘warning shots’.

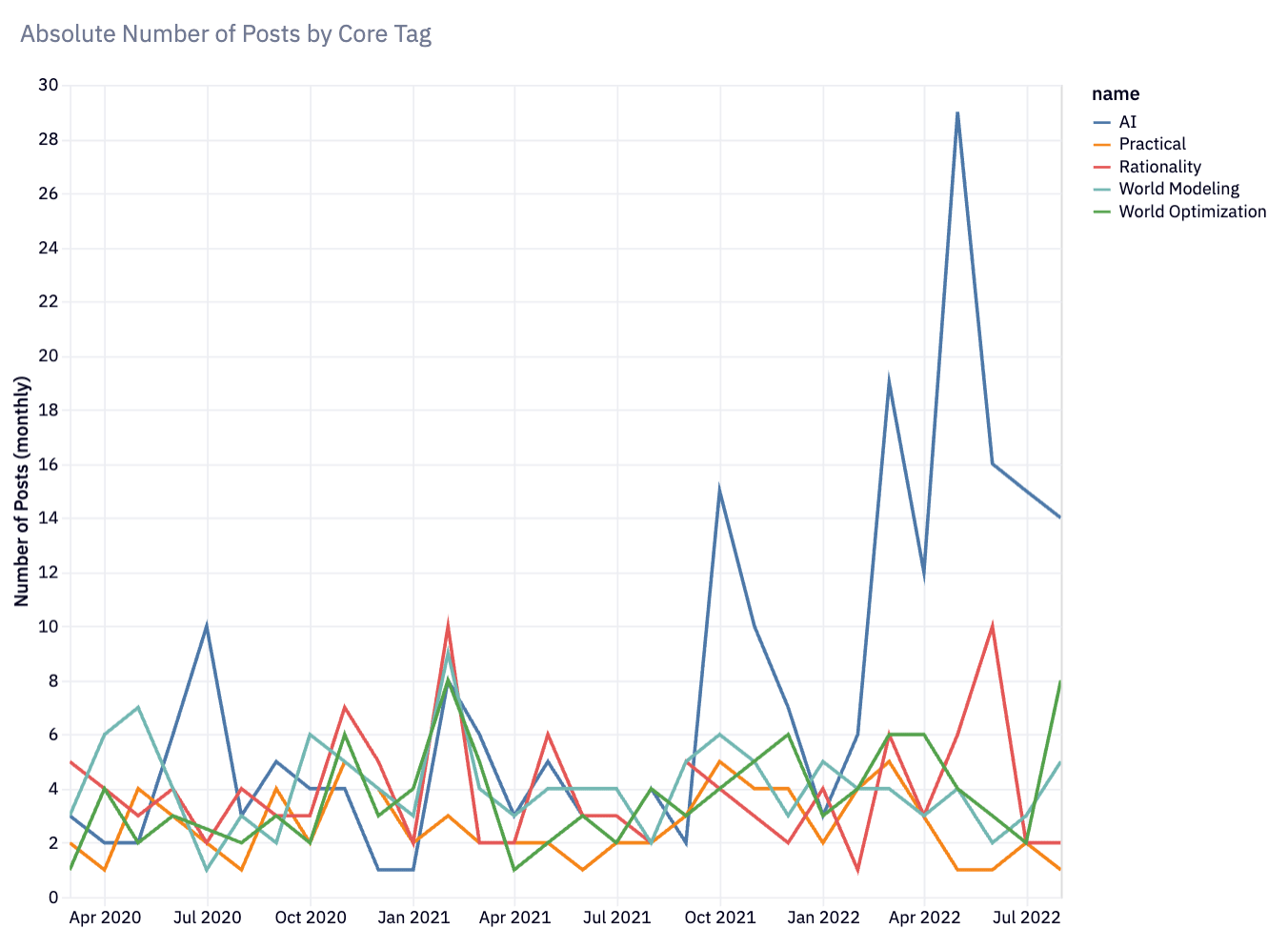

So, geez there's a lot of AI content these days

by Raemon

Since April 2022, AI-related content has dominated the LW forum - going from ~25% in 2021 to close to almost 50%. This is driven by more AI posts, other topics stayed constant. See graph (AI tagged posts is the blue line):

The author thinks a balance of other content, and rationality in particular, is important to reduce bounces off LW and improve thinking on AI. The forum team is taking actions aimed at this, including improving rationality onboarding materials, spotlighting sequences, and recommending rationality and world modeling posts. They’re also asking for newer members and subject matter experts to consider writing posts on aspects of the world (eg. political systems) and experienced members to help modernize rationality sequences by writing on their thinking processes.

Research and Productivity Advice

How I buy things when Lightcone wants them fast

by jacobjacob

First, understand how much of the timeline is taken up by each of: other customers’ orders ahead of yours, manufacturing, and shipping. Then do any of:

- Reduce other orders ahead of yours by offering a rush fee, checking other branches, or asking other customers directly if you can skip ahead of them in the queue (if you know who they are).

- Reduce production time by offering to pay for overtime, asking if they wait for multiple orders to start and paying them not to, or sourcing blocked components for them.

- Reduce delivery time by picking up yourself or paying for air freight.

- Get creative - check Craigslist, ask if there are already some en route for another customer or any returned or defective items, or rent the item.

Self-Control Secrets of the Puritan Masters

by David Hugh-Jones

17th century Puritans practiced self-control by:

- Using strict routines, often focused around introspection eg. daily intention-setting, diary writing and ‘performance reviews’.

- Following extensive rules (what to eat, what to wear, which sports to play etc.)

This was made easier because it was done as a community, and because they had a large aim in mind (salvation).

Other

The Onion Test for Personal and Institutional Honesty

by chanamessinger, Andrew_Critch

Author’s summary: “You or your org pass the “onion test” for integrity if each layer hides but does not mislead about the information hidden within. When people get to know you better, or rise higher in your organization, they may find out new things, but should not be shocked by the types of information that were hidden.”

For instance, saying “I usually treat my health info as private” is valid if the next layer of detail is “I have gout and diabetes” but not if the next layer is “I operate a cocaine dealership” (because this is not the type of thing indicated by the first layer). Show integrity by telling people the general type of things you’re keeping private.

Petrov Day Retrospective: 2022

by Ruby

On LessWrong, Petrov Day was celebrated by having a big red button that can bring the site down. It’s anonymous to use and opens up to progressively more users (based on karma score) throughout the day. This year, the site went down once due to a bug in the code that allowed a 0 karma user to press it, and then once again when the karma requirement was down to 200 - 21 hours into the day, a better result than predicted by Manifold prediction markets.

This Week(s!) on Twitter

AI

AlphaTensor discovers novel algorithms for matrix multiplication that are faster than the previous SOTA algorithm discovered in 1969. This was published on the front page of Nature. (tweet)

Imagen Video was released, which converts text prompts into videos. (tweet)

Elicit has a new beta feature which summarizes top papers into a written answer to your question, and cites sources for each statement. (tweet)

The EU created a bill to make it easier for people to sue a company for harm caused to them by that company’s AI. (tweet)

Forecasting

Clearer Thinking launched ‘Transparent Replications’, which runs replications of newly-published psychology studies randomly selected from top journals. The Metaculus community will forecast whether they’ll replicate. (tweet)

Similar work is happening at FORRT, which collates a database of replications and reversals of well-known experiments (currently 270 entries). (tweet)

National Security

The US is imposing further rules limiting China’s access to advanced computer chips and chip-making equipment. (article)

Russia launched approximately 75 (conventional) missiles at Ukraine, primarily aimed at infrastructure - 40 were intercepted. Putin claimed this as retribution for the attack on the bridge to Crimea. (article)

North Korea fired a test missile over Japan, for the first time in five years. They’ve been doing more missile tests recently, with 25 this year and 7 in the past 2 weeks. (article)