Pleasure and suffering are not conceptual opposites. Rather, pleasure and unpleasantness are conceptual opposites. Pleasure and unpleasantness are liking and disliking, or positive affect and negative affect, respectively.[1]

Imagine an experience of suffering that involved little or no effects on your attention. It's easy to ignore, along with whatever is causing it and whatever could help relieve it. We (or at least, I) would recognize this as at most mild suffering, and perhaps not suffering at all.

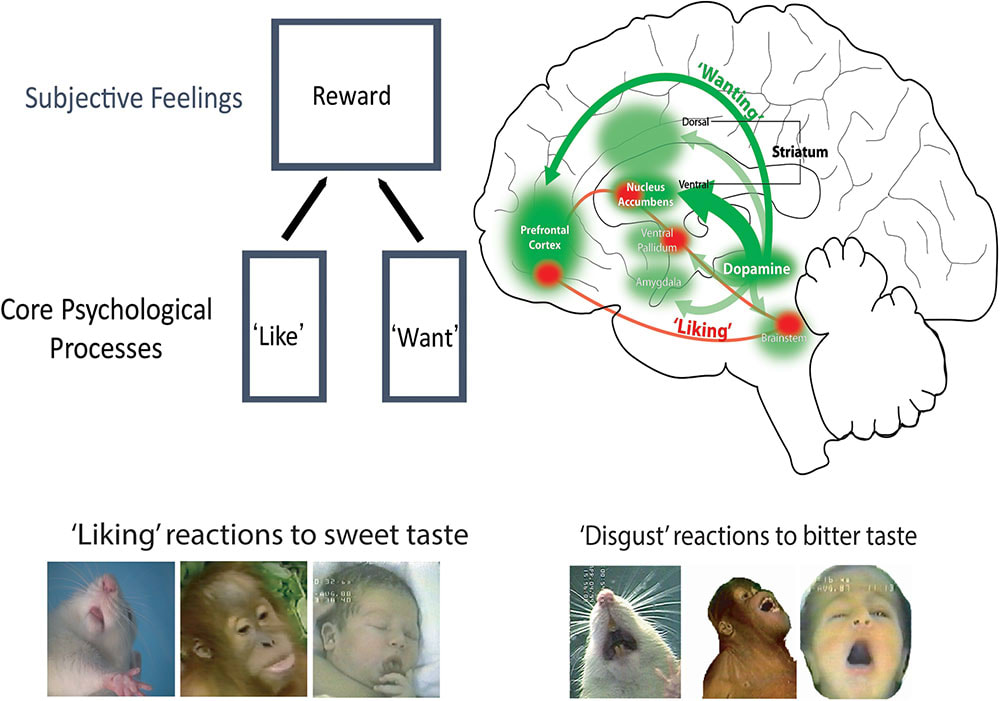

Our concept of suffering probably includes both unpleasantness and desire (or wanting), which is mediated by motivational salience (or incentive salience and aversive/threat/fearful salience), a mechanism for pulling our attention (Berridge, 2018).[2] I'd guess the apparent moral urgency we assign to intense suffering requires intense desire, and so strong effects on attention. We wouldn't mind an intensely unpleasant experience if it had no effects on our attention,[3] although it's hard for me to imagine what that would even be like. I find it easier to imagine intense pleasure without strong effects on attention.

Pleasure and unpleasantness need not involve desire, at least conceptually, and it seems that pleasure, at least, does not require desire in humans. Desire, as motivational salience, depends on brain mechanisms in animals that are distinct from those for pleasure and can be manipulated separately (Berridge, 2018; Nguyen et al., 2021; Berridge & Dayan, 2021), including by reducing desire (incentive salience) without also reducing drug-induced euphoria (Leyton et al., 2007; Brauer & De Wit, 1997). Berridge and Kringelbach (2015) summarize the last two studies as follows:

human subjective ratings of drug pleasure (e.g., cocaine) are not reduced by pharmacological disruption of dopamine systems, even when dopamine suppression does reduce wanting ratings (Brauer and De Wit, 1997, Leyton et al., 2007)

On the other hand, in humans and other animals, the aversive salience of physical pain may not be empirically separable from its unpleasantness (Shriver, 2014), but as far as I can tell, the issue is not settled.

[1] Or specifically the conscious versions of these. On the possibility of unconscious liking and unconscious emotion, see Berridge & Winkielman, 2003 and Winkielman & Berridge, 2004.

[2] And perhaps specifically aversive desire, i.e. aversive/threat salience.

[3] This could be true by definition, if "to mind" is taken to mean "to pay attention to" or find aversive (aversive salience), at least while experiencing the unpleasantness.

IMO, suffering ≈ displeasure + involuntary attention to the displeasure. See my handy chart (from here):

I think wanting is downstream from the combination of displeasure + attention. Like, imagine there’s some discomfort that you’re easily able to ignore. Well, when you do think about it, you still immediately want it to stop!

I'm pretty sympathetic to suffering ≈ displeasure + involuntary attention to the displeasure, or something similar.

I think wanting, or at least the relevant kind here, just is involuntary attention effects, specifically motivational salience. Or, at least, motivational salience is a huge part of what it is. This is how Berridge often uses the terms.[1] Maybe a conscious 'want' is just when the effects on our attention are noticeable to us, e.g. captured by our model of our own attention (attention schema), or somehow make it into the global workspace. You can feel the pull of your attention, or resistance against your voluntary attention control. Maybe it also feels different from just strong sensory stimuli (bottom-up, stimulus-driven attention).

Where I might disagree with "involuntary attention to the displeasure" is that the attention effects could sometimes force your attention away from an unpleasant thought, rather than focus it there. Unpleasant signals reinforce and bias attention towards actions and things that could relieve the unpleasantness, and/or disrupt your focus so that you will find something to relieve it. Sometimes the thing that works is just forcing your attention away from whatever seems unpleasant, and your attention will become biased against thinking about unpleasant things. Other times, you can't ignore it well enough, so your attention will force you towards addressing it. Maybe there's also some inherent bias towards focusing on the unpleasant thing.

But maybe suffering just is the kind of thing that can't be ignored this way. Would we consider an unpleasant thought that's easily ignored to be suffering?

Berridge and Robinson (2016) distinguish different kinds of wanting/desires, and equate one kind with motivational (incentive) salience:

I think you can have involuntary attention that isn’t particularly related to wanting anything (I’m not sure if you’re denying that). If your watch beeps once every 10 minutes in an otherwise-silent room, each beep will create involuntary attention: the orienting response, a.k.a. startle. But is it associated with wanting? Not necessarily. It depends on what the beep means to you. Maybe it beeps for no reason and is just an annoying distraction from something you’re trying to focus on. Or maybe it’s a reminder to do something you like doing, or something you dislike doing, or maybe it just signifies that you’re continuing to make progress and has no action item associated with it. Who knows.

In my ontology, voluntary actions (both attention actions and motor actions) happen if and only if the idea of doing them is positive-valence, while involuntary actions (again both attention actions and motor actions) can happen regardless of their valence. In other words, if the reinforcement learning system is the reason that something is happening, it’s “voluntary”.

Orienting responses are involuntary (with both involuntary motor aspects and involuntary attention aspects). It doesn’t matter if orienting to a sudden loud sound has led to good things happening in the past, or bad things in the past. You’ll orient to a sudden loud sound either way. By the same token, paying attention to a headache is involuntary. You’re not doing it because doing similar things has worked out well for you in the past. Quite the contrary, paying attention to the headache is negative valence. If it was just reinforcement learning, you simply wouldn’t think about the headache ever, to a first approximation. Anyway, over the course of life experience, you learn habits / strategies that apply (voluntary) attention actions and motor actions towards not thinking about the headache. But those strategies may not work, because meanwhile the brainstem is sending involuntary attention signals that overrule them.

So for example, “ugh fields” are a strategy implemented via voluntary attention to preempt the possibility of triggering the unpleasant involuntary-attention process of anxious rumination.

The thing you wrote is kinda confusing in my ontology. I’m concerned that you’re slipping into a mode where there’s a soul / homunculus “me” that gets manipulated by the exogenous pressures of reinforcement learning. If so, I think that’s a bad ontology—reinforcement learning is not an exogenous pressure on the “me” concept, it is part of how the “me” thing works and why it wants what it wants. Sorry if I’m misunderstanding.

I agree you can, but that's not motivational salience. The examples you give of the watch beeping and a sudden loud sound are stimulus-driven or bottom-up salience, not motivational salience. There are apparently different underlying brain mechanisms. A summary from Kim et al., 2021:

I'd say there is some "innate" motivational salience, e.g. probably for innate drives, physical pains, innate fears and perhaps pleasant sensations, but then reinforcement (when it's working as it typically does) biases your systems for motivational salience and action towards things associated with those, to get more pleasure and less unpleasantness.

I'll address two things you said in opposite order.

I don't have in mind anything like a soul / homunculus. I think it's mostly a moral question, not an empirical one, to what extent we should consider the mechanisms for reinforcement to be a part of "you", and to what extent your identity persists through reinforcement. Reinforcement basically rewires your brain and changes your desires. I definitely consider your desires, as motivational salience, which have been shaped by past reinforcement, to be part of "you" now and (in my view) morally important.

From my understanding of the cognitive (neuro)science literature and their use of terms, attentional and action biases/dispositions caused by reinforcement are not necessarily "voluntary".

I think they use "voluntary", "endogenous", "top-down", "task-driven/directed" and "goal-driven/directed" (roughly) interchangeably for a type of attentional mechanism. For example, you have a specific task in mind, and then things related to that task become salient and your actions are biased towards actions that support that task. This is what focusing/concentration is. But then other motivationally salient stimuli (pain, hunger, your phone, an attractive person) and intense stimuli or changes in background stimuli (a beeping watch, a sudden loud noise) can get in the way.

My impression is that there is indeed a distinct mechanism describable as voluntary/endogenous/top-down attention, which lets you focus and block irrelevant but otherwise motivationally salient stimuli. It might also recruit motivational salience towards relevant stimuli. It's an executive function. And I'm inclined to reserve the term "voluntary" for executive functions.

In this way, we can say:

In both cases, reinforcement for motivational salience is partly the reason for the behaviour. But they seem less voluntary than when executive/top-down control works better.

Motivational salience can also be manipulated in experiments to lead to dissociation with remembered, predicted and actual reward (Baumgartner et al., 2021):

If you agree that suffering is (at least) unpleasantness + desire, and both components can vary separately in intensity, then there isn't really "one intensity" for an experience of suffering, but two, one for each component. Suffering would vary in intensity along the two dimensions of unpleasantness intensity and desire intensity.

You could have some function that takes both component intensities and spits out a "suffering intensity" or "suffering badness", though.
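To make the two-dimensional picture concrete, here is a minimal Python sketch of a few candidate aggregation functions. Everything in it is a hypothetical illustration, not a function proposed in the post: the names (`Experience`, `suffering_product`, `suffering_geometric`, `suffering_bottleneck`), the assumed 0 to 1 intensity scales, and the specific functional forms. The multiplicative and bottleneck forms encode the opening intuition that an intensely unpleasant experience with little effect on attention would be at most mild suffering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Experience:
    """Hypothetical two-component description of an experience.

    Both intensities are assumed (for illustration only) to lie on a 0-1 scale.
    """
    unpleasantness: float  # intensity of negative affect
    desire: float          # intensity of (aversive) motivational salience

    def __post_init__(self) -> None:
        # Reject values outside the assumed 0-1 scale.
        for name in ("unpleasantness", "desire"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")


def suffering_product(e: Experience) -> float:
    """Multiplicative: no suffering if either component is absent."""
    return e.unpleasantness * e.desire


def suffering_geometric(e: Experience, w: float = 0.5) -> float:
    """Weighted geometric mean; w sets how much unpleasantness counts relative to desire."""
    return e.unpleasantness ** w * e.desire ** (1.0 - w)


def suffering_bottleneck(e: Experience) -> float:
    """Suffering limited by whichever component is weaker."""
    return min(e.unpleasantness, e.desire)


if __name__ == "__main__":
    # An intensely unpleasant but easily ignored experience vs. a milder one that grabs attention.
    ignorable = Experience(unpleasantness=0.9, desire=0.1)
    gripping = Experience(unpleasantness=0.5, desire=0.9)
    for f in (suffering_product, suffering_geometric, suffering_bottleneck):
        print(f.__name__, round(f(ignorable), 3), round(f(gripping), 3))
```

Under all three functions the attention-grabbing experience scores higher than the ignorable one; where they differ is in how much a strong component can compensate for a weak one.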

Most of my conception of suffering doesn't feel captured by either of these.

I think suffering does not require unpleasantness (you might agree) - consider mental suffering that occurs in absence of {'unpleasant' experience, such as physical pain, or such as this post's taste example}. I'd say suffering can occur in response to[1] those, as well as in response to other things. For example, watching a bug trying and failing to roll back onto its legs can cause empathy/sadness, but there's no 'unpleasant sensual experience' here, at least for me (I consider the visual experience to be neutral).

I don't feel that 'desire/wanting' explains anything about mental suffering that 'unpleasantness' does not. I guess you could say that I want this situation to be different / not happening, and that would be true, but that doesn't feel like the source of the sadness to me, nor do I feel that that 'wanting' is itself suffering.

(Note: I'm relying on my subjective sense of what 'desire/wanting' means, because these terms were undefined in the post.)

If you want to address my view, I think it could be helpful to list some examples of suffering and identify the 'desire/wanting' in each of them.

I'm unsure if I consider both {the sensory experience of physical pain} and {the internal mental/psychological reaction to it} to be suffering, or if I consider only the latter to be suffering. I lean towards 'only the latter', though to not mislead anyone, I want to be clear that in my view extreme physical pain still causes extreme internal suffering for a large class of Earthen life forms.

Unpleasantness doesn't only apply to sensations. I think sadness, like as an empathetic response to the bug struggling, involves unpleasantness/negative affect. That's the case on most models, AFAIK. I agree (or suspect) it's not the sensations (visual experience) that are unpleasant.

To add to this, there’s evidence that negative valence depends on brain regions common to unpleasant physical pain, empathic pain and social pain (from social rejection, exclusion, or loss) (Singer et al., 2004; Eisenberger, 2015). In particular, the title of Singer et al., 2004 is "Empathy for Pain Involves the Affective but not Sensory Components of Pain".

I'm not sure either way whether I'd generally consider sadness to be suffering, though. I'd say suffering is at least unpleasantness + desire (or maybe unpleasantness + aversive desire specifically), but I'm not sure that's all it is. I might also be inclined to use some desire (and unpleasantness) intensity cutoff to call something suffering, but that might be too arbitrary.

You're right that I didn't define desire. The kind of desire I had in mind in this post basically just is motivational (incentive + aversive) salience, which is a neurological mechanism that affects (biases) your attention.[1] There might be more to this kind of desire, but I think motivational salience is a lot of it, and could be all of it. (There are other types of desires, like goals, but those are not what I have in mind here.)

Brody (2018, 2023) defines suffering so that an individual suffers when “she has an unpleasant or negative affective experience that she minds, where to mind some state is to have an occurrent desire that the experience not be occurring.” Unpleasantness and aversion wouldn't be enough for suffering: there must be aversion to the experience itself. By contrast, we could be averse to things outside ourselves, like a bear we're afraid of, rather than to the experience itself.

I have some sympathy for this definition, but I'm also not sure it even makes sense. If this kind of "occurrent desire" is just aversive salience, how exactly would it apply differently from when we're afraid of a bear, say? If it's not aversive salience, then what kind of desire is it and how exactly does that work?

It's a different mechanism from the one for (bottom-up) stimulus intensity, and the one for (top-down) task-based attention control. From Kim et al., 2021: