This article is part of a series on Decision-Making under Deep Uncertainty (DMDU). Building on an introduction to complexity modeling and DMDU, this article focuses on applying this methodology to the field of AI governance. If you haven't read the previous article, I strongly recommend pausing here and going through the introduction first. Many aspects will only become clear once we share the same basic terminology. I might be biased, but I think it's worth it.

Why this article?

I wrote this article for two reasons. The first is that various people have approached me, asking how I envision applying the introduced methodology to particular EA cause areas. People want to see details, and understandably so. In my last article, I mentioned a number of EA cause areas that I consider well suited for systems modeling and decision-making under deep uncertainty (DMDU)[1]. This article is intended as a (partial) answer to these people, providing details on one particular cause area. The second reason is my own specialization in AI governance and my wish for people to start working on this. I want to show a more practical and concrete side of this methodology. I also see this article as a handy reference that I can share with interested parties as a coarse research proposal.

Summary

- DMDU methodology is well-suited to address the complexity of AI governance for four reasons:

  - AI governance is a complex socio-technical system that involves the interaction of technology and society.

  - DMDU methodology values the diversity of stakeholder perspectives in AI governance and encourages decision-making that incorporates various values and objectives.

  - AI governance is characterized by deep uncertainty due to the unpredictability of AI advancements, societal reactions, and potential risks. DMDU methodology embraces deep uncertainty by exploring a wide range of plausible futures and developing flexible strategies that can adapt to changing circumstances.

  - Robustness is crucial in AI governance, and DMDU supports the development of policies that can withstand disruptions, adapt to changes, and consider diverse stakeholder perspectives.

- Traditional methods (standard predictive modeling and highly aggregated economic models) are ill-equipped to deal with these four points.

- To get the most value out of modeling in the first place, complexity modeling and DMDU are necessary.

- Three steps are proposed for applying complexity modeling and DMDU to AI governance:

  - model building (e.g., an agent-based model)

  - embedding the model in an optimization setup

  - conducting exploratory analysis

- Examples sketch what these steps could look like in practice, including potential:

  - exogenous uncertainties

  - policy levers

  - relations

  - performance metrics

- Deliverables of a DMDU process could include:

  - Insights

    - System dynamics

    - Key drivers of outcomes

    - Potential vulnerabilities

    - Policy options and trade-offs

  - Tools for ongoing decision-making

    - Policy evaluation and 'What-if' Analysis

    - Adaptive Strategy Development


Basics of DMDU

If it has been some time since you read the previous article, or if you are already somewhat familiar with systems modeling and/or DMDU, here is a short summary of the previous article:

- Real-world political decision-making problems are complex, with disputed knowledge, differing problem perceptions, opposing stakeholders, and interactions between framing the problem and problem-solving.

- Modeling can help policy-makers to navigate these complexities.

- Traditional modeling is ill-suited for this purpose.

- Systems modeling is a better fit (e.g., agent-based models).

- Deep uncertainty is more common than one might think.

- Deep uncertainty makes expected-utility reasoning virtually useless.

- Decision-Making under Deep Uncertainty is a framework that can build upon systems modeling and overcome deep uncertainties.

- Explorative modeling > predictive modeling.

- Value diversity (aka multiple objectives) > single objectives.

- Focus on finding vulnerable scenarios and robust policy solutions.

- Good fit with the mitigation of GCRs, X-risks, and S-risks.

Now that we are all caught up, let's jump right into it.

Why apply DMDU to AI governance?

Since most people familiar with Effective Altruism are also familiar with the arguments for why mitigating AI risk deserves particular attention, we will skip listing all the arguments for how advanced AI systems could pose great risks to society now and in the future. When I speak of mitigating risks from AI, I refer to transformative AI (TAI), artificial general intelligence (AGI), and artificial superintelligence (ASI). At this point, I assume that the reader is on board and that we agree AI governance is an important cause area. Now, let's untangle the intellectual ball of yarn and trace how the threads of DMDU (Decision-Making under Deep Uncertainty) and AI governance weave together, in a way that shows why this pairing is so necessary. Let's break it down into four points.

1. Dealing with a Socio-Technical System

AI governance, at its heart, is a socio-technical system. This signifies that it's not merely about the development and regulation of technological artifacts; it's a nuanced interface where technology and society meet, intertwine, and co-evolve. It involves the navigation of societal norms and values, human behaviors and decisions, regulatory and policy environments, and the whirlwind of technological advancements. This results in a system that's akin to a vast, interconnected network, buzzing with activity and interplay among numerous components.

Each of these components brings its own layer of complexity. For instance, the human element introduces factors like cognitive biases, ethical considerations, and unpredictable behaviors, making it challenging to model and predict outcomes. Technological advancements, meanwhile, have their own dynamism, often progressing at a pace that leaves legal and regulatory frameworks struggling to catch up. These complexities aren't simply additive; they interact in ways that amplify the overall uncertainty and dynamism of the system.

DMDU is particularly well equipped to grapple with this complexity. It recognizes that simplistic, deterministic models cannot accurately capture the dynamism of socio-technical systems. DMDU instead encourages a shift in mindset, embracing uncertainty and complexity instead of trying to eliminate them. By focusing on a wide array of plausible futures, it acknowledges that there isn't a single "most likely" future to plan for, but rather a range of possibilities that we must prepare for.

Moreover, DMDU embraces the systems thinking paradigm, understanding that the system's components are not isolated but interconnected in intricate ways. It acknowledges the cascading effects a change in one part of the system could have on others. This holistic view aligns perfectly with the complexity of AI governance, allowing for the development of more comprehensive and nuanced strategies.

Finally, DMDU's iterative nature also mirrors the dynamic nature of socio-technical systems. It recognizes that decision-making isn't a one-time event but a continuous process. As new information becomes available and the system evolves, strategies are revisited and adjusted, promoting a cycle of learning and adaptation.

In essence, DMDU is like a skilled navigator for the labyrinthine realm of AI governance, providing us with the tools and mindset to delve into its complexity, understand its intricacies, and navigate its uncertainties. It encourages us to look at the socio-technical system of AI governance not as an intimidating tangle, but as a fascinating puzzle, rich with challenges and ripe with opportunities for effective, inclusive, and adaptable governance strategies.

2. Valuing the Multiplicity of Stakeholder Perspectives

In the sphere of AI governance, we encounter an expansive array of stakeholders. Each brings to the table their own set of values, perspectives, and objectives, much like individual musicians in an orchestra, each playing their own instrument yet contributing to a harmonious symphony. These stakeholders range from government bodies and policy-makers, private corporations and AI developers, to individual users and civil society organizations. Each group's interests, concerns, and visions for AI development and regulation differ, contributing to a rich tapestry of diverse values.

For instance, a tech company might prioritize innovation and profitability, while a government agency could be more concerned with maintaining security, privacy, and the public good. Meanwhile, civil society organizations might stress human rights and social justice issues related to AI. Individual users, on the other hand, could have myriad concerns, from ease of use and affordability to ethical considerations and privacy protections. This diversity of values is not a complication to be minimized but a resource to be embraced. It brings to light a wider range of considerations and potential outcomes that may otherwise be overlooked. However, navigating this diversity and reaching decisions that respect and incorporate this plethora of perspectives is no small feat.

This is where DMDU plays an instrumental role. It offers a methodology designed to address situations with multiple objectives and conflicting interests. DMDU encourages the consideration of a broad spectrum of perspectives and criteria in decision-making processes, thereby ensuring that various stakeholder values are included and assessed. One way it does this is through scenario planning, which allows exploration of a variety of future states, each influenced by different stakeholder values and objectives. This exploration enables decision-makers to assess how different courses of action could impact diverse interests and fosters more comprehensive, balanced policy outcomes.

Furthermore, DMDU promotes an iterative decision-making process. This is especially pertinent in the context of value diversity as it provides opportunities to reassess and refine decisions as the values and objectives of stakeholders evolve over time. This ongoing engagement facilitates continued dialogue among diverse stakeholders, fostering a sense of inclusivity and mutual respect.

In essence, by utilizing DMDU methodology, we are not merely acknowledging the existence of diverse values in AI governance but actively inviting them to shape and influence the decision-making process. It's like conducting an orchestra with a wide range of instruments, ensuring that each has its solo, and all contribute to the final symphony. The resulting policies are, therefore, more likely to be nuanced, balanced, and representative of the diverse interests inherent in AI governance.

3. Embracing Deep Uncertainty

The field of AI governance is a veritable sea of deep uncertainty. As we look out upon this vast expanse, we are faced with a multitude of unknowns. The pace and trajectory of AI advancements, societal reactions to these developments, the impact of AI on various sectors of society, and the potential emergence of unforeseen risks – all these elements contribute to a fog of uncertainty.

In many ways, AI governance is like exploring uncharted waters. We can forecast trends based on current knowledge and patterns, but a substantial range of unknown factors remains. The behavior of complex socio-technical systems like AI governance is difficult to predict precisely due to their inherent dynamism and the interplay of multiple variables. In this context, conventional decision-making strategies, which often rely on predictions and probabilities, fall short.

This is where DMDU shines as an approach tailored to address situations of deep uncertainty. Instead of attempting to predict the future, DMDU encourages the exploration of a wide range of plausible futures. By considering various scenarios, including those at the extreme ends of possibility, DMDU helps decision-makers understand the breadth of potential outcomes and the variety of pathways that could lead to them.

This scenario-based approach encourages the development of flexible and adaptive strategies. It acknowledges that given the uncertainty inherent in AI governance, it is crucial to have plans that can be modified based on changing circumstances and new information. This resilience is vital for navigating an uncertain landscape, much like a ship that can adjust its course based on shifting winds and currents.

In sum, the application of DMDU in AI governance acknowledges the presence of deep uncertainty, embraces it, and uses it as a guide for informed decision-making. It equips us with a compass and map, not to predict the future, but to understand the potential landscapes that may emerge and to prepare for a diverse range of them. By doing so, it turns the challenge of uncertainty into an opportunity for resilience and adaptability, offering us glimpses through the thick fog.

4. Ensuring Robustness

AI technology is not static; it evolves, and it does so rapidly. It's akin to a river that constantly changes its course, with new tributaries emerging and old ones disappearing over time. As AI continues to advance and permeate various sectors of society, the landscape of AI governance will similarly need to adapt and evolve. This creates an imperative for robustness in policy solutions – policies that can withstand the test of time and adapt to changing circumstances.

Robustness in this context extends beyond mere resilience. It's not just about weathering the storm, but also about being able to sail through it effectively, adjusting the sails as needed. Robust policies should not only resist shocks and disruptions but also adapt and evolve with them. They should provide a solid foundation, while also maintaining enough flexibility to adapt to the dynamic AI landscape.

DMDU offers a methodology that directly supports the development of such robust policy solutions. It focuses on the identification of strategies that perform well under a wide variety of future scenarios, rather than those optimized for a single, predicted future. This breadth of consideration promotes the creation of policies that can handle a range of potential scenarios, effectively enhancing their robustness.
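The contrast between a policy tuned to one predicted future and a policy that holds up across many futures can be made concrete with a small regret calculation. The Python sketch below (the article itself prescribes no language) uses minimax regret, which is one of several robustness metrics used in DMDU; satisficing-based metrics are another common family. The policy names, scenario names, and scores are invented for illustration and are not results from any model.

```python
# Hypothetical scores (higher is better) of three policies in three scenarios.
PERFORMANCE = {
    "optimized_for_baseline": {"baseline": 10, "fast_takeoff": 0, "slow_takeoff": 9},
    "robust":                 {"baseline": 8,  "fast_takeoff": 6, "slow_takeoff": 8},
    "do_nothing":             {"baseline": 7,  "fast_takeoff": 1, "slow_takeoff": 10},
}

def max_regret(performance):
    """For each policy, the largest shortfall versus the best policy in any scenario."""
    scenarios = list(next(iter(performance.values())))
    best = {s: max(scores[s] for scores in performance.values()) for s in scenarios}
    return {name: max(best[s] - scores[s] for s in scenarios)
            for name, scores in performance.items()}

regret = max_regret(PERFORMANCE)
# The "predict and optimize" choice: best policy in the single expected scenario.
best_in_baseline = max(PERFORMANCE, key=lambda p: PERFORMANCE[p]["baseline"])
# The robust choice: smallest worst-case regret across all scenarios.
most_robust = min(regret, key=regret.get)
```

With these made-up numbers, the policy that wins the baseline scenario has a worst-case regret of 6 (it collapses under a fast takeoff), while the "robust" policy never falls more than 2 points behind the best option in any scenario.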

DMDU also supports adaptive policy-making through its iterative decision-making process. It acknowledges that as the future unfolds and new information becomes available, strategies may need to be reassessed and adjusted. This iterative process mirrors the dynamism of the AI field, ensuring that policies remain relevant and effective over time.

Moreover, DMDU's inclusive approach to decision-making, which considers a wide range of stakeholder perspectives and values, further contributes to policy robustness. By encompassing diverse perspectives, it enables the development of policies that are not only resilient but also respectful of different values and interests. This inclusivity aids in building policy solutions that are more likely to garner widespread support, further enhancing their robustness.

In essence, DMDU equips us with the tools and mindset to develop robust policy solutions for AI governance. Like a master architect, it guides us in designing structures that are sturdy yet adaptable, with a strong foundation and the flexibility to accommodate change. Through the lens of DMDU, we can build policy solutions that stand firm in the face of uncertainty and change, while also evolving alongside the dynamic landscape of AI.

To sum up, incorporating DMDU methodology into AI governance is a bit like giving a mountaineer a reliable compass, a map, and a sturdy pair of boots. It equips us to traverse the complex terrain, cater to varied travelers, weather the unexpected storms, and reach the peak with policies that are not only robust but also responsive to the ever-changing AI environment.

How to apply DMDU to AI Governance?

Having taken an expansive view of the many reasons to pair DMDU with AI governance (dealing with its complexity, valuing the multiplicity of stakeholder perspectives, embracing deep uncertainty, and ensuring robustness in our policy responses), we find ourselves standing at the foot of a significant question. As described above, this approach seems to provide us with the essential tools for our journey, much like a mountaineer's gear. These resources position us to navigate the convoluted socio-technical terrain, accommodate a wide range of traveling companions with diverse priorities, withstand unforeseeable storms, and ultimately arrive at the peak with policies that are both robust and responsive to the ever-evolving AI environment.

But now that we (hopefully) appreciate the necessity of this methodology and understand its benefits, the path forward leads us to a crucial juncture. This exploration prompts an essential and practical inquiry: "How do we apply DMDU to AI Governance?" Now is the time to delve into the concrete ways we might integrate this methodology into the practice of governing AI, turning these theoretical insights into actionable strategies.

How do we best approach this question? I suggest the following three steps:

- Model Building

- Embedding of Model in Optimization Setup

- Exploratory Analysis

1. Model Building

Applying DMDU to AI governance requires a detailed and nuanced approach, starting with a clear identification of the problem we wish to address. AI governance is, in essence, an intricate socio-technical system with numerous facets and challenges. As discussed in the previous article of this sequence, an agent-based model is an excellent choice of modeling paradigm for such a system.

Given the heterogeneous nature of the agents involved in AI governance – from governments, private corporations, civil society organizations to individual users – an agent-based modeling (ABM) approach can serve as an ideal tool for this task. As described in the previous article of this sequence, ABM is a computational method that enables a collection of autonomous entities or "agents" to interact within a defined environment. Each agent can represent a different stakeholder, equipped with its own set of rules, behaviors, and objectives. By setting up the interactions among these agents, we can simulate various scenarios and observe emergent behavior. The strength of ABM lies in its ability to represent diverse and complex interactions within a system. In the context of AI governance, it can help illuminate how different stakeholders' behaviors and decisions may interact and influence the system's overall dynamics. This allows us to simulate a multitude of scenarios and observe the consequences, providing us with valuable insights into potential policy impacts and helping us design robust, adaptable strategies.
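To make "autonomous agents with their own rules, producing emergent behavior" concrete, here is a minimal sketch in plain Python (the article prescribes no language or ABM toolkit). It adapts the well-known Deffuant bounded-confidence opinion model: stakeholders hold a level of support for stricter AI regulation and only move toward peers whose views are already close to their own. The stakeholder types, their openness values, and the threshold are hypothetical placeholders, not calibrated quantities.

```python
import random
from dataclasses import dataclass

@dataclass
class Stakeholder:
    kind: str        # hypothetical stakeholder type, e.g. "firm", "ngo", "user"
    opinion: float   # support for stricter AI regulation, in [0, 1]
    openness: float  # fraction of the opinion gap the agent closes per interaction

def interact(a: Stakeholder, b: Stakeholder, threshold: float) -> None:
    """Bounded confidence: two agents converge only if their views are close enough."""
    diff = b.opinion - a.opinion
    if abs(diff) < threshold:
        a.opinion += a.openness * diff  # a moves toward b
        b.opinion -= b.openness * diff  # b moves toward a

def count_clusters(opinions, gap=0.05):
    """Count groups of sorted opinions separated by more than `gap`."""
    values = sorted(opinions)
    return 1 + sum(1 for x, y in zip(values, values[1:]) if y - x > gap)

def simulate(n_agents=200, threshold=0.2, n_interactions=20_000, seed=0):
    rng = random.Random(seed)
    openness_by_kind = {"firm": 0.1, "ngo": 0.3, "user": 0.5}  # hypothetical values
    agents = []
    for _ in range(n_agents):
        kind = rng.choice(list(openness_by_kind))
        agents.append(Stakeholder(kind, rng.random(), openness_by_kind[kind]))
    for _ in range(n_interactions):
        a, b = rng.sample(agents, 2)  # random pairwise encounter
        interact(a, b, threshold)
    return agents

agents = simulate(threshold=0.2)
print(count_clusters(a.opinion for a in agents))
```

Re-running `simulate` with different `threshold` values and counting clusters is a simple way to see emergence: no agent is programmed to form camps, yet in models of this kind smaller thresholds tend to leave the population split into more opinion clusters.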

Creating an agent-based model (ABM) usually involves a series of methodological steps. Here's a quick overview:

- Problem Identification and Conceptual Model Design: This is where we identify the problem we want to address with the model and specify the objectives of our simulation. Based on this, we design a conceptual model, identifying the key agents, their attributes, and their potential behaviors. Keep in mind that, instead of attempting to model the entire system in all its complexity, a more pragmatic and effective approach is to zero in on a specific problem within this larger system. By clearly defining the problem we aim to tackle, we can structure our model to best address it, ensuring relevance and applicability. Identifying the problem is not merely about pinpointing an issue. It also entails understanding its intricacies, its interconnected elements, and its impacts on various stakeholders. This involves asking questions like: How does this problem manifest within the field of AI governance? What causes it? Whom does it affect, and in what ways? What are the potential consequences if it remains unaddressed? The answers to these questions lay the foundation for our modeling efforts.

- System Formalization: In this step, we formalize the interactions and behaviors of the agents, usually through mathematical or logical rules. This involves defining how agents make decisions, interact with each other and their environment, and how these actions may change over time.

- Model Implementation: Here, we use computer software to implement our formalized model. This usually involves programming the behaviors and interactions of the agents within a simulation environment.

- Model Testing and Calibration: Once implemented, we run initial tests on the model to ensure it behaves as expected and fine-tune parameters to ensure it aligns with real-world data or expectations.

- Experimentation: Once our model is calibrated, we can start running experiments, altering parameters or rules, and observing the resulting behaviors. This can help us identify emergent phenomena, test different scenarios, and gain insights into system dynamics.

- Model Analysis and Interpretation: Finally, we analyze the results of our simulations, interpret their meaning, and translate these insights into actionable policy recommendations or strategies.

I included steps 5 and 6 (experimentation and analysis) for completeness' sake. However, since we will use DMDU on top of the ABM, our path will diverge from here.

I admit that this is still rather abstract. So let's consider two examples in which we sketch how the methodological steps above could be applied:

- Fairness in AI as the core problem

- Existential risk of AI as the core problem

Disclaimer: Keep in mind that this is a very rough and preliminary attempt at laying out what the modeling process could look like. More serious thought would need to go into fleshing out such model conceptualizations.

Example 1: Fairness in AI

Let's consider a hypothetical problem – ensuring fairness in AI systems across diverse demographic groups. Given the complexity and diversity inherent in this problem, ABM can be a particularly helpful tool to explore potential solutions.

- Problem Identification and Conceptual Model Design: We identify fairness in AI as our core problem. The key agents could be AI developers, end-users from diverse demographic groups, and regulatory bodies. Each group will have its own objectives and behaviors related to the development, use, and regulation of AI systems.

- System Formalization: We formalize the decisions and behaviors of the agents. For example, developers decide on algorithms based on their understanding of fairness; users interact with AI systems and experience outcomes; regulatory bodies set policies based on observed fairness levels.

- Model Implementation: We use ABM software to implement our model, coding the behaviors, interactions, and environment for the agents.

- Model Testing and Calibration: We test the model against real-world data or scenarios to ensure it accurately represents the dynamics of fairness in AI systems.

- Experimentation: We run simulations to explore various scenarios. For instance, what happens to fairness when regulatory bodies tighten or loosen policies? What is the impact of different fairness definitions on the experience of diverse users?

- Model Analysis and Interpretation: We analyze the results of our simulations to understand the emergent phenomena and interpret these insights. Based on our findings, we may propose strategies or policies to improve fairness in AI systems.

Through this application of ABM, we can explore complex, diverse, and uncertain aspects of AI governance and support the design of robust, adaptable policies.
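A toy version of this fairness model might look as follows in Python. Everything here is a hypothetical placeholder rather than a proposed specification: the error-rate formula, the two demographic groups, the initial effort range, and the regulator's rule (raise a developer's fairness effort by `penalty` whenever its error-rate gap exceeds `max_disparity`). Users are agents that each use a randomly chosen system per step, so the recorded disparity is the harm users actually experience, not the designed error rate.

```python
import random
from dataclasses import dataclass

GROUPS = ("majority", "minority")

@dataclass
class Developer:
    fairness_effort: float  # hypothetical share of effort spent on debiasing, in [0, 1]

    def error_rates(self, rng):
        # Hypothetical rule: the minority group's extra error shrinks with fairness effort.
        extra = 0.15 * (1.0 - self.fairness_effort) + rng.gauss(0.0, 0.01)
        return {"majority": 0.10, "minority": max(0.0, 0.10 + extra)}

def simulate(max_disparity=0.05, penalty=0.1, n_devs=10, n_users=1000, n_steps=30, seed=0):
    """Return the experienced harm gap (minority minus majority) for each step."""
    rng = random.Random(seed)
    devs = [Developer(rng.uniform(0.0, 0.5)) for _ in range(n_devs)]
    users = [rng.choice(GROUPS) for _ in range(n_users)]
    history = []
    for _ in range(n_steps):
        rates = [dev.error_rates(rng) for dev in devs]
        harmed = dict.fromkeys(GROUPS, 0)
        counts = dict.fromkeys(GROUPS, 0)
        for group in users:
            system = rng.choice(rates)  # each user interacts with one AI system
            counts[group] += 1
            if rng.random() < system[group]:
                harmed[group] += 1
        history.append(harmed["minority"] / max(1, counts["minority"])
                       - harmed["majority"] / max(1, counts["majority"]))
        # Regulator audits every developer and raises pressure on violators.
        for dev, r in zip(devs, rates):
            if r["minority"] - r["majority"] > max_disparity:
                dev.fairness_effort = min(1.0, dev.fairness_effort + penalty)
    return history

lax = simulate(penalty=0.0)
strict = simulate(penalty=0.2)
print(sum(lax[-10:]) / 10, sum(strict[-10:]) / 10)
```

Comparing the average gap over the last steps of `lax` and `strict` is the kind of "what if regulators tighten policies?" question from the experimentation step. In a DMDU setting, `max_disparity` and `penalty` would become policy levers rather than hand-picked values.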

Example 2: Existential Risk of AI

Let's take a look at how to use agent-based modeling to address the existential risk posed by AI.

- Problem Identification and Conceptual Model Design: We first recognize the existential risk of AI as our core problem. The key agents in this scenario could be AI researchers and developers, AI systems themselves (especially if we consider the emergence of superintelligent AI), government regulatory bodies, international AI governance bodies, and various groups of people affected by AI technology (which can essentially be all of humanity).

- System Formalization: We formalize the decisions and behaviors of these agents. For instance, AI researchers and developers may pursue breakthroughs in AI capabilities, possibly pushing towards the development of superintelligent AI. AI systems, depending on their level of autonomy and intelligence, may behave based on their programming and potentially self-improve. Regulatory bodies create and enforce laws and regulations aimed at minimizing risk and promoting safe AI development and use. International governance bodies work on global standards and treaties. And affected groups may react in a variety of ways to the implementation of AI in society, possibly through political pressure, societal norms, or market demand.

- Model Implementation: Using a software platform suitable for ABM, we encode these behaviors and interactions, creating a virtual environment where these different agents operate.

- Model Testing and Calibration: We test the model to ensure that the interactions generate plausible outcomes that align with current understanding and data about AI development and its risks. This may involve tweaking certain parameters, such as the rate of AI capability development or the effectiveness of regulation enforcement.

- Experimentation: We run a series of experiments where we adjust parameters and observe the outcomes. For example, we might examine a worst-case scenario where the development of superintelligent AI occurs rapidly and without effective safeguards or regulations. We could also model best-case scenarios where international cooperation leads to stringent regulations and careful, safety-conscious AI development.

- Model Analysis and Interpretation: Finally, we scrutinize the results of the experiments to identify patterns and gain insights. This might involve identifying conditions that lead to riskier outcomes or pinpointing strategies that effectively mitigate the existential risk.

By applying this methodology, we can use ABM as a tool to explore various scenarios and inform strategies that address the existential risk posed by AI. It should be noted, however, that the existential risk from AI is a challenging and multifaceted issue, and ABM is just one tool among many that can help us understand and navigate this complex landscape.
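In the same spirit, here is a deliberately crude Python sketch of the existential-risk model. The rules are hypothetical placeholders: labs split resources between capabilities and safety according to a `race_pressure`, capability gains compound through a `feedback` term (a stand-in for self-improvement), a regulator enforces a hard capability `cap` (a stand-in for, e.g., compute limits), and an international body lowers race pressure by `oversight` once any lab passes a warning level. A run counts as a catastrophe if some lab reaches a `danger` level while its safety lags its capability. None of these thresholds are calibrated.

```python
import random
from dataclasses import dataclass

@dataclass
class Lab:
    capability: float
    safety: float
    race_pressure: float  # hypothetical share of resources going to capabilities

    def step(self, growth, feedback, cap, rng):
        # Capability gains compound with current capability (stand-in for self-improvement).
        gain = growth * self.race_pressure * (1.0 + feedback * self.capability)
        self.capability = min(cap, self.capability + gain * rng.uniform(0.5, 1.5))
        self.safety += growth * (1.0 - self.race_pressure)

def is_unsafe(lab, danger=10.0):
    return lab.capability >= danger and lab.safety < lab.capability

def catastrophe_share(growth, feedback, cap, oversight=0.0, warning=5.0,
                      n_runs=200, n_labs=4, n_steps=40, seed=0):
    """Fraction of stochastic runs in which at least one lab ends up unsafe."""
    rng = random.Random(seed)
    bad_runs = 0
    for _ in range(n_runs):
        labs = [Lab(1.0, 1.0, rng.uniform(0.5, 0.9)) for _ in range(n_labs)]
        for _ in range(n_steps):
            for lab in labs:
                lab.step(growth, feedback, cap, rng)
            # International body: once any lab passes the warning level, dampen the race.
            if max(lab.capability for lab in labs) > warning:
                for lab in labs:
                    lab.race_pressure = max(0.1, lab.race_pressure - oversight)
            if any(is_unsafe(lab) for lab in labs):
                bad_runs += 1
                break
    return bad_runs / n_runs

print(catastrophe_share(growth=0.5, feedback=0.1, cap=1e9))
print(catastrophe_share(growth=0.5, feedback=0.1, cap=1e9, oversight=0.2))
```

Treating `growth` and `feedback` as deep uncertainties and `cap` and `oversight` as levers is how a toy like this would be handed over to the DMDU machinery described in the next section.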

Steps 5 and 6 in both examples are only there to show what a typical ABM process could look like. However, we would likely stop at step 4 and go down a different path: the path of DMDU, which is described in the following section.
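Because step 4 is where we would hand over to DMDU, calibration deserves a small illustration of its own. The Python sketch below makes two simplifying assumptions: the "model" is a hypothetical one-parameter logistic diffusion curve standing in for a full ABM, and, since no real data is given here, the "observations" are synthetic, generated by the model itself with a known rate. Recovering that rate (a so-called twin experiment) checks the calibration procedure, not the model's realism.

```python
def adoption_curve(rate, n_steps=20, initial=0.01):
    """Hypothetical stand-in model: logistic spread of a practice (e.g. voluntary
    safety audits) among AI developers; returns the adopted share at each step."""
    share, path = initial, []
    for _ in range(n_steps):
        share += rate * share * (1.0 - share)
        path.append(share)
    return path

def sum_squared_error(simulated, observed):
    return sum((s - o) ** 2 for s, o in zip(simulated, observed))

def calibrate(observed, candidate_rates):
    """Pick the candidate parameter whose simulated path best matches the observations."""
    return min(candidate_rates,
               key=lambda r: sum_squared_error(adoption_curve(r, len(observed)), observed))

# Twin experiment: synthetic "observations" from a known rate, then try to recover it.
true_rate = 0.3
observed = adoption_curve(true_rate)
candidates = [i / 100 for i in range(1, 101)]
print(calibrate(observed, candidates))
```

With real data the fit would be noisier, and parameters that the data cannot pin down are natural candidates for the exogenous uncertainties discussed below rather than fixed values.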

2. Embedding of Model in Optimization Setup

Eventually, we want to use the model to identify good policy recommendations. Usually, modelers run their own experiments: they play with parameters and particular actions, handcraft some scenarios, calculate expected utilities, and provide policy recommendations based on weighted averages, etc. As elaborated in the previous article, this can be very problematic. The DMDU way offers an alternative: we can embrace uncertainty and find optimal and robust policy solutions systematically, across tens of thousands of scenarios, with the help of AI and ML.

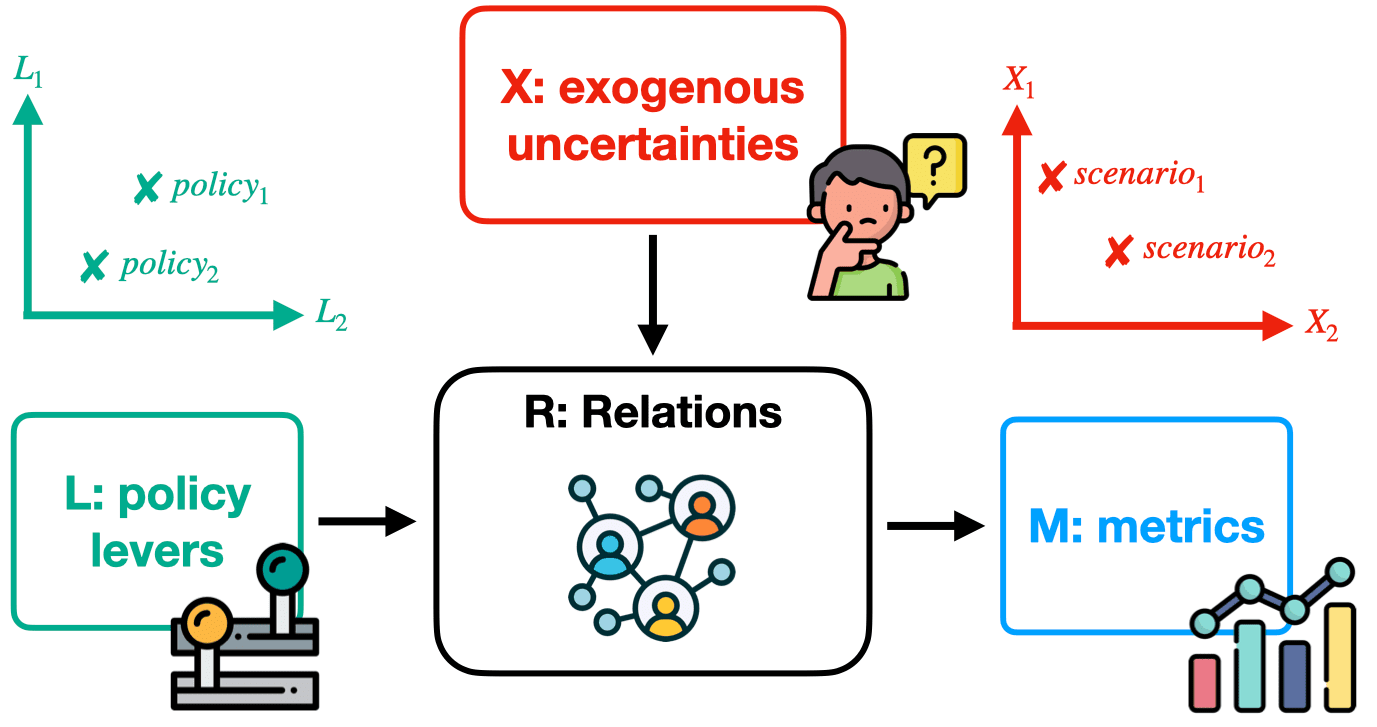

In order to use the AI part of DMDU, we need to briefly recap how model information can be structured. As described in my previous article, the inputs and outputs can be structured with the XLRM framework[2]: exogenous uncertainties (X), policy levers (L), relations (R), and performance metrics (M).

Keep in mind that the metrics describe a set of variables that you (or rather your stakeholders) find particularly important to track. Along with these variables, we also specify the optimization direction (e.g., minimize inequality, maximize GWP, minimize the number of casualties, etc.).
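One minimal way to write down an XLRM specification in code is sketched below in Python. The class names, variable names, ranges, and the toy `relations` function are all hypothetical; in a real study, R would be the agent-based model itself. Dedicated Python toolkits such as the EMA Workbench provide their own classes for uncertainties, levers, and outcomes, but this sketch deliberately uses only the standard library rather than any library's API. It also shows one common convention for optimization directions: flipping the sign of maximized metrics so that an optimizer can minimize everything.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List

Values = Dict[str, float]

class Direction(Enum):
    MINIMIZE = "minimize"
    MAXIMIZE = "maximize"

@dataclass(frozen=True)
class Uncertainty:  # X: plausible range of an exogenous factor
    name: str
    low: float
    high: float

@dataclass(frozen=True)
class Lever:  # L: range of a policy decision variable
    name: str
    low: float
    high: float

@dataclass(frozen=True)
class Metric:  # M: tracked outcome plus its optimization direction
    name: str
    direction: Direction

@dataclass
class XLRMProblem:
    uncertainties: List[Uncertainty]
    levers: List[Lever]
    relations: Callable[[Values, Values], Values]  # R: (scenario, policy) -> outcomes
    metrics: List[Metric]

    def evaluate(self, scenario: Values, policy: Values) -> Values:
        outcomes = self.relations(scenario, policy)
        return {m.name: outcomes[m.name] for m in self.metrics}

    def as_minimization(self, scores: Values) -> List[float]:
        """Negate maximized metrics so an optimizer can minimize every objective."""
        return [-scores[m.name] if m.direction is Direction.MAXIMIZE else scores[m.name]
                for m in self.metrics]

def toy_relations(scenario: Values, policy: Values) -> Values:
    # Hypothetical stand-in for the ABM: stricter audits cut risk but slow growth.
    strictness = policy["audit_strictness"]
    return {
        "catastrophe_risk": scenario["takeoff_speed"] * (1.0 - strictness),
        "economic_growth": scenario["compute_growth"] * (1.0 - 0.5 * strictness),
    }

problem = XLRMProblem(
    uncertainties=[Uncertainty("takeoff_speed", 0.1, 1.0),
                   Uncertainty("compute_growth", 0.5, 2.0)],
    levers=[Lever("audit_strictness", 0.0, 1.0)],
    relations=toy_relations,
    metrics=[Metric("catastrophe_risk", Direction.MINIMIZE),
             Metric("economic_growth", Direction.MAXIMIZE)],
)
```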

In order to embed the model in an optimization setup, we need to specify X, L, R, and M first. We need to provide the variables and their plausible domains. Examples will be described later. But first, I would like to talk about the optimization process and how it differs from the simulation process.

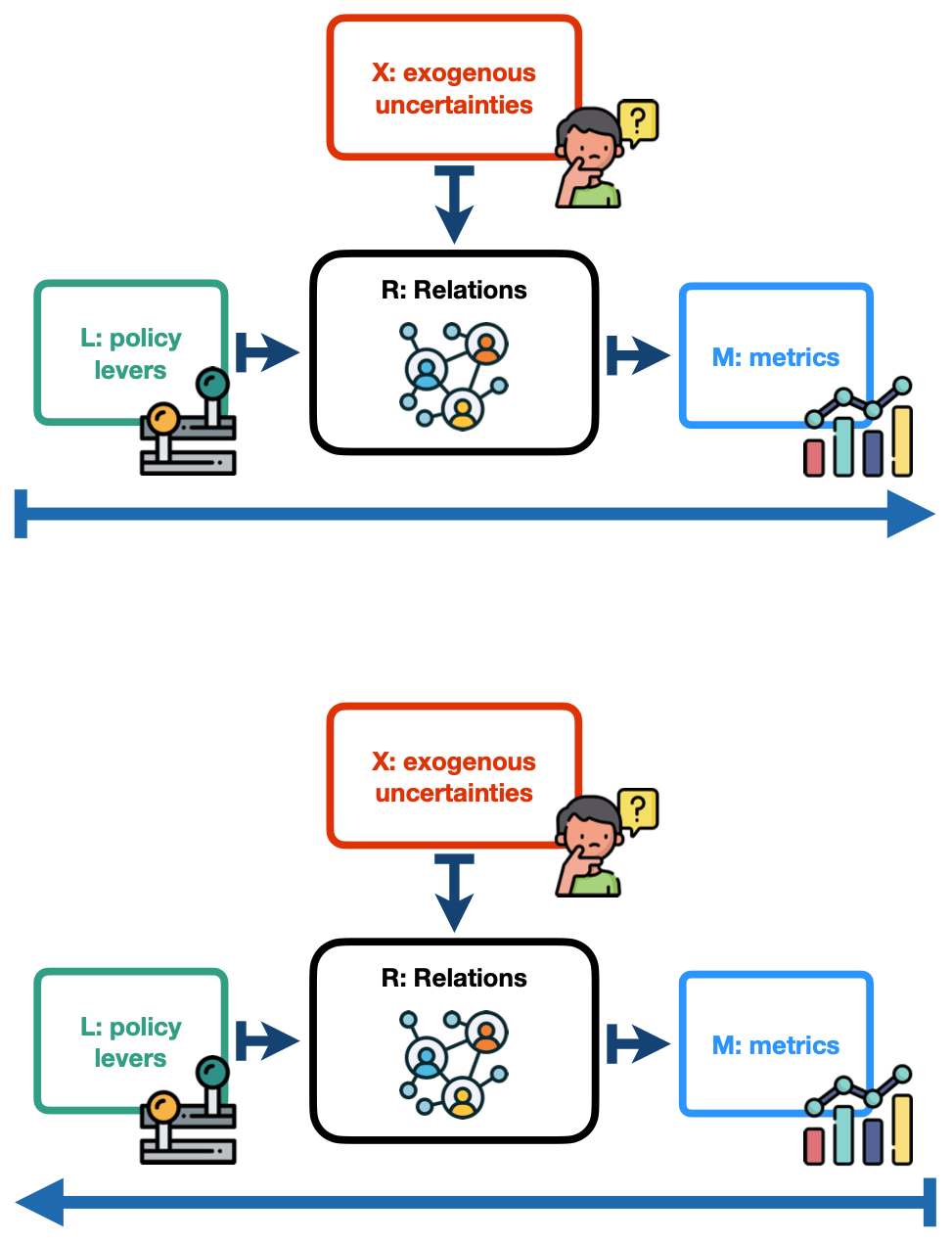

Simulation versus Optimization

In agent-based modeling, simulation and optimization operate as two distinct but interconnected components, each serving a unique role in the decision-making process. They differ substantially, however, in their focal point and methodological approach, particularly in whether we reason from inputs to outputs or the other way around.

Simulation, in the context of agent-based modeling, is typically seen as a process-driven approach where the key concern lies in understanding and elucidating complex system behavior. It begins with defining the inputs, a mixture of policy levers (L) and exogenous uncertainties (X). By setting these variables and running the simulation, the system's states evolve over time according to the pre-established rules defined by the relations (R). The emergent outcome metrics (M) are then observed and analyzed. The direction of interest in simulation is essentially from inputs to outputs: from the known or controllable aspects to the resulting outcomes.

Optimization, on the other hand, operates in a seemingly inverted manner. This process begins by identifying the metrics of interest (the desired outcomes) and proceeds by employing computational techniques, often involving AI algorithms, to find the combination of policy levers that best achieves these goals under particular scenarios. Here, the direction of attention is from outputs back to inputs. We begin with a predetermined end state and work backward, seeking out the best strategies to reach these objectives.
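The difference in direction can be shown with a toy Python function. `simulate` runs forward from a scenario (X) and a policy (L) to outcomes (M); `optimize` starts from the goal (lowest risk within a budget) and searches backward over lever settings for one fixed scenario. The model, lever names, and cost weights are hypothetical, and the brute-force grid search is used only for transparency: it scales badly with the number of levers, which is why the evolutionary algorithms described in the next paragraph are used in practice.

```python
import itertools

def simulate(scenario, policy):
    """Forward (simulation): inputs X and L -> outcomes M. Toy stand-in for the ABM."""
    risk = (scenario["takeoff_speed"]
            * (1.0 - policy["audit_strictness"])
            * (1.0 - 0.5 * policy["safety_funding"]))
    cost = 0.3 * policy["audit_strictness"] + 0.7 * policy["safety_funding"]
    return {"risk": risk, "cost": cost}

def optimize(scenario, max_cost, steps=11):
    """Backward (optimization): start from the goal (minimal risk within a budget)
    and search the lever space for the policy that best achieves it in this scenario."""
    grid = [i / (steps - 1) for i in range(steps)]
    best = None
    for audit, funding in itertools.product(grid, grid):
        policy = {"audit_strictness": audit, "safety_funding": funding}
        outcome = simulate(scenario, policy)
        if outcome["cost"] <= max_cost and (best is None or outcome["risk"] < best[1]["risk"]):
            best = (policy, outcome)
    return best  # None if no policy fits the budget

print(optimize({"takeoff_speed": 1.0}, max_cost=0.3))
```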

The optimization process is one of the key parts of the DMDU approach. Although we usually use several AI and ML algorithms, optimization-based policy search is central. For this optimization, we like to use a member of the multi-objective evolutionary algorithm (MOEA) family. MOEAs facilitate the identification of Pareto-optimal policies: policies that cannot be improved in one objective without worsening another. Deep uncertainty, combined with the diversity of stakeholder values, often necessitates considering multiple conflicting objectives. MOEAs, with their inherent ability to handle multiple objectives and explore a large and diverse solution space, offer an effective approach to such complex, multi-dimensional decision problems.

MOEAs work by generating a population of candidate solutions and then iteratively evolving this population through processes akin to natural selection, mutation, and recombination. In each iteration (or generation), the solutions that represent the most efficient trade-offs among the objectives are identified and preserved. This process continues until a convergence criterion or the computational budget is reached, yielding an approximation of the Pareto-optimal set. By employing MOEAs in DMDU, decision-makers can visualize the trade-offs between competing objectives through the resulting Pareto front. This enables them to understand the landscape of possible decisions and their impacts, providing valuable insights when the optimal policy is not clear-cut due to the deep uncertainty involved. Thus, MOEAs contribute significantly to the robustness and adaptability of policy decisions under complex, uncertain circumstances.
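A bare-bones Python illustration of these mechanics follows. It is not NSGA-II, Borg, or any other established MOEA; it only shows the core loop described above: a population, recombination and mutation, and selection of non-dominated solutions into an archive that approximates the Pareto front. Levers are assumed to be scaled to [0, 1], and the toy objectives are hypothetical.

```python
import random

def dominates(a, b):
    """Pareto dominance for minimization: a is no worse than b everywhere and better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(items, key=lambda item: item):
    """Keep the items whose objective vectors are not dominated by any other item."""
    return [p for p in items if not any(dominates(key(q), key(p)) for q in items)]

def evolve(objectives, n_levers, pop_size=30, generations=60, sigma=0.1, seed=0):
    """Minimal MOEA loop; returns (levers, objective vector) pairs on the final front."""
    rng = random.Random(seed)

    def evaluate(x):
        return (x, tuple(objectives(x)))  # cache the objectives alongside the levers

    population = [evaluate([rng.random() for _ in range(n_levers)]) for _ in range(pop_size)]
    archive = pareto_front(population, key=lambda s: s[1])
    for _ in range(generations):
        children = []
        for _ in range(pop_size):
            mother, father = rng.choice(archive)[0], rng.choice(archive)[0]
            # Uniform crossover followed by Gaussian mutation, clipped to the lever bounds.
            child = [min(1.0, max(0.0, (m if rng.random() < 0.5 else f) + rng.gauss(0.0, sigma)))
                     for m, f in zip(mother, father)]
            children.append(evaluate(child))
        archive = pareto_front(archive + children, key=lambda s: s[1])
        if len(archive) > pop_size:
            # Crude size cap; established MOEAs use crowding distance or epsilon-dominance.
            archive = rng.sample(archive, pop_size)
    return archive

# Toy problem (hypothetical): x[0] trades risk against cost; x[1] only adds cost.
front = evolve(lambda x: (1.0 - x[0], x[0] + x[1]), n_levers=2)
for levers, (risk, cost) in sorted(front, key=lambda s: s[1])[:5]:
    print([round(v, 2) for v in levers], round(risk, 3), round(cost, 3))
```

Plotting the objective vectors in `front` would give a (rough) version of the Pareto-front visualization mentioned above.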

X: Exogenous Uncertainties

Here, I simply list a few key parameters that could be treated as uncertain:

- pace of rapid capability gain (aka intelligence explosion)

- importance of hardware progress

- number of secret AGI labs

- initial willingness of public to push back

- strength of status quo bias

- probability of constant scaling laws

- improvement pace of effective compute

- growth of big labs' spending on compute

- required FLOP/s to run AGI

As you have probably noticed, these factors are rather abstract. To operationalize them properly, we would need to know the details of the model. In principle, any parameter that is not affected by another parameter in the model could be marked as an exogenous uncertainty; which parameters qualify depends on the model at hand. Once a parameter is properly operationalized, we can choose a plausible range for it. Together, the ranges of all uncertain variables span the uncertainty space, from which we can choose or sample scenarios.
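As a rough illustration of "sampling scenarios from the uncertainty space", the sketch below uses Latin hypercube sampling, one common choice for covering each variable's range evenly with relatively few runs. The variable names loosely echo the list above, but their operationalization, units, and ranges are hypothetical placeholders, not estimates.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical operationalizations of a few uncertainties from the list above;
# the names, units, and ranges are placeholders, not estimates.
UNCERTAINTIES: Dict[str, Tuple[float, float]] = {
    "capability_gain_pace": (0.5, 5.0),        # e.g., doublings per year
    "status_quo_bias": (0.0, 1.0),             # dimensionless
    "effective_compute_growth": (1.5, 10.0),   # e.g., growth factor per year
    "log10_flop_per_s_to_run_agi": (15.0, 20.0),
}


def latin_hypercube(ranges: Dict[str, Tuple[float, float]], n: int,
                    seed: int = 0) -> List[Dict[str, float]]:
    """Draw n scenarios so that each variable's range is split into n equal
    strata and every stratum is sampled exactly once."""
    rng = random.Random(seed)
    columns: Dict[str, List[float]] = {}
    for name, (low, high) in ranges.items():
        # One uniform draw inside each stratum, then shuffle the strata
        # independently per variable so the columns are randomly paired.
        unit = [(i + rng.random()) / n for i in range(n)]
        rng.shuffle(unit)
        columns[name] = [low + u * (high - low) for u in unit]
    return [{name: columns[name][i] for name in ranges} for i in range(n)]


scenarios = latin_hypercube(UNCERTAINTIES, n=1000)
print(scenarios[0])
```

Each resulting dictionary is one scenario, i.e., one point in the uncertainty space that can be fed into the model.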

L: Policy Levers

The other inputs to the model are the policy levers. Which levers are available depends on which actors are willing to follow your advice: their possible actions are the potential levers. Here, too, the selection of policy levers depends on the particular model and the problem being modeled, and it requires thorough research and clearer operationalization. Some ideas circulating in the AI governance community are listed below:

- various forms of compute governance[3]

- on-chip firmware to occasionally save snapshots of the neural network weights stored in device memory (e.g., restricted to particular actors; or related to some snapshot frequency)

- monitoring the chip supply chain (globally; or above threshold values, e.g., if an organization exceeds some level of spending, market cap, etc.)

- auditing regulation; consider various implementations of auditing (actor scope, focus on data, focus on algorithmic transparency, various forms of certification requirements, post-deployment monitoring, etc.)

- setting negative and positive incentive structures

- involving the general public to voice concerns (e.g., to change willingness to regulate)

- invest in AI alignment research

- persuade key players to advocate for international treaties

- promote safety research (e.g., through spending on safety campaigns)

- improve security at leading AI labs

- cutting off data access for big labs (e.g., through lawsuits)

- restrict labs from developing AGI through licenses[4]

It's imperative to remember that the policy levers we consider must be germane to our problem formulation, within our sphere of control, and concrete enough to be actionable. In the context of AI governance, this means focusing on mechanisms and regulations that can directly affect the design, development, deployment, and use of AI systems. While the range of potential interventions is vast, our model should home in on the aspects we can directly influence or that the stakeholders we represent or advise could feasibly manipulate. These could include regulations on AI transparency, safety research funding, or education programs for AI developers, among others. Every policy action considered needs to be tangible, practical, and precisely definable within the model. Broad, ill-defined, or inaccessible policy levers can lead to vague, non-actionable, or even misleading model results. Hence, in crafting our agent-based model for AI governance, the careful selection and specification of policy levers is of paramount importance.

It's crucial to underline that a policy isn't simply a single action but a cohesive set of measures, interwoven strands that together form a concerted strategy. When we speak of finding an optimal policy in the context of AI governance, we're not hunting for a silver bullet, a solitary action that will neatly resolve all our challenges. Instead, we're seeking a potent blend of policy levers, each tuned to yield the greatest collective impact. Think of it as orchestrating a symphony: each instrument plays its part, and when they're all in harmony, the result is far greater than the sum of its parts. The objective, then, is to discover the combinations of actions (their nature, their degree, their timing) that yield Pareto-optimal policy solutions. In other words, we aim to identify sets of actions that, taken together, strike trade-offs across our diverse goals and constraints such that no objective can be improved without worsening another. This holistic perspective is vital for governing something as complex and multifaceted as AI.
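One way to make "nature, degree, timing" concrete is to encode a policy as a mapping from lever names to an intensity and a start year, which can then be flattened into the numeric decision vector an optimizer searches over. The lever names, the 0-to-1 intensity scale, and the years below are hypothetical illustrations, not a proposed policy.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class LeverSetting:
    intensity: float  # degree: 0 = off, 1 = maximal (hypothetical scale)
    start_year: int   # timing: first year the lever is in force


@dataclass
class Policy:
    # nature: which levers are part of the policy
    levers: Dict[str, LeverSetting] = field(default_factory=dict)

    def active_levers(self, year: int) -> Dict[str, float]:
        """Intensity of every lever that is switched on in the given year."""
        return {name: s.intensity for name, s in self.levers.items()
                if s.intensity > 0 and year >= s.start_year}

    def to_vector(self, order: List[str]) -> List[float]:
        """Flatten into a fixed-length decision vector; absent levers map to zeros."""
        vec: List[float] = []
        for name in order:
            s = self.levers.get(name, LeverSetting(0.0, 0))
            vec.extend([s.intensity, float(s.start_year)])
        return vec


policy = Policy({
    "compute_monitoring": LeverSetting(0.8, 2025),
    "auditing_regulation": LeverSetting(0.5, 2027),
    "alignment_research_funding": LeverSetting(0.3, 2024),
})
print(policy.active_levers(2026))
# {'compute_monitoring': 0.8, 'alignment_research_funding': 0.3}
```

Searching over such vectors, rather than over single actions, is what lets an optimizer find combinations of levers that work well together.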

R: Relations

In the context of the XLRM framework applied to an ABM for AI governance, "Relations" refers to the established cause-and-effect linkages and interactions that govern the behavior of the model. These encapsulate the fundamental rules of the model and shape how the system components (agents, states, etc.) interact over time and in response to various actions and external factors. They can include mathematical formulas, decision-making algorithms, probabilistic dependencies, and other types of deterministic or stochastic relationships. In an AI governance model, these could represent a wide range of interactions, such as how AI developers respond to regulations, how AI systems' behaviors change in response to different inputs and environments, how public perception of AI evolves over time, and how different policies might interact with each other. By defining these relations, we can simulate the complex dynamics of the AI governance system under a variety of conditions and policy actions. This allows us to gain insights into potential futures and the impacts of different policy options, thus supporting more informed and robust decision-making in the face of deep uncertainty.
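To show what such relations can look like in code, here is a toy agent-based sketch combining a deterministic decision rule, a stochastic relation, and a feedback loop. Every rule and coefficient (the compliance rule, the 0.2 incident factor, the trust and stringency updates) is a hypothetical assumption chosen for illustration, not a calibrated model of AI developers, regulators, or the public.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Developer:
    risk_appetite: float  # hypothetical, in [0, 1)
    compliant: bool = True


@dataclass
class World:
    developers: List[Developer]
    stringency: float = 0.3    # regulator's strictness, in [0, 1]
    public_trust: float = 0.7  # in [0, 1]
    incidents: int = 0


def step(world: World, rng: random.Random) -> None:
    # Relation 1 (deterministic rule): a developer complies when regulatory
    # stringency is at least as high as its risk appetite.
    for dev in world.developers:
        dev.compliant = world.stringency >= dev.risk_appetite
    # Relation 2 (stochastic): each non-compliant developer causes an incident
    # with a probability proportional to its risk appetite.
    new_incidents = sum(
        1 for dev in world.developers
        if not dev.compliant and rng.random() < 0.2 * dev.risk_appetite
    )
    world.incidents += new_incidents
    # Relation 3: public trust falls with each incident and recovers slowly otherwise.
    world.public_trust = min(1.0, max(0.0, world.public_trust - 0.05 * new_incidents + 0.01))
    # Relation 4 (feedback loop): low trust pushes the regulator to tighten the rules,
    # which feeds back into Relation 1 on the next step.
    if world.public_trust < 0.5:
        world.stringency = min(1.0, world.stringency + 0.05)


def run(n_developers: int = 20, steps: int = 50, initial_stringency: float = 0.3,
        seed: int = 1) -> World:
    rng = random.Random(seed)
    world = World([Developer(rng.random()) for _ in range(n_developers)],
                  stringency=initial_stringency)
    for _ in range(steps):
        step(world, rng)
    return world


world = run()
print(f"incidents={world.incidents}  trust={world.public_trust:.2f}  stringency={world.stringency:.2f}")
```

Even in this toy, the macro-level outcome (how many incidents accumulate before regulation tightens) emerges from the interaction of the micro-level rules rather than being specified directly.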

Selecting appropriate relations for a model is a crucial and delicate task. It fundamentally shapes the model's behavior and its capacity to provide meaningful insights. When considering the integration of economic aspects into an ABM for AI governance, it would be tempting to adopt established macroeconomic theories, such as neoclassical economics. However, such an approach may not be best suited to the complex, dynamic, and deeply uncertain nature of AI governance. Consider the potential pitfalls of using something like Nordhaus' Dynamic Integrated Climate-Economy (DICE) model, which applies neoclassical economic principles to climate change economics. While the DICE model has made important contributions to our understanding of the economics of climate change, it has also been criticized for its oversimplifications and assumptions. For example, it assumes market equilibrium, perfect foresight, and rational, utility-maximizing agents, and it aggregates complex systems into just a few key variables. Now, if we were to integrate such a model with AI takeoff mechanisms, we could encounter a number of issues. For one, AI takeoff is a deeply uncertain and potentially rapid process, which might not align well with the DICE model's equilibrium assumptions and aggregated approach. Also, the model's assumption of perfect foresight and rational agents might not adequately reflect the behavior of stakeholders in the face of a fast-paced AI takeoff. It may ignore the potential for surprise, panic, or other non-rational responses, as well as the potential for unequal impacts or power dynamics. Such misalignments could lead to misleading results and poorly suited policy recommendations.

In contrast, an ABM that adopts principles from complexity economics could be a better choice. Complexity economics acknowledges the dynamic, out-of-equilibrium nature of economies, the heterogeneity of agents, and the importance of network effects and emergent (market) phenomena. It's more amenable to exploring complex, uncertain, and non-linear dynamics, such as those expected in AI governance and AI takeoff scenarios. As mentioned above, in an ABM for AI governance, we could model different types of agents (AI developers, regulators, public, AI systems themselves) with their own behaviors, objectives, and constraints, interacting in various ways. This could allow us to capture complex system dynamics, explore a wide range of scenarios, and test the impacts of various policy options in a more realistic and nuanced manner.

In conclusion, the selection of relations in a model is not a trivial task. It requires a deep understanding of the system being modeled, careful consideration of the model's purpose and scope, and a thoughtful balancing of realism, complexity, and computational feasibility. It's a task that demands not only technical expertise but also a good dose of humility, creativity, and critical thinking.

M: Performance Metrics

Performance metrics for AI governance could vary greatly depending on the specific context and purpose of the model. However, here are some potential metrics that might be broadly applicable:

- Safety of AI Systems: A measure of how frequently AI systems result in harmful outcomes, potentially weighted by the severity of harm.

- Equity: This could assess the distribution of benefits and harms from AI systems across different groups in society. It could consider factors like demographic disparities in impacts or access to AI benefits.

- Public Trust in AI: A measure of public confidence in AI systems and their governance. This could be modeled as a function of various factors like transparency, communication, and past performance of AI systems.

- AI Progress Rate: This could measure the pace of AI development and deployment, which might be influenced by various policies and conditions.

- Adherence to Ethical Guidelines: A measure of how well the behavior of AI systems and AI developers aligns with established ethical guidelines.

- Resilience to AI-Related Risks: This could assess the system's ability to withstand or recover from various potential AI-related risks, such as misuse of AI, AI accidents, or more catastrophic risks like a rapid, uncontrolled AI takeoff.

- Economic Impact: This could assess the economic effects of AI systems and their governance, such as impacts on jobs, economic productivity, or inequality.

- Compliance Costs: A measure of the costs associated with compliance with AI regulations, borne by both AI developers and regulators.

- Risk Reduction: This metric could assess the degree to which policies and measures effectively mitigate potential existential risks associated with AI.

- Rate of Safe AI Development: Measuring the pace of AI development that complies with established safety measures and guidelines.

- Compliance with Safety Protocols: This could assess the degree to which AI developers adhere to safety protocols, and how effectively these protocols are enforced.

- Resilience to AI Takeoff Scenarios: This would assess the system's ability to detect, respond to, and recover from potential rapid AI takeoff scenarios.

- International Collaboration on AI Safety: Since existential risk is a global concern, a metric evaluating the extent and effectiveness of international cooperation on AI safety could be important.

- Public Awareness of AI Risk: It could be beneficial to measure public understanding and awareness of the existential risks posed by AI, as this can impact policy support and adherence to safety guidelines.

- Effectiveness of Risk Containment Measures: Measuring the effectiveness of policies and strategies intended to prevent or mitigate AI-induced harm, like controls on AI-related resources or emergency response plans for potential takeoff scenarios.

- AI Behavior Alignment: Evaluating how closely AI behaviors align with human values and safety requirements, which is crucial to prevent AI systems from taking unintended and potentially harmful actions.

Remember, the key to effective performance metrics is to ensure that they align with the purpose of your model and accurately reflect the aspects of the system that you care most about. The specifics will depend on your particular context, purpose, and the nature of the AI governance system you are modeling.
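As a sketch of how two of the metrics above might be computed from model output, the code below defines a severity-weighted harm rate ("Safety of AI Systems") and a Gini coefficient over group benefits ("Equity"). Both operationalizations, the function names, and the example numbers are assumptions for illustration; a real model would need its own definitions.

```python
from typing import Dict, Sequence


def severity_weighted_harm_rate(severities: Sequence[float], n_deployments: int) -> float:
    """'Safety of AI Systems': total incident severity per deployment (lower is better)."""
    if n_deployments <= 0:
        raise ValueError("n_deployments must be positive")
    return sum(severities) / n_deployments


def gini(benefits: Sequence[float]) -> float:
    """'Equity': Gini coefficient of benefits across groups (0 = perfectly equal)."""
    xs = sorted(benefits)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Closed form for sorted, non-negative values x_1 <= ... <= x_n:
    # G = 2 * sum_i(i * x_i) / (n * sum_i x_i) - (n + 1) / n
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


def metric_vector(severities: Sequence[float], n_deployments: int,
                  benefits_by_group: Dict[str, float]) -> Dict[str, float]:
    # Keep the metrics separate rather than collapsing them into one score,
    # so an optimizer can trade them off explicitly.
    return {
        "harm_rate": severity_weighted_harm_rate(severities, n_deployments),
        "inequity": gini(list(benefits_by_group.values())),
    }


print(metric_vector([0.2, 1.0, 0.3], 100, {"group_a": 4.0, "group_b": 1.0, "group_c": 1.0}))
```

Returning a dictionary of separate metrics, instead of a weighted sum, keeps the trade-offs visible for the multi-objective optimization described earlier.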

3. Exploratory Analysis

With our agent-based model for AI governance now structured, our variables defined, and their relevant ranges established, we can proceed to the final, yet crucial, stage — the exploratory analysis. This step is what makes DMDU approaches so powerful; it allows us to navigate the vast landscape of possibilities and understand the space of outcomes across different policies and scenarios.

In exploratory analysis, rather than predicting a single future and optimizing for it, we explore a broad range of plausible future scenarios and analyze how various policy actions perform across these. The goal is to identify robust policies – those that perform well across a variety of possible futures, rather than merely optimizing for one presumed most likely future.

The Process in a Nutshell

Once we have initiated our large-scale, scenario-based exploration, the next critical step is identifying vulnerable scenarios. These are scenarios under which our system performs poorly on our various performance metrics. By examining these vulnerable scenarios, we gain insights into the conditions under which our proposed policies might falter. This understanding is essential in designing resilient systems capable of withstanding diverse circumstances. For each of these identified scenarios, we then use a process known as multi-objective optimization, often employing evolutionary algorithms, to find sets of policy actions that perform well. These algorithms iteratively generate, evaluate, and select policies, seeking those that offer the best trade-offs across our performance metrics. The aim here is not to find a single, 'best' policy but rather to identify a set of Pareto-optimal policies. These are policies for which no other policy exists that performs at least as well across all objectives and strictly better in at least one.
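The step of picking vulnerable scenarios can be sketched very simply: flag every run that misses a threshold on any metric and keep the worst few as reference scenarios for the subsequent optimization. This ranking by total shortfall is a simplified stand-in for dedicated scenario discovery methods such as PRIM; the metric names, thresholds, and "higher is better" convention are hypothetical assumptions.

```python
from typing import Dict, List


def shortfall(metrics: Dict[str, float], thresholds: Dict[str, float]) -> float:
    """Total amount by which a run misses its thresholds (metrics: higher is better)."""
    return sum(max(0.0, thresholds[m] - metrics[m]) for m in thresholds)


def select_vulnerable(runs: List[dict], thresholds: Dict[str, float], k: int = 3) -> List[dict]:
    """Return up to k failing scenarios, worst first, as reference scenarios for optimization."""
    failing = [r for r in runs if shortfall(r["metrics"], thresholds) > 0]
    failing.sort(key=lambda r: shortfall(r["metrics"], thresholds), reverse=True)
    return failing[:k]


# Hypothetical runs of a baseline (no-new-policy) model.
runs = [
    {"scenario": {"capability_gain_pace": 0.8}, "metrics": {"safety": 0.9, "public_trust": 0.7}},
    {"scenario": {"capability_gain_pace": 4.5}, "metrics": {"safety": 0.2, "public_trust": 0.3}},
    {"scenario": {"capability_gain_pace": 2.9}, "metrics": {"safety": 0.6, "public_trust": 0.4}},
]
for r in select_vulnerable(runs, thresholds={"safety": 0.7, "public_trust": 0.5}):
    print(r["scenario"])
```

Each selected scenario would then serve as the fixed setting under which a multi-objective optimizer searches for well-performing policies.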

However, due to the deep uncertainty inherent in AI governance, we cannot be sure that the future will unfold according to any of our specific scenarios, even those that we've identified as vulnerable. Therefore, we re-evaluate our set of Pareto-optimal policies across a much larger ensemble of scenarios, often running into the tens of thousands. This process allows us to understand how our policies perform under a wide variety of future conditions, effectively stress-testing them for robustness. In this context, a robust policy is one that performs satisfactorily across a wide range of plausible futures[5]. For further elaboration, see this section of my previous article.
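To illustrate what "robust" can mean numerically, the sketch below re-evaluates candidate policies across a shared set of scenarios and computes three common kinds of robustness measure: a satisficing score (share of scenarios meeting a threshold), the worst case (maximin), and maximum regret relative to the best policy in each scenario. The policy labels, single-metric setup, and numbers are hypothetical.

```python
from typing import Dict, List


def robustness(outcomes: Dict[str, List[float]], threshold: float) -> Dict[str, Dict[str, float]]:
    """outcomes[policy] holds one metric (higher is better) per scenario,
    with scenarios in the same order for every policy."""
    policies = list(outcomes)
    n = len(next(iter(outcomes.values())))
    best_per_scenario = [max(outcomes[p][s] for p in policies) for s in range(n)]
    report = {}
    for p in policies:
        values = outcomes[p]
        report[p] = {
            # Share of scenarios in which the policy performs satisfactorily.
            "satisficing": sum(v >= threshold for v in values) / n,
            # Performance in the worst scenario.
            "maximin": min(values),
            # Largest gap to the best available policy in any scenario.
            "max_regret": max(b - v for b, v in zip(best_per_scenario, values)),
        }
    return report


report = robustness({"A": [0.9, 0.2, 0.8], "B": [0.6, 0.55, 0.6]}, threshold=0.5)
for policy, scores in report.items():
    print(policy, {k: round(v, 2) for k, v in scores.items()})
```

In this toy example, policy A does best in two of three scenarios but collapses in the third, while B never excels yet always clears the threshold; under all three measures B comes out as the more robust choice.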

Overall, the goal of this exploratory analysis is to illuminate the landscape of potential outcomes, and in doing so, guide decision-makers towards policies that perform sufficiently well – even in particularly bleak scenarios. By applying these methods, we can navigate the deep uncertainties of AI governance with a more informed, resilient, and robust approach.

What's the Outcome of DMDU?

The culmination of the entire modeling and Decision-Making under Deep Uncertainty process is a suite of robust and adaptable policies and strategies for governing AI, designed to perform well under a wide range of plausible futures. The core deliverables of this process can be roughly divided into three categories: insights, policy options with their trade-offs, and tools for ongoing decision-making.

1. Insights: The process of employing DMDU methodologies within AI governance ushers in a wealth of insights that can profoundly affect the way we approach the field. These insights span three vital facets: system dynamics, key drivers of outcomes, and potential vulnerabilities.

- System Dynamics: A comprehensive exploration of varied scenarios under DMDU lays bare the intricate workings of the socio-technical system that is AI governance. Through modeling and analysis, we come to understand the complex interdependencies and feedback loops within the system, the hidden patterns that may not be immediately apparent, and how different elements of the system respond to changes and shocks. This understanding is crucial, not just in making sense of the present, but also in anticipating the future, enabling us to forecast potential trends and systemic shifts, and to plan accordingly. In the context of AI governance and the mitigation of existential risk, the system dynamics can be quite complex. For instance, we may examine the interaction between AI research and development (R&D) efforts and the level of regulation. Too little regulation might foster rapid progress but could also increase risk, whereas too stringent regulation might hamper innovation but reduce immediate hazards. Another critical dynamic might involve the international competition in AI technology. The race for supremacy in AI could potentially discourage cooperation and information sharing, thereby increasing the risk of uncontrolled AI development and deployment.

- Key Drivers of Outcomes: DMDU also brings to light the key variables that have the most significant influence on outcomes. It helps us identify critical leverage points, i.e., places within the system where a small intervention can lead to large-scale changes. By revealing these drivers, DMDU enables us to focus our efforts where they are likely to have the most significant impact. This understanding of outcome drivers is invaluable in formulating effective and targeted policy interventions. With respect to AI governance, the key drivers of outcomes could include the pace of AI R&D, the robustness of regulation, the level of international cooperation, and the availability of resources for risk mitigation efforts. For example, a rapid pace of R&D, especially if it outstrips the development of regulatory measures, could significantly increase the risk. On the other hand, strong international cooperation, leading to shared standards and concerted efforts to control risks, could be a significant factor in reducing the likelihood of adverse outcomes.

- Potential Vulnerabilities: Perhaps most importantly, DMDU allows us to identify potential vulnerabilities – areas where our policies and strategies might be susceptible to failure under certain conditions. By shedding light on these vulnerabilities, DMDU equips us to design more resilient strategies, capable of withstanding a broad range of future scenarios. It also enables us to anticipate potential threats and challenges, to take proactive measures to mitigate risks, and to put contingency plans in place. Potential vulnerabilities in the AI governance system might include weak points in regulatory frameworks, a lack of technical expertise in regulatory bodies, or insufficient investment in risk mitigation measures. For example, if regulations are unable to keep up with the pace of AI development, it could leave gaps that might be exploited, leading to increased risks. Alternatively, a sudden surge in AI capabilities, often referred to as a "hard takeoff", could catch policymakers and society at large off guard, with catastrophic consequences if not adequately prepared for.

2. Policy Options and Trade-offs: The DMDU process generates a set of Pareto-optimal policy solutions. These are distinct combinations of policy actions that have demonstrated satisfactory performance across a spectrum of scenarios. It's important to note that these are not prescriptive "recommendations" as such, but rather a diverse suite of potential solutions. As researchers or policy analysts, our role is not to dictate a single best policy, but to articulate the various options and their respective trade-offs. Each Pareto-optimal policy solution represents a unique balance of outcomes across different objectives. The inherent trade-offs between these policy solutions reflect the complex, multi-dimensional nature of AI governance. By effectively outlining these options and elucidating their respective strengths and weaknesses, we offer stakeholders a comprehensive overview of potential paths. Our goal is to facilitate informed decision-making by providing a clear, detailed map of the policy landscape. This output should instigate thoughtful deliberation among stakeholders, who can weigh the various trade-offs in light of their own values, priorities, and risk tolerance. Through such a process, the stakeholders are empowered to select and implement the policies that best align with their objectives, all the while having a clear understanding of the associated trade-offs. This approach supports a more democratic and inclusive decision-making process, ensuring that decisions about AI governance are informed, thoughtful, and responsive to a diverse range of needs and perspectives.

3. Tools for Ongoing Decision-Making: The enduring value of the DMDU process lies not only in the immediate insights it offers but also in the arsenal of tools it provides for sustainable decision-making. These tools, derived from the extensive modeling and data analysis conducted during the process, remain invaluable resources for continued policy evaluation and strategy adaptation.

- Policy Evaluation and 'What-if' Analysis: One of the most direct applications of these tools lies in their capacity to evaluate new policies. Given a proposed policy, we can deploy these tools to explore its potential outcomes under a variety of scenarios. This 'what-if' analysis is a powerful instrument for decision-makers, enabling them to anticipate the impacts of different policy alternatives before implementation and thereby reducing the scope for unpleasant surprises. Beyond this, these tools are also adept at 'stress-testing' existing policies. They allow us to simulate potential changes in conditions and understand how our current strategies might fare in the face of these changes. In essence, they offer a safe environment to gauge the potential weaknesses and strengths of our strategies under various circumstances, providing an opportunity for proactive policy refinement.

- Adaptive Strategy Development: As the field of AI governance is continuously evolving, the need for adaptable strategies is paramount. The DMDU tools play a crucial role in this aspect, serving as dynamic platforms that can incorporate new data and information as they become available. This flexibility facilitates the continuous updating and refining of our strategies, ensuring they remain aligned with the shifting landscape of AI governance. These tools are not static but are designed to evolve, learn, and adapt in tandem with the socio-technical system they aim to govern. They help create a responsive and resilient AI governance framework, one capable of addressing the inherent uncertainty and flux within the system, and adjusting to new developments, be they technological advancements, shifts in societal values, or changes in the regulatory environment.
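One concrete pattern for adaptive strategies, borrowed from the signpost-and-trigger idea used in adaptive planning approaches within DMDU, is to monitor indicators (signposts) and switch on predefined actions once an indicator crosses a threshold (trigger). The sketch below assumes hypothetical indicator names, thresholds, and actions, and a simple rule that triggered actions stay in place.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Trigger:
    signpost: str     # name of the monitored indicator (hypothetical)
    threshold: float  # the trigger fires when the indicator reaches this value
    action: str       # hypothetical lever to switch on when it fires


def adaptive_run(indicator_series: Dict[str, List[float]],
                 triggers: List[Trigger]) -> List[List[str]]:
    """Return, per time step, the actions active under a signpost-and-trigger plan."""
    n = len(next(iter(indicator_series.values())))
    active = set()
    history: List[List[str]] = []
    for t in range(n):
        for trig in triggers:
            if indicator_series[trig.signpost][t] >= trig.threshold:
                active.add(trig.action)  # once triggered, the action stays in place
        history.append(sorted(active))
    return history


series = {
    "frontier_training_flop_log10": [24, 25, 26, 27],
    "ai_incidents": [0, 1, 3, 2],
}
plan = [
    Trigger("frontier_training_flop_log10", 26, "mandatory_audits"),
    Trigger("ai_incidents", 3, "licensing"),
]
for t, actions in enumerate(adaptive_run(series, plan)):
    print(t, actions)
```

In a full analysis, the thresholds themselves would be decision variables, so the optimizer could search for trigger levels that keep the plan robust across scenarios.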

The potential impact of these deliverables in the world of AI governance is significant. Insights and policy options with trade-offs from the DMDU process can inform the development of more effective and resilient governance strategies, shape the debate around AI policy, and guide the actions of policymakers, industry leaders, and other stakeholders. The decision-support tools can enhance the capacity of these actors to make well-informed decisions in the face of uncertainty and change. Ultimately, by integrating DMDU methods into the field of AI governance, we can enhance our collective capacity to navigate the challenges and uncertainties of AI, mitigate potential risks, and harness the immense potential of AI in a way that aligns with our societal values and goals.
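The "Key Drivers of Outcomes" insight described earlier usually comes from some form of sensitivity analysis over the scenario ensemble. A minimal sketch, assuming we only want monotonic, one-variable-at-a-time influence, ranks uncertainties by the absolute Spearman rank correlation between each input and an outcome metric; richer approaches (e.g., variance-based indices or tree-based feature scoring) also capture interactions. The data below is synthetic and the variable names are hypothetical.

```python
import random
from typing import Dict, List, Tuple


def ranks(xs: List[float]) -> List[float]:
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    r = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def pearson(a: List[float], b: List[float]) -> float:
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5 if va > 0 and vb > 0 else 0.0


def rank_drivers(inputs: Dict[str, List[float]], outcome: List[float]) -> List[Tuple[str, float]]:
    """Rank uncertainties by |Spearman correlation| (Pearson on ranks) with an outcome."""
    out_r = ranks(outcome)
    scores = {name: pearson(ranks(vals), out_r) for name, vals in inputs.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Synthetic ensemble: risk driven mostly by R&D pace, partly offset by cooperation.
rng = random.Random(42)
n = 500
inputs = {name: [rng.random() for _ in range(n)]
          for name in ("rd_pace", "intl_cooperation", "hardware_progress")}
risk = [2.0 * p - 0.5 * c + 0.1 * rng.random()
        for p, c in zip(inputs["rd_pace"], inputs["intl_cooperation"])]
ranking = rank_drivers(inputs, risk)
for name, rho in ranking:
    print(f"{name:>18}: {rho:+.2f}")
```

The sign of each correlation indicates the direction of influence: here faster R&D raises risk, while more cooperation lowers it.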

Conclusion

In an era where AI technologies play a pivotal role in shaping our world, the necessity to recalibrate our approach to AI governance is paramount. This article seeks to underline the critical need for embracing innovative methodologies such as DMDU to tackle the intricacies and challenges this transformative technology brings.

DMDU methodology, with its emphasis on considering a broad spectrum of possible futures, encourages an AI-driven approach to AI governance that is inherently robust, adaptable, and inclusive. It's a methodology well-equipped to grapple with socio-technical complexities, the vast diversity of stakeholder values, deep uncertainties, and the need for robust, evolving policies – all core aspects of AI governance.

As we look ahead, we are faced with a choice. We can continue to navigate the terrain of AI governance with the existing tools and maps or we can reboot our journey, equipped with a new compass – DMDU and similar methodologies. The latter offers a means of moving forward that acknowledges and embraces the complexity and dynamism of AI and its governance.

Referencing back to the title, this 'rebooting' signifies a shift from traditional, deterministic models of governance to one that embraces uncertainty and complexity. It's an AI-driven approach to AI governance, where AI is not just the subject of governance but also a tool to better understand and navigate the complexities of governance itself. In a world growing more reliant on AI technologies, the application of methodologies like DMDU in AI governance might provide a fresh perspective. As stakeholders in AI governance – encompassing policy makers, AI developers, civil society organizations, and individual users – it may be beneficial to explore and consider the potential value of systems modeling and decision-making under deep uncertainty. Incorporating such approaches could potentially enhance our strategies and policies, making them more robust and adaptable. This could, in turn, better equip us to address the broad spectrum of dynamic and evolving challenges that AI presents.

In conclusion, rebooting AI governance is not just a call for an AI-driven approach to AI governance, but also an invitation to embrace the uncertainties, complexities, and opportunities that lie ahead. By adopting DMDU, we can ensure our path forward in AI governance is not just responsive to the needs of today, but resilient and adaptable enough to navigate the future. As we continue to traverse this dynamic landscape, let's commit to a future where AI is governed in a manner that's as innovative and forward-thinking as the technology itself.

- ^

For simplicity's sake, within the scope of this article, I refer to the combination of systems modeling AND decision-making under deep uncertainty (DMDU) as simply DMDU.

- ^

Further explanations on the terminology can be found in the introduction article.

- ^

As for example suggested in: Shavit, Y. (2023). What does it take to catch a Chinchilla? Verifying Rules on Large-Scale Neural Network Training via Compute Monitoring. arXiv preprint arXiv:2303.11341.

- ^

As recently promoted by Sam Altman at his congressional hearing.

- ^

There are more stringent definitions of robustness, which can depend on a plethora of factors, including how risk-averse our stakeholders are with respect to particular performance metrics. E.g., we might be extremely risk-averse when it comes to the variable number of casualties. For a good analysis of various robustness metrics, see McPhail, C., Maier, H. R., Kwakkel, J. H., Giuliani, M., Castelletti, A., & Westra, S. (2018). Robustness metrics: How are they calculated, when should they be used and why do they give different results?. Earth's Future, 6(2), 169-191.

This looks super interesting, thanks for posting! I especially appreciate the "How to apply" section

One thing I'm interested in is seeing how this actually looks in practice - specifying real exogenous uncertainties (e.g. about timelines, takeoff speeds, etc), policy levers (e.g. these ideas, different AI safety research agendas, etc), relations (e.g. between AI labs, governments, etc) and performance metrics (e.g "p(doom)", plus many of the sub-goals you outline). What are the conclusions? What would this imply about prioritization decisions? etc

I appreciate this would be super challenging, but if you are aware of any attempts to do it (even if using just a very basic, simplifying model), I'd be curious to hear how it's gone

Thank you for your thoughtful comment! You've highlighted some of the key aspects that I believe make this kind of AI governance model potentially valuable. I too am eager to see how these concepts would play out in practice.

Firstly, on specifying real exogenous uncertainties, I believe this is indeed a crucial part of this approach. As you rightly pointed out, uncertainties around AI development timelines, takeoff speeds, and others are quite significant. A robust AI governance framework should indeed have the ability to effectively incorporate these uncertainties.

Regarding policy levers, I agree that an in-depth understanding of different AI safety research agendas is essential. In my preliminary work, I have started exploring a variety of such agendas. The goal is not only to understand these different research directions but also to identify which might offer the most promise given different (but also especially bleak) future scenarios.

In terms of relations between different entities like AI labs, governments, etc., this is another important area I'm planning to look into. The nature of these relations can significantly impact the deployment and governance of AI, and we would need to develop models to help us better understand these dynamics.

Regarding performance metrics like p(doom), I'm very much in the early stages of defining and quantifying these. It's quite challenging because it requires balancing a number of competing factors. Still, I'm confident that our approach will eventually enable us to develop robust metrics for assessing different policy options. An interesting notion here is that p(doom) is quite an aggregated variable. The DMDU approach would give us the opportunity to work with a set of (independent) metrics that we can attempt to optimize simultaneously (think Pareto-optimality here).

As to the conclusions and the implications for prioritization decisions, it's hard to say before running optimizations or simulations, let alone before formally modeling. Given the nature of ABMs for example, we would expect emerging macro-behaviors and phenomena that arise from the defined micro-behaviors. Feedback and system dynamics are something to discover when running the model. That makes it very hard to predict what we would likely see. However, given that Epoch (and maybe others) are working on finding good policies themselves, we could include these policies into our models as well and check whether different modeling paradigms (ABMs in this case) yield similar results. Keep in mind that this would entail simply running the model with some policy inputs. There is no (multi-objective) optimization involved at this stage yet. The optimization in combination with subsets of vulnerable scenarios would add even more value to using models.

In terms of attempts to implement this approach, there is nothing out there. Previous modeling on AI governance has mostly focused on traditional modeling, e.g. (evolutionary) game theory and neoclassical macro-economic models (with e.g. Nordhaus' DICE). At the moment, there are simple first attempts to use complexity modeling for AI governance. More could be done. Beyond the pure modeling, decision-making under deep uncertainty as a framework has not been used for such purposes yet.

What I would love to see is more awareness of such methodologies, their power, and their potential usefulness. Ideally, some (existing or new) organization would get funding and pick up the challenge of creating such models and conducting the corresponding analyses. I strongly believe this could be very useful. For example, the Odyssean Institute (which I'm a part of as well) intends to apply this methodology (and more) to a wide range of problems. If dedicated funding were available for AI governance applications, I'm sure they would go for it.

Although this article might interest me, it is also >30 minutes long and it does not appear to have any kind of TL;DR, and the conclusion is too vague for me to sample test the article's insights. It is really important to provide such a summary. For example, for an article of this type and length, I’d really like to know things like:

Fair point! The article is very long. Given that this post is discussing less theory but rather teases an abstract application, it seemed originally hard to summarize. I updated the article and added a summary.