All of Tom Barnes🔸's Comments + Replies

I think some form of AI-assisted governance has great potential.

However, it seems like several of these ideas are (in theory) possible in some format today - yet in practice don't get adopted. E.g.

Enhancing epistemics and decision-making processes at the top levels of organizations, leading to more informed and rational strategies.

I think it's very hard to get even the most basic forms of good epistemic practices (e.g. putting probabilities on helpful, easy-to-forecast statements) embedded at the top levels of organizations (for standard moral maze-t...

Hi Arden, thanks for the comment

I think this was something that got lost-in-translation during the grant writeup process. In the grant evaluation doc this was written as:

I think [Richard's research] clearly fits into the kind of project that we want the EA community to be - [that output] feels pretty closely aligned to our “principles-first EA” vision

This is a fairly fuzzy view, but my impression is Richard's outputs will align with the takes in this post both by "fighting for EA to thrive long term" (increasing the quality of discussion around EA in the publ...

I thought it might be helpful for me to add my own thoughts, as a fund manager at EAIF (Note I'm speaking in a personal capacity, not on behalf of EA Funds or EV).

- Firstly, I'd like to apologise for my role in these mistakes. I was the Primary Investigator (PI) for Igor's application, and thus I share some responsibility here. Specifically as the PI, I should have (a) evaluated the application sooner, (b) reached a final recommendation sooner, and (c) been more responsive to communications after making a decision

- I did not make an initial decision until Novem

Specifically as the PI, I should have (a) evaluated the application sooner, (b) reached a final recommendation sooner, and (c) been more responsive to communications after making a decision

This comment feels to me like a temporarily embarrassed deadline-meeter, and I don't think that's realistic. The backlog is very understandable given your task and your staffing. I assume you're doing what you can on the staffing front, but even if that's resolved it's just a big task, and 3 weeks is a very ambitious timeline even with full staffing. Given that, it's n...

My boring answer would be to see details on our website. In terms of submission style, we say:

...

- We recommend that applicants take about 1–2 hours to write their applications. This does not include the time spent developing the plan and strategy for the project – we recommend thinking about those carefully prior to applying.

- Please keep your answers brief and ensure the total length of your responses does not exceed 10,000 characters. We recommend a total length of 2,000–5,000 characters.

- We recommend focusing on the substantive arguments in favour of your proj

Currently we don't have a process for retroactively evaluating EAIF grants. However, there are a couple of informal channels which can help to improve decision-making:

- We request that grantees fill out a short form detailing the impact of their grant after six months. These reports are both directly helpful for evaluating a future application from the grantee, and indirectly helpful at calibrating the "bang-for-your-buck" we should expect from different grant sizes for different projects

- When evaluating the renewal of a grant, we can compare the initial appli

Hey - I think it's important to clarify that EAIF is optimising for something fairly different from GiveWell (although we share the same broad aim):

- Specifically, GiveWell is optimising for lives saved in the next few years, under the constraint of health projects in LMICs, with a high probability of impact and fairly immediate / verifiable results.

- Meanwhile, EAIF is focused on a hits-based, low-certainty area, where the evidence base is weaker, grants have longer paths to impact, and the overarching goal is often unclear.

As such, a direct/equivalent c...

I think the premise of your question is roughly correct: I do think it's pretty hard to "help EA notice what it is important to work on", for a bunch of reasons:

- It could lead to new, unexpected directions which might be counterintuitive / controversial.

- It requires the community to have the psychological, financial and intellectual safety to identify / work on causes which may not be promising

- It needs a non-trivial number of people to engage with the result of exploration, and act upon it (including people who can direct substantial resources)

- It has a very lo

Good Question! We have discussed running RFP(s) to more directly support projects we'd like to see. First, though, I think we want to do some more strategic thinking about the direction we want EAIF to go in, and hence at this stage I think we are fairly unsure about which project types we'd like to see more of.

Caveats aside, I personally[1] would be pretty interested in:

- Macrostrategy / cause prioritization research. I think a substantial amount of intellectual progress was made in the 2000s / early 2010s from a constellation of different places (e.

Hey, good question!

Here's a crude rationale:

- Suppose that by donating $1k to an EAIF project, they get 1 new person to consider donating more effectively.

- This 1 new person pledges to give 1% of their salary to GiveWell's top charities, and they do this for the next 10 years.

- If they make (say) $50k / year, then over 10 years they will donate $5k to GiveWell charities.

- The net result is that a $1k donation to EAIF led to $5k donated to top GiveWell charities - or a dollar donated to EAIF goes 5x further than a GiveWell Top charity
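The arithmetic in the bullets above can be sketched in a few lines of Python. This is only an illustration of the crude rationale: the function and parameter names are hypothetical, and the inputs are the example figures from the bullets, not estimates of actual EAIF performance.

```python
def giving_multiplier(grant_usd, new_donors, salary_usd, pledge_fraction, years):
    """Dollars moved to GiveWell charities per dollar donated to the fund.

    Hypothetical helper: it assumes every new donor keeps the same salary
    and pledge for the whole period, with no discounting or drop-off.
    """
    donated_per_donor = salary_usd * pledge_fraction * years
    total_moved = new_donors * donated_per_donor
    return total_moved / grant_usd


# Example figures from the bullets: $1k grant, 1 new donor,
# $50k salary, 1% pledge, 10 years.
multiplier = giving_multiplier(
    grant_usd=1_000,
    new_donors=1,
    salary_usd=50_000,
    pledge_fraction=0.01,
    years=10,
)
print(f"{multiplier:.1f}x")
```

Changing any single input (e.g. the number of new donors per $1k, or how long the pledge lasts) scales the multiplier proportionally, which is why the result is so sensitive to those assumptions.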

Of cou...

I agree there's no single unified resource. Having said that, I found Richard Ngo's "five alignment clusters" pretty helpful for bucketing different groups & arguments together. Reposting below:

...

- MIRI cluster. Think that P(doom) is very high, based on intuitions about instrumental convergence, deceptive alignment, etc. Does work that's very different from mainstream ML. Central members: Eliezer Yudkowsky, Nate Soares.

- Structural risk cluster. Think that doom is more likely than not, but not for the same reasons as the MIRI cluster. Instead, this cluster f

I wrote the following on a draft of this post. For context, I currently do (very) part-time work at EAIF

Overall, I’m pretty excited to see EAIF orient to a principles-first EA. Despite recent challenges, I continue to believe that the EA community is doing something special and important, and is fundamentally worth fighting for. With this reorientation of EAIF, I hope we can get the EA community back to a strong position. I share many of the uncertainties listed - about whether this is a viable project, how EAIF will practically evaluate grants under this worldview, or if it’s even philosophically coherent. Nonetheless, I’m excited to see what can be done.

Is your claim "Impartial altruists with ~no credence on longtermism would have more impact donating to AI/GCRs over animals / global health"?

To my mind, this is the crux, because:

- If Yes, then I agree that it totally makes sense for non-longtermist EAs to donate to AI/GCRs

- If No, then I'm confused why one wouldn't donate to animals / global health instead?

[I use "donate" rather than "work on" because donations aren't sensitive to individual circumstances, e.g. personal fit. I'm also assuming impartiality because this seems core to EA to me, but of course one could donate / work on a topic for non-impartial/ non-EA reasons]

Mildly against the Longtermism --> GCR shift

Epistemic status: Pretty uncertain, somewhat rambly

TL;DR replacing longtermism with GCRs might get more resources to longtermist causes, but at the expense of non-GCR longtermist interventions and broader community epistemics

Over the last ~6 months I've noticed a general shift amongst EA orgs to focus less on reducing risks from AI, Bio, nukes, etc based on the logic of longtermism, and more based on Global Catastrophic Risks (GCRs) directly. Some data points on this:

- Open Phil renaming its EA Community Gro

Thanks for sharing this, Tom! I think this is an important topic, and I agree with some of the downsides you mention, and think they’re worth weighing highly; many of them are the kinds of things I was thinking of in this post of mine when I listed these anti-claims:

...Anti-claims

(I.e. claims I am not trying to make and actively disagree with)

- No one should be doing EA-qua-EA talent pipeline work

- I think we should try to keep this onramp strong. Even if all the above is pretty correct, I think the EA-first onramp will continue to appeal to lots of gr

Great post, Tom, thanks for writing!

One thought is that a GCR framing isn't the only alternative to longtermism. We could also talk about caring for future generations.

This has fewer of the problems you point out (e.g. differentiates between recoverable global catastrophes and existential catastrophes). To me, it has warm, positive associations. And it's pluralistic, connected to indigenous worldviews and environmentalist rhetoric.

Over the last ~6 months I've noticed a general shift amongst EA orgs to focus less on reducing risks from AI, Bio, nukes, etc based on the logic of longtermism, and more based on Global Catastrophic Risks (GCRs) directly... My guess is these changes are (almost entirely) driven by PR concerns about longtermism.

It seems worth flagging that whether these alternative approaches are better for PR (or outreach considered more broadly) seems very uncertain. I'm not aware of any empirical work directly assessing this even though it seems a clearly empirical...

One point that hasn't been mentioned: GCRs may be many, many orders of magnitude more likely than extinctions. For example, it's not hard to imagine a super deadly virus that kills 50% of the world's population, but a virus that manages to kill literally everyone, including people hiding out in bunkers, remote villages, and in Antarctica, doesn't make too much sense: if it was that lethal, it would probably burn out before reaching everyone.

The framing "PR concerns" makes it sound like all the people doing the actual work are (and will always be) longtermists, whereas the focus on GCR is just for the benefit of the broader public. This is not the case. For example, I work on technical AI safety, and I am not a longtermist. I expect there to be more people like me either already in the GCR community, or within the pool of potential contributors we want to attract. Hence, the reason to focus on GCR is building a broader coalition in a very tangible sense, not just some vague "PR".

Speaking personally, I have also perceived a move away from longtermism, and as someone who finds longtermism very compelling, this has been disappointing to see. I agree it has substantive implications on what we prioritise.

Speaking more on behalf of GWWC, where I am a researcher: our motivation for changing our cause area from “creating a better future” to “reducing global catastrophic risks” really was not based on PR. As shared here:

...We think of a “high-impact cause area” as a collection of causes that, for donors with a variety of values and start

I haven't yet decided, but it's likely that a majority of my donations will go to this year's donor lottery. I'm fairly convinced by the arguments in favour of donor lotteries [1, 2], and would encourage others to consider them if they're unsure where to give.

Having said that, lotteries have fewer fuzzies than donating directly, so I may separately give to some effective charities which I'm personally excited about.

Thanks - I just saw RP put out this post, which makes much the same point. Good to be cautious about interpreting these results!

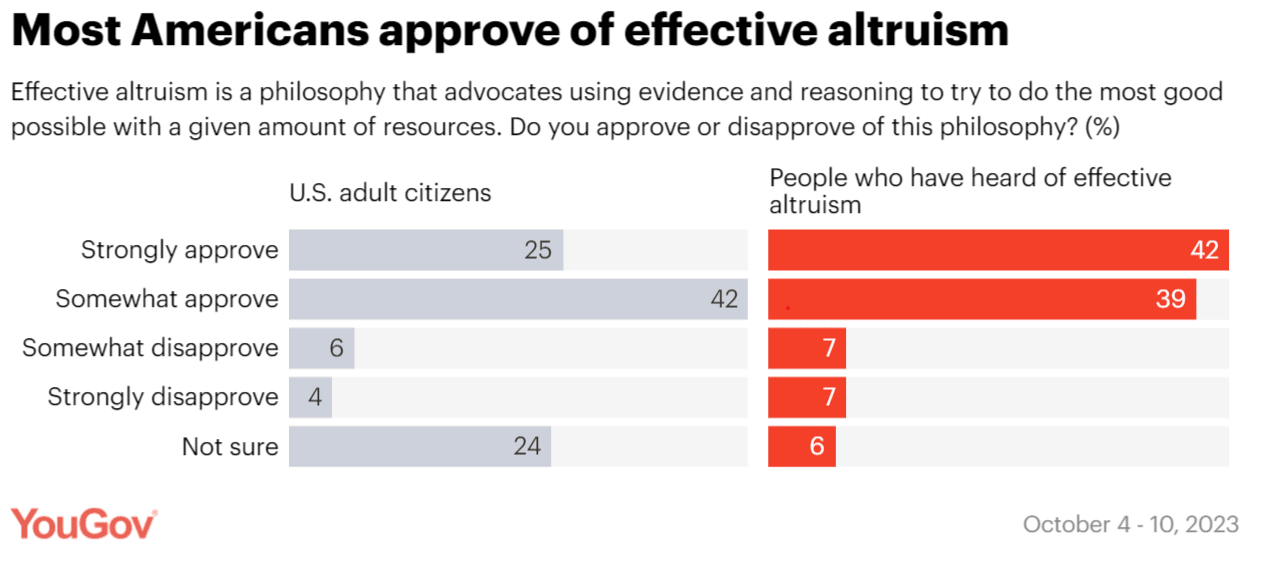

YouGov Poll on SBF and EA

I recently came across this article from YouGov (published last week), summarizing a survey of US citizens for their opinions on Sam Bankman-Fried, Cryptocurrency and Effective Altruism.

I half-expected the survey responses to be pretty negative about EA, given press coverage and potential priming effects associating SBF to EA. So I was positively surprised that:

(it's worth noting that there were only ~1000 participants, and the survey was online only)

I am very sceptical about the numbers presented in this article. 22% of US citizens have heard of Effective Altruism? That seems very high. RP did a survey in May 2022 and found that somewhere between 2.6% and 6.7% of the US population had heard of EA. Even then, my intuition was that this seemed high. Even with the FTX stuff it seems extremely unlikely that 22% of Americans have actually heard of EA.

(FYI to others - I've just seen Ajeya's very helpful writeup, which has already partially answered this question!)

Ten Project Ideas for AI X-Risk Prioritization

I made a list of 10 ideas I'd be excited for someone to tackle, within the broad problem of "how to prioritize resources within AI X-risk?" I won’t claim these projects are more / less valuable than other things people could be doing. However, I'd be excited if someone took a stab at them.

10 Ideas:

- Threat Model Prioritization

- Country Prioritization

- An Inside-view timelines model

- Modelling AI Lab deployment decisions

- How are resources currently allocated between risks / interventions / countries

- How to allocate

Thanks Ajeya, this is very helpful and clarifying!

I am the only person who is primarily focused on funding technical research projects ... I began making grants in November 2022

Does this mean that prior to November 2022 there were ~no full-time technical AI safety grantmakers at Open Philanthropy?

OP (prev. GiveWell Labs) has been evaluating grants in the AI safety space for over 10 years. In that time the AI safety field and Open Philanthropy have both grown, with OP granting over $300m on AI risk. Open Phil has also done a lot of research on the pro...

Following the episode with Mustafa, it would be great to interview the founders of leading AI labs - perhaps Dario (Anthropic) [again], Sam (OpenAI), or Demis (DeepMind). Or alternatively, the companies that invest / support them - Sundar (Google) or Satya (Microsoft).

It seems valuable to elicit their honest opinions[1] about "p(doom)", timelines, whether they believe they've been net-positive for the world, etc.

[1] I think one risk here is either:

a) not challenging them firmly enough - lending them undue credibility / legitimacy in the minds of listener

For deception (not deceptive alignment) - AI Deception: A Survey of Examples, Risks, and Potential Solutions (section 2)

This looks very exciting, thanks for posting!

I'll quickly mention a couple of things that stuck out to me that might make the CEA potentially overoptimistic:

- IQ points lost per μg/dl of lead - this is likely a logarithmic relationship (as suggested by Bernard and Schukraft). For a BLL of 2.4 - 10 μg/dl, IQ loss from 1 μg/dl increase may be close to 0.5, but above 10, it's closer to 0.2 per 1 μg/dl increase, and above 20, closer to 0.1. Average BLL in Bangladesh seems to be around 6.8 μg/dl, though amongst residents living near turmeric sources of lead, it co

It would be great to have some way to filter for multiple topics.

Example: Suppose I want to find posts related to the cost-effectiveness of AI safety. Instead of just filtering for "AI safety", or for just "Forecasting and estimation", I might want to find posts only at the intersection of those two. I attempted to do this by customizing my frontpage feed, but this doesn't really work (since it heavily biases to new/upvoted posts)

it relies primarily on heuristics like organiser track record and higher-level reasoning about plans.

I think this is mostly correct, with the caveat that we don't exclusively rely on qualitative factors and subjective judgement alone. The way I'd frame it is more as a spectrum between

[Heuristics] <------> [GiveWell-style cost-effectiveness modelling]

I think I'd place FP's longtermist evaluation methodology somewhere between those two poles, with flexibility based on what's feasible in each cause

I'll +1 everything Johannes has already said, and add that several people (including myself) have been chewing over the "how to rate longtermist projects" question for quite some time. I'm unsure when we will post something publicly, but I hope it won't be too far in the future.

If anyone is curious for details feel free to reach out!

This looks super interesting, thanks for posting! I especially appreciate the "How to apply" section

One thing I'm interested in is seeing how this actually looks in practice - specifying real exogenous uncertainties (e.g. about timelines, takeoff speeds, etc), policy levers (e.g. these ideas, different AI safety research agendas, etc), relations (e.g. between AI labs, governments, etc) and performance metrics (e.g. "p(doom)", plus many of the sub-goals you outline). What are the conclusions? What would this imply about prioritization decisions? etc

I appreci...

Should recent AI progress change the plans of people working on global health who are focused on economic outcomes?

I think so, see here or here for a bit more discussion on this

If you think that AI will go pretty well by default (which I think many neartermists do)

My guess/impression is that this just hasn't been discussed by neartermists very much (which I think is one sad side-effect from bucketing all AI stuff in a "longtermist" worldview)

Great question!

One can claim Gift Aid on a donation to the Patient Philanthropy Fund (PPF), e.g. if donating through Giving What We Can. So a basic rate taxpayer gets a 25% "return" on the initial donation (via Gift Aid). The fund can then be expected to make a financial return equivalent to an index fund (~10% p.a. for e.g. the S&P 500).

So, if you buy the claim that your expected impact will be 9x larger in 10 years than today, then a £1,000 donation today will have an expected (mean) impact of £11,250, for longtermist causes (£1,000 * 1.25 * 9)[1]
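As a rough sketch, the calculation above can be written out in Python. The function and parameter names are hypothetical; the 25% Gift Aid uplift and the 9x impact multiplier are simply the figures quoted in this comment, taken as given rather than derived.

```python
def expected_impact_gbp(donation_gbp, gift_aid_uplift, impact_multiplier):
    """Expected (mean) impact, in pounds of today's giving, of a PPF donation.

    Hypothetical helper: applies the Gift Aid uplift first, then the
    assumed multiplier on expected impact after the holding period.
    """
    return donation_gbp * (1 + gift_aid_uplift) * impact_multiplier


# Figures from the comment: a £1,000 donation, 25% Gift Aid for a
# basic rate taxpayer, and an assumed 9x expected impact in 10 years.
print(expected_impact_gbp(1_000, 0.25, 9))  # 11250.0
```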

Th...

I think this could be an interesting avenue to explore. One very basic way to (very roughly) do this is to model p(doom) effectively as a discount rate. This could be an additional user input on GiveWell's spreadsheets.

So for example, if your p(doom) is 20% in 20 years, then you could increase the discount rate by roughly 1% per year

[Technically this will be somewhat off since (I'm guessing) most people's p(doom) doesn't increase at a constant rate, in the way a fixed discount rate does.]
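A minimal sketch of that conversion, assuming (as the caveat notes) a constant annual risk, which is a simplification. The function names are hypothetical and nothing here reflects how GiveWell's spreadsheets are actually structured.

```python
def annual_hazard(p_doom, years):
    """Constant yearly probability h such that 1 - (1 - h)**years == p_doom."""
    return 1 - (1 - p_doom) ** (1 / years)


def survival_discount_factor(p_doom, years, t):
    """Weight on a benefit arriving in year t, given the constant hazard above."""
    h = annual_hazard(p_doom, years)
    return (1 - h) ** t


# Example from the comment: p(doom) of 20% over 20 years.
h = annual_hazard(0.20, 20)
print(f"extra discount rate: {h:.2%} per year")
print(f"weight on year-10 benefits: {survival_discount_factor(0.20, 20, 10):.3f}")
```

Under these assumptions the implied rate comes out slightly above 1% per year, consistent with the "roughly 1%" figure above.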

Rob Bensinger of MIRI tweets:

...I'm happy to say that MIRI leadership thinks "humanity never builds AGI" would be the worst catastrophe in history, would cost nearly all of the future's value, and is basically just unacceptably bad as an option.

Just to add that the Research Institute for Future Design (RIFD) is a Founders Pledge recommendation for longtermist institutional reform

(disclaimer: I am a researcher at Founders Pledge)

OpenPhil might be in a position to expand EA’s expected impact if it added a cause area that allowed for more speculative investments in Global Health & Development.

My impression is that Open Philanthropy's Global Health and Development team already does this? For example, OP has focus areas on Global aid policy, Scientific research and South Asian air quality, areas which are inherently risky/uncertain.

They have also taken a hits-based approach philosophically, and this is what distinguishes them from GiveWell - see e.g.

...Hits. We are explicitly purs

GiveWell have looked into Global Health regulation - see more here: https://www.givewell.org/research/public-health-regulation-update-August-2021

Thanks for writing this Lizka! I agree with many of the points in this [I was also a visiting fellow on the longtermist team this summer]. I'll throw my two cents in about my own reflections (I broadly share Lizka's experience, so here I just highlight the upsides/downsides that especially resonated with me, or things unique to my own situation):

Vague background:

- Finished BSc in PPE this June

- No EA research experience and very little academic research experience

- Introduced to EA in 2019

Upsides:

- Work in areas that are intellectually stimulating

This is super helpful, thank you!

Which departments/roles do you think are most important to work in from an EA perspective? The Cabinet Office, HM Treasury and FCDO seem particularly impactful, but are also the most crowded and competitive. Are there lesser known departments doing neglected but important work? (e.g. my impression is DEFRA would be this for animal welfare policy - are there similar opportunities in other cause areas?). Thanks!

I'm pretty confident (~80-90%?) this is true, for reasons well summarized here.

I'm interested in thoughts on the OOM difference between animal welfare vs GHD (i.e. would $100m to animal welfare be 2x better than GHD, or 2000x?)