Austin

Bio

Hey there, I'm Austin, currently running https://manifund.org. Always happy to meet people; reach out at akrolsmir@gmail.com!

Posts 43

Comments 241

To be clear, "10 new OpenPhils" is trying to convey, like, a gestalt or a vibe: how I expect the feeling of working within EA causes to change, rather than a rigorous point estimate.

Though, I'd be willing to bet at even odds on something like "yearly EA giving exceeds $10B by end of 2031", which is about 10x the largest year per https://forum.effectivealtruism.org/posts/NWHb4nsnXRxDDFGLy/historical-ea-funding-data-2025-update.

Some factors that could raise giving estimates:

- The 3:1 match

- If "6%" is more like "15%"

- Future growth of Anthropic stock

- Differing priorities and timelines (ie focus on TAI) among Ants

Also, the Anthropic situation seems like it'll be different than Dustin in that the number of individual donors ("principals") goes up a lot - which I'm guessing leads to more grants at smaller sizes, rather than OpenPhil's (relatively) few, giant grants

Appreciate the shoutout! Some thoughts:

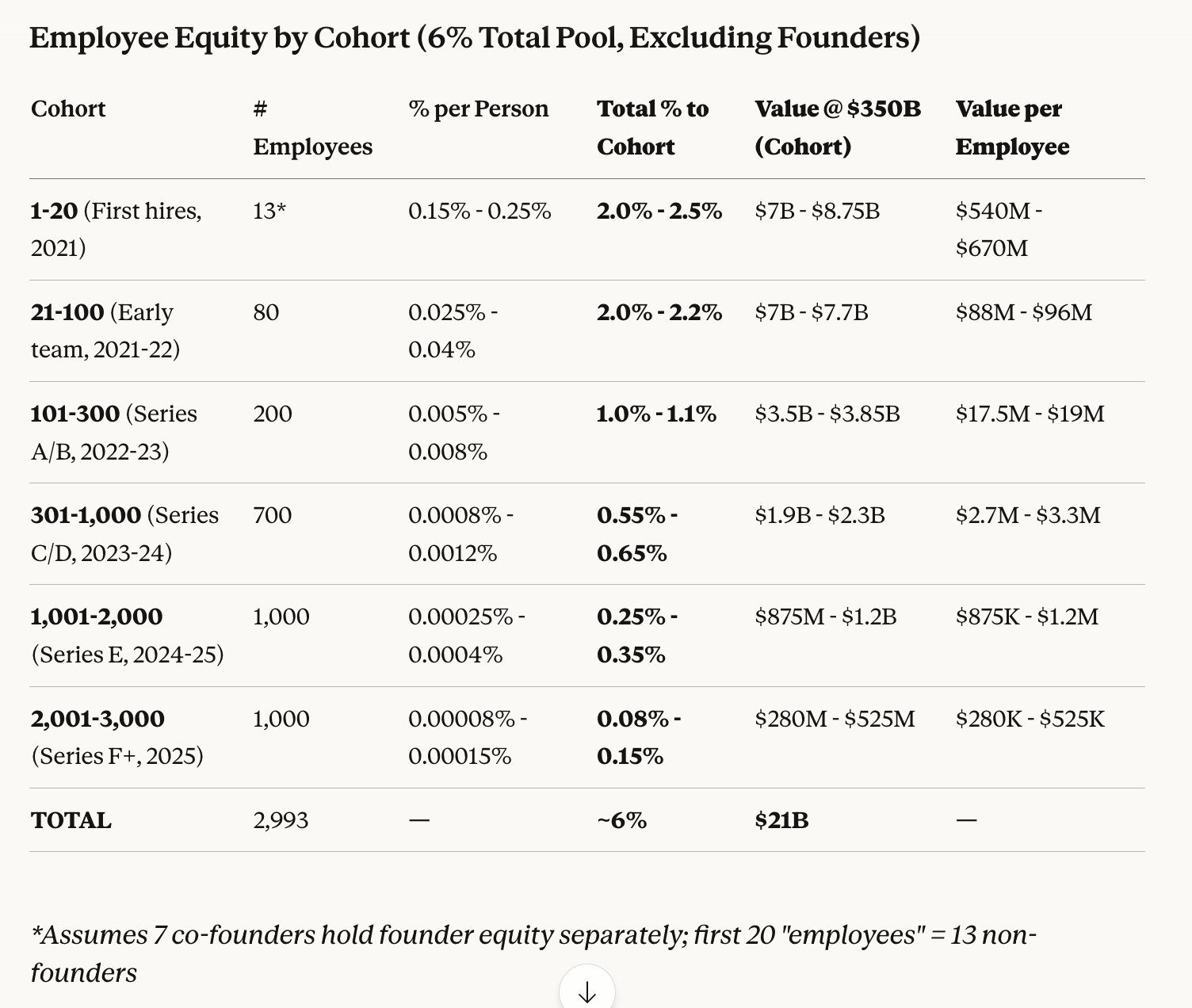

- Anthropic was recently valued at $350B; if we estimate that eg 6% of that is in the form of equity allocated to employees, that's $21B among the ~3000 employees they currently have, or an average of $7M/employee.

- I think 6% is somewhat conservative and wouldn't be surprised if it were more like 12-20%

- Early employees have much (OOMs) more equity than new hires. Here's one estimate generated by Claude and me:

- Even after discounting for standard vesting terms (4 years), % of EAs, and % allocated to charity, that's still a mind-boggling amount of money. I'd guess that this is more like "10 new OpenPhils in the next 2-6 years"

- I heard about the IPO rumors at the same time as everyone else (ie very recently), but for the last 6 months or so, the expectation was that Anthropic might have a ~yearly liquidity event, where Anthropic or some other buyer buys back employee stock up to some cap ($2m was thrown around as a figure)

- As reported in other places, early Anthropic employees were offered a 3:1 match of donations of equity, iirc up to 50% of their total stock grant? New employees now are offered 1:1 match, but the 3:1 holds for the early ones (though not cofounders)
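For anyone who wants to poke at the back-of-envelope numbers above, here's a minimal sketch of the arithmetic. The valuation ($350B), headcount (~3000), and the 6% / 12% / 15% / 20% employee shares are the rough guesses from these comments, not confirmed figures; the function name `equity_estimate` is just something made up for illustration. It only computes a simple mean, so it ignores the skew toward early employees and doesn't apply any discount for vesting, % of EAs, or % allocated to charity.

```python
def equity_estimate(valuation_usd, employee_share, headcount):
    """Return (employee equity pool, mean equity per employee) in USD.

    All inputs are rough guesses from the discussion, not confirmed data.
    """
    pool = valuation_usd * employee_share
    return pool, pool / headcount


VALUATION_USD = 350e9  # assumed recent valuation
HEADCOUNT = 3000       # assumed approximate current headcount

# Sweep the employee-share guesses mentioned in the comments.
for share in (0.06, 0.12, 0.15, 0.20):
    pool, mean_per_employee = equity_estimate(VALUATION_USD, share, HEADCOUNT)
    print(
        f"{share:.0%} employee share: "
        f"pool ${pool / 1e9:.1f}B, "
        f"mean ${mean_per_employee / 1e6:.1f}M per employee"
    )
```

The 6% row reproduces the $21B pool and $7M average quoted above; the other rows just show how directly the totals scale with the assumed share.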

For the $1m estimate, I think the figures were intended to include estimated opportunity cost foregone (eg when self-funding), and Marcus ballparked it at $100k/y * 10 years? But this is obviously a tricky calculation.

tbh, I would have assumed that the $300k through LTFF was not your primary source of funding -- it's awesome that you've produced your videos on relatively low budgets! (and maybe we should work on getting you more funding, haha)

Thanks for the thoughtful replies, here and elsewhere!

- Very much appreciate data corrections! I think medium-to-long term, our goal is to have this info in some kind of database where anyone can suggest data corrections or upload their own numbers, like Wikipedia or GitHub

- Tentatively, I think paid advertising is reasonable to include. Maybe more creators should be buying ads! So long as you're getting exposure in a cost-effective way and reaching equivalently-good people, I think "spend money/effort to create content" and "spend money/effort to distribute content" are both very reasonable interventions

- I don't have strong takes on quality weightings -- Marcus is much more of a video junkie than me, and has spent the last couple weeks with these videos playing constantly, so I'll let him weigh in. But broadly I do expect people to have very different takes on quality -- I'm not expecting people to agree on quality, but rather want people to have the chance to put down their own estimates. (I'm curious to see your takes on all the other channels too!)

- Sorry if we didn't include your feedback in the first post -- I think the nature of this project is that waiting for feedback from everyone is going to delay our output by too much, and we're aiming to post often and wait for corrections in public, mostly because we're extremely bandwidth constrained (running this on something like 0.25 FTE between Marcus and me)

Hey Liron! I think growth in viewership is a key reason to start and continue projects like Doom Debates. I think we're still pretty early in the AI safety discourse, and the "market" should grow, along with all of these channels.

I also think that there are many credible sources of impact other than raw viewership - for example, I think you interviewing Vitalik is great, because it legitimizes the field, puts his views on the record, and gives him space to reflect on what actions to take - even if not that many people end up seeing the video. (compare irl talks from speakers eg at Manifest - far fewer viewers per talk but the theory of impact is somewhat different)

I have some intuitions in that direction (ie for a given individual's exposure to a topic, the first minute is more valuable than the 100th), and that would be the case for supporting things like TikToks.

I'd love to get some estimates on what the drop-off in value looks like! It might be tricky to actually apply - we/creators have individual video view counts and lengths, but no data on uniqueness of viewer (both for a single video and across different videos on the same channel, which I'd think should count as cumulative)

The drop-off might be less than your example suggests - it's actually very unclear to me which of those 2 I'd prefer.

AI safety videos can have impact by:

- Introducing people to a topic

- Convincing people to take an action (change careers, donate)

- Providing info to people working in the field

And shortform does relatively better on the first and worse on the second and third, imo.

Thanks for the post! It seems like CEA and EA Funds are the only entities left housed under EV (per the EV website); if that's the case, why bother spinning out at all?