christian.r

Bio

Biosecurity at Coefficient Giving. Previously GCR Lead at Founders Pledge.


It's worth separating two issues:

- MacArthur's longstanding nuclear grantmaking program as a whole

- MacArthur's late 2010s focus on weapons-usable nuclear material specifically

The Foundation had long been a major funder in the field and made some great grants, e.g. providing support to the programs that ultimately resulted in the Nunn-Lugar Act and Cooperative Threat Reduction (see Ben Soskis's report). Over the last few years of this program, the Foundation decided to make a "big bet" on "political and technical solutions that reduce the world’s reliance on highly enriched uranium and plutonium" (see this 2016 press release), while still providing core support to many organizations. The fissile materials focus turned out to be badly timed, given Trump's 2018 withdrawal from the JCPOA and other issues. MacArthur commissioned an external impact evaluation, which concluded that "there is not a clear line of sight to the existing theory of change’s intermediate and long-term outcomes" on the fissile materials strategy. That conclusion applied only to the fissile materials strategy, not to general nuclear security grantmaking ("Evaluation efforts were not intended as an assessment of the wider nuclear field nor grantees’ efforts, generally. Broader interpretation or application of findings is a misuse of this report.").

Often comments like the ones Sanjay outlined above (e.g. "after throwing a lot of good money after bad, they had not seen strong enough impact for the money invested") refer specifically to the evaluation report of the fissile materials focus.

My understanding is that the Foundation's withdrawal from the field as a whole (not just the fissile materials bet of the late 2010s) coincided with this, but was ultimately driven by internal organizational politics and shifting priorities, not impact.

I agree with Sanjay that "some 'creative destruction' might be a positive," but I think that this actually makes it a great time to help shape grantees' priorities to refocus the field's efforts back on GCR-level threats, major war between the great powers, etc. rather than nonproliferation.

I think the 80K profile notes (in a footnote) that their $1-10 billion guess includes many different kinds of government spending. I would guess it includes things like nonproliferation programs and fissile materials security, nuclear reactor safety, and probably the maintenance of parts of the nuclear weapons enterprise -- much of it at best tangentially related to preventing nuclear war.

So I think the number is a bit misleading (not unlike adding up AI ethics spending and AI capabilities spending and concluding that AI safety is not neglected). You can look at the single biggest grant under "nuclear issues" in the Peace and Security Funding Index (admittedly an imperfect database): it's the U.S. Overseas Private Investment Corporation (a former government funder) paying for spent nuclear fuel storage in Maryland...

A way to get at a better estimate of non-philanthropic spending might be to go through relevant parts of the State International Affairs Budget, the Bureau of Arms Control, Deterrence and Stability (ADS, formerly Arms Control, Verification, and Compliance), some DoD entities (like DTRA), and a small handful of others, add those up, and add some uncertainty around your estimates. You would get a much lower number (the Arms Control, Verification, and Compliance budget was only $31.2 million in FY 2013 according to Wikipedia; I don't have time to dive into more recent numbers right now).

All of which is to say that I think Ben's observation that "nuclear security is getting almost no funding" is true in some sense both for funders focused on extreme risks (where Founders Pledge and Longview are the only ones) and for the field in general.

Just to clarify:

- MacArthur Foundation has left the field with a big funding shortfall

- Carnegie Corporation is a funder that continues to support some nuclear security work

- Carnegie Endowment is a think tank with a nuclear security program

- Carnegie Foundation is an education nonprofit unrelated to nuclear security

FWIW, @Rosie_Bettle and I also found this surprising and intriguing when looking into far-UVC, and ended up recommending that philanthropists focus more on "wavelength-agnostic" interventions (e.g. policy advocacy for GUV generally).

Thanks for writing this! I like the post a lot. This heuristic is one of the criteria we use to evaluate bio charities at Founders Pledge (see the "Prioritize Pathogen- and Threat-Agnostic Approaches" section starting on p. 87 of my Founders Pledge bio report).

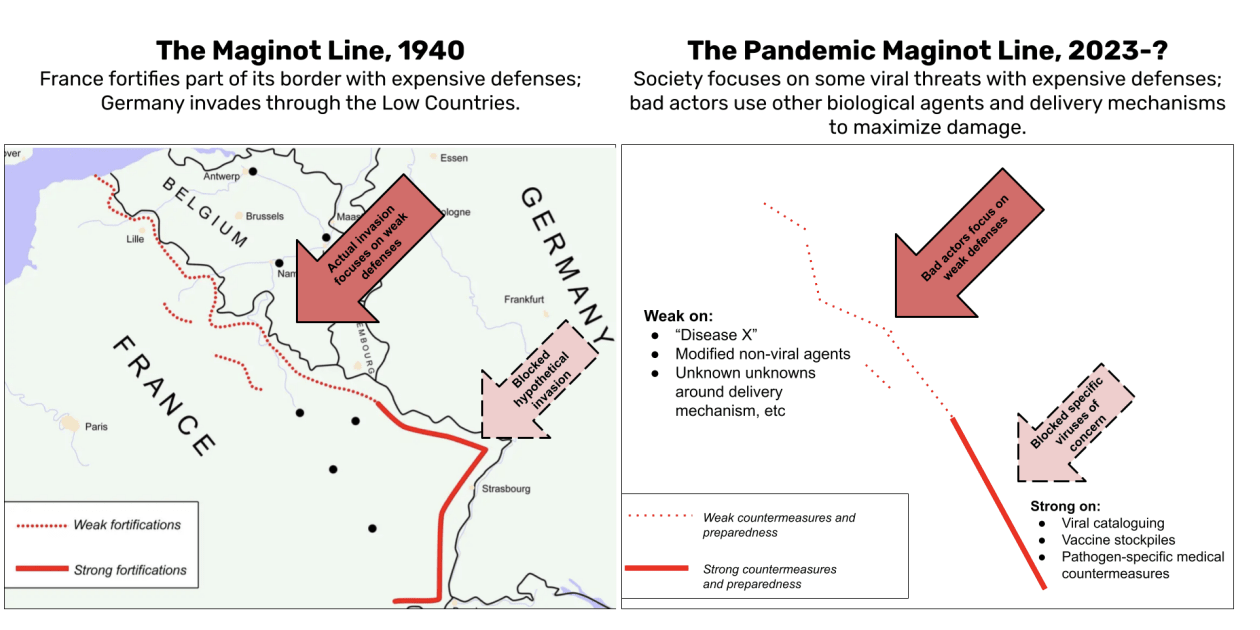

One reason that I didn't see listed as one of your premises is just the general point about hedging against uncertainty: we're just very uncertain about what a future pandemic might look like and where it will come from, and the threat landscape only becomes more complex with technological advances and intelligent adversaries. One person I talked to for that report said they're especially worried about "pandemic Maginot lines":

I also like the deterrence-by-denial argument that you make...

[broad defenses] might also act as a deterrent because malevolent actors might think: "It doesn't even make sense to try this bioterrorist attack because the broad & passive defense system is so good that it will stop it anyways"

... though I think for it to work you have to also add a premise about the relative risk of substitution, right? I.e. if you're pushing bad actors away from BW, what are you pushing them towards, and how does the risk of that new choice of weapon compare to the risk of BW? I think most likely substitutions (e.g. chem-for-bio substitution, as with Aum Shinrikyo) do seem like they would decrease overall risk.

An interesting quote relevant to bio attention hazards from an old CNAS report on Aum Shinrikyo:

"This unbroken string of failures with botulinum and anthrax eventually convinced the group that making biological weapons was more difficult than Endo [Seiichi Endo, who ran the BW program] was acknowledging. Asahara [Shoko Asahara, the founder/leader of the group] speculated that American comments on the risk of biological weapons were intended to delude would-be terrorists into pursuing this path."

Footnote source in the report: "Interview with Fumihiro Joyu (21 April 2008)."

Thank you for drawing attention to this funding gap! I really appreciate it.