All of Habryka [Deactivated]'s Comments + Replies

So long and thanks for all the fish.

I am deactivating my account.[1] My unfortunate best guess is that at this point there is little point and at least a bit of harm caused by me commenting more on the EA Forum. I am sad to leave behind so much that I have helped build and create, and even sadder to see my own actions indirectly contribute to much harm.

I think many people on the forum are great, and at many points in time this forum was one of the best places for thinking and talking and learning about many of the world's most important top...

It feels appropriate that this post has a lot of hearts and simultaneously disagree reacts. We will miss you, even (perhaps especially) those of us who often disagreed with you.

I would love to reflect with you on the other side of the singularity. If we make it through alive, I think there's a decent chance that it will be in part thanks to your work.

Habryka, just wanted to say thank you for your contributions to the Forum. Overall I've appreciated them a lot! I'm happy that we'll continue to collaborate behind the scenes, at least because I think there's still plenty I can learn from you. I think we agree that running the Forum is a big responsibility, so I hope you feel free to share your honest thoughts with me.

I do think we disagree on some points. For example, you seem significantly more negative about CEA than I am (I'm probably biased because I work there, though I certainly don't think it's per...

(I think this level of brazenness is an exception, the broader thing has I think occurred many dozens of times. My best guess, though I know of no specific example, is that probably as a result of the FTX stuff, many EA organizations changed websites and made requests to delete references from archives, in order to lower their association with FTX)

> Risk 1: Charities could alter, conceal, fabricate and/or destroy evidence to cover their tracks.

I do not recall this having happened with organisations aligned with effective altruism.

(FWIW, it happened with Leverage Research at multiple points in time, with active effort to remove various pieces of evidence from all available web archives. My best guess is it also happened with early CEA while I worked there, because many Leverage members worked at CEA at the time and they considered this relatively common practice. My best guess is you can find many other instances.)

At one point CEA released a doctored EAG photo with a "Leverage Research" sign edited to be bizarrely blank. (Archive page with doctored photo, original photo.) I assume this was an effort to bury their Leverage association after the fact.

Now, consider this in the context of AI. Would the extinction of humanity by AIs be much worse than the natural generational cycle of human replacement?

I think the answer to this is "yes", because your shared genetics and culture create much more robust pointers to your values than we are likely to get with AI.

Additionally, even if that wasn't true, humans alive at present have obligations inherited from the past and relatedly obligations to the future. We have contracts and inheritance principles and various things that extend our moral circle of c...

Yeah, this.

From my perspective "caring about anything but human values" doesn't make any sense. Of course, even more specifically, "caring about anything but my own values" also doesn't make sense, but in as much as you are talking to humans, and making arguments about what other humans should do, you have to ground that in their values and so it makes sense to talk about "human values".

The AIs will not share the pointer to these values, in the same way as every individual does to their own values, and so we should a-priori assume the AI will do worse things after we transfer all the power from the humans to the AIs.

In the absence of meaningful evidence about the nature of AI civilization, what justification is there for assuming that it will have less moral value than human civilization—other than a speciesist bias?

You know these arguments! You have heard them hundreds of times. Humans care about many things. Sometimes we collapse that into caring about experience for simplicity.

AIs will probably not care about the same things; as such, the universe will be worse by our lights if controlled by AI civilizations. We don't know what exactly those things are, but the only pointer to our values that we have is ourselves, and AIs will not share those pointers.

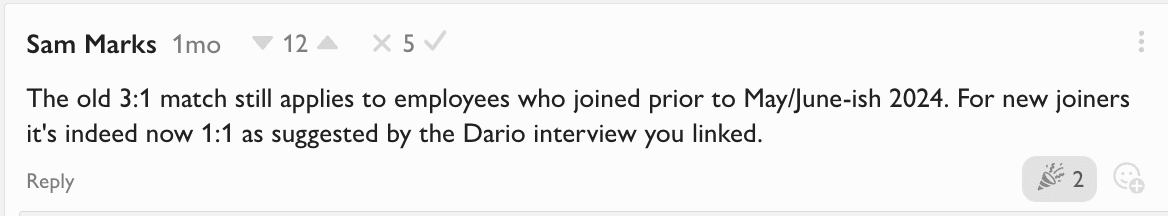

It's been confirmed that the donation matching still applies to early employees: https://www.lesswrong.com/posts/HE3Styo9vpk7m8zi4/evhub-s-shortform?commentId=oeXHdxZixbc7wwqna

Given that I just got a notification for someone disagree-voting on this:

This is definitely no longer the case in the current EA Funding landscape. It used to be the case, but various changes in the memetic and political landscape have made funding gaps much stickier, and much less anti-inductive (mostly because cost-effectiveness prioritization of the big funders got a lot less comprehensive, so there is low-hanging fruit again).

...I’m not making any claims about whether the thresholds above are sensible, or whether it was wise for them to be suggested when they were. I do think it seems clear with hindsight that some of them are unworkably low. But again, advocating that AI development be regulated at a certain level is not the same as predicting with certainty that it would be catastrophic not to. I often feel that taking action to mitigate low probabilities of very severe harm, otherwise known as “erring on the side of caution” somehow becomes a foreign concept in discussions of A

You're welcome, and makes sense. And yeah, I knew there was a period where ARC avoided getting OP funding for COI reasons, so I was extrapolating from that to not having received funding at all, but it does seem like OP had still funded ARC back in 2022.

Thanks! This does seem helpful.

One random question/possible correction:

Is Kelsey an OpenPhil grantee or employee? Future Perfect never listed OpenPhil as one of its funders, so I am a bit surprised. Possibly Kelsey received some other OP grants, but I had a bit of a sense Kelsey and Future Perfect more generally cared about having financial independence from OP.

Relatedly, is Eric Neyman an Open Phil grantee or employee? I thought ARC was not being funded by OP either. Again, maybe he is a grantee for o...

(I am somewhat sympathetic to this request, but really, I don't think posts on the EA Forum should be that narrow in their scope. Clearly modeling important society-wide dynamics is useful to the broader EA mission. To do the most good you need to model societies and how people coordinate and such. Those things to me seem much more useful than the marginal random fact about factory farming or malaria nets)

I don't think this is true, or at least I think you are misrepresenting the tradeoffs and diversity here. There is some publication bias here because people are more precise in papers, but honestly, scientists are also not more precise than many top LW posts in the discussion section of their papers, especially when covering wider-ranging topics.

Predictive coding papers use language incredibly imprecisely, analytic philosophy often uses words in really confusing and inconsistent ways, economists (especially macroeconomists) throw out various terms in...

Developing "contextual awareness" does not require some special grounding insight (i.e. training systems to be general purpose problem solvers naturally causes them to optimize themselves and their environment and become aware of their context, etc.). This was back in 2020, 2021, 2022 one of the recurring disagreements between me and many ML people.

Sure, I don't think it makes a difference whether the chicken grows to a bigger size in total, or grows to a bigger size more quickly, both would establish a prior that you need fewer years of chicken-suffering for the same amount of meat, and as such that this would be good (barring other considerations).

No, those are two totally separate types of considerations? In one you are directly aiming to work against the goals of someone else in a zero-sum fashion, the other one is just a normal prediction about what will actually happen?

You really should have very different norms about how you are dealing with adversarial considerations and how you are dealing with normal causal/environmental considerations. I don't care about calling them "vanilla" or not, I think we should generally have a high prior against arguments of the form "X is bad, Y is hurting X, therefore Y is good".

Huh, yeah, seems like a loss to me.

Correspondingly, while the OP does not engage in "literally lying" I think sentences like "In light of this ruling, we believe that farmers are breaking the law if they continue to keep these chickens." and "The judges have ruled in favour on our main argument - that the law says that animals should not be kept in the UK if it means they will suffer because of how they have been bred." strike me as highly misleading, or at least willfully ignorant, based on your explanation here.

- Plus #1: I assume that anything the animal industry doesn't like would increase costs for raising chickens. I'd correspondingly assume that we should want costs to be high (though it would be much better if it could be the government getting these funds, rather than just decreases in efficiency).

I think this feels like a very aggressive zero-sum mindset. I agree that sometimes you want to have an attitude like this, but I at least at the present think that acting with the attitude of "let's just make animal industry as costly as possible" would understan...

The Humane League (THL) filed a lawsuit against the UK Secretary of State for Environment, Food and Rural Affairs (the Defra Secretary) alleging that the Defra Secretary’s policy of permitting farmers to farm fast-growing chickens unlawfully violated paragraph 29 of Schedule 1 to the Welfare of Farmed Animals (England) Regulations 2007.

Paragraph 29 of Schedule 1 to the Welfare of Farmed Animals (England) Regulations 2007 states the following:

- “Animals may only be kept for farming purposes if it can reasonably be expected, on the basis of their g

Does someone have a rough fermi on the tradeoffs here? On priors it seems like chickens bred to be bigger would overall cause less suffering because they replace more than one chicken that isn't bred to be as big, but I would expect those chickens to suffer more. I can imagine it going either way, but I guess my prior is that it was broadly good for each individual chicken to weigh more.

I am a bit worried the advocacy here is based more on a purity/environmentalist perspective where genetically modifying animals is bad, but I don't give that perspective m...

Welfare Footprint Project has analysis here, which they summarize:

...Adoption of the Better Chicken Commitment, with use of a slower-growing breed reaching a slaughter weight of approximately 2.5 Kg at 56 days (ADG=45-46 g/day) is expected to prevent “at least” 33 [13 to 53] hours of Disabling pain, 79 [-99 to 260] hours of Hurtful and 25 [5 to 45] seconds of Excruciating pain for every bird affected by this intervention (only hours awake are considered). These figures correspond to a reduction of approximately 66%, 24% and 78% , respectively, in the time exp

I agree that this is what the post is about, but the title and this[1] sentence do indeed not mean that, under any straightforward interpretation I can think of. I think bad post titles are quite costly (cf. lots of fallout from "politics is the mindkiller" being misapplied over the years), and good post titles are quite valuable.

[1] "This points to an important conclusion: The most valuable dollars to aren't owned by us. They're owned by people who currently either don't donate at all, or who donate to charities that are orders of magnitude less effecti

The title and central claim of the post seems wrong, though my guess is you mean it poetically (but poetry that isn't true is I think worse, though IDK, it's fine sometimes, maybe it makes more sense to other people).

Clearly the dollars you own are the most valuable. If you think someone else could do more with your dollars, you can just give them your dollars! This isn't guaranteed to be true (you might not know who would ex-ante best use dollars, but still think you could learn about that ex-post and regret not giving them your money after the oppo...

Clearly you believe that probabilities can be less than 1%, reliably. Your probability of being struck by lightning today is not "0% or maybe 1%", it's on the order of 0.001%. Your probability of winning the lottery is not "0% or 1%", it's ~0.0000001%. I am confident you deal with probabilities that have much less than 1% error all the time, and feel comfortable using them.

It doesn't make sense to think of humility as something absolute like "don't give highly specific probabilities". You frequently have justified belief of a probability being very highly spec...

I agree that this is an inference. I currently think the OP thinks that in the absence of frugality concerns this would be among the most cost-effective uses of money by Open Phil's standards, but I might be wrong.

University group funding was historically considered extremely cost-effective when I talked to OP staff (beating out most other grants by a substantial margin). Possibly there was a big update here on cost-effectiveness excluding frugality-reputation concerns, but I currently think there hasn't been (but like, would update if someone from OP said otherwise, and then I would be interested in talking about that).

Copying over the rationale for publication here, for convenience:

...Rationale for Public Release

Releasing this report inevitably draws attention to a potentially destructive scientific development. We do not believe that drawing attention to threats is always the best approach for mitigating them. However, in this instance we believe that public disclosure and open scientific discussion are necessary to mitigate the risks from mirror bacteria. We have two primary reasons to believe disclosure is necessary:

1. To prevent accidents and well-intentioned dev

IMO, one helpful side effect (albeit certainly not a main consideration) of making this work public, is that it seems very useful to have at least one worst-case biorisk that can be publicly discussed in a reasonable amount of detail. Previously, the whole field / cause area of biosecurity could feel cloaked in secrecy, backed up only by experts with arcane biological knowledge. This situation, although unfortunate, is probably justified by the nature of the risks! But still, it makes it hard for anyone on the outside to tell how serious ...

I agree that things tend to get tricky and loopy around these kinds of reputation-considerations, but I think at least the approach I see you arguing for here is proving too much, and has a risk of collapsing into meaninglessness.

I think in the limit, if you treat all speech acts this way, you just end up having no grounding for communication. "Yes, it might be the case that the real principles of EA are X, but if I tell you instead they are X', then you will take better actions, so I am just going to claim they are X', as long as both X and X' include cos...

...In survey work we’ve done of organizers we’ve funded, we’ve found that on average, stipend funding substantively increased organizers’ motivation, self-reported effectiveness, and hours spent on organizing work (and for some, made the difference between being able to organize and not organizing at all). The effect was not enormous, but it was substantive.

[...]

Overall, after weighing all of this evidence, we thought that the right move was to stick to funding group expenses and drop the stipends for individual organizers. One frame I used to think about thi

This is circular. The principle is only compromised if (OP believes) the change decreases EV — but obviously OP doesn't believe that; OP is acting in accordance with the do-what-you-believe-maximizes-EV-after-accounting-for-second-order-effects principle.

Maybe you think people should put zero weight on avoiding looking weird/slimy (beyond what you actually are) to low-context observers (e.g. college students learning about the EA club). You haven't argued that here. (And if that's true then OP made a normal mistake; it's not compromising principles.)

Lightspeed Grants and the S-Process paid $20k honorariums to 5 evaluators. In addition, running the round probably cost around 8-ish months of Lightcone staff time, with a substantial chunk of that being my own time, which is generally at a premium as the CEO (I would value it organizationally at ~$700k/yr on the margin, with increasing marginal costs, though to be clear, my actual salary is currently $0), and then it also had some large diffuse effects on organizational attention.

This makes me think it would be unsustainable for us to pick up running Lightspeed Grants rounds without something like ~$500k/yr of funding for it. We distributed around ~$10MM in the round we ran.

Some of my thoughts on Lightspeed Grants from what I remember: I don’t think it’s ever a good idea to name something after the key feature everyone else in the market is failing at. It leads to particularly high expectations and is really hard to get away from. (Eg OpenAI) The S-process seemed like a strange thing to include for something intended to be fast. As far as I know the S-process has never been done quickly.

You seem to be misunderstanding both Lightspeed Grants and the S-Process. The S-Process and Lightspeed Grants both feature speculation/ventur...

A non-trivial fraction of our most valuable grants require very short turn-around times, and more broadly, there is a huge amount of variance in how much time it takes to evaluate different kinds of applications. This makes a round model hard, since you both end up getting back much later than necessary to applications that were easy to evaluate, and have to reject applications that could be good but are difficult to evaluate.

You cannot spend the money you obtain from a loan without losing the means to pay it back. You can do a tiny bit to borrow against your future labor income, but the normal thing to do is to declare personal bankruptcy, and so there is little assurance for that.

(This has been discussed many dozens of times on both the EA Forum and LessWrong. There exist no loan structures as far as I know that allow you to substantially benefit from predicting doom.)

Most concrete progress on worst-case AI risks — e.g. arguably the AISIs network, the draft GPAI code of practice for the EU AI Act, company RSPs, the chip and SME export controls, or some lines of technical safety work

My best guess (though very much not a confident guess) is the aggregate of these efforts is net-negative, and I think that is correlated with that work having happened in backrooms, often in contexts where people were unable to talk about their honest motivations. It sure is really hard to tell, but I really want people to consider the hypoth...

...That's their... headline result? "We do not find, however, any evidence for a systematic link between the scale of refugee immigration (and neither the type of refugee accommodation or refugee sex ratios) and the risk of Germans to become victims of a crime in which refugees are suspects" (pg. 3), "refugee inflows do not exert a statistically significant effect on the crime rate" (pg. 21), "we found no impact on the overall likelihood of Germans to be victimized in a crime" (pg. 31), "our results hence do not support the view that Germans were victim

That seems very sad. Insect farming seems much lower suffering in-expectation.

Thanks for flagging this concern! I'd share it if insect farming had existed as a substitute for the factory farming of other animals. However, in practice, insect farming tries (and mostly fails) to substitute for:

Though I understand that we don't ...