All of Jonas_'s Comments + Replies

good question, jonas; thanks for asking it!

- he bought a ticket to summer camp; we refunded it ~immediately and uninvited him from the event.

- after that, and before (or possibly during?) manifest, i made the decision to, conditional on his having bought a ticket, allow him to participate in the event. a few clarifications:

- i made this decision, not the others on the manifest team; i bear sole responsibility/deserve blame-in-expectation if it was wrong.[1]

- i made this decision under quite a lot of stress/pressure, after agonizing about it for a couple days, and

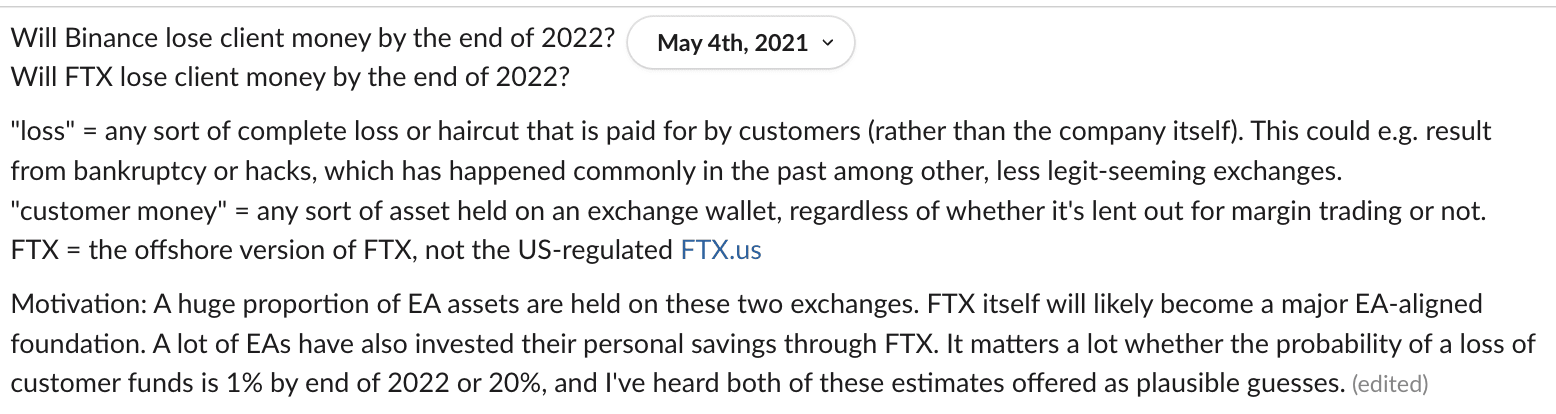

I recall feeling most worried about hacks resulting in loss of customer funds, including funds not lent out for margin trading. I was also worried about risky investments or trades depleting cash reserves that could be used to make up for hacking losses.

I don't think I ever generated the thought "customer monies need to be segregated, and they might not be", primarily because at the time I wasn't familiar with financial regulations.

E.g. in 2023 I ran across an article written in ~2018 that commented on an SIPC payout in a case of a broker co-mingling customer funds with an associated trading firm. If I had read that article in 2021, I would have probably suspected FTX of doing this.

A 10-15% annual risk of startup failure is not alarming, but a comparable risk of it losing customer funds is. Your comment prompted me to actually check my prediction logs, and I made the following edit to my original comment:

...

- predicting a 10% annual risk of FTX collapsing with ~~FTX investors and the Future Fund (though not customers)~~ FTX investors, the Future Fund, and possibly customers losing all of their money,

- [edit: I checked my prediction logs and I actually did predict a 10% annual risk of loss of customer funds in November 2021, though I lower

I don't think so, because:

- A 10–15% annual risk was predicted by a bunch of people up until late 2021, but I'm not aware of anyone believing that in late 2022, and Will points out that Metaculus was predicting ~1.3% at the time. I personally updated downwards on the risk because 1) crypto markets crashed, but FTX didn't, which seems like a positive sign, 2) Sequoia invested, 3) they got a GAAP audit.

- I don't think there was a great implementation of the trade. Shorting FTT on Binance was probably a decent way to do it, but holding funds on Binance for that p

Is a 10-15% annual risk of failure for a two-year-old startup alarming? I thought base rates were higher, which makes me think I'm misunderstanding your comment.

You also mention that the 10% was without loss of customer funds, but the Metaculus 1.3% was about loss of customer funds, which seems very different.

10% chance of yearly failure without loss of customer funds seems more than reasonable, even after Sequoia invested, in such a high-variance environment, and not necessarily a red flag.
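To put the annual figures in this thread on a common footing, here is a minimal sketch that converts a constant annual risk into a cumulative risk over several years. It assumes the risk is the same and independent each year, which is a simplification; the function name and the two-year horizon are illustrative choices, not something from the discussion.

```python
def cumulative_risk(annual_risk: float, years: int) -> float:
    """Probability of at least one failure within `years`.

    Assumes a constant, independent annual risk (a simplifying assumption).
    """
    if not 0.0 <= annual_risk <= 1.0:
        raise ValueError("annual_risk must be between 0 and 1")
    if years < 0:
        raise ValueError("years must be non-negative")
    # Survival probability compounds each year; failure is its complement.
    return 1.0 - (1.0 - annual_risk) ** years


if __name__ == "__main__":
    # Annual risks mentioned in the thread: ~1.3% (Metaculus), 10%, and 15%.
    for p in (0.013, 0.10, 0.15):
        print(f"{p:.1%} per year -> {cumulative_risk(p, 2):.1%} over 2 years")
```

Under these assumptions, a 10% annual risk corresponds to roughly a one-in-five chance of failure over two years.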

I disagree with that quote but I do think the fact that Will is reporting this story now with a straight face is a bad sign.

My steelman would be "look if you think two people would have a positive-sum interaction and it's cheap to facilitate that, doing so is a good default". It's not obvious to me that Will spent more than 30 seconds on this. But the defense is "it was cheap and I didn't think about it very hard", not "Sam had ideas for improving twitter".

- Going even further on legibly acting in accordance with common-sense virtues than one would otherwise, because onlookers will be more sceptical of people associated with EA than they were before.

- Here’s an analogy I’ve found helpful. Suppose it’s a 30mph zone, where almost everyone in fact drives at 35mph. If you’re an EA, how fast should you drive? Maybe before it was ok to go at 35, in line with prevailing norms. Now I think we should go at 30.

Wanting to push back against this a little bit:

- The big issue here is that SBF was reckless

I normally think of community health as dealing with interpersonal stuff, and wouldn't have expected them to be equipped to evaluate whether a business was being run responsibly. It seems closer to some of the stuff they're doing now, but at the time the team was pretty constrained by available staff time (and finding it difficult to hire), so I wouldn't expect them to have been doing anything outside of their core competency.

Maybe a lesson is that we should be / should have been clearer about scopes, so there's more of an opportunity to notice when something doesn't belong to anyone?

I'd be interested in specific scenarios or bad outcomes that we may have averted. E.g., much more media reporting on the EA-FTX association resulting in significantly greater brand damage? Prompting the legal system into investigating potential EA involvement in the FTX fraud, costing enormous further staff time despite not finding anything? Something else? I'm still not sure what example issues we were protecting against.

much more media reporting on the EA-FTX association resulting in significantly greater brand damage?

Most likely concern in my eyes.

The media tends to report on lawsuits when they are filed, at which time they merely contain unsubstantiated allegations and the defendant is less likely to comment. It's unlikely that the media would report on the dismissal of a suit, especially if it was for reasons seen as somewhat technical rather than as a clear vindication of the EA individual/organization.

Moreover, it is pretty likely to me that EVF or other EA-aff...

I broadly agree with the picture and it matches my perception.

That said, I'm also aware of specific people who held significant reservations about SBF and FTX throughout the end of 2021 (though perhaps not in 2022 anymore), based on information that was distinct from the 2018 disputes. This involved things like:

- predicting a 10% annual risk of FTX collapsing with ~~FTX investors and the Future Fund (though not customers)~~ FTX investors, the Future Fund, and possibly customers losing all of their money,

- [edit: I checked my prediction logs and I actua

predicting a 10% annual risk of FTX collapsing with FTX investors and the Future Fund (though not customers) losing all of their money,

Do you know if this person made any money off of this prediction? I know that shorting cryptocurrency is challenging, and maybe the annual fee from taking the short side of a perpetual future would be larger than 10%, not sure, but surely once the FTX balance sheet started circulating that should have increased the odds that the collapse would happen on a short time scale enough for this trade to be profitable?[1]

I f
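The question of whether such a short would have been profitable can be framed as a rough expected-value calculation. The sketch below is an illustration only: it assumes a one-year horizon, that the short gains a fixed fraction of its notional if the collapse happens and gains nothing otherwise, and that the carrying cost is paid in full either way. All function names, and every number other than the 10% risk, are hypothetical.

```python
def short_expected_return(p_collapse: float, carry_cost: float,
                          gain_if_collapse: float = 1.0) -> float:
    """Expected one-year return per unit of notional on a short position.

    p_collapse: assumed probability of collapse within the year.
    carry_cost: assumed annual cost of holding the short (funding, borrow).
    gain_if_collapse: assumed fraction of notional gained if collapse occurs.
    """
    return p_collapse * gain_if_collapse - carry_cost


def breakeven_probability(carry_cost: float,
                          gain_if_collapse: float = 1.0) -> float:
    """Collapse probability at which the short's expected return is zero."""
    if gain_if_collapse <= 0:
        raise ValueError("gain_if_collapse must be positive")
    return carry_cost / gain_if_collapse


if __name__ == "__main__":
    # A 10% collapse risk against a hypothetical 10% annual carrying cost.
    print(short_expected_return(p_collapse=0.10, carry_cost=0.10))
    # Hypothetical higher short-term odds with the same carrying cost.
    print(short_expected_return(p_collapse=0.30, carry_cost=0.10))
```

In this simplified model the trade only has positive expected value once the collapse probability exceeds the carrying cost divided by the gain. It ignores counterparty risk on the exchange holding the position and any price moves in the no-collapse case.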

A meta thing that frustrates me here is that I haven’t seen much discussion of incentive structures. The obvious retort to negative anecdotal evidence is the anecdotal evidence Will cited about people who had previously expressed concerns who continued to affiliate with FTX and the FTXFF, but to me, this evidence is completely meaningless because continuing to affiliate with FTX and FTXFF meant a closer proximity to money. As a corollary, the people who refused to affiliate with them did so at significant personal & professional cost for that two-year period....

I disagree-voted because I have the impression that there's a camp of people who left Alameda that has been misleading in their public anti-SBF statements, and has a separate track record of being untrustworthy.

So, given that background, I think it's unlikely that Will threatened someone in a strong sense of the word, and possible that Bouscal or MacAulay might be misleading, though I haven't tried to get to the bottom of it.

I wish this post's summary was clearer on what, exactly, readers could/should do to help with vote pairing. I think this could be valuable during the 2024 election!

Vote pairing seems to be more cost-effective than making calls, going door to door, or other standard forms of changing election outcomes, provided you are in the very special circumstances which make it effective.

What are those circumstances?

Tens of thousands of people have participated in swaps

Do you have a source for this? How many of those were in swing states?

I worry 'wholesomeness' overemphasizes doing what's comfortable and convenient and feels good, rather than what makes the world better:

- As mentioned, wholesomeness could stifle visionaries, and this downside wasn't discussed further.

- Fighting to abolish slavery wasn't a particularly wholesome act; in fact, it created a lot of unwholesome conflict. Protests aren't wholesome. I expect a lot of important future work to look and feel unwholesome. (I'm aware you could fit it into the framework somehow, but it's an awkward fit.)

- I worry it'll make EA focus even more

I definitely think it's important to consider (and head off) ways that it could go wrong!

Your first two bullets are discussed a bit further in the third essay which I'll put up soon. In short, I completely agree that sometimes you need visionary thought or revolutionary action. At the same time I think revolutionary action -- taken by people convinced that they are right -- can be terrifying and harmful (e.g. the Cultural Revolution). I'd really prefer if people engaging in such actions felt some need to first feel into what is unwholesome about them, so t...

If someone else had written my comment, I would ask myself how good that person's manipulation detection skills are. If I judge them to be strong, I would deem the comment to be significant evidence, and think it more likely that Owen has a flaw that he healed, and less likely that he's a manipulator. If I judge them to be weak (or I simply don't have enough information about the person writing the comment), I would not update.

If there are a lot of upvotes on my comment, that may indicate that readers are naïvely trusting me and making an error, or have good reason to trust my judgment, or have independently reached similar conclusions. I think it's most likely a combination of all three of these factors.

Over the years, I’ve done a fair amount of community building, and had to deal with a pretty broad range of bad actors, toxic leadership, sexual misconduct, manipulation tactics and the like. Many of these cases were associated with a pattern of narcissism and dark triad spectrum traits, self-aggrandizing behavior, manipulative defense tactics, and unwillingness to learn from feedback. I think people with this pattern rarely learn and improve, and in most cases should be fired and banned from the community even if they are making useful contribut...

I think what Jonas has written is reasonable, and I appreciate all the work he did to put in proper caveats. I also don’t want to pick on Owen in particular here; I don’t know anything besides what has been publicly said, and some positive interactions I had with him years ago. That said: I think the fact that this comment is so highly upvoted indicates a systemic error, and I want to talk about that.

The evidence Jonas provides is equally consistent with “Owen has a flaw he has healed” and “Owen is a skilled manipulator who charms men, and harasses women”....

If you're running an event and Lighthaven isn't an option for some reason, you may be interested in Atlantis: https://forum.effectivealtruism.org/posts/ya5Aqf4kFXLjoJeFk/atlantis-berkeley-event-venue-available-for-rent

This seems the most plausible speculation so far, though probably also wrong: https://twitter.com/dzhng/status/1725637133883547705

Regarding the second question, I made this prediction market: https://manifold.markets/JonasVollmer/in-a-year-will-we-think-that-sam-al?r=Sm9uYXNWb2xsbWVy

The really important question that I suspect everyone is secretly wondering about: If you book the venue, will you be able to have the famous $2,000 coffee table as a centerpiece for your conversations? I imagine that after all the discourse about it, many readers may feel compelled to book Lighthaven to see the table in action!

Yeah, I disagree with this on my inside view—I think "come up with your own guess of how bad and how likely future pandemics could be, with the input of others' arguments" is a really useful exercise, and seems more useful to me than having a good probability estimate of how likely it is. I know that a lot of people find the latter more helpful though, and I can see some plausible arguments for it, so all things considered, I still think there's some merit to that.

How to fix EA "community building"

Today, I mentioned to someone that I tend to disagree with others on some aspects of EA community building, and they asked me to elaborate further. Here's what I sent them, very quickly written and only lightly edited:

...Hard to summarize quickly, but here's some loose gesturing in the direction:

- We should stop thinking about "community building" and instead think about "talent development". While building a community/culture is important and useful, the wording overall sounds too much like we're inward-focused as opposed to t

Hmm, I personally think "discover more skills than they knew. feel great, accomplish great things, learn a lot" applies a fair amount to my past experiences, and I think aiming too low was one of the biggest issues in my past, and I think EA culture is also messing up by discouraging aiming high, or something.

I think the main thing to avoid is something like "blind ambition", where your plan involves multiple miracles and the details are all unclear. This also seems to be a fairly frequent phenomenon.

If there's no strategy to profitably bet on long-term real interest rates increasing, you can't infer timelines from real interest rates. I think the investment strategies outlined in this post don't work, and I don't know if there's a strategy that works.

I want to caution against the specific trading strategies suggested in this post:

- Shorting LTPZ will cost you 7% per year in borrow fees.

- The short ETFs TBF, TBT, and TTT have 1% fees. In addition, short or levered ETFs rebalance frequently, and are known to have large hidden fees of 1-4% per year. Short ET
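To make the fee drag concrete, here is a minimal sketch of how a fixed annual cost compounds over a holding period. It uses the figures quoted above (a 7% borrow fee for shorting LTPZ, and a 1% stated fee plus 1-4% hidden fees for the short ETFs) and assumes the cost is charged once per year on the remaining value. The function name and the 5-year horizon are illustrative assumptions.

```python
def value_after_fees(annual_fee: float, years: int, start: float = 1.0) -> float:
    """Value remaining after paying `annual_fee` on the balance each year.

    Ignores any gain or loss on the underlying position; this isolates the
    cost of carrying the trade.
    """
    if not 0.0 <= annual_fee < 1.0:
        raise ValueError("annual_fee must be in [0, 1)")
    return start * (1.0 - annual_fee) ** years


if __name__ == "__main__":
    scenarios = {
        "short LTPZ (7% borrow fee)": 0.07,
        "short ETF, low estimate (1% + 1%)": 0.02,
        "short ETF, high estimate (1% + 4%)": 0.05,
    }
    for label, fee in scenarios.items():
        remaining = value_after_fees(fee, years=5)
        print(f"{label}: {1 - remaining:.1%} lost to fees over 5 years")
```

The point of the sketch is that the bet has to pay off by more than the accumulated carrying cost before it makes any money, and that hurdle grows with the holding period.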

Accounting for fees, it's actually a lot worse than you wrote, see here: https://forum.effectivealtruism.org/posts/8c7LycgtkypkgYjZx/agi-and-the-emh-markets-are-not-expecting-aligned-or?commentId=LmJ6inhSESoJGyWjQ

I wouldn't be too surprised if someone on the GAP leadership team had indeed participated in an illegal straw donor scheme, given media reports and general impressions of how recklessly some of the SBF-adjacent politics stuff was carried out. But, I do think the specific title allegation is worded too strongly and sweepingly given the lack of clear evidence, and will probably turn out to be wrong.

I think both the original post and this comment don't do a great job at capturing the nuance of what's going on. I think the original post makes many valid points and isn't "90% just a confusion", and I suspect in fact may end up looking like it was correct about some of its key claims. But, I also suspect it'll be wrong on a lot of the details/specifics, and in particular it seems problematic to me that it makes fairly severe accusations without clear caveats on what we know and what we don't know at this point.

(I downvoted both this comment and the OP.)

At least 300 political contributions are suspected by the investigation to have been made with straw donors

This claim is false; the source says that they "were made in the name of a straw donor or paid for with corporate funds". Which is frustrating, as the former would be much more surprising and interesting than the latter.

What if the investor decided to invest knowing there was an X% chance of being defrauded, and thought it was a good deal because there's still at least a (100-X)% chance of it being a legitimate and profitable business? For what number X do you think it's acceptable for EAs to accept money?

Fraud base rates are 1-2%; some companies end up highly profitable for their investors despite having committed fraud. Should EA accept money from YC startups? Should EA accept money from YC startups if they e.g. lied to their investors?

I think large-scale defrauding un...

I don't know the acceptable risk level either. I think it is clearly below 49%, and includes at least fraud against bondholders and investors that could reasonably be expected to cause them to lose money from what they paid in.

It's not so much the status of the company as a fraud-committer that is relevant, but the risk that you are taking and distributing money under circumstances that are too close to conversion (e.g., that the monies were procured by fraud and that the investors ultimately suffer a loss). I can think of two possible safe harbors under wh...

I expect a 3-person board with a deep understanding of and commitment to the mission to do a better job selecting new board members than a 9-person board with people less committed to the mission. I also expect the 9-person board members to be less engaged on average.

(I avoid the term "value-alignment" because different people interpret it very differently.)

I think 9-member boards are often a bad idea because they tend to have lots of people who are shallowly engaged, rather than a smaller number of people who are deeply engaged, tend to have more diffusion of responsibility, and tend to have much less productive meetings than smaller groups of people. While this can be mitigated somewhat with subcommittees and specialization, I think the optimal number of board members for most EA orgs is 3–6.

no lawyers/accountants/governance experts

I have a fair amount of accounting / legal / governance knowledge and as part of my board commitments think it's a lot less relevant than deeply understanding the mission and strategy of the relevant organization (along with other more relevant generalist skills like management, HR, etc.). Edit: Though I do think if you're tied up in the decade's biggest bankruptcy, legal knowledge is actually really useful, but this seems more like a one-off weird situation.

You seem to imply that it's fine if some board members are not value-aligned as long as the median board member is. I strongly disagree: This seems a brittle setup because the median board member could easily become non-value-aligned if some of the more aligned board members become busy and step down, or have to recuse due to a COI (which happens frequently), or similar.

TL;DR: You're incorrectly assuming I'm into Nick mainly because of value alignment, and while that's a relevant factor, the main factor is that he has an unusually deep understanding of EA/x-risk work that competent EA-adjacent professionals lack.

I might write a longer response. For now, I'll say the following:

- I think a lot of EA work is pretty high-context, and most people don't understand it very well. E.g., when I ran EA Funds work tests for potential grantmakers (which I think is somewhat similar to being a board member), I observed that highly skilled

Nitpick:

Gary Marcus was sent the full draft, including all the background research / forecast drafts. So it would be more accurate to say "only read bits of it".