All of kokotajlod's Comments + Replies

This is quite a large range, but yeah I get that this comes out of nowhere. The range I cite is based on a few things:

- Anders and Stuart's paper from 2013 about building a Dyson swarm from Mercury and colonising the galaxy. They estimate a ~36-year construction time. However, they don't include the costs of refining materials or building infrastructure; they estimate a 5-year construction time for solar captors, which I think is too long for a post-AGI world; and I think the disassembly of Mercury is unlikely.

- So I have replicated their paper and I played around with the mo

It would be good to flag in the main text that the justification for this is in Appendix 2 (initially I thought it was a bare assertion). Also, it is interesting that in @kokotajlod's scenario the 'wildly superintelligent' AI maxes out at 1 million-fold AI R&D speedup; I commented to them on a draft that this seemed implausibly high to me. I have no particular take on whether 100x is too low or too high as the theoretical max, but it would be interesting to work out why there is this Forethought vs AI Futures difference.

Interesting. Some thoughts:...

Big +1 to the gist of what you are saying here re: courage. It's something I've struggled with a lot myself, especially while I was at OpenAI. The companies have successfully made it cool to race forward and uncool to say e.g. that there's a good chance doing so will kill everyone. And peer pressure, financial incentives, status incentives, etc. really do have strong effects, including on people who think they are unaffected.

I left this comment on one of their docs about cluelessness, reposting here for visibility:

...CLR embodies the best of effective altruism in my opinion. You guys are really truly actually trying to make the world better / be ethical. That means thinking hard and carefully about what it means to make the world better / be ethical, and pivoting occasionally as a result.

I am not a consequentialist myself, certainly not an orthodox EV-maximizing Bayesian, though for different reasons than you describe here (but perhaps for related reasons?). I think I like your s

I agree that as time goes on states will take an increasing and eventually dominant role in AI stuff.

My position is that timelines are short enough, and takeoff is fast enough, that e.g. decisions and character traits of the CEO of an AI lab will explain more of the variance in outcomes than decisions and character traits of the US President.

Thanks for discussing with me!

(I forgot to mention an important part of my argument, oops -- You wouldn't have said "at least 100 years off" you would have said "at least 5000 years off." Because you are anchoring to recent-past rates of progress rather than looking at how rates of progress increase over time and extrapolating. (This is just an analogy / data point, not the key part of my argument, but look at GWP growth rates as a proxy for tech progress rates: According to this GWP doubling time was something like 600 years back then, whereas it's more l...
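To make the growth-rate comparison concrete, here is a small sketch converting between a constant annual growth rate and a doubling time. The 600-year doubling time is from the comment; the 3%/yr rate used below is a purely illustrative assumption, not a claim about modern GWP growth.

```python
import math


def doubling_time(annual_growth: float) -> float:
    """Years for a quantity growing at a constant annual rate to double."""
    return math.log(2) / math.log1p(annual_growth)


def growth_rate(doubling_years: float) -> float:
    """Constant annual growth rate implied by a given doubling time."""
    return 2 ** (1 / doubling_years) - 1


old_rate = growth_rate(600)           # roughly 0.12%/yr for a 600-year doubling
fast_doubling = doubling_time(0.03)   # illustrative 3%/yr rate (assumption)
print(f"600-year doubling ~ {old_rate:.4%} per year")
print(f"3%/yr growth ~ {fast_doubling:.1f}-year doubling")
```

Under these illustrative inputs, anchoring on the older rate instead of extrapolating the acceleration changes the implied timescale by more than an order of magnitude.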

I agree with the claims "this problem is extremely fucking hard" and "humans aren't cracking this anytime soon" and I suspect Yudkowsky does too these days.

I disagree that nanotech has to predate taking over the world; that wasn't an assumption I was making or a conclusion I was arguing for at any rate. I agree it is less likely that ASIs will make nanotech before takeover than that they will make nanotech while still on earth.

I like your suggestion to model a more earthly scenario but I lack the energy and interest to do so right now.

My closing statement ...

Cool. Seems you and I are mostly agreed on terminology then.

Yeah we definitely disagree about that crux. You'll see. Happy to talk about it more sometime if you like.

Re: galaxy vs. earth: The difference is one of degree, not kind. In both cases we have a finite amount of resources and a finite amount of time with which to do experiments. The proper way to handle this, I think, is to smear out our uncertainty over many orders of magnitude. E.g. the first OOM gets 5% of our probability mass, the second OOM gets 5% of the remaining probability mass, and so fo...
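A minimal sketch of the "smear" rule, assuming the 5% share stays constant for every OOM (the comment is cut off after the second step, so that constancy is an assumption). Under it, the mass on the k-th OOM is 0.05 * 0.95^(k-1), and the cumulative mass after n OOMs is 1 - 0.95^n.

```python
def mass_per_oom(share: float, n_ooms: int) -> list[float]:
    """Probability mass on each successive OOM when each OOM takes `share`
    of whatever mass the earlier OOMs left over."""
    masses = []
    remaining = 1.0
    for _ in range(n_ooms):
        masses.append(share * remaining)
        remaining *= 1 - share
    return masses


def ooms_until(share: float, target: float) -> int:
    """Number of OOMs needed before cumulative probability reaches `target`."""
    if not 0 < share < 1 or not 0 < target < 1:
        raise ValueError("share and target must both be strictly between 0 and 1")
    cumulative, n = 0.0, 0
    while cumulative < target:
        cumulative += share * (1 - share) ** n
        n += 1
    return n


print(mass_per_oom(0.05, 3))
print(ooms_until(0.05, 0.5))
```

The first three OOMs get 5%, 4.75% and about 4.51%, and `ooms_until(0.05, 0.5)` returns 14: under this rule it takes about 14 OOMs of inputs before the cumulative probability reaches even odds.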

What if he just said "Some sort of super-powerful nanofactory-like thing?"

He's not citing some existing literature that shows how to do it, but rather citing some existing literature which should make it plausible to a reasonable judge that a million superintelligences working for a year could figure out how to do it. (If you dispute the plausibility of this, what's your argument? We have an unfinished exchange on this point elsewhere in this comment section. Seems you agree that a galaxy full of superintelligences could do it; I feel like it's pretty plausible that if a galaxy of superintelligences could do it, a mere million also could do it.)

Disagree. Almost every successful moral campaign in history started out as an informal public backlash against some evil or danger.

The AGI companies involve a few thousand people versus 8 billion, a few tens of billions of dollars in funding versus $360 trillion in total global assets, and about 3 key nation-states (US, UK, China) versus 195 nation-states in the world.

Compared to actually powerful industries, AGI companies are very small potatoes. Very few people would miss them if they were set on 'pause'.
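The same comparison expressed as shares of the world total. The point values standing in for "a few thousand people" (5,000) and "a few tens of billions" ($30B) are illustrative assumptions, not figures from the comment.

```python
# Illustrative point estimates. The AGI-side people and dollar figures are
# assumptions standing in for "a few thousand" and "a few tens of billions".
comparisons = {
    "people": (5_000, 8e9),       # AGI-company staff vs. world population
    "dollars": (30e9, 360e12),    # AGI funding vs. total global assets
    "nation-states": (3, 195),    # key states vs. all states
}

for name, (agi_side, world_side) in comparisons.items():
    share = agi_side / world_side
    print(f"{name}: {share:.2e} of the world total ({share:.4%})")
```

Even the largest of the three shares, the nation-state count, comes out under 2%.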

I said IMO. In context it was unnecessary for me to justify the claim, because I was asking whether or not you agreed with it.

I take it that not only do you disagree, you agree it's the crux? Or don't you? If you agree it's the crux (i.e. you agree that probably a million cooperating superintelligences with an obedient nation of humans would be able to make some pretty awesome self-replicating nanotech within a few years) then I can turn to the task of justifying the claim that such a scenario is plausible. If you don't agree, and think that even such a su...

What part of the scenario would you dispute? A million superintelligences will probably exist by 2030, IMO; the hard part is getting to superintelligence at all, not getting to a million of them (since you'll probably have enough compute to make a million copies).

I agree that the question is about the actual scenario, not the galaxy. The galaxy is a helpful thought experiment though; it seems to have succeeded in establishing the right foundations: How many OOMs of various inputs (compute, experiments, genius insights) will be needed? Presumably a galaxy's ...

I also would like to see such breakdowns, but I think you are drawing the wrong conclusions from this example.

Just because Yudkowsky's first guess about how to make nanotech, as an amateur, didn't pan out, doesn't mean that nanotech is impossible for a million superintelligences working for a year. In fact it's very little evidence. When there are a million superintelligences they will surely be able to produce many technological marvels very quickly, and for each such marvel, if you had asked Yudkowsky to speculate about how to build it, he would have fai...

Thanks for this thoughtful and detailed deep dive!

I think it misses the main cruxes though. Yes, some people (Drexler and young Yudkowsky) thought that ordinary human science would get us all the way to atomically precise manufacturing in our lifetimes. For the reasons you mention, that seems probably wrong.

But the question I'm interested in is whether a million superintelligences could figure it out in a few years or less. (If it takes them, say, 10 years or longer, then probably they'll have better ways of taking over the world) Since that's the situatio...

Hey, thanks for engaging. I saved the AGI theorizing for last because it's the most inherently speculative: I am highly uncertain about it, and everyone else should be too.

But the question I'm interested in is whether a million superintelligences could figure it out in a few years or less. (If it takes them, say, 10 years or longer, then probably they'll have better ways of taking over the world) Since that's the situation we'll actually be facing.

I would dispute that "a million superintelligences exist and cooperate with each other to invent MNT" is ...

OK, so our credences aren't actually that different after all. I'm actually at less than 65%, funnily enough! (But that's for doom = extinction. I think human extinction is unlikely for reasons to do with acausal trade; there will be a small minority of AIs that care about humans, just not on Earth. I usually use a broader definition of "doom" as "About as bad as human extinction, or worse.")

I am pretty confident that what happens in the next 100 years will straightforwardly translate to what happens in the long run. If humans are still well-cared-for in 2...

Those words were not yours, but you did say you agreed it was the main crux, and in context it seemed like you were agreeing that it was a crux for you too. I see now on reread that I misread you and you were instead saying it was a secondary crux. Here, let's cut through the semantics and get quantitative:

What is your credence in doom conditional on AIs not caring for humans?

If it's >50%, then I'm mildly surprised that you think the risk of accidentally creating a permanent pause is worse than the risks from not-pausing. I guess you did say that ...

First of all, you are moving the goalposts if you make this about "confident belief in total doom by default" instead of the original "if you really don't think unchecked AI will kill everyone." You need to defend the position that the probability of existential catastrophe conditional on misaligned AI is <50%.

Secondly, "AI motives will generalize extremely poorly from the training distribution" is a confused and misleading way of putting it. The problem is that it'll generalize in a way that wasn't the way we hoped it would generalize.

Third, to answer your...

Thanks!

I think this is evidence for a groupthink phenomenon amongst superforecasters. Interestingly my other experiences talking with superforecasters have also made me update in this direction (they seemed much more groupthinky than I expected, as if they were deferring to each other a lot. Which, come to think of it, makes perfect sense -- I imagine if I were participating in forecasting tournaments, I'd gradually learn to reflexively defer to superforecasters too, since they genuinely would be performing well.)

Ironically, one of the two predictions you quote as examples of bad prediction is in fact an example of a good prediction: "The most realistic estimate for a seed AI transcendence is 2020."

Currently it seems that AGI/superintelligence/singularity/etc. will happen sometime in the 2020s. Yudkowsky's median estimate in 1999 was apparently 2020, so he probably had something like 30% of his probability mass in the 2020s, and maybe 15% of it in the 2025-2030 period, when IMO it's most likely to happen.

Now let's compare to what other people would have been saying...
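One way to check that a median of 2020 is compatible with roughly 30% on the 2020s is to assume a distribution shape and compute. The sketch below assumes, purely for illustration, a lognormal over years after 1999 with median 21 years (i.e. 2020) and log-space sigma 0.5; neither the shape nor the sigma is Yudkowsky's actual distribution. Windows are half-open, so "2025-2030" is treated as 2025 through 2029.

```python
import math


def lognormal_cdf(x: float, median: float, sigma: float) -> float:
    """CDF of a lognormal distribution parameterized by its median and log-space sigma."""
    if x <= 0:
        return 0.0
    z = math.log(x / median) / sigma
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def mass_between(start: int, end: int, median_year: int = 2020,
                 base_year: int = 1999, sigma: float = 0.5) -> float:
    """Probability that the arrival year falls in [start, end), with time measured
    in years after base_year. sigma=0.5 is an arbitrary assumption."""
    median = median_year - base_year
    return (lognormal_cdf(end - base_year, median, sigma)
            - lognormal_cdf(start - base_year, median, sigma))


print(f"2020s: {mass_between(2020, 2030):.0%}")
print(f"2025-2029: {mass_between(2025, 2030):.0%}")
```

With these assumptions the 2020s get a bit under 30% and 2025 through 2029 roughly 12%, in the same ballpark as the guesses above; the numbers are sensitive to the assumed sigma.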

FWIW you can see more information, including some of the reasoning, on page 655 (as numbered in the PDF) / 659 (according to the PDF viewer's page search) of the report. (H/t Isabel.) See also page 214 for the definition of the question.

Some tidbits:

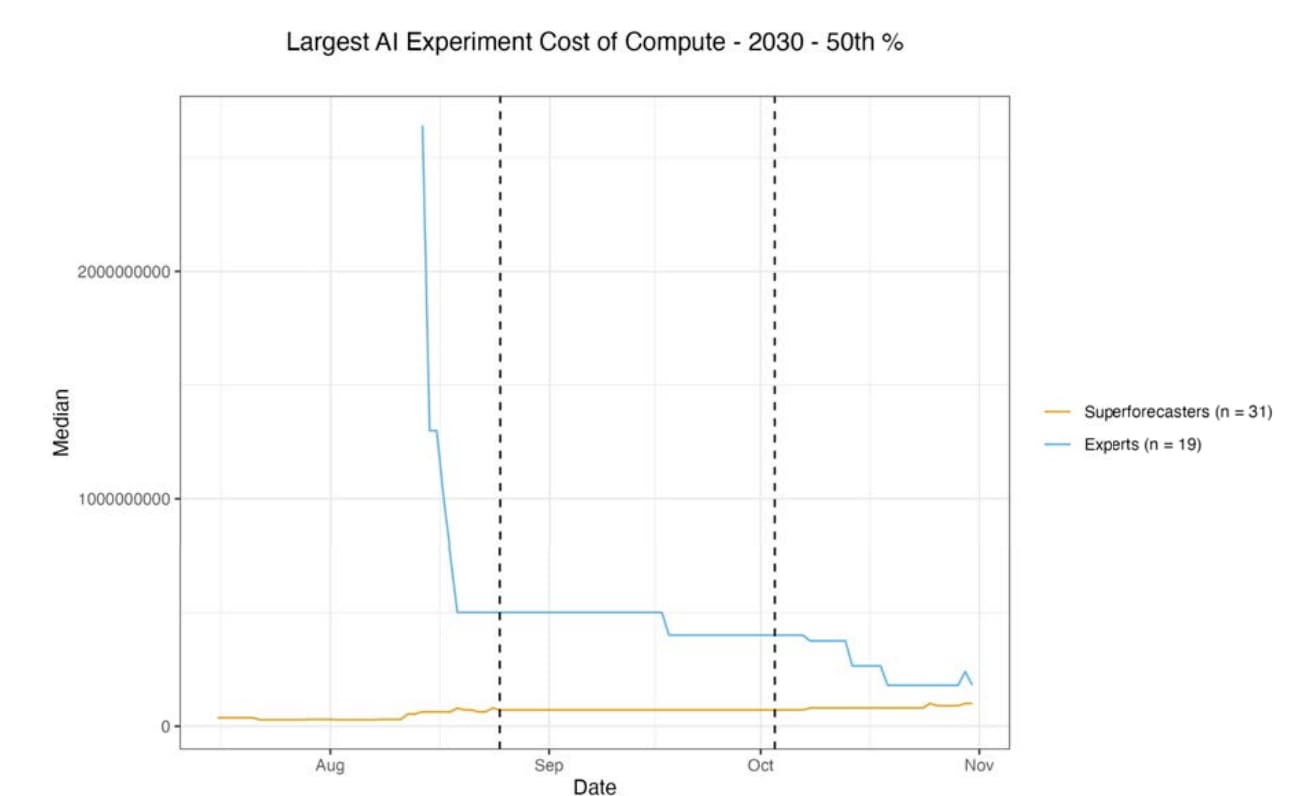

Experts started out much higher than superforecasters, but updated downwards after discussion. Superforecasters updated a bit upward, but less:

(Those are billions on the y-axis.)

This was surprising to me. I think the experts' predictions look too low even before updating, and look much worse after updating!

The part of the ...

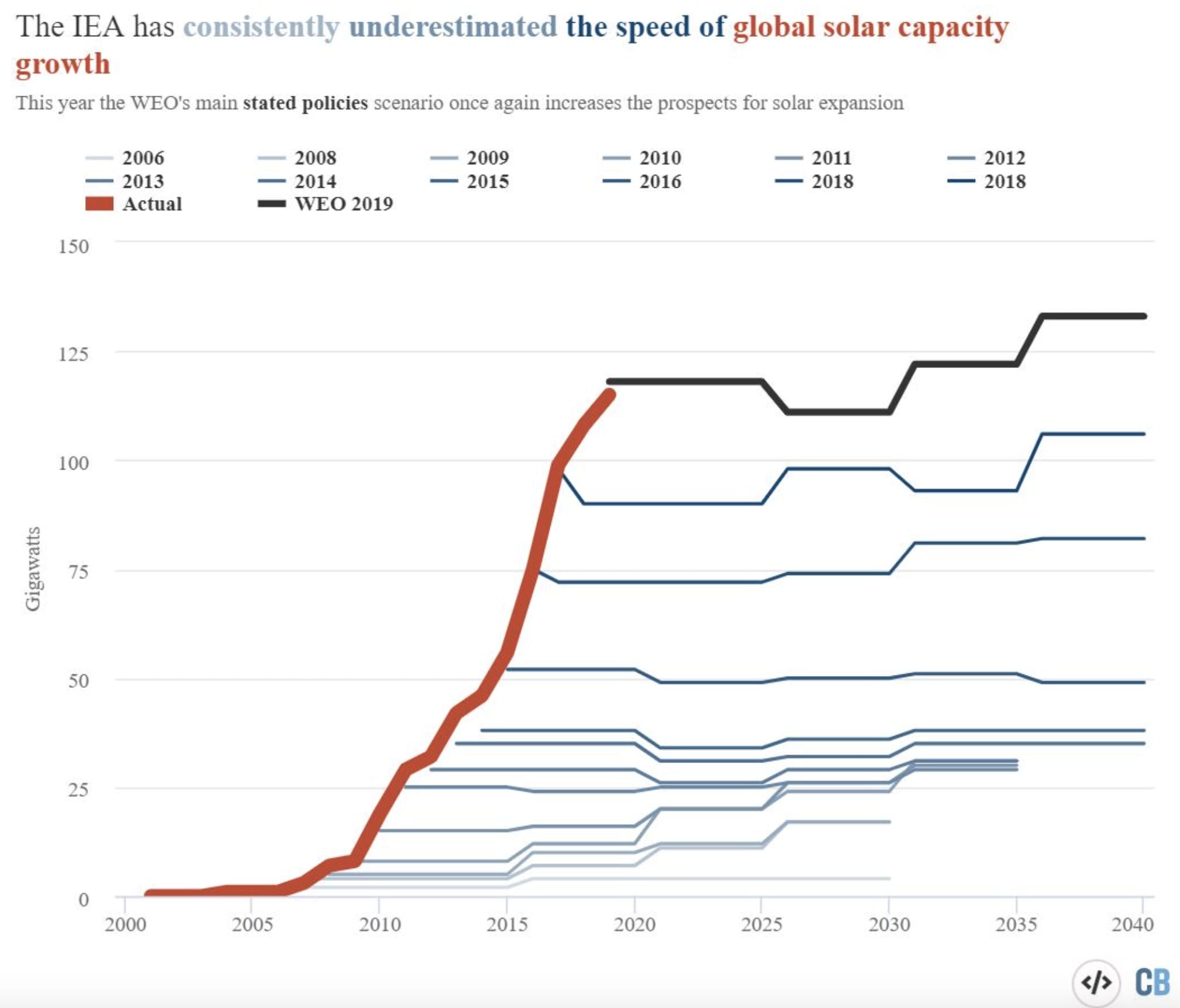

Reminds me of this:

A kind of conservativeness of "expert" opinion that doesn't correctly appreciate (rapid) exponential growth.

Fair, but still: In 2019 Microsoft invested a billion dollars in OpenAI, roughly half of which was compute: Microsoft invests billions more dollars in OpenAI, extends partnership | TechCrunch

And then GPT-3 happened, and was widely regarded to be a huge success and proof that scaling is a good idea etc.

So the amount of compute-spending that the most aggressive forecasters think could be spent on a single training run in 2032... is about 25% as much compute-spending as Microsoft gave OpenAI starting in 2019, before GPT-3 and before the scaling hypothesis. Th...

No, alas. However I do have this short summary doc I wrote back in 2021: The Master Argument for <10-year Timelines - Google Docs

And this sequence of posts making narrower points: AI Timelines - LessWrong

The XPT forecasters are so in the dark about compute spending that I just pretend they gave more reasonable numbers. I'm honestly baffled how they could be so bad. The most aggressive of them thinks that in 2025 the most expensive training run will be $70M, and that it'll take 6+ years to double thereafter, so that in 2032 we'll have reached $140M training run spending... do these people have any idea how much GPT-4 cost in 2022?!?!? Did they not hear about the investments Microsoft has been making in OpenAI? And remember that's what the most aggressive among them thought! The conservatives seem to be living in an alternate reality where GPT-3 proved that scaling doesn't work and an AI winter set in in 2020.

UPDATE: Apparently I was wrong in the numbers here, I was extrapolating from Rose's table in the OP but I interpreted "to" as "in" and also the doubling time extrapolation was Rose's interpolation rather than what the forecasters said. The 95% most aggressive forecaster prediction for most expensive training run in 2030 was actually $250M, not $140M. My apologies! Thanks to Josh Rosenberg for pointing this out to me.

(To be clear, I think my frustration with the XPT forecasters was still basically correct, if a bit overblown; $250M is still too low and the ...
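The extrapolation in the comment above is a constant-doubling-time projection. A sketch of that arithmetic, using the $70M-in-2025 starting point and the 6-7 year doubling times from the comment (the update says these figures were misread from the table, so this only illustrates the mechanics):

```python
def extrapolate(base_value: float, base_year: float,
                doubling_years: float, target_year: float) -> float:
    """Value at target_year under constant exponential growth with the given doubling time."""
    return base_value * 2 ** ((target_year - base_year) / doubling_years)


for doubling in (6, 7):
    spend = extrapolate(70e6, 2025, doubling, 2032)
    print(f"{doubling}-year doubling: ${spend / 1e6:.0f}M in 2032")
```

A 7-year doubling time reproduces the $140M figure for 2032; a 6-year doubling time gives about $157M.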

- I haven’t considered all of the inputs to Cotra’s model, most notably the 2020 training computation requirements distribution. Without forming a view on that, I can’t really say that ~53% represents my overall view.

Sorry to bang on about this again and again, but it's important to repeat for the benefit of those who don't know: The training computation requirements distribution is by far the biggest cruxy input to the whole thing; it's the input that matters most to the bottom line and is most subjective. If you hold fixed everything else Ajeya inputs, but...

Don't apologise, think it's a helpful point!

I agree that the training computation requirements distribution is more subjective and matters more to the eventual output.

I also want to note that while on your view of the compute reqs distribution, the hardware/spending/algorithmic progress inputs are a rounding error, this isn't true for other views of the compute reqs distribution. E.g. for anyone who does agree with Ajeya on the compute reqs distribution, the XPT hardware/spending/algorithmic progress inputs shift median timelines from ~2050 to ~2090, which...

Another nice story! I consider this to be more realistic than the previous one about open-source LLMs. In fact I think this sort of 'soft power takeover' via persuasion is a lot more probable than most people seem to think. That said, I do think that hacking and R&D acceleration are also going to be important factors, and my main critique of this story is that it doesn't discuss those elements and implies that they aren't important.

...In addition to building more data centers, MegaAI starts constructing highly automated factories, which will produce the

I think it mostly means that you should be looking to get quick wins. When calculating the effectiveness of an intervention, don't assume things like "over the course of an 85-year lifespan this person will be healthier due to better nutrition now." or "this person will have better education and thus more income 20 years from now." Instead just think: How much good does this intervention accomplish in the next 5 years? (Or if you want to get fancy, use e.g. a 10%/yr discount rate)

See Neartermists should consider AGI timelines in their spending decisions - ...
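To see how much a 10%/yr discount rate shrinks long-horizon benefits, here is an illustrative sketch for a hypothetical intervention yielding 1 unit of benefit per year. The 85-year lifespan and 10%/yr rate come from the comment; the constant benefit stream is an assumption.

```python
def discounted_total(annual_benefit: float, years: int, rate: float) -> float:
    """Present value of a constant yearly benefit received at the end of each year."""
    return sum(annual_benefit / (1 + rate) ** t for t in range(1, years + 1))


lifetime_undiscounted = discounted_total(1.0, 85, 0.0)
five_year_window = discounted_total(1.0, 5, 0.0)
lifetime_discounted = discounted_total(1.0, 85, 0.10)
print(lifetime_undiscounted, five_year_window, round(lifetime_discounted, 2))
```

At 10%/yr, 85 years of benefit are worth just under 10 units instead of 85, so most of the value a long-horizon model would credit is discounted away.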

I also guess cry wolf-effects won't be as large as one might think - e.g. I think people will look more at how strong AI systems appear at a given point than at whether people have previously warned about AI risk.

Oh dang. How about: When you press the button it doesn't donate the money right away, but just adds it to an internal tally, and then once a quarter you get a notification saying 'time to actually process this quarter's donations, press here to submit your face for scanning, sorry bout the inconvenience'

Oh dang. I definitely want it to be the former, not the latter. Maybe we can get around the iOS platform constraints somehow, e.g. when you press the button it doesn't donate the money right away, but just adds it to an internal tally, and then once a quarter you get a notification saying 'time to actually process this quarter's donations, press here to submit your face for scanning, sorry bout the inconvenience'
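The tally-then-settle-quarterly idea can be sketched as a small state machine. This is a hypothetical design, not tied to any real iOS or payment API: `DonationTally` and `quarter_of` are made-up names, and the face-scan confirmation is assumed to happen outside this code before `settle` is called.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


def quarter_of(d: date) -> tuple[int, int]:
    """(year, quarter number 1-4) for a calendar date."""
    return (d.year, (d.month - 1) // 3 + 1)


@dataclass
class DonationTally:
    last_settled_quarter: tuple[int, int]
    pending_cents: int = 0

    def press_button(self, amount_cents: int) -> None:
        # No payment happens here; the pledge is only recorded locally.
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        self.pending_cents += amount_cents

    def settlement_due(self, today: date) -> bool:
        # Prompt the user once a new calendar quarter has begun and pledges are pending.
        return self.pending_cents > 0 and quarter_of(today) != self.last_settled_quarter

    def settle(self, today: date) -> int:
        # Called after the user confirms; returns the total to charge and resets the tally.
        amount, self.pending_cents = self.pending_cents, 0
        self.last_settled_quarter = quarter_of(today)
        return amount
```

Batching this way means the user confirms a payment only once per quarter, no matter how many times they press the button in between.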

Hmmm. I really don't want the karma, I was using it as a signal of how good the idea is. Like, creating this app is only worth someone's time and money if it becomes a popular app that lots of people use. So if it only gets like 20 karma then it isn't worth it, and arguably even if it gets 50 karma it isn't worth it. But if it blows up and hundreds of people like it, that's a signal that it's going to be used by lots of people.

Maybe I should have just asked "Comment in the comments if you'd use this app; if at least 30 people do so then I'll fund this app." Idk. If y'all think I should do something like that instead I'm happy to do so.

ETA: Edited the OP to remove the vote-brigady aspect.

Not sure I'm assuming that. Maybe. The way I'd put it is, selection pressure towards grabby values seems to require lots of diverse agents competing over a lengthy period, with the more successful ones reproducing more / acquiring more influence / etc. Currently we have this with humans competing for influence over AGI development, but it's overall fairly weak pressure. What sorts of things are you imagining happening that would strengthen the pressure? Can you elaborate on the sort of scenario you have in mind?

Nice post! My current guess is that the inter-civ selection effect is extremely weak and that the intra-civ selection effect is fairly weak. N=1, but in our civilization the people gunning for control of AGI seem more grabby than average but not drastically so, and it seems possible for this trend to reverse, e.g. if the US government nationalizes all the AGI projects.

Nice. Well, I guess we just have different intuitions then -- for me, the chance of extinction or worse in the Octopcracy case seems a lot bigger than "small but non-negligible" (though I also wouldn't put it as high as 99%).

Human groups struggle against each other for influence/power/control constantly; why wouldn't these octopi (or AIs) also seek influence? You don't need to be an expected utility maximizer to instrumentally converge; humans instrumentally converge all the time.

Oh also you might be interested in Joe Carlsmith's report on power-seeking AI, it has a relatively thorough discussion of the overall argument for risk.

Nice analysis!

I think a main point of disagreement is that I don't think systems need to be "dangerous maximizers" in the sense you described in order to predictably disempower humanity and then kill everyone. Humans aren't dangerous maximizers, yet we've killed many species of animals, Neanderthals, and various other human groups (genocide, wars, oppression of populations by governments, etc.) Katja's scenario sounds plausible to me except for the part where somehow it all turns out OK in the end for humans. :)

Another, related point of disagreement:...

Great list, thanks!

My current tentative expectation is that we'll see a couple things in 1, but nothing in 2+, until it's already too late (i.e. until humanity is already basically in a game of chess with a superior opponent, i.e. until there's no longer a realistic hope of humanity coordinating to stop the slide into oblivion, by contrast with today where we are on a path to oblivion but there's a realistic possibility of changing course.)

OK, thanks. My vague recollection is that A&S were making conservative guesses about the time needed to disassemble Mercury; I forget the details. But Mercury is 10^23 kg roughly, so e.g. a 10^9 kg starting colony would need to grow 14 OOMs bigger, which it could totally do in much less than 20 years if its doubling time is much less than a year. E.g. if it has a one-month doubling time, then the disassembly of Mercury could take like 4 years. (this ignores constraints like heat dissipation to be clear... though perhaps those can be ignored, if we are ...
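The Mercury arithmetic can be checked directly. A sketch using the comment's rough figures (10^23 kg for Mercury, a 10^9 kg starting colony, a one-month doubling time); like the comment, it ignores heat dissipation and every other physical limit.

```python
import math


def years_to_reach(start_kg: float, target_kg: float, doubling_time_years: float) -> float:
    """Years for a self-replicating colony to grow from start_kg to target_kg
    at a constant doubling time (ignores heat dissipation and other limits)."""
    doublings = math.log2(target_kg / start_kg)
    return doublings * doubling_time_years


MERCURY_KG = 1e23   # rough figure used in the comment (actual mass is about 3.3e23 kg)
COLONY_KG = 1e9     # starting colony mass from the comment's example

print(math.log10(MERCURY_KG / COLONY_KG))             # orders of magnitude to grow
print(years_to_reach(COLONY_KG, MERCURY_KG, 1 / 12))  # one-month doubling time
```

14 OOMs is about 46.5 doublings, so roughly 3.9 years at a one-month doubling time, consistent with "like 4 years".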