All of Madhav Malhotra's Comments + Replies

@trevor1 Thank you for the detailed response!

RE: Crossposting to LessWrong

- I've crossposted it now. If there are other forums relevant to cybersecurity topics in EA in particular, I'd appreciate suggestions :-)

RE: Personal Cybersecurity and IoT

- Yes, I agree that the best way to improve cybersecurity with personal IoT devices is to avoid them. I'll update the wording to be clearer about that.

Here's a summary of the report from Claude-1 if someone's looking for an 'abstract':

...There are several common misconceptions about biological weapons that contribute to underestimating the threat they pose. These include seeing them as strategically irrational, not tactically useful, and too risky for countries to pursue.

In reality, biological weapons have served strategic goals for countries in the past like deterrence and intimidation. Their use could also provide tactical advantages in conflicts.

Countries have historically taken on substantial risks in p...

"There are many other things that could have been done to prevent Russia’s unprovoked, illegal attack on Ukraine. Ukraine keeping nuclear weapons is not one of them."

- Could you explain your thinking more for those not familiar with the military strategy involved? What about having nuclear weapons makes an invasion more viable? Which specific alternatives would be more useful in preventing the attacks and why?

Context: I'm hoping to find lessons from nuclear security that are transferable to the security of bioweapons and transformative AI.

Question: Are there specific reports you could recommend on preventing these nuclear security risks:

- Insider threats (including corporate/foreign espionage)

- Cyberattacks

- Arms races

- Illicit / black market proliferation

- Fog of war

A lot of people have gotten the message "Direct your career towards AI Safety!" from EA. Yet there seem to be far too few opportunities to get mentorship or a paying job in AI safety. (I say this having seen others' comments on the forum and having personally applied to 5+ fellowships where there were 500-3000% more applicants than spots.)

What advice would you give to those feeling disenchanted by their inability to make progress in AI safety? How is 80K Hours working to better balance (though perhaps not entirely) the supply and demand for AI safety mentorship/jobs?

It would be awesome if there were more mentorship/employment opportunities in AI Safety! Agree this is a frustrating bottleneck. Would love to see more senior people enter this space and open up new opportunities. Definitely the mentorship bottleneck makes it less valuable to try to enter technical AI safety on the margin, although we still think it's often a good move to try, if you have the right personal fit. I'd also add this bottleneck is way lower if you: 1. enter via more traditional academic or software engineer routes rather than via 'EA fellowshi...

For what it's worth, I run an EA university group outside the U.S. (at the University of Waterloo in Canada). I haven't observed any of the points you mentioned in my experience with the EA group:

- We don't run intro to EA fellowships because we're a smaller group. We're not trying to convert more students to be 'EA'. We focus more on supporting whoever's interested in working on EA-relevant projects (ex: a cheap air purifier, a donations advisory site, a cybersecurity algorithm, etc.), whether or not they identify with the EA movement.

- Since we're...

...we're not hosting any discussions where a group organiser could convince people to work on AI safety over all else.

I feel it is important to mention that this isn't supposed to happen during introductory fellowship discussions. CEA and other group organizers have compiled recommendations for facilitators (here is one, for example), and all the ones I have read quite clearly state that the role of the facilitator is to help guide the conversation, not overly opine or convince participants to believe in x over y.

Out of curiosity @LondonGal, have you received any followups from HLI in response to your critique? I understand you might not be at liberty to share all details, so feel free to respond as you feel appropriate.

Context: I work as a remote developer in a government department.

Practices that help:

- Show up at least 3 minutes early to every meeting. Set your clocks to run 3 minutes ahead if you can't discipline yourself to do it. It shows commitment.

- On a related note, take personal time to reflect before a meeting. Think of questions you want to ask or what you want to achieve, even if you're not hosting the meeting and only do it for 5 minutes.

- Try scheduling a calendar reminder with an intention before the meeting. Ex: Say back what others said...

It takes courage to share such detailed stories of goals not working out! Good on you for doing so :-)

It seems that two kinds of improvements within EA might be helpful to reduce the probability of other folks having similar experiences.

Proactively, we could adjust the incentives promoted (especially by high-visibility organisations like 80K Hours). Specifically, I think it would be helpful to:

- Recommend that early-career folks try out university programs with internships/co-ops in the field they think they'd enjoy. This...

Thank you for your thoughtful questions!

RE: "I guess the goal is to be able to run models on devices controlled by untrusted users, without allowing the user direct access to the weights?"

You're correct that these techniques are useful for preventing models from being used in unintended ways when they run on untrusted devices! However, I think of the goal a bit more broadly: the goal is to add another layer of defence behind a cybersecure API (or another trusted execution environment) to prevent a model from being stolen a...

I'm not sure this is a good idea.

- It seems possible that the individual interventions whose research you're linking to are not representative of every possible skill-development intervention.

- Also, it seems possible that future interventions may integrate building both human and economic capital to enable recipients to make changes in their lives, i.e., skill-building + direct cash transfers.

- Also, it's generally uncertain whether GiveDirectly will continue to be the most effective or most endorsed donation recommendation. I say this given...

Nice, thanks for your question!

One relevant thing here is that I'm not thinking about the book giveaway as just (or even primarily) an incentive to get people to view our site — I think most of the value is probably in getting people in our target audience to read the books we give out, because I think the books contain a lot of important ideas. I think I'd be potentially excited about doing this without the connection to 80k at all (though the connection to 80k/incentive to engage with us seems like an added bonus).

Re: physical books versus ebook:

- We do of...

If you're interested in supporting education, scholarships to next-generation education companies might be worth supporting (example - disclaimer: I've gone through the program of this particular company).

Regarding investments in environmental causes, more neglected causes are more valuable to invest in. For instance, you could support NOVEL carbon capture companies (i.e., not tree planting).

Given the high-tech industry in Canada, it might be relatively advantageous to support neglected research priorities.

- For instance, you might be ab...

It would be helpful to hear more details (including sources) about the problem you've found:

- What has the NSA publicly announced in its position on AGI?

- What has the external academic community or relevant nonprofits assessed their likely plans to be?

- Which decision-makers are involved in determining the NSA's policies on AGI development and/or safety?

Also, please add a more specific call to action describing:

- The action you want to be taken

- Which kinds of people are best suited to do this

"I'm not sure I buy the fourth point - while there will be some competition between plant-based and cell-based meat, they also both compete with the currently much larger traditional meat market, and I think there are some consumers who would eat plant-based but not cell-based and vice versa."

- How confident are you in your reasoning here?

- What kind of empirical evidence do you think would disprove/prove this argument?

The evidence I've seen (Source) suggests that consumers are largely confused about the difference between cell-based and plant-based ...

I'm curious, how do you think about the relative importance of promoting cell-based (cultivated) vs. plant-based meat?

- From an animal suffering perspective, they both displace animals that might suffer.

- From an environmental perspective, plant-based meat is currently much better. (Source)

- Economically, one could argue that more competition will lead to more product choice, winning over more consumers.

- But one could also argue that the competition between plant-based and animal-based meats will keep traditional meats being consumed for l...

I'd be interested in hearing someone from Anthropic discuss the upsides or downsides of this arrangement. From an entirely personal standpoint, it seems odd that Anthropic gave up equity AND accepted restrictions on how the investment could be used. That said, I imagine there are MANY other details I'm not aware of, since I wasn't involved in the decision.

For anyone seeking more information on this, feel free to search for the key terms 'data poisoning' and 'Trojans.' The Center for AI Safety has somewhat accessible content and notes on this under lecture 13 here.

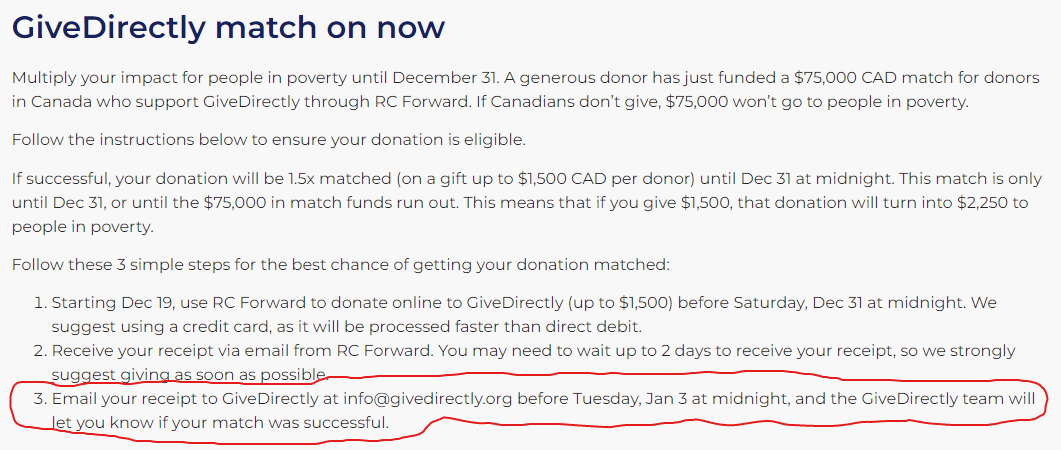

Update: lesson learned - read the fine print effectively

- My donation wasn't matched. I didn't do enough due diligence to notice a final clause on their website saying we had to email our donation receipt somewhere.

- One 'little' mistake on my part is the difference between 50 people not stuck in poverty and 18 people not stuck in poverty.

- Doing good effectively = reading the fine print effectively.

I'm interested in building a career around technological risks. Sometimes, I think about all the problems we're facing. From biosecurity risks to AI safety risks to cybersecurity risks to ... And it all feels so big and pressing and hopeless and whatnot.

Clearly, my being existential about these issues wasn't helping anyone. So I've had to accept that I have to pick a smaller, very specific problem where my skillset could be useful. Even if that doesn't solve everything, I won't solve anything if I don't specialise in that way.

Maybe some spirit ...

Update: the Double Up Drive donation matching is no longer available.

Donations to Animal Charity Evaluators, Helen Keller International, and StrongMinds are still being matched.

For Canadian donors, donations to GiveDirectly are being matched to at least 50%.

Brief comment, but it is GREAT to see Kurzgesagt making more EA-aligned videos! I just watched their videos on how helping others lead prosperous lives is in your own interest.

- It's great to see EA content in other languages. When I watched the videos, they hadn't yet been released in English, though I'll comment with a link to the English video later.

- The simple explanations and cute visuals are quite a relief compared to complex/endless posts on the forum. I'd never heard of this line of reasoning on the forum, and I'm pretty glad I got to learn it like...

To elaborate on the point that I think Arjun is making, the general tip seems self-evidently good. Stating it isn't very valuable compared with precise tips on HOW to get a mentor, or on how good this is relative to other good things (to figure out how much it should be prioritised compared to something else).

Useful context: I'm 19. I stopped reading after the "Use your brainspace wisely" section.

Overall impression: boring as stated :D

More specific feedback:

- The tips seem very diverse (tips on relationships, mental health, physical environment, and learning skills were all under "Use your brainspace wisely"). It's unclear how they relate to each other, so it's confusing to read and to figure out where to find a given tip.

- This could be addressed by having very clear headings. Ex: "Tips on Where You Live." Ex: "Tips on the Relationships You Develop." Ex: "T...

My aim in this article wasn't to be technically precise. Instead, I was trying to be as simple as possible.

If you'd like to let me know the technical errors, I can try to edit the post if:

- The correction seems useful for a beginner trying to understand AI Safety.

- I can find feasible ways to explain the technical issues simply.

Again, I agree with you regarding the reality that every civilisation has eventually collapsed. I personally also agree that it currently seems unlikely that our 'modern globalised' civilisation will avoid collapse, though I'm no expert on the matter.

I have no particular insight about how comparable the collapse of the Roman Empire is to the coming decades of human existence.

I agree that amidst all the existential threats to humankind, the content of this article is quite narrow.

The UX has improved so much since the 2022 version of this :-) It feels concise, and scrolling to each new graph makes it interesting to learn each new thing. Kudos to whoever designed it this way!