All of Thomas Kwa🔹's Comments + Replies

Being really good at your job is a good way to achieve impact in general, because your "impact above replacement" is what counts. If a replacement level employee who is barely worth hiring has productivity 100, and the average productivity is 150, the average employee will get 50 impact above replacement. If you do your job 1.67x better than average (250 productivity), you earn 150 impact above replacement, which is triple the average.
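A minimal Python sketch of the arithmetic above. It assumes the comment's illustrative numbers (replacement level 100, average 150), and the function name is only for illustration.

```python
def impact_above_replacement(productivity: float, replacement: float = 100.0) -> float:
    """Impact that counts: productivity minus what a barely-hireable replacement would produce."""
    return productivity - replacement


average = impact_above_replacement(150.0)  # 50.0
strong = impact_above_replacement(250.0)   # 150.0

# Being 250/150 (about 1.67x) as productive as average gives 3x the impact above replacement.
print(f"productivity ratio: {250.0 / 150.0:.2f}")
print(f"impact ratio: {strong / average:.1f}")
```

The leverage comes from subtracting the same fixed replacement level from both numbers, so a modest productivity edge becomes a much larger ratio of counted impact.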

I strongly disagree with a couple of claims:

MIRI's business model relies on the opposite narrative. MIRI pays Eliezer Yudkowsky $600,000 a year. It pays Nate Soares $235,000 a year. If they suddenly said that the risk of human extinction from AGI or superintelligence is extremely low, in all likelihood that money would dry up and Yudkowsky and Soares would be out of a job.

[...] The kind of work MIRI is doing and the kind of experience Yudkowsky and Soares have isn't really transferable to anything else.

- $235K is not very much money [edit: in the context of

$235K is not very much money. I made close to Nate's salary as basically an unproductive intern at MIRI.

I understand the point being made (Nate plausibly could get a pay rise from an accelerationist AI company in Silicon Valley, even if the work involved was pure safetywashing, because those companies have even deeper pockets), but I would stress that these two sentences underline just how lucrative peddling doom has become for MIRI[1] as well as how uniquely positioned all sides of the AI safety movement are.

There are not many organizations whose mes...

See the GPT-5 report. "Working lower bound" is maybe too strong; it's probably more accurate to describe it as an initial guess at a warning threshold for rogue replication and 10x uplift (if we can even measure time horizons that long). I don't know what the exact reasoning behind 40 hours was, but one fact is that humans can't really start viable companies using plans that only take a ~week of work. IMO if AIs could do the equivalent with only a 40-human-hour time horizon and continuously evade detection, they'd need to use their own advantages and have made up many current disadvantages relative to humans (like being bad at adversarial and multi-agent settings).

What scale is the METR benchmark on? I see a line saying "Scores are normalized such that 100% represents a 50% success rate on tasks requiring 8 human-expert hours", but is the 0% point on the scale 0 hours?

METR does not think that 8 human hours is sufficient autonomy for takeover; in fact 40 hours is our working lower bound.

What if we decide that the Amazon rainforest has a negative WAW sign? Would you be in favor of completely replacing it with a parking lot, if doing so could be done without undue suffering of the animals that already exist there?

Definitely not completely replacing, because biodiversity has diminishing returns to land. If we pave the whole Amazon we'll probably extinct entire families (not to mention we probably cause ecological crises elsewhere and disrupt ecosystem services, etc.), whereas on the margin we'll only extinct species endemic to the deforested re...

It's plausible to me that biodiversity is valuable, but with AGI on the horizon it seems a lot cheaper in expectation to do more out-there interventions, like influencing AI companies to care about biodiversity (alongside wild animal welfare), recording the DNA of undiscovered rainforest species about to go extinct, and buying the cheapest land possible (middle of Siberia or Australian desert, not productive farmland). Then when the technology is available in a few decades and we're better at constructing stable ecosystems de novo, we can terraform the des...

I totally agree that there are some "out there" interventions that, in a perfect world, we would be funding much more. In particular biobanking (recording the DNA of species about to go extinct) should be considered much more, I totally agree. Unfortunately, the world is full of techno-pessimists, deontologists, post-structuralists, diplomats who don't know what any of the preceding words even mean, etc. This seems insane, but MANY conservationists are against de-extinction for (in my view) fairly straightforward technophobic reasons. Convincing THOS...

A couple more "out-there" ideas for ecological interventions:

- "recording the DNA of undiscovered rainforest species" -- yup, but it probably takes more than just DNA sequences on a USB drive to de-extinct a creature in the future. For instance, probably you need to know about all kinds of epigenetic factors active in the embryo of the creature you're trying to revive. To preserve this epigenetic info, it might be easiest to simply freeze physical tissue samples (especially gametes and/or embryos) instead of doing DNA sequencing. You might

Thanks for the reply.

- Everyone has different emotional reactions so I would be wary about generalizing here. Of the vegetarians I know, certainly not all are disgusted by meat. Disgust is often more correlated with whether they use a purity frame of morality or experience disgust in general than how much they empathize with animals [1]. Empathy is not an end; it's not required for virtuous action, and many people have utilitarian, justice-centered, or other frames that can prescribe actions with empathy taking a lesser role. As for me, I feel that after exp

- You're shooting the messenger. I'm not advocating for downvoting posts that smell of "the outgroup", just saying that this happens in most communities that are centered around an ideological or even methodological framework. It's a way you can be downvoted while still being correct, especially by the LEAST thoughtful 25% of EA Forum voters.

- Please read the quote from Claude more carefully. MacAskill is not an "anti-utilitarian" who thinks consequentialism is "fundamentally misguided", he's the moral uncertainty guy. The moral parliament usually recommends actions similar to consequentialism with side constraints in practice.

I probably won't engage more with this conversation.

Claude thinks possible outgroups include the following, which is similar to what I had in mind

...Based on the EA Forum's general orientation, here are five individuals/groups whose characteristic opinions would likely face downvotes:

- Effective accelerationists (e/acc) - Advocates for rapid AI development with minimal safety precautions, viewing existential risk concerns as overblown or counterproductive

- TESCREAL critics (like Emile Torres, as you mentioned) - Scholars who frame longtermism/EA as ideologically dangerous, often linking it to eugenics, colonialism

- My main concern is that the arrival of AGI completely changes the situation in some unexpected way.

- e.g. in the recent 80k podcast on fertility, Rob Wiblin opines that the fertility crash would be a global priority if not for AI likely replacing human labor soon and obviating the need for countries to have large human populations. There could be other effects.

- My guess is that due to advanced AI, both artificial wombs and immortality will be technically feasible in the next 40 years, as well as other crazy healthcare tech. This is not an uncommon view

- Before

Yeah, while I think truth-seeking is a real thing I agree it's often hard to judge in practice and vulnerable to being a weasel word.

Basically I have two concerns with deferring to experts. First, when the world lacks people with true subject matter expertise, whoever has the most prestige (maybe not CEOs, but certainly mainstream researchers on slightly related questions) will be seen as experts, and we will need to worry about deferring to them.

Second, because EA topics are selected for being too weird/unpopular to attract mainstream attention/fund...

I think the "most topics" thing is ambiguous. There are some topics on which mainstream experts tend to be correct and some on which they're wrong, and although expertise is valuable on topics experts think about, they might be wrong on most topics central to EA. [1] Do we really wish we deferred to the CEO of PETA on what animal welfare interventions are best? EAs built that field in the last 15 years far beyond what "experts" knew before.

In the real world, assuming we have more than five minutes to think about a question, we shouldn't "defer" to experts ...

Can you explain what you mean by "contextualizing more"? (What a curiously recursive question...)

I mean it in this sense: making people think you're not part of the outgroup and don't hold objectionable beliefs related to the ones you actually hold, in whatever way is sensible and honest.

Maybe LW is better at using the disagree button, as I find it's pretty common there for unpopular opinions to get lots of upvotes and disagree votes. One could use the API to see if the correlations are different there.

IMO the real answer is that veganism is not an essential part of EA philosophy, just happens to be correlated with it due to the large number of people in animal advocacy. Most EA vegans and non-vegans think that their diet is a small portion of their impact compared to their career, and it's not even close! Every time you spend an extra $5 finding a restaurant with a vegan option you could help 5,000 shrimp instead. Vegans have other reasons like non-consequentialist ethics, virtue signaling or self-signaling, or just a desire not to eat the actual flesh/...

I really enjoyed reading this post; thanks for writing it. I think it's important to take space colonization seriously and shift into "near mode" given that, as you say, the first entity to start a Dyson Swarm has a high chance to get DSA if it isn't already decided by AGI, and it's probably only 10-35 years away.

Assorted thoughts

- Rate limits should not apply to comments on your own quick takes

- Rate limits could maybe not count negative karma below -10 or so; it seems much better to rate limit someone only when they have multiple downvoted comments

- 2.4:1 is not a very high karma:submission ratio. I have 10:1 even if you exclude the April Fools' Day posts, though that could be because I have more popular opinions, which means that I could double my comment rate, get -1 karma on the extras, and still be above 3.5

- if I were Yarrow I would contextualize more or use m

When 80,000 Hours pivoted to AI, I largely stopped listening to the podcast, thinking that as part of the industry I would already know everything. But I recently found myself driving a lot and consuming more audio content, and the recent episodes, e.g. with Holden, Daniel K and ASB, are incredibly high quality and contain highly nontrivial, grounded opinions. If they keep this up I will probably keep listening until the end times.

What inspiring and practical examples!

Maybe a commitment to impact causes EA parents to cooperate to maximize it, which means optimally distributing the parenting workload regardless of what society thinks. In EA, with lots of conferences and hardworking, impactful women, it makes sense that the man's opportunity cost is often lower. Elsewhere, couples cooperate to maximize income, but men tend to have higher earning potential, so maybe the woman would often do more childcare anyway.

My sense is that parenting falls on the woman due not only to gender norms, but also higher ave...

I don't have reason to think that prioritizing women's careers is more common in EA than in other similarly educated groups. And within EA, I definitely think it's still most common that women are doing more of the parenting work. But I wanted to highlight some examples to show that multiple configurations really are possible!

There are a few mistakes/gaps in the quantitative claims:

Continuity: If A ≻ B ≻ C, there's some probability p ∈ (0, 1) where a guaranteed state of the world B is ex ante morally equivalent to "lottery p·A + (1-p)·C" (i.e., p chance of state of the world A, and the rest of the probability mass of C)

This is not quite the same as either property 3 or property 3' in the Wikipedia article, and it's plausible but unclear to me that you can prove 3' from it. Property 3 uses "p ∈ [0, 1]" and 3' has an inequality; it seems like the argument still goes through with ...
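For reference, here are the three conditions side by side. Properties 3 and 3′ are restated from memory of the Wikipedia article on the von Neumann-Morgenstern utility theorem, so the notation may differ slightly from the article. The post's version uses strict preferences like 3′ but an equivalence like 3.

```latex
\begin{align*}
&\text{Continuity (this post):} && A \succ B \succ C \implies \exists\, p \in (0,1):\ B \sim pA + (1-p)C \\
&\text{Property 3 (continuity):} && L \preceq M \preceq N \implies \exists\, p \in [0,1]:\ pL + (1-p)N \sim M \\
&\text{Property 3$'$ (Archimedean):} && L \prec M \prec N \implies \exists\, \varepsilon \in (0,1):\ (1-\varepsilon)L + \varepsilon N \prec M \prec \varepsilon L + (1-\varepsilon)N
\end{align*}
```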

Ok interesting! I'd be interested in seeing this mapped out a bit more, because it does sound weird to have BOS be offsettable with positive wellbeing, positive wellbeing not be offsettable with NOS, but BOS and NOS be offsettable with each other? Or maybe this isn't your claim and I'm misunderstanding

This is what kills the proposal IMO, and EJT also pointed this out. The key difference between this proposal and standard utilitarianism where anything is offsettable isn't the claim that NOS is worse than TREE(3) or even 10^100 happy lives, sin...

It is not necessary to be permanently vegan for this. I have only avoided chicken for about 4 years, and hit all of these benefits.

- Because evidence suggests that when we eat animals we are likely to view them as having lower cognitive capabilities or moral status (see here for a wikipedia blurb about it).

- I have felt sufficient empathy for chicken for basically the whole time I haven't eaten it. I also went vegan for (secular) Lent four years ago, and felt somewhat more empathy for other animals, but my sense is eating non-chicken animals didn't meaningfull

I perceive it as +EV to me, but I feel like I'm not the best buyer of short timelines. I would maybe do even odds on before 2045 for smaller amounts, which is still good for you if you think the yearly chance won't increase much. Otherwise maybe you should seek a bet with someone like Eli Lifland. The reason I'm not inclined to make large bets is that the markets would probably give better odds for something that unlikely, e.g. options that pay out with very high real interest rates, whereas a few hundred dollars is enough to generate good EA Forum discussion.

No bet. I don't have a strong view on short timelines or unemployment. We may find a bet about something else; here are some beliefs

- my or Linch's position vs yours on probability of extinction from nuclear war (I'd put $2 against your $98 that you ever update upwards by 50:1 on extinction by nuclear war by 2050, but no more for obvious reasons)

- >25% that global energy consumption will increase by 25% year over year some year before 2035 (30% is the AI expert median, superforecaster median is <1%), maybe more

- probably >50% that a benchmark by Mechani
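One possible reading of why the nuclear-war odds stop at $2 against $98: if someone's credence behaves like a calibrated martingale, Ville's maximal inequality bounds the chance that it ever rises from p to q by p/q. Here is a minimal sketch, assuming "update upwards by 50:1" means the odds get multiplied by 50. The function name is illustrative.

```python
def max_chance_of_odds_update(prior_prob: float, factor: float) -> float:
    """Upper bound on P(credence ever reaches `factor` times the prior odds).

    Treats credence as a nonnegative martingale, so by Ville's inequality
    P(sup_t P_t >= q) <= P_0 / q.
    """
    if not 0.0 < prior_prob < 1.0 or factor <= 1.0:
        raise ValueError("need 0 < prior_prob < 1 and factor > 1")
    prior_odds = prior_prob / (1.0 - prior_prob)
    target_odds = factor * prior_odds
    target_prob = target_odds / (1.0 + target_odds)
    return prior_prob / target_prob


# For a small prior, the bound approaches 1/50 = 2%, i.e. $2 against $98.
for prior in (1e-2, 1e-3, 1e-6):
    print(f"prior {prior:g}: bound {max_chance_of_odds_update(prior, 50):.4f}")
```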

Thanks for the suggestions, Thomas! Would you like to make the following bet?

- If the global energy consumption as reported by Our World in Data (OWID) increases by more than 25 % from year Y to Y + 1, for any Y <= 2033 (such that the maximum Y + 1 is 2034, before 2035), I donate 12 k$ to an organisation or fund of your choice in July 2035 (OWID updates their data in June).

- Otherwise, you donate 4 k$ to an organisation or fund of my choice in July 2035.

Your expected gain is more than 0 (= (0.25*12 - (1 - 0.25)*4)*10^3), but sufficiently so? You said ">2...
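With 12 k$ against 4 k$, the break-even probability is exactly 25%, so the expected gain is positive only if the true probability is above 25%. Here is a small sketch using the dollar amounts from the proposed bet. The function names are illustrative.

```python
def expected_gain(p: float, win: float = 12_000.0, loss: float = 4_000.0) -> float:
    """Expected donation to your side: `win` with probability p, `-loss` otherwise."""
    return p * win - (1.0 - p) * loss


def break_even_probability(win: float, loss: float) -> float:
    """Probability at which expected_gain is exactly zero."""
    return loss / (win + loss)


print(break_even_probability(12_000.0, 4_000.0))  # 0.25
print(expected_gain(0.25))  # 0.0
print(expected_gain(0.30))
```

Each percentage point above 25% adds (12 + 4) k$ × 0.01 = 160 $ of expected gain, so 30% would be worth about 800 $.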

A footnote says the 0.15% number isn't an actual forecast: "Participants were asked to indicate their intuitive impression of this risk, rather than develop a detailed forecast". But superforecasters' other forecasts are roughly consistent with 0.15% for extinction, so it still bears explaining.

In general I think superforecasters tend to anchor on historical trends, while AI safety people anchor on what's physically possible or conceivable. Superforecasters get good accuracy compared to domain experts on most questions because domain experts in many fields...

I think I would take your side here. Unemployment above 8% requires replacing so many jobs that humans can't find new ones elsewhere even during the economic upswing created by AGI, and there are fewer than 2 years until the middle of 2027. This is not enough time for robotics (on current trends, robotics time horizons will be under 1 hour), and AI companies can afford to keep hiring humans even if they wouldn't generate enough value most places, so the question is whether we see extremely high unemployment in remotable sectors that automate away existing jobs...

Spreading around the term “humane meat” may get it into some people’s heads that this practice can be humane, which could in turn increase consumption overall, and effectively cancel out whatever benefits you’re speculating about.

I don't know what the correct definition of "humane" is, but I strongly disagree with this claim in the second half. The question is whether higher-welfare imports reduce total suffering once we account for demand effects. So we should care about improving conditions from "torture camps" -> "prisons" -> "decent". Tortu...

Yeah, because I believe in EA and not in the socialist revolution, I must believe that EA could win some objective contest of ideas over socialism. In the particular contest of EA -> socialist vs socialist -> EA conversions I do think EA would win since it's had a higher growth rate in the period both existed, though someone would have to check how many EA deconverts from the FTX scandal became socialists. This would be from both signal and noise factors; here's my wild guess at the most important factors:

- Convinced EAs have been exposed to the "root

At risk of further psychoanalyzing the author, it seems like they're naturally more convinced by forms of evidence that EAs use, and had just not encountered them until this project. Many people find different arguments more compelling, either because they genuinely have moral or empirical assumptions incompatible with EA, or because they're innumerate. So I don't think EA has won some kind of objective contest of ideas here.

Nevertheless this was an interesting read and the author seems very thoughtful.

One problem is putting everything on a common scale when historical improvements are so sensitive to the distribution of tasks. A human with a computer with C, compared to a human with just log tables, is a billion times faster at multiplying numbers but less than twice as fast at writing a novel. So your distribution of tasks has to be broad enough that it captures the capabilities you care about, but it also must be possible to measure a baseline score at low tech level and have a wide range of possible scores. This would make the benchmark extremely difficult to construct in practice.
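This sketch illustrates how much the task mix matters. It aggregates per-task speedups with a weighted geometric mean, which is an assumption, since the comment doesn't name an aggregation rule. The speedups come from the example above, treating "less than twice as fast" as 2x. The weights are hypothetical.

```python
import math


def aggregate_speedup(speedups: list[float], weights: list[float]) -> float:
    """Weighted geometric mean of per-task speedups."""
    if not speedups or len(speedups) != len(weights):
        raise ValueError("need one weight per speedup")
    total = sum(weights)
    log_mean = sum(w * math.log(s) for s, w in zip(speedups, weights)) / total
    return math.exp(log_mean)


# Computer + C vs. log tables: ~1e9x at multiplication, ~2x at writing a novel.
speedups = [1e9, 2.0]
print(f"{aggregate_speedup(speedups, [0.5, 0.5]):,.0f}x")  # equal weighting
print(f"{aggregate_speedup(speedups, [0.1, 0.9]):,.1f}x")  # mostly novel-like tasks
```

The same pair of tools scores roughly 45,000x under equal weights but only about 15x when 90% of the weight is on novel-like tasks.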

If your algorithms get more efficient over time at both small and large scales, and experiments test incremental improvements to architecture or data, then they should get cheaper to run proportionally to algorithmic efficiency of cognitive labor. I think this is better as a first approximation than assuming they're constant, and might hold in practice especially when you can target small-scale algorithmic improvements.

I'm worried that trying to estimate by looking at wages is subject to lots of noise due to assumptions being violated, which could result in the large discrepancy you see between the two estimates.

One worry: I would guess that Anthropic could derive more output from extra researchers (1.5x/doubling?) than from extra GPUs (1.18x/doubling?), yet it spends more on compute than researchers. In particular I'd guess alpha/beta = 2.5, and wages/r_{research} is around 0.28 (maybe you have better data here). Under Cobb-Douglas and perfect competition th...
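The numbers above can be checked with a short sketch. It assumes output Y = A · L^α · K^β, so doubling one input multiplies output by 2^α (or 2^β). Under perfect competition, each input's spending is proportional to its exponent, so the predicted ratio of researcher spending to compute spending is α/β. The per-doubling multipliers are the comment's guesses, not data.

```python
import math


def exponent_from_doubling(multiplier: float) -> float:
    """Cobb-Douglas exponent implied by the output multiplier from doubling one input."""
    return math.log2(multiplier)


alpha = exponent_from_doubling(1.5)   # researchers (labor), about 0.585
beta = exponent_from_doubling(1.18)   # GPUs (compute), about 0.239

# With each factor paid its marginal product, wL / (rK) = alpha / beta.
predicted_labor_to_compute_spend = alpha / beta
print(f"alpha = {alpha:.3f}, beta = {beta:.3f}, alpha/beta = {predicted_labor_to_compute_spend:.2f}")
```

The ratio of about 2.45 matches the guessed alpha/beta = 2.5. It would predict spending roughly 2.5x as much on researchers as on compute, which is the reverse of what we observe.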

(Edited to fix numbers; I forgot that 2 boxes means +3 dB.)

dB is logarithmic so a proportional reduction in sound energy will mean subtracting an absolute number of dB, not a percentage reduction in dB.

HouseFresh tested the AirFanta 3Pro https://housefresh.com/airfanta-3pro-review/ at different voltage levels and found:

- 12.6 V: 56.3 dBA, 14 minutes

- 6.54 V: 43.3 dBA, 28 minutes

So basically you subtract 13 dB when halving the CADR. I now realize that if you have two boxes, the sound energy will double (+3dB) and so you'll actually only get -10 dB from running two at ...
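Here is a minimal sketch of the decibel arithmetic using the two HouseFresh measurements. Incoherent sources add in energy, so two identical boxes are 10·log10(2) ≈ 3 dB louder than one.

```python
import math


def combine_db(*levels_db: float) -> float:
    """Total level of incoherent sources: convert to energy, add, convert back to dB."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))


one_box_full_speed = 56.3   # dBA at 12.6 V
one_box_half_cadr = 43.3    # dBA at 6.54 V (cleaning took twice as long)

two_boxes_half_cadr = combine_db(one_box_half_cadr, one_box_half_cadr)
print(f"two half-speed boxes: {two_boxes_half_cadr:.1f} dBA")
print(f"reduction vs one full-speed box: {one_box_full_speed - two_boxes_half_cadr:.1f} dB")
```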

I broadly agree with section 1, and in fact since we published I've been looking into how time horizon varies between domains. Not only is there lots of variance in time horizon, the rate of increase also varies significantly.

See a preliminary graph plus further observations on LessWrong shortform.

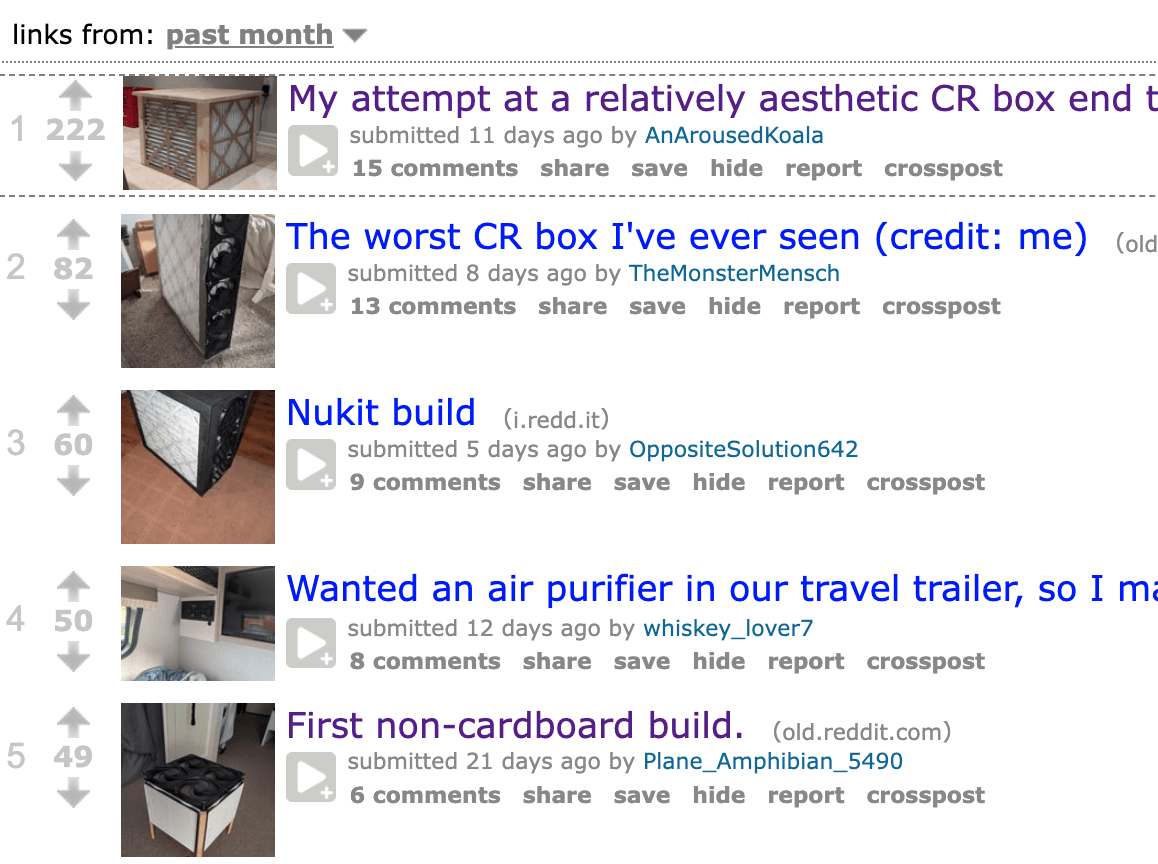

That's a box fan CR box; the better design (and the one linked) uses PC fans which are better optimized for noise. I don't have much first-hand experience with this, but physics suggests that noise from the fan will be proportional to power usage, which is pressure * airflow, if efficiency is constant, and this is roughly consistent with various tests I've found online.
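Under the stated assumption (sound output proportional to fan power, i.e. pressure × airflow, at constant efficiency), the noise change between two operating points is just the log of the power ratio. This is a sketch, and the operating points in the example are hypothetical.

```python
import math


def noise_change_db(pressure_1: float, airflow_1: float,
                    pressure_2: float, airflow_2: float) -> float:
    """dB change if sound power scales with aerodynamic power (pressure * airflow).

    Any consistent units work, since only the ratio matters.
    """
    power_1 = pressure_1 * airflow_1
    power_2 = pressure_2 * airflow_2
    return 10.0 * math.log10(power_2 / power_1)


# Hypothetical: same airflow through a setup with half the static pressure.
print(f"{noise_change_db(100.0, 350.0, 50.0, 350.0):.1f} dB")
```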

Both further upsizing and better sound isolation would be great. What's the best way to reduce duct noise in practice? Is an 8" flexible duct quieter than a 6" rigid duct or will most of the noise improvement come from oversizing the termination, removing tight bends or installing some kind of silencer device? I might suggest this to a relative.

Isn't particulate what we care about? The purpose of the filters is to get particulate out of the air, and the controlled experiment Jeff did basically measures that. If air mixing is the concern, ceiling fans can mix air far more than required, and you can just measure particulate in several locations anyway.

A pair of CR boxes can also get 350 CFM CADR at the same noise level for less materials cost than either this or the ceiling fan, and also have much less installation cost. E.g. two of this CleanAirKits model on half speed would probably cost <$250 if it were mass-produced. This is the setup in my group house living room and it works great! DIY CR boxes can get to $250/350 CFM right now.

The key is having enough filter area to make the static pressure and thus power and noise minimal-- the scaling works out such that every doubling of filter area at a gi...

On a global scale I agree. My point is more that due to the salary standards in the industry, Eliezer isn't necessarily out of line in drawing $600k, and it's probably not much more than he could earn elsewhere; therefore the financial incentive is fairly weak compared to that of Mechanize or other AI capabilities companies.