Vasco Grilo🔸

Bio


I am a generalist quantitative researcher. I am open to volunteering and paid work. I welcome suggestions for posts. You can give me feedback here (anonymously or not).

How others can help me

I am open to volunteering and paid work (I usually ask for 20 $/h). I welcome suggestions for posts. You can give me feedback here (anonymously or not).

How I can help others

I can help with career advice, prioritisation, and quantitative analyses.


> We'll likely take you up on this.

You are welcome to reach out any time. I am also open to looking into CEAs you may have done in the past if you think that is helpful. I also gave feedback on the CEAs of 7 charities Animal Charity Evaluators (ACE) assessed in 2025.

> An impact analysis or cost-effectiveness estimate is one of the most asked questions.

Great to know.

> When talking with the organizations, we however almost always land on gathering data on the project first to inform why a program is generating results (otherwise, a CEA/CEE isn't very actionable).

That makes sense. At the same time, I think there are cases where a CEA could show that an intervention is not promising even without any data collection. The parameter values required to make the intervention cost-effective relative to a relevant benchmark could be unrealistic. CEAs are one way Ambitious Impact (AIM) assesses which charities to incubate.
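A minimal Python sketch of such a break-even check follows. The cost, the benchmark and the most optimistic realistic effect are hypothetical placeholders, not figures from any real CEA, and the model (cost-effectiveness = effect / cost) is deliberately simplistic.

```python
def break_even_effect(cost_usd: float, benchmark_per_usd: float) -> float:
    """Effect needed to match the benchmark, assuming cost-effectiveness = effect / cost."""
    return benchmark_per_usd * cost_usd


# Hypothetical inputs, for illustration only.
cost_usd = 100_000.0            # total cost of the intervention ($)
benchmark_per_usd = 2.0         # benchmark cost-effectiveness (e.g. SADs averted per $)
plausible_max_effect = 50_000   # most optimistic realistic effect (e.g. SADs averted)

required = break_even_effect(cost_usd, benchmark_per_usd)
promising = required <= plausible_max_effect

print(f"Required effect: {required:,.0f}; optimistic effect: {plausible_max_effect:,}")
print("Can match the benchmark" if promising else "Cannot match the benchmark even optimistically")
```

If the effect required to match the benchmark exceeds even an optimistic estimate, that suggests the intervention could be deprioritised without collecting data first.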

> Even if the actual number of animal/dollar isn't actionable (yet), a CEA/CEE can be used to identify the most likely drivers for impact.

Agreed. The CEA could show that whether an intervention is cost-effective relative to a relevant benchmark crucially depends on some key parameters. Data collection could then focus on decreasing the uncertainty in these.
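One simple way to find those key parameters is a one-at-a-time (tornado) sensitivity analysis: move each parameter from a low to a high guess while holding the others at their base values, and rank the parameters by the resulting swing in cost-effectiveness. A minimal Python sketch, assuming a hypothetical multiplicative model; the parameter names, ranges and cost are made up for illustration.

```python
from math import prod

# Hypothetical multiplicative CEA: effect per $ = product of parameters / cost.
# Each parameter has a made-up (low, base, high) guess.
PARAMS = {
    "animals_affected": (1_000.0, 10_000.0, 50_000.0),
    "prob_success": (0.05, 0.2, 0.5),
    "welfare_gain_per_animal": (0.1, 1.0, 2.0),
}
COST_USD = 50_000.0


def cost_effectiveness(values: dict) -> float:
    """Effect per dollar under the hypothetical multiplicative model."""
    return prod(values.values()) / COST_USD


def one_at_a_time(params: dict) -> list:
    """Swing in cost-effectiveness as each parameter moves from low to high,
    holding the others at their base values (a simple tornado analysis)."""
    base = {name: b for name, (_, b, _) in params.items()}
    swings = {}
    for name, (low, _, high) in params.items():
        low_ce = cost_effectiveness({**base, name: low})
        high_ce = cost_effectiveness({**base, name: high})
        swings[name] = high_ce - low_ce
    # Largest swing first: candidates for focusing data collection on.
    return sorted(swings.items(), key=lambda kv: kv[1], reverse=True)


for name, swing in one_at_a_time(PARAMS):
    print(f"{name}: {swing:.3f}")
```

One-at-a-time analysis ignores interactions between parameters; a Monte Carlo analysis over joint distributions would capture them, at the cost of having to specify those distributions.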

I worry that just 4 human-anchored pain intensities are not enough for reliable comparisons, even at an early stage. If shrimp-anchored annoying pain is 10^-6 times as intense as human-anchored annoying pain (the ratio between the number of neurons of an individual shrimp and that of an individual human), and human-anchored annoying pain is 10^-6 times as intense as human-anchored excruciating pain, shrimp-anchored annoying pain would be 10^-12 (= (10^-6)^2) times as intense as human-anchored excruciating pain. It seems super hard to cover such a wide range of pain intensities with any significant reliability using just 4 values?
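The size of that range can be made concrete with a short calculation. The Python sketch below uses the 2 ratios of 10^-6 above as illustrative assumptions, and additionally assumes (hypothetically) that the 4 anchor intensities are spaced evenly on a log scale across the range.

```python
import math

# Illustrative ratios from the comment above, not measured values.
shrimp_to_human_annoying = 1e-6        # shrimp- vs human-anchored annoying pain
human_annoying_to_excruciating = 1e-6  # human-anchored annoying vs excruciating pain

# Chain the ratios: shrimp-anchored annoying vs human-anchored excruciating pain.
ratio = shrimp_to_human_annoying * human_annoying_to_excruciating
orders_of_magnitude = -math.log10(ratio)

# Hypothetical: 4 anchors spaced evenly on a log scale leave 3 gaps.
n_anchors = 4
step_factor = 10 ** (orders_of_magnitude / (n_anchors - 1))

print(f"Range: {orders_of_magnitude:.0f} orders of magnitude")
print(f"Factor between adjacent anchors: {step_factor:.0e}")
```

Under that assumption, adjacent anchors would differ by a factor of about 10^4, so each anchor would have to stand in for intensities spanning several orders of magnitude.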

Thanks for sharing, Nicoll. Could you share how much demand you have found for cost-effectiveness analyses (CEAs)? I am open to reviewing these for free.

Hi Jamie. I agree.

> The Happier Lives Institute’s investigations into intervention cost-effectiveness find that some mental health interventions are over 5x more effective than GiveDirectly’s cash transfers

Nitpick. I do not think the above is exactly supported by the graph with the cost-effectiveness comparison. According to the graph, only Friendship Bench (48.5 WELLBY/k$) is over 5 times more cost-effective than GiveDirectly, which (reading "5 times more" as 6 times as cost-effective) corresponds to having a cost-effectiveness over 45.6 WELLBY/k$ (= (1 + 5)*7.6 WELLBY/k$). There are 2 charities, Friendship Bench and StrongMinds (40.4 WELLBY/k$), that are more than 5 times as cost-effective as GiveDirectly, which corresponds to having a cost-effectiveness over 38.0 WELLBY/k$ (= 5*7.6 WELLBY/k$), but they both deliver the same type of mental health intervention ("Task-shifted group psychotherapy").
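These thresholds can be checked mechanically. A minimal Python sketch using only the figures quoted in this comment, with GiveDirectly's cost-effectiveness taken as the 7.6 WELLBY/k$ implied by the stated thresholds; the helper name `above` is hypothetical.

```python
# Cost-effectiveness in WELLBY/k$, as quoted in the comment above.
GIVEDIRECTLY = 7.6
CHARITIES = {"Friendship Bench": 48.5, "StrongMinds": 40.4}


def above(multiple_of_givedirectly: float) -> list:
    """Charities whose cost-effectiveness exceeds the given multiple of GiveDirectly's."""
    threshold = multiple_of_givedirectly * GIVEDIRECTLY
    return [name for name, ce in CHARITIES.items() if ce > threshold]


# "Over 5x more effective", read literally as more than 6 times as cost-effective.
print(f"{6 * GIVEDIRECTLY:.1f} WELLBY/k$: {above(6)}")
# "More than 5 times as cost-effective".
print(f"{5 * GIVEDIRECTLY:.1f} WELLBY/k$: {above(5)}")
```

The two readings of "5x more" differ by a whole multiple of GiveDirectly's cost-effectiveness, which is enough to change whether StrongMinds clears the bar.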

Thanks for the update. I like your BHAG of reaching 1 million pledgers.

> This year, we shift from laying foundations to strengthening them while starting to push more seriously on growth. We’re aiming to grow 40% on most of our key pledge and donations metric (e.g. aiming for roughly 1500 new 🔸10% Pledges). This puts us on a trajectory of achieving our BHAG by 2040.

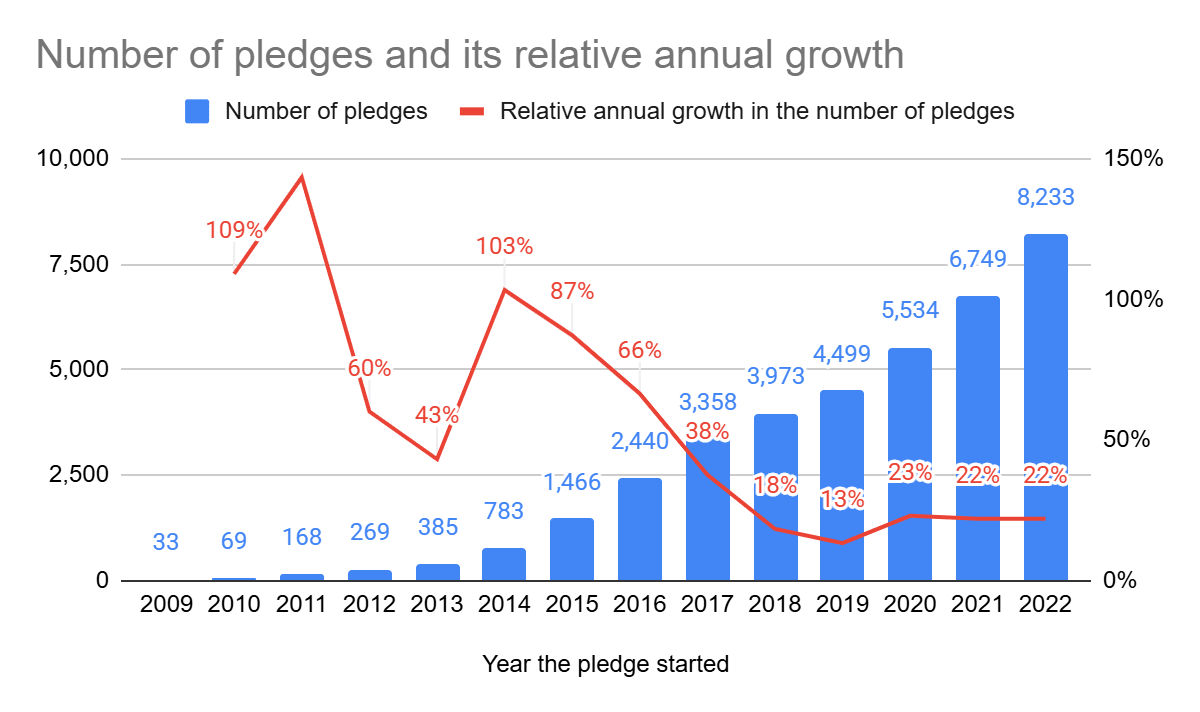

The graph above shows the growth of the number of 10 % Pledges from 2009 to 2022. Annual growth of 40 % from 2025 to 2040 seems very ambitious relative to the growth in 2018-2022. On the other hand, you now have "16 core staff", whereas you only had a few during 2018-2022?
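To put the 40 %/year target in perspective, here is a minimal Python sketch of compound growth. The 1500 new pledges in 2025 and the 40 % rate come from the quoted plan; constant growth through 2040 and no attrition are simplifying assumptions, not the plan's.

```python
# Hypothetical projection of new 10% Pledges per year (no attrition assumed).
START_YEAR, END_YEAR = 2025, 2040
NEW_PLEDGES_START = 1_500   # target for 2025, from the quoted plan
GROWTH = 0.40               # 40 %/year, from the quoted plan

# Multiplier on yearly new pledges after 15 years of compounding.
growth_factor = (1 + GROWTH) ** (END_YEAR - START_YEAR)

# New pledges in each year from 2025 to 2040 inclusive, assuming constant growth.
new_pledges = [NEW_PLEDGES_START * (1 + GROWTH) ** (year - START_YEAR)
               for year in range(START_YEAR, END_YEAR + 1)]

print(f"New pledges in {END_YEAR} vs {START_YEAR}: {growth_factor:.0f}x")
print(f"Cumulative new pledges {START_YEAR}-{END_YEAR}: {sum(new_pledges):,.0f}")
```

Under these assumptions, new pledges in 2040 would be roughly 156 times those in 2025, and cumulative new pledges from 2025 to 2040 would be around 0.81 million.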

Thanks for the post, Dilan.

> Likewise, if we're optimising for the reduction of near-term animal suffering, then we can compare welfare improvement projects using a standardised measure of suffering like Ambitious Impact's "Suffering-Adjusted Days" (SADs) and invest in the ones which reduce the most SADs per dollar.

1 SAD corresponds to 1 human-day of disabling pain, so the metric is not restricted to the near term. It can be used to measure reductions in suffering even if they happen far into the future (although I guess more than 90 % of the overall effect materialises in the first 100 years for the vast majority of interventions).
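A minimal Python sketch of how SADs averted in different years can simply be added up, and of how one could check the share of the effect in the first 100 years. The exponential decay profile, its 20-year decay time and the initial 1000 SADs averted per year are hypothetical, chosen only for illustration.

```python
import math

# Hypothetical profile: SADs averted per year decay exponentially over time.
INITIAL_SADS_PER_YEAR = 1_000.0
DECAY_TIME_YEARS = 20.0   # made-up e-folding time
HORIZON_YEARS = 1_000     # long enough to capture almost all of the effect


def sads_averted(year: int) -> float:
    """SADs averted in a given year after the intervention starts."""
    return INITIAL_SADS_PER_YEAR * math.exp(-year / DECAY_TIME_YEARS)


# SADs are additive across years, so totals are plain sums.
total = sum(sads_averted(year) for year in range(HORIZON_YEARS))
first_century = sum(sads_averted(year) for year in range(100))
print(f"Share of SADs averted in the first 100 years: {first_century / total:.1%}")
```

With this made-up profile, about 99 % of the SADs averted fall within the first 100 years; slower decay would lower that share.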

> Many advocates are working towards a broader project of ending industrial food animal production (IFAP).

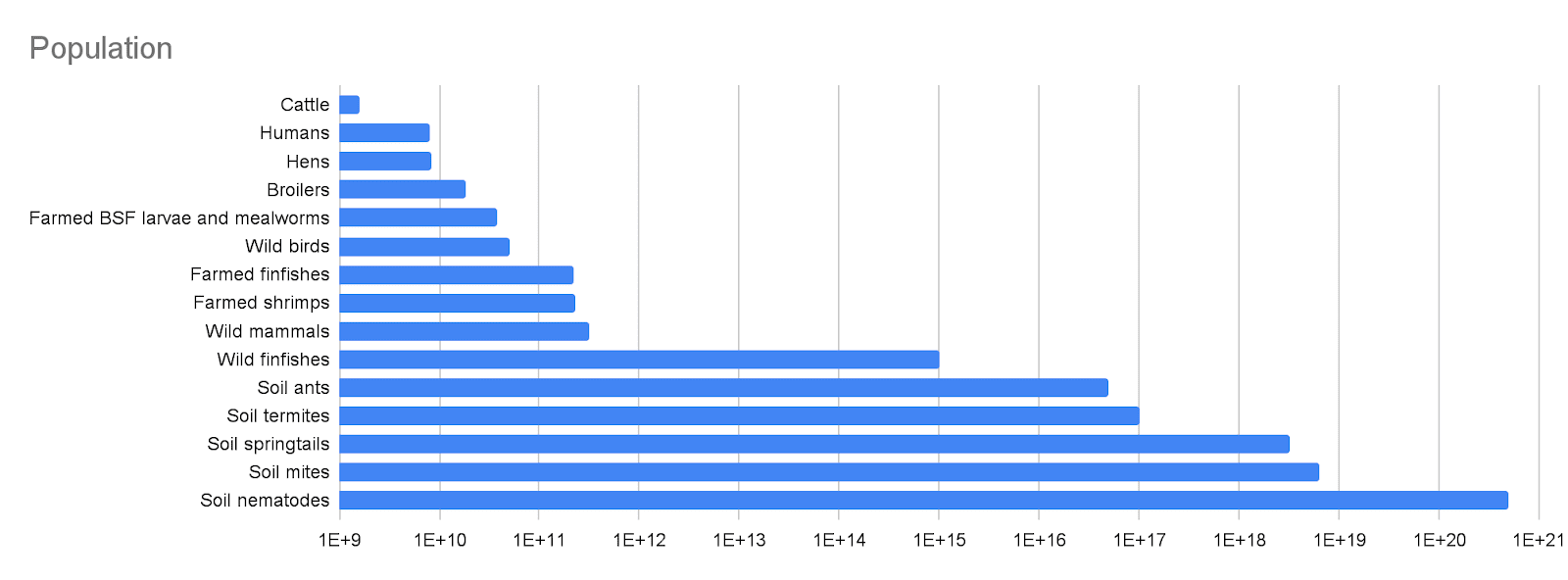

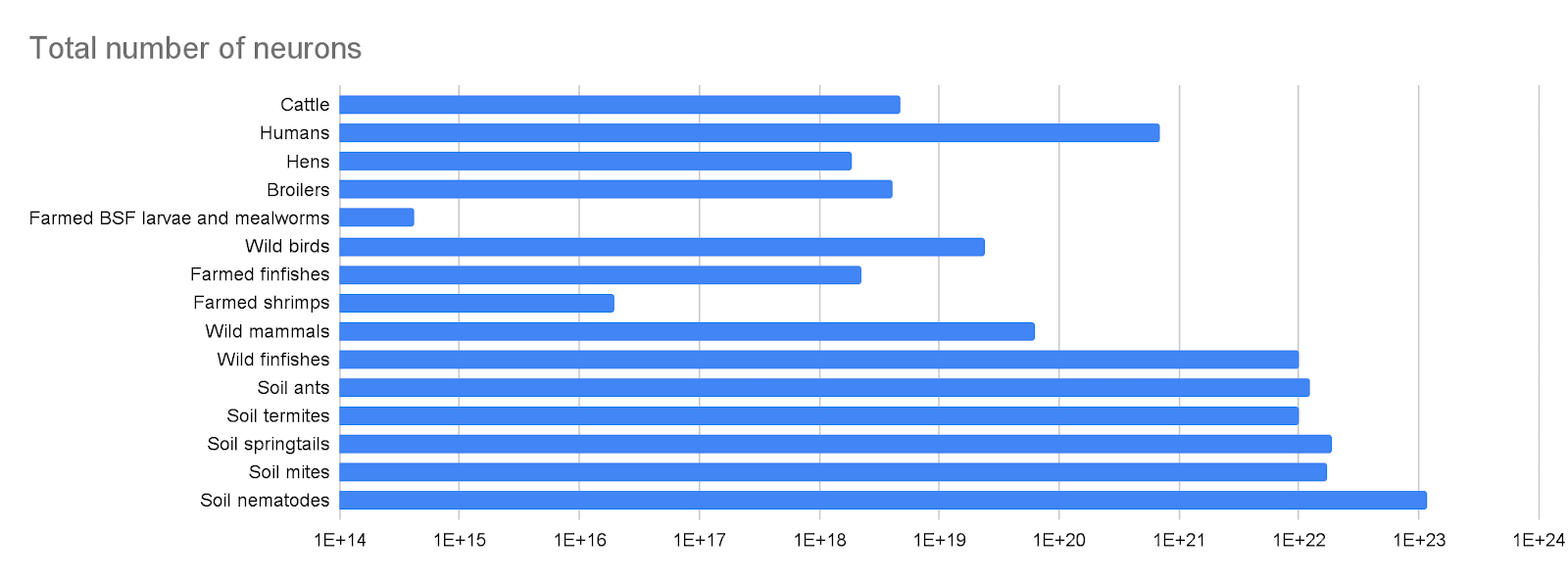

There is a sense in which reducing animal suffering (as measured by SADs) is a broader project than ending large-scale farming? Farmed animals account for a tiny fraction of all animals and neurons.

> Each of these is attacking IFAP from a different angle. Therefore it's extremely hard - if not outright impossible - to compare their cost-effectiveness, because each project's "unit of impact" is completely different.

Since you mention "if not outright impossible", do you think ending industrial farming is intrinsically good? I believe what matters is just decreasing suffering and increasing happiness, so I would ultimately assess interventions decreasing industrial farming in these terms. I worry about using non-welfare final outcomes because animals in large-scale farming can have positive lives, with more happiness than suffering, under some conditions. In this case, they would prefer existing over not existing. I also think positive lives are very much possible in practice. I estimate that the welfare per chicken-year of slower growth broilers is 92.9 % higher than that of fast growth broilers, and that of layers in barns 80.4 % higher than that of layers in battery cages, which suggests that lives in the improved conditions are negative, but close to neutral (estimates of 100 % higher welfare would suggest neutral lives). I guess cows, broilers, and layers in organic production have positive lives.
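The step from "X % higher" to "negative, but close to neutral" can be made explicit. A minimal Python sketch, assuming that "p % higher" is measured relative to the magnitude of a negative baseline, i.e. W_improved = W_baseline + (p/100)*|W_baseline|, with each baseline (fast growth broilers, layers in battery cages) normalised to -1 for illustration.

```python
def improved_welfare(baseline: float, percent_higher: float) -> float:
    """Welfare after an improvement that is `percent_higher` % above a baseline.

    "Higher" is measured relative to the magnitude of the baseline, so for a
    negative baseline, 100 % higher gives exactly 0 (a neutral life).
    """
    return baseline + percent_higher / 100 * abs(baseline)


BASELINE = -1.0  # hypothetical normalisation of the conventional system's welfare per chicken-year
for label, pct in [
    ("slower growth broilers", 92.9),
    ("layers in barns", 80.4),
    ("neutral threshold", 100.0),
]:
    print(f"{label}: {improved_welfare(BASELINE, pct):+.3f}")
```

Under this reading, 92.9 % and 80.4 % higher welfare leave lives slightly negative (-0.071 and -0.196 of the baseline's magnitude), and only 100 % higher would reach neutrality.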

Thanks for the good points, Matt.

> So we can't assume that CG is filling EG organizations' budgets until their multiplier is about 1.

What if funding caps are part of CG's strategy to maximise impact? They may result in greater diversification of funding sources, and therefore greater resilience against shortfalls in CG's funding, and potentially more funding in the long term. These benefits would not be observed in the near term, so the expected marginal multiplier may be closer to 1 than the observed near-term marginal multiplier.

Neglecting CG's assessment costs, the ideal funding cap would vary by grantee. However, having a single funding limit or just a few could save time, and therefore be closer to optimal once those costs are accounted for.

@Melanie Basnak🔸, do you have any thoughts?

To clarify, I do not know whether keeping an updated dashboard is a good use of time relative to other activities you could be doing. My overarching point is just that I personally find it valuable to see clear metrics reported that seem reasonably correlated with impact, such as the number of active subscribers to the EA Newsletter.