Venkatesh

Bio

I am a doctoral student in Public Policy and Political Economy at UTDallas and am looking for opportunities in the social sector, especially in think tanks. I previously interned at the Center for Global Development and worked as a Data Analyst at a boutique consulting firm for ~2.7 years. My undergraduate degree was in Physics.

If you are considering reading something I have written on this forum, please see: Interpreting the Systemistas-Randomistas debate on development strategy

How others can help me

Point me to opportunities for an empiricist to work in the policy sector (think tanks, consultancies, etc.)


Great comment, David! It made me focus on the heart of the question here. It is simply this: what is the right counterfactual to EA, the philosophy/academic discipline? OP is comparing EA philosophy/discipline to Econ. But is that fair? When I read your comment this morning, I noticed how I utterly failed to clarify that EA is less rigorous... than what?! It got me thinking empirically, and I quickly whipped up some stuff using OpenAlex, which is an open-source repository of publications used by bibliometricians. This preliminary analysis doesn't resolve the question of what the counterfactual to EA is, but it begins to describe the problem better.

If you're interested, see my GitHub repo with more details on the research design that I imagined and the Python/R code. I wonder if OP will like this because I'm doing stuff that a (design-based) econometrician would do :-)
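To make the data pull concrete, here is a minimal standard-library sketch of querying the OpenAlex works API and tallying venues and topics. It is illustrative only, not the code in the GitHub repo. The search term is an assumption, `fetch_works` needs network access, and the nested field paths (`primary_location.source.display_name`, `primary_topic.display_name`) follow the OpenAlex work schema as documented; verify them against the current API docs before relying on them.

```python
import json
import urllib.parse
import urllib.request
from collections import Counter

BASE_URL = "https://api.openalex.org/works"


def build_query_url(search_term: str, per_page: int = 200, cursor: str = "*") -> str:
    """URL for one page of works matching a full-text search (cursor paging)."""
    params = {"search": search_term, "per_page": per_page, "cursor": cursor}
    return f"{BASE_URL}?{urllib.parse.urlencode(params)}"


def fetch_works(search_term: str, max_pages: int = 5) -> list:
    """Follow OpenAlex cursor pagination; requires network access."""
    works, cursor = [], "*"
    for _ in range(max_pages):
        with urllib.request.urlopen(build_query_url(search_term, cursor=cursor)) as resp:
            page = json.load(resp)
        works.extend(page["results"])
        cursor = page["meta"].get("next_cursor")
        if not cursor:  # no more pages
            break
    return works


def count_field(works: list, *path: str) -> Counter:
    """Tally values at a nested path, e.g. ("primary_topic", "display_name")."""
    counts = Counter()
    for work in works:
        value = work
        for key in path:
            # OpenAlex often returns null for missing locations/topics
            value = value.get(key) if isinstance(value, dict) else None
        counts[value if value is not None else "(missing)"] += 1
    return counts
```

Usage (network required): `works = fetch_works('"effective altruism"')`, then `count_field(works, "primary_location", "source", "display_name").most_common(10)` for the top venues, or `count_field(works, "primary_topic", "display_name")` for the topic spread.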

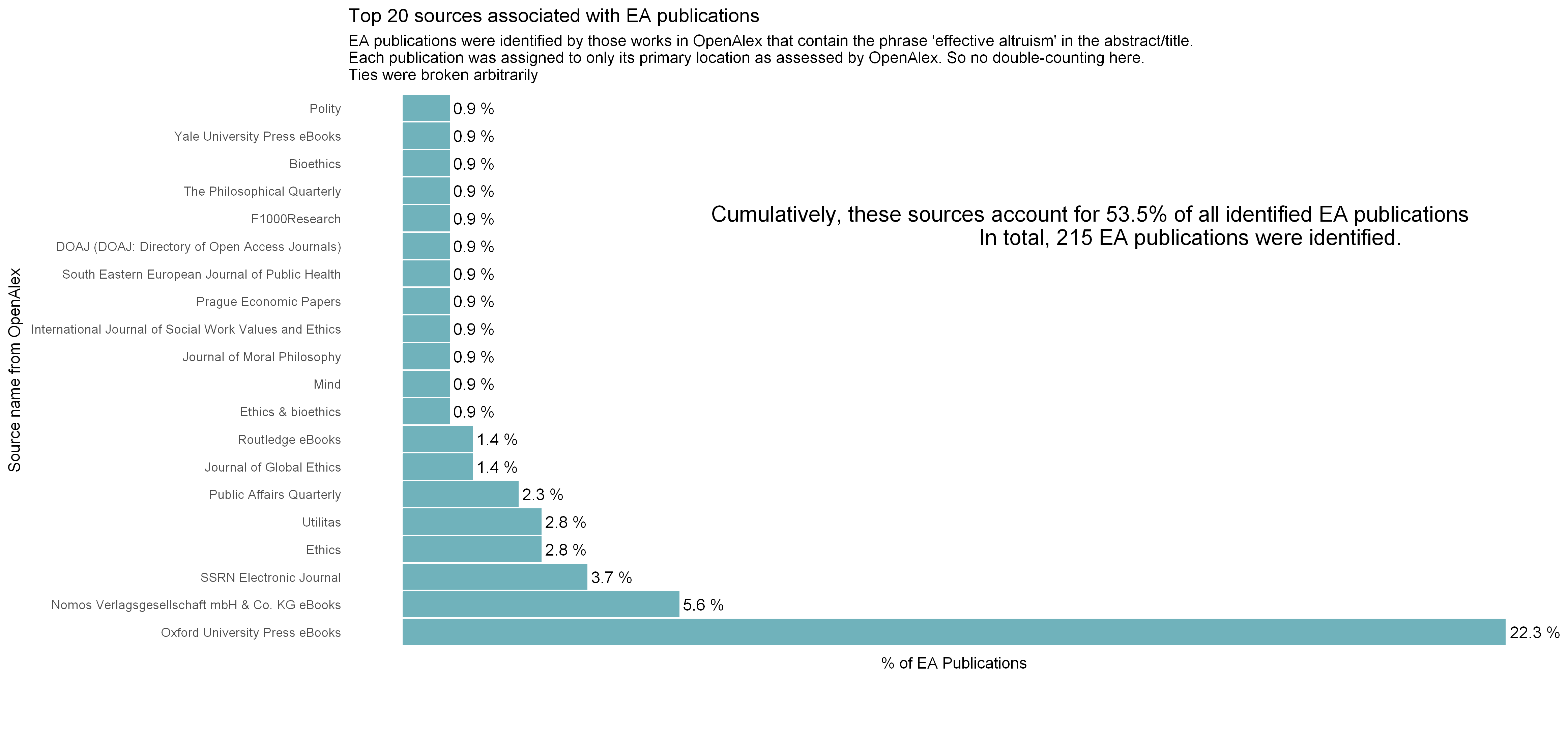

First up, let's validate that what David said makes sense: are EA publications in top journals?

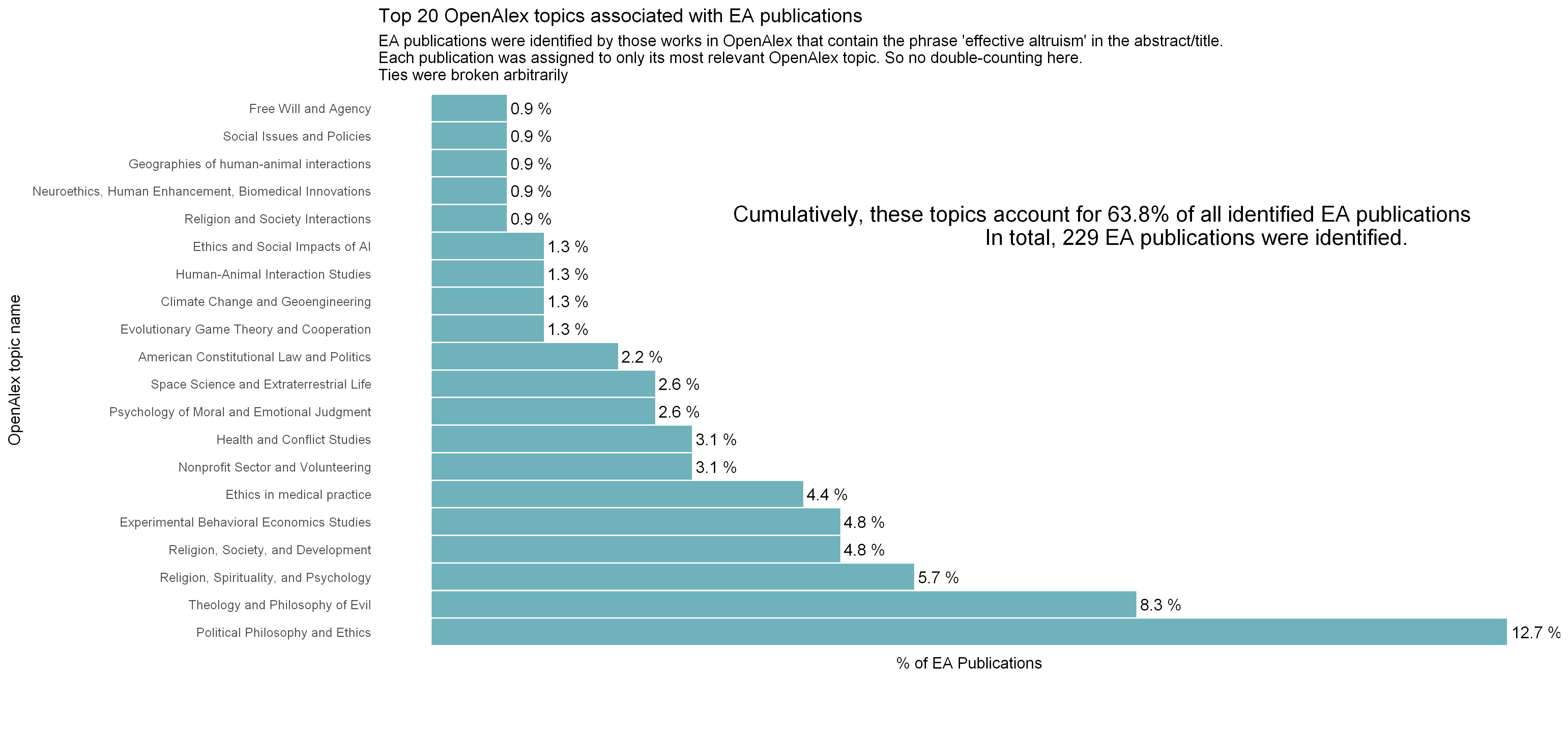

Yep. David is not wrong. But of course, that is not the question! The claim I want to make is that EA publications are more insular than other stuff, and the obstacle to making this claim is: what the hell is "other stuff"?! This is where OpenAlex topics come in. OpenAlex uses a clustering + classification pipeline to assign papers to topics. Here is a plot showing that:

Now, the next step would be to ask ourselves: "EA has such a spread of topics. But field X has a much wider spread. This is why EA is insular." But what is that field X? EA compared to Deontology? Utilitarianism? These have been around for decades, so how is that a fair comparison group? What exactly is the benchmark against which to weigh EA, the discipline?
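One hedged way to make "spread of topics" measurable is the Shannon entropy of a field's topic distribution, normalized by its maximum so that fields with different numbers of topics are comparable. This is an illustrative choice, not something the analysis above commits to, and it still leaves open which field X to compare against. The topic labels below are made up.

```python
import math
from collections import Counter


def topic_entropy(topics: list) -> float:
    """Shannon entropy (bits) of the distribution of topic labels."""
    counts = Counter(topics)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def normalized_entropy(topics: list) -> float:
    """Entropy / log2(#distinct topics): 0 = all in one topic, 1 = perfectly even."""
    k = len(set(topics))
    return topic_entropy(topics) / math.log2(k) if k > 1 else 0.0


# Hypothetical topic labels, one per paper.
ea_topics = ["Moral philosophy"] * 6 + ["AI risk"] * 2 + ["Global health"] * 2
field_x_topics = ["Moral philosophy", "AI risk", "Global health", "Economics"] * 3
print(round(normalized_entropy(ea_topics), 3), round(normalized_entropy(field_x_topics), 3))
```

A lower normalized entropy for EA than for a candidate field X would be one (crude) operationalization of "field X has a much wider spread"; the hard part remains choosing X.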

Maybe the way to do this is to pull out all the papers in the topics I have plotted above from OpenAlex and compare against those. But I guess a better way would be to pull out the abstracts of all publications, clean them up, tokenize, cluster, see what's close by, and compare against that. Can someone else make this pipeline more concrete?
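A rough sketch of that pipeline, using only the Python standard library so it runs anywhere: rebuild abstracts from OpenAlex's `abstract_inverted_index`, clean and tokenize them, build TF-IDF vectors, and list each paper's nearest neighbours by cosine similarity. Two plain assumptions: the stopword list is a toy placeholder, and a nearest-neighbour lookup stands in for the "cluster" step (a real run might use k-means or a topic model instead). The example abstracts are invented.

```python
import math
import re
from collections import Counter

# Toy stopword list (placeholder assumption; use a proper list in practice).
STOPWORDS = {"the", "a", "an", "of", "and", "in", "to", "for", "on", "we", "is", "this"}


def abstract_from_inverted_index(inverted: dict) -> str:
    """Rebuild plain text from OpenAlex's abstract_inverted_index (word -> positions)."""
    by_position = {pos: word for word, positions in inverted.items() for pos in positions}
    return " ".join(by_position[i] for i in sorted(by_position))


def tokenize(text: str) -> list:
    """Clean step: lowercase, keep alphabetic runs, drop stopwords."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def tfidf_vectors(docs: list) -> list:
    """Sparse TF-IDF vectors: raw term count x smoothed IDF, log((1+n)/(1+df)) + 1."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    return [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]


def cosine(u: dict, v: dict) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(sum(w * w for w in v.values()))
    return dot / norm if norm else 0.0


def nearest_neighbors(query_idx: int, vectors: list, k: int = 3) -> list:
    """Indices of the k documents most similar to vectors[query_idx], excluding itself."""
    scored = [(cosine(vectors[query_idx], v), i) for i, v in enumerate(vectors) if i != query_idx]
    return [i for _, i in sorted(scored, reverse=True)[:k]]


abstracts = [
    "Effective altruism and global priorities",
    "Effective altruism and cause prioritization",
    "Soil chemistry of nitrogen",
    "Global priorities research for effective giving",
]
vectors = tfidf_vectors(abstracts)
print(nearest_neighbors(0, vectors, k=2))
```

The comparison group would then be whatever non-EA papers keep showing up among EA papers' neighbours, rather than a field chosen by hand.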

EDIT: Fixed broken links

I loved this critique and sympathize with it. As somebody studying Public Policy, which requires interfacing with all the different social science departments at my university, I understand what you mean by the "insularity" of the economics department!

But I think you are missing a bigger critique here: EA philosophy lacks rigor because of an intrinsic insularity that it did not inherit from elsewhere. If anything, EA has to start acknowledging ideas from other disciplines and stop reinventing the wheel! This includes acknowledging economics and, as you rightly say, other humanities. In fact, this opinion is shared by an economist, Tyler Cowen. See his critique of Parfit's 'On What Matters' (Link to article). My reading of his critique is that Parfit (who planted the seeds for EA philosophy) was too much of a philosopher, to his own detriment! He couldn't engage with scholars from other disciplines to see the progress already made on many of the questions he was interested in.

Overall, I agree with you that EA philosophy must engage widely with ideas from outside. But I disagree that this is a problem it inherited from economics. I think it is intrinsic to EA philosophy due to the circumstances in which it was born (i.e., an academic sitting in a cabin in the woods). That is why I don't find it appealing at all when EA people plan to live with other EAs, date other EAs, or work only at EA-aligned organizations. This is all to the detriment of EA evolving into new areas and x-risks! To me, the best EA people are the ones who know EA philosophy but engage with it only once in a while to check in and see what's going on. These are the folks who will act as bridges that ultimately make EA philosophy much better. We don't post as much or come to all the events, but we come when we feel the need to!

In my own head, the way I deal with this is to create a distinction between "Parfitianism" and "EA". "Parfitianism" is done, and it is a good starting point for us to learn from. But to build "EA", we need to engage mostly outside of EA philosophy, as you rightly say, and start building something better. In fact, I think Parfit himself believed in something like this when he concluded Reasons and Persons with a section titled "How both human history, and the history of ethics, may be just beginning".

I probably won't read another post on the forum for a while. Of the stuff currently on the forum, this was the one worth reading. Good post!

Really liked this one: brief and to the point! Here is my attempt to condense it further, presuming I understood the author properly and also understood the ITN framework properly (correct me if I'm wrong about either!):

Say I subscribed to the ITN (Importance, Tractability, Neglectedness) framework before I started my work on cause area X and wrote down my scores for I, T and N. When I look at an example of someone failing and giving up (like the one OP mentions in the post), my first instinct would be to do 2 things:

1. Increase the N score I had given earlier for X, since I now see one fewer person/entity working on X.

2. Reduce the T score I had given earlier, since there is a failure.

If I understood it correctly, this post argues that item 2 should be modified to: Modified 2. Maintain the T score I had given earlier, since the devil is in the details, and the details of my solution are different from those of the failed solution I am looking at.

So, I still update my neglectedness (a small update) but maintain my tractability score (no update). Overall, not an "over-update".
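The difference between the two updates can be made concrete with a little arithmetic. This sketch assumes the common multiplicative form of ITN (priority proportional to I × T × N) and uses made-up scores on a 1 to 10 scale; neither the scale nor the numbers come from the post.

```python
def itn_priority(importance: float, tractability: float, neglectedness: float) -> float:
    """Multiplicative ITN score; higher means a more promising cause (assumed form)."""
    return importance * tractability * neglectedness


# Hypothetical scores written down before seeing someone fail and give up on cause X.
before = itn_priority(importance=8, tractability=5, neglectedness=4)

# First instinct: N goes up a little (one fewer actor), T goes down (a visible failure).
first_instinct = itn_priority(importance=8, tractability=3, neglectedness=4.5)

# Post's suggestion (as summarized above): same small bump to N, T left unchanged.
modified = itn_priority(importance=8, tractability=5, neglectedness=4.5)

print(before, first_instinct, modified)
```

With these illustrative numbers, the first instinct lowers the cause's overall score, while the modified update nudges it up slightly; the gap between the two is the "over-update".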

If someone said, "I am not going to wear masks because I am not going to defer to expert opinions on the epidemiology of COVID-19," how would someone taking the advice of this article respond?

Overall, being a noob, I found the language in this article difficult to read. So I am giving you a specific scenario that many people can relate to and trying to learn what you are saying from it.

I am pretty sure this is not worthy of a full cross-post, but I think a shortform could be tolerable.

I wrote a piece about Economic Complexity. I have seen a few posts on the forum (like 1 and 2) about Complexity Science, and I appreciate the healthy skepticism people have of it. If you also appreciate that skepticism, you might like my piece.

I attended the EAGx Berkeley event on Dec 2, 2022. Previously, I had engaged with EA by participating in, and later facilitating, Intro to EA Virtual programs, writing on this forum, and attending the US Policy careers speaker series. All of these previous engagements were virtual. This was the first time I was in a room full of EAs! I want to thank the organizers for giving me a travel grant for this event; it would have been impossible for me to attend without it.

It was a net positive experience for me. I had 4 goals in mind: 3 of them went much better than expected and 1 didn't go as well. Below, I explain each goal and how it went, in order of priority:

- Find an internship (especially in DC)

    - When I applied to the event, this goal was "Find an internship (anywhere)" and it was my 2nd priority. But I was getting closer to the final rounds of a fellowship program that was conditional on getting a DC internship, so I raised its priority before the conference.

    - This goal didn't go as well as I thought it would. Many orgs I spoke to didn't have applications open. They just asked me to fill out some sort of 'general interest form' on their website and told me when positions might open. I guess December is not really hiring season (at least for the orgs I was interested in).

- Get advice on whether I should stop at my Master's or go all the way to a PhD

    - When I applied to the event, this goal was originally "What after Masters" and it was my 3rd priority. But a week before the conference, one of my professors encouraged me to consider a PhD, so it got higher priority.

    - I got some of the best advice on this. The 1-on-1s and office hours really helped me. Also, on day 1 of the conference, Alex Lawsen spoke about "An easy win for hard decisions", so I ended up writing a Google Doc on this decision and sending it to many of the people I did 1-on-1s with. In addition, I attended a session on 'Theory of Change' by Michael Aird, and it is going to play a huge role in how I finally make this decision.

- Get advice on starting a student group

    - This was actually priority 1 when I applied. But my cofounder was also selected for the event, and since he could talk about it with folks too, it made sense to push this down, given that other goals had to be prioritized as well.

    - I got good suggestions for this. My cofounder spoke with Jessica McCurdy. I spoke with another student group organizer who got me into the 'EA Groups' Slack channel.

- Learn whether there is any intersection between AI safety and policy

    - I made up this goal on day 2 of the conference, given the number of AI safety events that happened. That is why it is the lowest priority.

    - I learnt that the jargon here is "AI Governance". I saw that many AI safety orgs didn't really hire folks to do this (with notable exceptions). I hope to spend a few hours this winter break looking into it, since a few people told me that it is a fairly neglected area. If I find it really interesting, I might end up writing something up on the forum about it.

Other thoughts:

- Given that the FTX scandal happened recently, I was kind of worried the event would be all about that. I had read enough about it on social media and on the forum, felt bad for the people who got cheated, recognized what I could learn from it, and by the time the conference rolled around I was over it. I didn't want it stirred up all over again, so I was glad not to be forced into any conversations about it. There were some events on the agenda on that topic, but I was able to avoid them. So discussions on this very important disaster did happen, but they were out of the way for those of us who had already worked through it, which I really appreciated.

- I was generally encouraged and felt optimistic seeing a bunch of people who care (or are at least trying to care) about the world. Some of them were eccentric and some were too gloomy (IMO) about x-risks, but all were kind people.

- I personally felt that I had generally been too risk-averse. Seeing people dare to try out-of-the-ordinary stuff has helped me update a little more towards risk-taking (while, of course, making sure to internalize risks to the max).

I ended up doing some of my own empirics here. See my comment below in response to David.

I am beginning to see what Linch is saying. The actual hard question here is: what is the appropriate counterfactual to EA? EA is more insular compared to what, exactly? Bob says to compare the state of Econ against the state of EA, but is that fair? I wrote down some more initial thoughts in my GitHub repo, along with some R/Python code.

I can't believe my first instinct wasn't to think about the comparison group. But I got there, and better late than never!