For most of my time as an effective altruist, I didn’t know any of the famous EAs. I was just a guy with a blog. But over time, I’ve gotten to know more high-up effective altruists, especially since I’ve started working for Forethought. And as I’ve done this, I’ve grown increasingly impressed with their decision-making. The community leaders who shape beliefs are hugely impressive.

I used to wonder: to what extent are the crucial EA ideas carefully vetted? Did adequate research go into deciding that engineered pandemics were a bigger existential risk than nuclear weapons? Did people think enough before deciding that AI was a major existential risk? To what extent were the ideas taken up for mimetic reasons, rather than because of their accuracy?

But I’ve grown a lot more confident in the decision-making process. A huge amount of analysis and discussion goes into people’s views. More than a decade of full-time work went into writing What We Owe The Future. All the arguments were carefully vetted. Many smart people discussed these ideas before any were made public. If Toby Ord or Will MacAskill says something in a talk, I am now very confident that a lot of thought went into it. You should treat it as an expert’s takeaway after careful consideration, rather than as a random throwaway take.

The big-name effective altruist public intellectuals that I’ve met are both very clever and very meticulous. If you ever have the good fortune to speak with either Will MacAskill or Toby Ord, it will very quickly become clear that they are quite brilliant. I’ve had a number of conversations where I mention to Will some philosophy argument I’ve been thinking about for days, only for him to raise a number of very strong objections that I’d never thought of.

Toby is similarly brilliant; he has publications in math, computer science, philosophy, and then is somehow also the person responsible for all the best arguments against an imminent intelligence explosion—even though he thinks one is reasonably likely. He’s just OP.

It isn’t just that they’re smart; they are also extremely meticulous. I am not this careful by default. So it’s nice to know that the community that I am part of is, in general, a lot more careful than I am. I wouldn’t trust myself to unilaterally form beliefs about which existential threats are bigger than others. It is a good thing that I do not have to. Every time I have met one of the bigwig EA philosophers, I’ve been impressed. And note: often when I’ve met people I respected, I haven’t been impressed, and in some cases, my opinion of them has even gone down.

One thing that I especially appreciate is how collaborative this process tends to be. The judgments are formed by groups of people discussing and analyzing. Crowds tend to be wiser than individuals. Crowds of careful and brilliant people tend to do well.

That has been my perception on the inside. But what about from the outside? Effective altruism has just clearly been right on lots of important things. If you judge EA by its fruits, it does quite well. Many of the examples I’m going to give were also given by Will in his recent talk at EAG.

I got into EA just a bit before the AI craze hit. I read The Precipice, and thought AI would be a somewhat big deal. But most people weren’t on board. I remember trying to talk my dad and high-school classmates (different people, to be clear) into thinking that AI alignment was going to be important. Most people were skeptical that AI would be big.

Of course, five years later, it’s obvious to pretty much everyone that AI is a big deal. It has already been hugely transformative, and its impact will only grow. The early effective altruists ventured out and made a bold prediction about a transformative technology. And we were vindicated. The early skeptics who spent years snarking about EAs believing in a magic machine God were simply wrong, and we were right.

Another thing effective altruists were warning about back in the day was the possibility of a big pandemic. Pandemics have killed a lot of people historically. Still, people were skeptical. Effective altruists were some of the only people thinking seriously about pandemic preparedness.

Then a global pandemic hit, killed millions of people, and torched the global economy. Again, EAs were simply correct. The thing we’d been warning about happened. In fact, the two plausible causes—lab leak and animal farming—were two major threats EAs had warned about for the entire existence of the movement.[1]

EAs were also warning, for a long time, about the importance of health aid sent overseas. In contrast, non-EA leftists were more likely to call these institutions colonialist and call EAs racist for neglecting domestic political issues. But when Trump got elected, we were vindicated in the worst of ways: he destroyed much of USAID, and this was the single act in his presidency that led to the most deaths.

And note: many early EA predictions weren’t easy things to get right. The dominant view at the time, in left-wing spaces, was that the big existential risks were global warming and biodiversity loss. By default, that was what you’d care about if you wanted to reduce existential risks. Effective altruists were some of the only people who had the foresight to see that other existential risks were important. We swam against the current of the dominant social institutions, and were vindicated.

And effective altruists, unlike other movements, routinely reject false but ideologically convenient ideas. Almost no EAs believe in the AI water use scare. By default, you would expect EAs, who already worry a great deal about AI, to be disproportionately worried about AI water use, just as those who think taxes are immoral are also especially likely to think they’re economically harmful. But EAs aren’t; instead, after carefully considering the evidence, the community has reached a near-consensus that AI water use is totally fake. Can you think of many other movements that reject widely believed ideologically convenient arguments simply because they aren’t true?

This concern for truth is rare among movements in general. The environmentalists, for example, managed to shut down nuclear power over the protestations of the relevant experts. In general, movements are not evidence-based. Effective altruists are one of the only evidence-based movements—where your best bet for predicting its behavior isn’t guessing what’s socially fashionable but instead what is true.

These were mostly straightforward predictions. Giving other examples of non-obvious things effective altruists have gotten right will inevitably be controversial. You might not agree with some examples. But I think there are a number of other cases where EAs have gotten super non-obvious things right, on important topics, where most people are wrong.

One of these has been recognizing that Longtermism is correct. Longtermism is the idea that making the far future go better is a top global priority. Longtermism is a controversial idea. Most people don’t think much about making the long-run future go well. And yet the arguments for it are really good. This is a nice test case because it’s:

- Narrow.

- Unpopular.

- Supported by powerful arguments.

And yet EAs largely converged on Longtermism. A controversial philosophical idea was widely adopted by EAs, simply because it was supported by strong arguments. Many people changed their careers based on these considerations. They started doing work that felt less meaningful to them because they were convinced it was more important.

A similar story holds for the idea that wild animal suffering matters. It’s super unintuitive to most people. And yet the arguments for it are really good. EAs are some of the only people to realize that nearly all the world’s expected suffering is experienced by wild animals and that this is a big deal. Similarly, EAs disproportionately recognize the evils of factory farming. We’ve helped free billions of animals from cages.

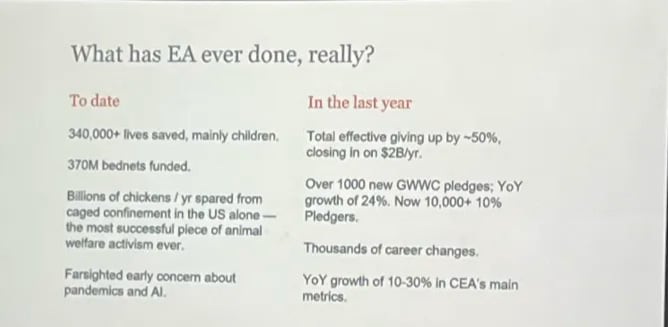

EAs are both unusually wise and unusually morally reflective. Lots of thought goes into the important EA decision-making. And more than 10,000 EAs give at least 10% of their income to effective charities. Will, in his talk, estimates that this has saved over 300,000 lives. That’s amazing! You would think that people tallying up EA’s record would mention that, at least occasionally, when complaining about EA being a smokescreen for neglecting the welfare of third-worlders in favor of caring about the welfare of future people. Similarly, you would think that the animal activists who say that effective altruism is bad for animals would see fit to mention that billions fewer chickens remain ensnared by the sharp metal of a cage because of effective altruists.

Crucially, I think EAs have often been right even when the truth was inconvenient. Most people don’t think there are strong duties to give to charities, even though there are good arguments for the existence of such obligations. Yet EAs have become convinced that giving is hugely morally important. Recognizing your moral obligations to give away money is sufficiently inconvenient that most people don’t do it. But EAs largely do.

I assume people will object that EAs got SBF wrong. They thought he was a nice philanthropist, and were wrong. But most people thought SBF was a nice philanthropist. It was only in hindsight that we learned he was scamming people. Even the markets did not anticipate it. And EAs, to their credit, strongly and immediately criticized him.

All-in-all, I am quite happy about the belief-forming process of the EA community. It’s not infallible, certainly. Many random beliefs end up being associated with EAs for largely sociological reasons. And an unfortunate number of implausible beliefs are imported from Rationalism and believed with extreme confidence by many EAs. But overall, I think we’re doing pretty well. The next time you feel tempted to mock one of the weird EA ideas, watch yourself. Because we might soon be vindicated.

More broadly, I think effective altruists are often way too pusillanimous. We should be more willing to say: effective altruism is very good both epistemically and morally. Being an effective altruist is neither cringe nor embarrassing. Despite being relatively small, the movement has had an unprecedented moral impact. Hundreds of thousands of extra people would be dead if it had never existed. If you give away some of your income to good charities or take a high-impact career, you should be proud. The criticisms of effective altruism are by and large lame.

It’s 2026—we no longer have to pretend that Emile Torres and Timnit Gebru are raising good objections and no longer have to self-flagellate for five hours about the sins of Sam Bankman-Fried every time we tell someone about effective charities. Effective altruism is just an overwhelmingly good thing and we should stop pretending that it isn’t.

[1] I’m not super counting this as a correct prediction, though, because opposition to animal farming is mostly on moral grounds, not pandemic-related.