I thought this article made some important points, so I received Andrew Critch's permission to repost it on the Forum from his blog. You can find the original post at http://acritch.com/entitlement/.

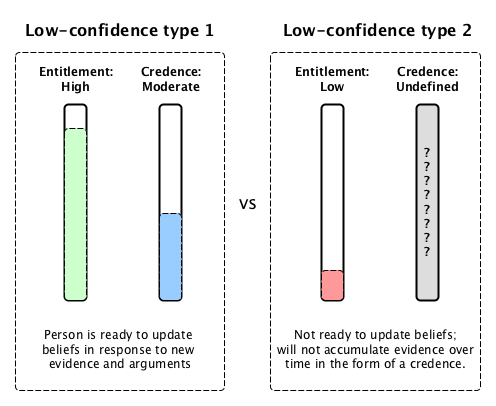

Sometimes the world needs you to think new thoughts. It’s good to be humble, but having low subjective credence in a conclusion is just one way people implement humility; another way is to feel unentitled to form your own belief in the first place, except by copying an “expert authority”. This is especially bad when there essentially are no experts yet — e.g. regarding the nascent sciences of existential risks — and the world really needs people to just start figuring stuff out.

Over the years, I’ve had a vague impression that many educated people somehow shy away from forming their own impressions of things, other than by copying the conclusions of an “expert authority” or an affect like “optimism” or “pessimism”. And they often think it would be foolish to even try anything else.

Note: If you’re unfamiliar with subjective credences as probabilities, This Tutorial should help. If you’re uncomfortable assigning subjective credences to things, try The Credence Calibration Game.

Obstinance: not what I’m aiming for

Before I go on complaining, remember the Law of Equal and Opposite Advice. There was a dark time when people felt it was reasonable to argue about when Michael Jackson was born… not to figure out the answer, but to win a debate about it. Some people reading this might be too young to remember, but there was a time when folks would get into heated debates about the population of Canada. Party lines would be drawn, sometimes never to be crossed again. People were obstinate.

Alice: “I still think it’s 20 million.”

Bob: “Whatever, I know it’s 24 million. I heard it on the radio the other day. Charlie knows I’m right.”

Alice: “Come on Charlie, you heard the radio. It was 20, right?”

Charlie: “No, no, it was 24.”

Alice: “Screw you guys.”

This is obviously suboptimal. They should be pooling their evidence, not fighting over whose is better. This is an instance of “Everyone is entitled to their own opinion” gone awry, and it’s not what this blog post is about. This post is a correction to a correction.

An example: personal health

One’s personal health is another topic where, like existential risk, there are no expert authorities. There are doctors, who have reasonable priors based on averages over people with symptoms similar to yours, but those priors are not necessarily well-specialized to you.

I’ll use an example to illustrate what I mean here. It is not sufficient evidence to convince you of a trend, but hopefully it is enough to point at what I’m talking about: the reaction I get when I describe using a binary search to identify which dietary supplement was most likely to have good short-term effects on me. I found that a mixture of B vitamins had the strongest noticeable positive effect on me among a bunch of other supplements, and I found this before knowing what any of the supplements in particular were supposed to do.

Folks often respond to my description of the experiment with “Couldn’t that just be a placebo effect?”

This puzzled me. The placebo effect wouldn’t favor me liking any particular supplement over any other, given that I hadn’t known what to expect from them in advance.

Then they’d say “That’s just anecdotal evidence”, as though somehow probability/statistics couldn’t enter into the reasoning process. This also puzzled me, until I realized that a lot of people only think of probabilities as measuring frequencies, and aren’t aware that they’re also a very robust way of tracking and combining uncertainties that follow nice coherence laws.

Then they’d say “Couldn’t it just be confirmation bias?”, which again, wasn’t a strong hypothesis given my lack of expectations…

But as the conversations continued, I started to realize that what they were actually saying is that science is hard and you can’t do it on your own.

There is some truth to this. There’s a lot to consider when designing an experiment, and it helps a lot to have some other eyes on your design. But I’m worried that this attitude is going a little too far. Maybe stories about “biases” and “placebo effects” and the like are causing smart people who are actually capable of statistical inference to… just give up. Give up and let the scientific establishment publish articles about average effects across populations. Don’t try to figure out stuff on your own, unless you’re part of a lab wherein the establishment has given you the role of figuring out something in particular… then, you’re golden.

What’s going on here

This is, of course, an exaggerated description of a problem, but nonetheless, I think a problem exists here to some real degree. At least a good 20 or 30 people I could list view “biases” and “placebo effects” like magical sources of wrongness that can’t be modeled, accounted for, or guarded against, except somehow by some authoritative group called “experts”. Why is that?

Again, I’m not hinting that we should give up on teamwork. I’m saying that you can use probability, too. If you can do math and hold facts about the real world in mind at the same time, it’s not that hard.

So why not try?

It seems to me that what it actually takes to do statistical inference on your own, for people who already have the mathematical and empirical fluency, is cajones. Or, to use a less etymologically sexist term, a sense of entitlement: Entitlement to figure stuff out for yourself; not to be a nay-sayer who says “There’s no evidence” for this or that, but to stick your neck out sometimes and say “I’ve seen enough evidence now for me to believe X… bring on your criticisms and counter-evidence!”

Qiaochu Yuan makes the observation, “I think people fail to realize that when you’re self-experimenting, you don’t necessarily want to science hard enough to establish conclusions approximately true for everyone: you’re already happy to establish conclusions that are approximately true for you. And you just don’t have to science as hard for that.” I theorize that, moreover, that failed realization comes from failing to see science as a probabilistic inference procedure — like the kind you’d use to guess the color of the next jellybean from a bag — and instead viewing science as a Thing That Someone Out There Is Doing Properly. Compare “The last 8 [jelly beans | times I tried the medication] have all been [green | successful], so the next one probably will be, too”, and contrast with “You can’t do studies on yourself ‘properly’, ergo you shouldn’t do them at all.” This same attitude hampers research into existential risks: “We can’t run a randomized controlled trial across parallel Earths yet, so there’s no data to inform safety measures yet”. That may sound like an exaggerated stance, but I recently heard Andrew Ng, a prominent machine learning expert, literally telling a crowd of around 1000 researchers,

… as though you needed to know a building was definitely going to burn down before you’d install a fire extinguisher. (Luckily, a panel moderator called him out on this.)
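The jelly bean comparison above can be made concrete. Here is a minimal sketch, assuming a uniform prior over the unknown success rate and trials that are exchangeable (Laplace's rule of succession). The post does not commit to any particular prior, so the function name and the prior are illustrative assumptions, not the author's method.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Probability that the next trial succeeds, given past results.

    Assumption (not from the post): a uniform Beta(1, 1) prior over the
    unknown success rate, with exchangeable trials.
    """
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    return Fraction(successes + 1, trials + 2)


# Eight green jelly beans (or eight successful doses) out of eight tries.
p_next = rule_of_succession(8, 8)
print(p_next)  # 9/10
```

Even with a deliberately agnostic prior, eight successes out of eight already justify a credence of about 90% for the next one. That is a statement about you and your bag of jelly beans, not about the whole population.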

World-scale relevance

What worries me most about this is not that folks might not figure out what supplements to take, but the effect on people reasoning in general about what the world needs. Too many potentially-highly-effective do-gooders, upon realizing that there are facts about whether certain world-scale interventions are effective, might fear the embarrassment of being wrong so much that they’re afraid to do their own analyses.

This is bad. Granted, what I’m saying is a higher-order correction (a boosting, if you like machine learning) to the important first order realization that there are facts of the matter about what you can do for the world, which I think is even more important. But charity evaluators like GiveWell can’t figure everything out for you. In fact, GiveWell started because not enough people were doing careful analyses of charitable causes, and that’s still a problem today. Condorcet’s Jury Theorem requires some amount of independence in the population for the group as a whole to find the truth… just like how independent measurements with different instruments are a much better way to confirm a physical phenomenon.
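The role of independence in Condorcet’s Jury Theorem can be checked numerically. This is a minimal sketch, assuming an odd number of voters who are each right with the same probability `p` and who vote fully independently. The function name and the figure of 0.6 are illustrative choices, not something taken from the theorem's original statement or from this post.

```python
from math import comb


def majority_accuracy(n: int, p: float) -> float:
    """P(majority is right) for n independent voters, each right with prob p.

    Assumptions (illustrative): n is odd, votes are independent, and every
    voter has the same individual accuracy p.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd integer")
    need = n // 2 + 1  # smallest number of correct votes that wins
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


# If everyone copies one authority, the group is only as good as that one
# voter, which is the n = 1 case.
print(f"{majority_accuracy(1, 0.6):.3f}")  # 0.600
print(f"{majority_accuracy(3, 0.6):.3f}")  # 0.648
print(f"{majority_accuracy(101, 0.6):.3f}")
```

Under these assumptions the group's accuracy climbs well above any individual's as independent voters are added. A population that copies a single source gets none of that benefit.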

So what I’m hoping to do with this post is to inspire more GiveWells. I want more individuals who go around investigating this group or that group, using quantitative and probabilistic reasoning to piece together evidence from varied sources and arguments and figure out what the world needs. The world is a big place, and there is just too much going on for one group to investigate it all. We need parallel processing here, folks. Distributed computing.

What sort of computations should you do?

Surely, we don’t want society to regress to the naive pre-Wikipedia state where so many people feel like they can win a debate about when Michael Jackson was born. Rather, we need more quantitative, literate people with a solid grasp that there are facts, but also that evidence obeys statistical rules that they can learn to apply on their own.

This is not a small ask. It’s not something I’m expecting from every high school graduate in the next 5 years. But it is something I think a lot of my friends who care about the world and have made it through good undergraduate programs in quantitative sciences can learn to do well. And if they do, the world will be safer for it.

Be the expert you wish to see in the world

Really, folks. We can’t depend on a centralized authority node to compute all the answers.

And you don’t have to be an arrogant walking bag of hubris, either. When someone comes along and wants to engage you in a disagreement, start figuring shit out together. Ask “What have you seen or heard that made you think that?” to quickly jump to the part of the conversation where you share data instead of just your conclusions. Be ready to re-compute your opinion based on things someone else has seen. Even if you think you’re a good reasoner, someone else’s retinas — or even their social instincts — can make a useful additional sensor through which to gather evidence.

Honestly, what I really want is for people to think about what sort of belief computations, distributed across individuals, would make a good machine learning algorithm for the world to follow, and to then instantiate those computations. Independent measurements and assessments are just part of that. In the best case, you’ll start thinking about things like conditional independence of measurements to avoid over-counting evidence that you and your friend both saw.
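To see what over-counting looks like, here is a rough sketch of a Bayes update in odds form. It assumes that reports from different sources are conditionally independent given the hypothesis, and that two reports tracing back to the same source carry no extra information. Both are simplifications. The source names, the likelihood ratio of 3, and the helper functions are all hypothetical.

```python
import math
from typing import Iterable, List, Tuple


def posterior(prior: float, likelihood_ratios: Iterable[float]) -> float:
    """Update a prior probability by multiplying the odds by each ratio.

    Only valid if the pieces of evidence are conditionally independent
    given the hypothesis (an assumption of this sketch).
    """
    log_odds = math.log(prior / (1 - prior))
    for lr in likelihood_ratios:
        log_odds += math.log(lr)
    odds = math.exp(log_odds)
    return odds / (1 + odds)


def one_per_source(reports: List[Tuple[str, float]]) -> List[float]:
    """Keep a single likelihood ratio per underlying source (hypothetical helper)."""
    first_seen = {}
    for source, lr in reports:
        first_seen.setdefault(source, lr)
    return list(first_seen.values())


# You and a friend both heard the same broadcast; a third report is separate.
reports = [("radio broadcast", 3.0), ("radio broadcast", 3.0), ("census table", 3.0)]

naive = posterior(0.5, [lr for _, lr in reports])  # counts the broadcast twice
careful = posterior(0.5, one_per_source(reports))  # counts it once
print(f"naive={naive:.3f} careful={careful:.3f}")  # naive=0.964 careful=0.900
```

The naive update treats your friend's report as fresh evidence and ends up overconfident. Asking “What have you seen or heard that made you think that?” is how you learn that the two reports share a source.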

That would have to be the topic of a whole other blog post. But, interestingly, given my circle of friends, some folks reading this might already know about conditional independence, and you might already be able to imagine a graph of beliefs propagating between people based on correlated sources of information. That’s good. The world needs that. The world needs you.

Compute a subjective credence already.

Feel entitled to believe.