Written by the core organising team for EAGxCambridge, this retrospective evaluates the conference, gives feedback for the CEA events team, and makes recommendations for future organisers. It’s also a general update for the community about the event, and an exercise in transparency. We welcome your feedback and comments about how we can improve EA conferences in the future.

You can watch 19 of the talks on CEA’s YouTube channel, here.[1]

Attendees’ photos are here, and professional photos are in a subfolder.

Summary

We think EAGxCambridge went well.

The main metric CEA uses to evaluate its events is ‘number of connections’. We estimate around 4200 new connections[2] resulted, at an all-told[3] cost of around £53 per connection (=$67 at time of writing), which is a better cost-per-connection than many previous conferences. The low cost-per-connection is partly driven by the fact that the event was on the large side compared to the historical average (enabling economies of scale to kick in) and ran over three days; it was also kept low by limiting travel grants.

Of these 4200 new connections, around 1700 were potentially ‘impactful’[4] as rated by attendees. (Pinch of salt: as a rule, people don’t know how impactful any given connection is.)

The likelihood-to-recommend scores were on a par with other EA conferences, which are usually very highly rated. (The average answer was 8.7 on a 1-to-10 scale.)

Besides making connections, we also wanted to encourage and inspire attendees to take action.

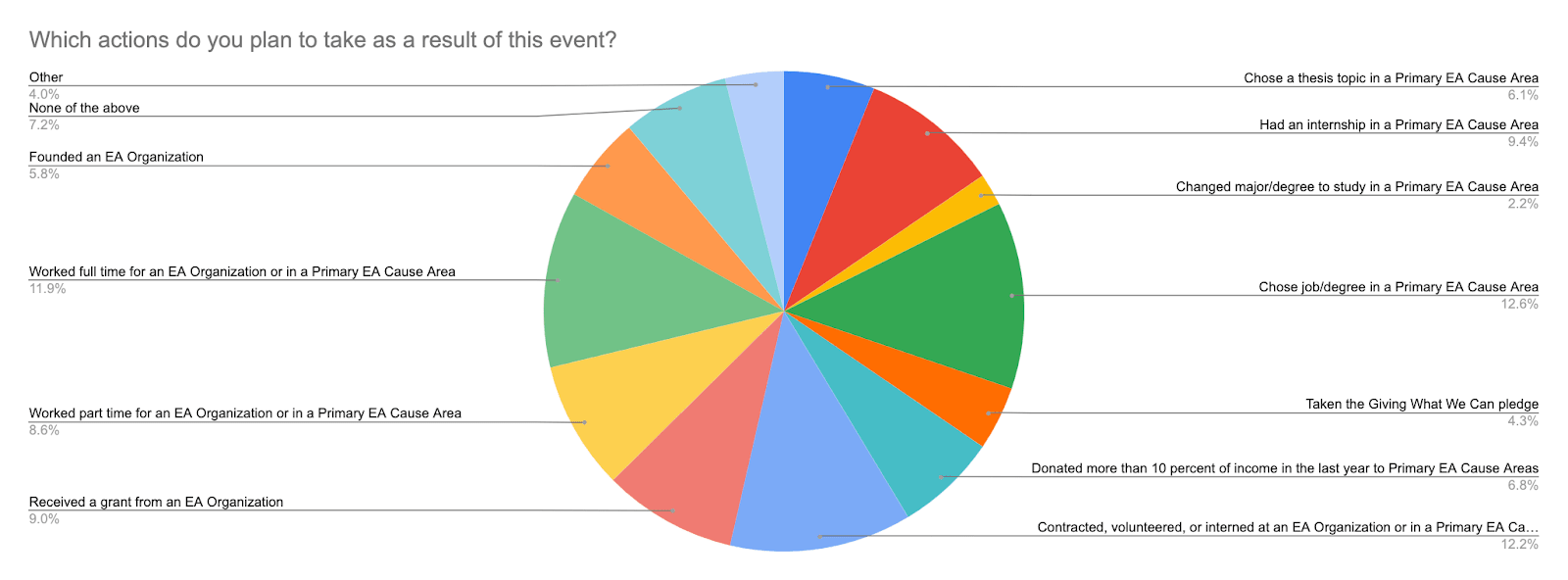

82% of survey respondents said they planned to take at least one of a list of actions (e.g. ‘change degree’) as a result of the conference, including 14.5% resolving to found an EA organisation and 30% resolving to work full-time for such an organisation or in a primary cause area. After applying a pinch of salt, those numbers suggest the conference inspired people to take significant action. We heard of several anecdotal cases where the conference triggered people to apply for particular jobs or funding, or resulted in internships or research collaborations.

We're very thankful to everyone who made this happen: volunteers, attendees, session hosts, and many others.

Contents

For more in-depth commentary, click through to the relevant part of the Google Doc using the links below.

Basic stats

Strategy

Core team

Budget

Admissions

Stewardship

Venues

- Main venue: Guildhall first floor

- Secondary venue: ground floor

- Tertiary venue: Lola Lo

- Acoustics

- Coordinating with venue staff on the day

- Overall view

Volunteers

Attendee experience

- More snacks

- Better acoustics

- Faster wifi

- Food was “incredible” / “amazing” / “extremely good” / “really excellent”

- Attendee Slack

Content

Merch

Satellite events

Communications

Feedback survey results

[1]

For the sake of the search index, those videos are:

Testing Your Suitability For AI Alignment Research | Esben Kran

Good News in Effective Altruism | Shakeel Hashim

Combating Catastrophic Biorisk With Indoor Air Safety | Jam Kraprayoon

Should Effective Altruists Focus On Air Pollution? | Tom Barnes

Alternative Proteins and How I Got There | Friederike Grosse-Holz

How Local Groups Can Have Global Impact | Cambridge Alternative Protein Project

Global Food System Failure And What We Can Do About It | Noah Wescombe

Infecting Humans For Vaccine Development | Jasmin Kaur

Existential Risk Pessimism And The Time Of Perils | David Thorstad

How To Make The Most of EAGx | Ollie Base

Spreading Life Across The Universe: Best Or Worst Idea Ever? | Anders Sandberg

Missing Data In Global Health And Why It Matters | Saloni Dattani

Alignment In Natural & Artificial Intelligence: Research Avenues | Nora Ammann

What Does It Take To Found A High-Impact Charity? | Judith Rensing

Fireside Chat | Lord Martin Rees

The Rising Cost Of AI Training And Its Implications | Ben Cottier

Fireside Chat | David Krueger

Virtues For Effective Altruists | Stefan Schubert

Anders Sandberg on Exploratory Engineering, Value Diversity, and Grand Futures

[2]

The feedback survey defined a connection as “a person you feel comfortable asking for a favour. This could be someone you met at the event for the first time or someone you’ve known for a while but didn’t feel comfortable reaching out to until now. A reasonable estimate is fine.”

[3]

By ‘all-told’ cost, I mean a figure that includes the payment of core organisers, which is often excluded from EAG(x) cost-per-connection figures. The figure that’s more comparable with other EAG(x) retrospectives (i.e. excluding the payment of core organisers) is £43.46 ($55 at time of writing). One reason not to take such figures entirely at face value is that EA conferences benefit a lot from free labour, particularly from volunteers.

[4]

The feedback survey defined impactful connections as “connections which might accelerate (or have accelerated) you on your path to impact (e.g. someone who might connect you to a job opportunity or a new collaborator on your work).”

Amazing write-up, I appreciate it a lot! Such clear and comprehensive communication. Of course, as a global health person, it's a bit heartbreaking to see the very small amount of content related to that, especially when I would imagine more than 1/16th of attendees are likely to lean that way.

But I suppose that's the way the EA wind is blowing.

Nice one.

Thanks, Nick.

I wanted to aim high with cause diversity, as it seemed vital to convey the important norm that EA is a research question rather than a pile of 'knowledge' one is supposed to imbibe. I consider us to have failed to meet our ambitions as regards cause diversity, and would advise future organisers to move on this even earlier than you think you need to. It seems to me that an EAGx (aimed more towards less experienced people) should do more to showcase cause diversity than an EA Global.

From our internal content strategy doc:

From the retrospective:

Some data on response rates (showing basically that 'meta-EA' is the easiest to book):

What explains the high rate of inviting AI people? From memory, I might explain it this way: we had someone who worked in the AI safety field helping us with content, whom I asked, about halfway through, to specialise in AI content in particular. So while my attention (as content lead and team lead) was split among causes and also among non-content tasks, his was not, and as a result more of our overall attention went to AI. Then, not long before the conference, we (over-?)compensated for a dearth of content by sending out a large number of invites based on the lists we'd compiled, which were AI-heavy by that point. In other words, under quite severe time and capacity constraints, we chose to compromise on cause diversity for the sake of having abundant content.

Thanks so much for the comprehensive and honest reply. I'm actually encouraged that the low ratio wasn't intentional but the result of other understandable content pressures.

I've already said this to you and the team, but a huge congratulations once again on a successful event from us at CEA, and thanks for writing up these thoughts and sharing feedback with us :)

Thanks for writing this up! I'd be interested if you had time to say more about what you think the main theory of change of the event was (or should have been).

What I say here should be taken as representative of how I've been thinking, rather than of how CEA or other people think about it.

These were our objectives, in order:

1: Connect the EA UK community.

2: Welcome and integrate less well-connected members of the community. Reduce the social distance within the UK EA community.

3: Inspire people to take action based on high-quality reasoning.

The main emphasis was on 1, where the theory of impact is something like:

The EA community will achieve more by working together than its members will by working as individuals; helping people build connections makes collaboration more likely. Some valuable kinds of connections might be: mentoring relationships, coworking, cofounding, research collabs, and not least friendships (for keeping up one's motivation to do good).

We added other goals beyond connecting people, since a lot of changes to plans will come from one-off interactions (or even exposure to content); for example, someone might decide to apply for funding after attending a workshop on how to do that.

Plausibly, though, longer-lasting, deeper connections dominate the calculation, because of the 'heavy tail' of deep collaborations, such as an intern hire I heard of which resulted from this conference.

I'll tag @OllieBase (CEA Events) in case he wants to give his own answer to this question.

I thought these goals were reasonable!

The guidance I give to EAGx organisers about goals is:

--

We support EAGx events primarily to connect the community. Deep collaborations, within and between community members, seem key to achieving many of the goals the community has. We think that we can form more of these collaborations by helping people meet others who might be useful collaborators.

However, there are other important goals that EAGx events are particularly well-placed to serve:

Feel free to set further goals specific to your conference (e.g. “re-animate the national EA group”).

Thanks David, that's just the kind of reply I was hoping for! Those three goals do seem to me like three of the most important. It might be worth adding that context to your write-up.

I'm curious whether there's much you did specifically to achieve your third goal (inspiring people to take action based on high-quality reasoning) beyond just running an event where people might talk to others who are doing that. I wouldn't expect so, but I'd be interested if there was.

We did encourage speakers to include action points and action-relevant information in their content, and tried to prioritise action-relevant workshops (e.g. "what it takes to found a charity"); I think that's about all. Thanks for the tip to include the goals in the write-up.