“A great deal of thinking well is about knowing when to think at what levels of abstraction. On the one hand, abstractions drop detail, and the reliability of inferences using them varies in complex ways with context. On the other hand, reasoning abstractly can be much faster and quicker, and can help us transfer understanding from better to less known cases via analogy . . . my best one factor theory to explain the worlds I’ve liked best, such as “rationalists”, is that folks there have an unusually high taste for abstraction . . . Thus my strongest advice for my fellow-traveler worlds of non-academic conversation is: vet your abstractions more. For example, this is my main criticism, which I’ve repeated often, of AI risk discussions. Don’t just accept proposed abstractions and applications.” — Robin Hanson

TL;DR

- I am compiling analogies about AI safety on this sheet.

- You can submit new ones and points for/against the existing ones with this form.

The Function of Analogies

“All non-trivial abstractions, to some degree, are leaky.” — Joel Spolsky

When you are trying to explain some novel concept A, it can be helpful to point out similarities and differences with a more familiar concept B.

Simple Comparisons

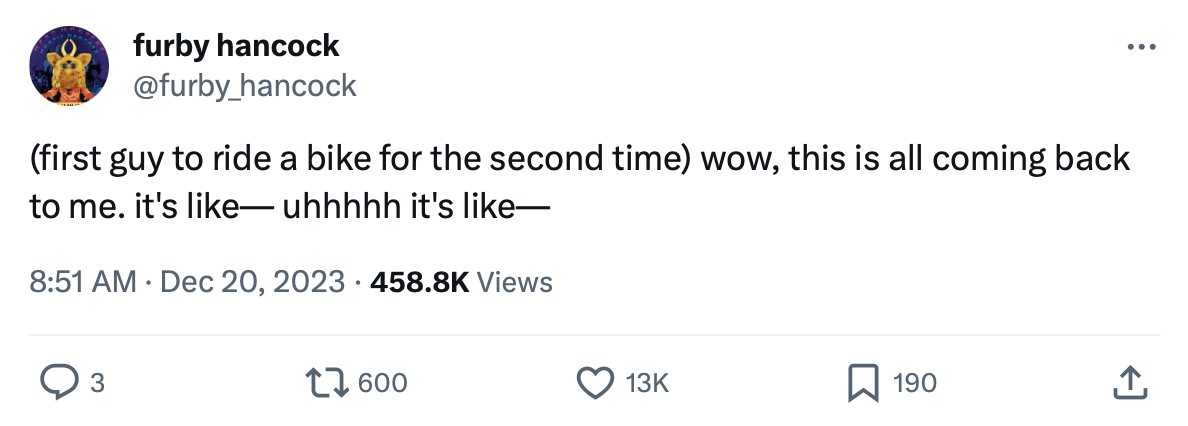

One simple type of comparison you might want to make, when claiming that target A has some property X, is to conjure analogue B, where B trivially has property X. For example, “AI is a technology that might do more harm than good, like cigarettes.” What do you really gain from deploying such an analogy, beyond directly claiming, “AI is a technology that might do more harm than good”? Often, I suspect the answer is: not much. In fact, such a comparison can be more distracting than illuminating because your audience might assume the analogy is a load-bearing warrant for your position and then focus, for example, on whether cigarettes have done more harm than good, instead of engaging with your actual claim about AI. To see how an analogy can distract from a claim, consider these satirical examples:

- He was as tall as a 6’3” tree.

- John and Mary had never met. They were like two hummingbirds, who had also never met.

Or consider this real-life example:

The use cases for this simple type of analogy mostly involve arguing for the existence of property X or better defining the boundary of the set of objects for which X holds, neither of which applies to objects that are 6’3” or creatures who have never met. For example, when arguing with a naïve techno-optimist, who might not grant for free that there even is such a property as “technology that might do more harm than good,” appealing to cigarettes could be constructive. Or if you’re trying to explain why AI is a dual-use technology to someone who has never heard the term, you might want to provide gain-of-function research as a central example of this set, so your audience has a better sense of what it includes.

For more on the ways analogies can lead your audience astray when they are mistaken for load-bearing warrants, see Matthew Barnett's post on AI analogies.

Arguments by Analogy

We arrive at a more interesting and useful type of analogy than A, B ∈ X when some unintuitive conclusion C follows from the comparison. Suppose I want to argue that AI should be subject to government regulation. I would analogize: “AI is a technology that might do more harm than good, like cigarettes. Just as cigarettes receive government regulation, so too should AI.” The structure of the argument is:

B => C

A ~ B

∴ A => C

Where ~ stands for sufficiently similar in the relevant ways. Understanding this relationship is critical for understanding the function of analogies. A and B cannot be similar in all ways; if they were, they might as well be identical, and there would be nothing to gain by making the analogy. When A is too similar to B, you get more degenerate analogies like these:

- Her eyes were like two brown circles with big black dots in the center.

- The lamp just sat there, like an inanimate object.

This is the reason behind statements you may have heard like “There is no such thing as a perfect analogy.” If A and B were identical in every respect, the analogy would be redundant.

Arguments by analogy are vulnerable to attacks in multiple places, which can make them a weaker strategy than arguing directly for A => C. Opponents might attack the first premise that B => C. In the case of cigarettes, they might dispute whether the government is justified in its regulations. Sometimes, it is easier to respond by proposing some new analogue B’ rather than getting mired in a discussion about the effect of cigarette smoking on Medicare spending.

The second premise is typically where the action is. Opponents will point out dissimilarities between A and B, to which you will respond that those are not the relevant similarities, and they will argue that A and B are not sufficiently similar on the relevant dimensions, which you will contest. For example, they might point out that Big Tobacco has a terrible history of lying to the public, whereas scaling labs, uh, do not. You would respond that “history of lying to the public” is not a necessary condition for justifying government regulation; rather, cigarettes and AI are both dangerous inventions that could do more harm than good. Conceding this, your opponent might then argue that cigarettes are addictive, but AI is, uh, not so much. You would respond that addiction might not be grounds for regulating AI, but AI development poses risks to which people cannot meaningfully consent. Addiction is just one harm of this type; others, such as negative externalities, also justify government intervention.
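One way to make "sufficiently similar in the relevant ways" concrete is to treat A and B as sets of properties and ask whether A shares the properties doing the work in B => C. What follows is a minimal sketch under that assumption, not a formal account of analogical reasoning. The helper `analogy_supports` and the property labels are hypothetical, chosen only to mirror the cigarette exchange, and in real arguments the relevant properties are often left implicit.

```python
def analogy_supports(target: set[str], analogue: set[str], relevant: set[str]) -> bool:
    """Return True if A ~ B holds on the relevant dimensions.

    `relevant` is the assumed set of properties that justify B => C.
    The conclusion carries over to A only if B really has those
    properties and A shares every one of them.
    """
    return relevant <= analogue and relevant <= target


# Illustrative property sets (assumptions for this sketch, not data).
cigarettes = {
    "might do more harm than good",
    "addictive",
    "history of lying to the public",
}
ai = {
    "might do more harm than good",
    "general-purpose",
}

# If regulation is justified by potential net harm, the analogy goes through.
harm_based = analogy_supports(ai, cigarettes, {"might do more harm than good"})

# If regulation is justified by addictiveness, the same analogy fails.
addiction_based = analogy_supports(ai, cigarettes, {"addictive"})

print(harm_based, addiction_based)
```

The same pair of objects passes or fails depending on which properties are taken to be relevant, which is why so much of the disagreement lands on the second premise.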

Because A and B are necessarily different, and the argument can be attacked in multiple places, a weak analogy is a recipe for idea inoculation. I try to reserve analogies for settings that support high-bandwidth communication, like a long conversation, so I can dispel the immediate objections that would otherwise ossify into aversion to these kinds of claims about AI risk.

As you refine your understanding of the similarities and differences between A and B and which properties imply C, you might synthesize a crisp argument for A => C that does not rely on analogy to B! And if you have such an argument, why bother with B in the first place? Of course, you might not have the crisp argument at your disposal when the conversation begins (excavating it may have been why you initiated the conversation), but even if you did, there are reasons you might want to analogize anyway:

- Analogy as evidence. For example, “Posing a risk to which people cannot meaningfully consent is sufficient to justify government regulation. This is why the government regulates cigarettes.” Be warned: when you use analogies as evidence, such as to establish a base rate, you assume the burden of covering the whole reference class rather than cherry-picking favorable examples.

- Analogy as intuition. Making salient the well-worn mental path from B to C makes it easier for your audience to follow the same path from A to C. Many literary analogies have this flavor: they make more vivid the reader’s mental picture.

- Analogy as shortcut. You can also use the analogy to save time, perhaps gesturing at something broad like “technology that might do more harm than good,” rather than precisely identifying the relevant properties X that imply C. I’m wary of this approach because I think exercising reasoning transparency will give your audience an understanding that is more robust to future counterarguments they encounter.

- Analogy as consistency check. Sometimes consistency is an independent reason to conform to precedent (e.g., for courts), in which case the analogy has weight even if B ought not imply C (e.g., if the government ought not regulate cigarettes). But this is almost never an important consideration.

- Many other reasons...

How to Use the Bank

“Now, as I write, if I shut my eyes, if I forget the plane and the flight and try to express the plain truth about what was happening to me, I find that I felt weighed down, I felt like a porter carrying a slippery load . . . There is a kind of psychological law by which the event to which one is subjected is visualized in a symbol that represents its swiftest summing up.” — Antoine de Saint-Exupéry, Wind, Sand and Stars

I think some AI safety analogies are quite good, and others are quite bad. Unlike Mr. Saint-Exupéry, I do not think the first analogy that comes to mind is reliably the Analogy of Shortest Inferential Distance. The bank allows us to crowdsource analogies, along with arguments for and against specific comparisons, to build consensus about the best ones.

You should feel free to experiment with different carrier sentences for these analogies. They are stored in the bank in the form "A is like B in that C," but you can pack a bigger punch by creatively rearranging these elements. For example, rather than, “Everyone having their own AGI is like everyone having their own nuclear weapon because both are dangerous technologies,” you can go straight for “You wouldn’t give everyone their own nuke, would you?”
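To make the storage form concrete, here is a rough sketch of how one bank entry could be represented. The `Analogy` class, its field names, and the `advantages`/`disadvantages` lists are my own hypothetical illustration; they are not the actual columns of the sheet.

```python
from dataclasses import dataclass, field


@dataclass
class Analogy:
    """One hypothetical bank entry in the form "A is like B in that C"."""

    target: str    # A: the AI safety concept being explained
    analogue: str  # B: the more familiar concept
    shared: str    # C: the respect in which A and B are alike
    advantages: list[str] = field(default_factory=list)     # points for
    disadvantages: list[str] = field(default_factory=list)  # points against

    def canonical(self) -> str:
        """Render the entry in the bank's stored sentence form."""
        return f"{self.target} is like {self.analogue} in that {self.shared}."


entry = Analogy(
    target="Everyone having their own AGI",
    analogue="everyone having their own nuclear weapon",
    shared="both are dangerous technologies",
)
print(entry.canonical())
```

The canonical sentence is only a storage format. The punchier carrier sentence is still yours to write.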

For now, the bank does not include disanalogies, but sometimes these can be very instructive as well. For example, Stuart Russell writes, “Unlike the structural engineer reasoning about rigid beams, we have very little experience with the assumptions that will eventually underlie theorems about provably beneficial AI.” For another example, I once heard a MATS scholar cast doubt on the Bioanchors report by remarking, “humans would not have gotten to flight by trying to mimic birds.”

I will also scrutinize submitted analogies that are primarily intended to express skepticism toward AI risk, not on principle but because I often find them low quality and lacking good faith. Stuart Russell gives voice to such a straw skeptic, arguing that “electronic calculators are superhuman at arithmetic. Calculators didn’t take over the world; therefore, there is no reason to worry about superhuman AI.”

Shall I Compare Thee

“More complex and subtle proposals may be more appealing to EAs, but each added bit of complexity that is necessary to get the proposal right makes it more likely to be corrupted.” — Holly Elmore

Because there are no perfect analogies, the name of the game is nuance triage. Analogies to AI will foreground some aspects of AI risk at the expense of others. Your job is to select the analogy that minimizes the value of lost information by eliding the least important pieces of nuance.

Some people who work in AI safety might object to compromising any amount of nuance. They might argue that AI is so unlike any technology humanity has seen before that making comparisons to pedestrian concepts like nuclear proliferation would be disingenuous. Even if there are real similarities, it would be unwise to anchor our expectations to these precedents. Rather, we must attend vigilantly to the particular ways AI is distinct from previous risks if we hope to stand a chance at survival. For example, an objector might continue, our inclination to anthropomorphize AI models has been disastrous for our understanding of them.

For better or worse, the pattern matching that powers analogies plays a fundamental role in learning new ideas. Cognitive scientists have gone as far as suggesting that “analogy is a candidate to be considered the core of human cognition.” Linguists George Lakoff and Mark Johnson write, “metaphor is pervasive in everyday life, not just in language but in thought and action. Our ordinary conceptual system, in terms of which we both think and act, is fundamentally metaphorical in nature.” If they are right, it would be anti-natural for us to resist this predisposition.

Moreover, the public’s attention is scarce; if we do not compromise some nuance, the discourse will be monopolized by bad analogies like “calculators didn’t take over the world.” By choosing our analogies carefully, we can raise the quality of the public conversation on AI risk.

Matthijs Maas of the Legal Priorities Project published a great report a few months ago surveying AI analogies and their implications for public policy. He identifies 55 frames, like AI as a brain, AI as a tool, and AI as a weapon, and he explicates some of the implications for policymakers of analogies that invoke these frames. He also cautions that “unreflexive drawing of analogies in a legal context can lead to ineffective or even dangerous laws, especially once inappropriate analogies become entrenched.” So let's choose appropriate analogies! You can review the bank here and submit new analogies and revisions here.

Cillian Crosson pointed out to me that explaining AI risk through many analogies without being wed to any particular one shows your audience that none of them should be treated as especially load-bearing for your arguments. I agree that this approach reduces the risks of entrenched analogies and idea inoculation.

An analogy that has become entrenched:

Acknowledgements

“Communication in abstracts is very hard . . . Therefore, it often fails. It is hard to even notice communication has failed . . . Therefore it is hard to appreciate how rarely communication in abstracts actually succeeds.” — chaosmage

Thank you to the attendees of a FAR Labs writing workshop, including Hannah Betts, Lawrence Chan, and David Gros, for early feedback on this project. Thank you to Juan Gil for the boulder meme.

I realized halfway through writing this post that I lean on cigarettes as an extended example; I don’t mean to elevate this as a particularly strong or interesting analogy.

These examples are funny because they flout the Gricean maxim of quantity by providing no information at all.

Sometimes people might turn to the simple type of analogy when stating the conclusion explicitly would invite criticism, and merely implying the conclusion provides some plausible deniability. In this spirit, Matthijs Maas writes that analogies about AI can “often serve as fertile intuition pumps.” But I think it is rarely worth sacrificing reasoning transparency to smuggle in your conclusions.

The argument does not rely on identifying the precise shared properties X that are responsible for C. For example, I may think a medical result from rats will generalize to humans without understanding the exact mechanism behind the effect. The permitted absence of X is both a strength and a weakness of arguments by analogy, as we will see later.

“While in any given year, smokers are likely to spend more on healthcare than never smokers, smokers live fewer years and overall cost taxpayers less than never smokers.” Source

The path analogy in this sentence is one such example.

Refuting this argument by analogy is left as an exercise to the reader. Hint: “Sufficiently similar in the relevant ways.”

This is great! I've publicly spoken about AI Safety a couple of times, and I've found some analogies to be tremendously useful. There's one (which I've just submitted), that I particularly like:

From this op-ed by Ezra Klein.

Thanks, Agustín! This is great.

Nice post. I also have been exploring reasoning by analogy. I like some of the ones in your sheet, like "An international AI regulatory agency" --> "The International Atomic Energy Agency".

I think this effort could be a lot more concrete. The "AGI" section could have a lot more about specific AI capabilities (math, coding, writing) and compare them to recent technological capabilities (e.g. Google Maps, Microsoft Office) or human professions (accountant, analyst).

The more concrete it is, the more inferential power. I think the super abstract ones like "AGI" --> "Harnessing fire" don't give much more than a poetic flair to the nature of AGI.

Please submit more concrete ones! I added "poetic" and "super abstract" as an advantage and disadvantage for fire.
