Transformative AI and Compute - A holistic approach - Part 1 out of 4

This is part one of the series Transformative AI and Compute - A holistic approach. You can find the sequence here and the summary here.

This work was conducted as part of Stanford’s Existential Risks Initiative (SERI) at the Center for International Security and Cooperation, Stanford University. Mentored by Ashwin Acharya (Center for Security and Emerging Technology (CSET)) and Michael Andregg (Fathom Radiant).

This post attempts to:

- Introduce a simplified model of computing which serves as a foundational concept (Section 1).

- Discuss the role of compute for AI systems (Section 2).

  - In Section 2.3 you can find the updated compute plot you have been coming for.

- Explore the connection of compute trends and more capable AI systems over time (Section 3).

Epistemic Status

This article is Exploratory to My Best Guess. I've spent roughly 300 hours researching this piece and writing it up. I am not claiming completeness for any enumerations. Most lists are the result of things I learned on the way and then tried to categorize.

I have a background in Electrical Engineering with an emphasis on Computer Engineering and have done research in the field of ML optimizations for resource-constrained devices — working on the intersection of ML deployments and hardware optimization. I am more confident in my view on hardware engineering than in the macro interpretation of those trends for AI progress and timelines.

This piece was a research trial to test my prioritization, interest and fit for this topic. Instead of focusing on a single narrow question, this paper and research trial turned out to be broader, hence "a holistic approach". In the future, I’m planning to focus on narrower, relevant research questions within this domain. Please reach out.

Views and mistakes are solely my own.

1. Compute

Highlights

- Computation is the manipulation of information.

- There are various types of computation. This piece is concerned with today’s predominant type: digital computers.

- With compute, we usually refer to a quantity of operations used — computed by our computer/processor. It is also used to refer to computational power, a rate of compute operations per time period.

- A computer can be divided into memory, interconnect, and logic. Each of these components affects the performance of the others.

- For an introduction to integrated circuits/chips, I recommend “AI Chips: What They Are and Why They Matter” (Khan 2020).

This section discusses the term compute and introduces one conceptual model of a processor — a helpful analogy for analyzing trends in compute.

Compute is the manipulation of information or any type of calculation — involving arithmetical and non-arithmetical steps. It can be seen as happening within a closed system: a computer. Examples of such physical systems include digital computers, analog computers, mechanical computers, quantum computers, or wetware computers (your brain).[1]

For this report, I am focusing on today’s predominant type of computing: digital computing. This is for two reasons: First, it is the predominant type of computing of human engineered systems. Second, my expertise lies within the digital computing domain.

That does not mean that other computing paradigms, such as quantum computing[2], are not of relevance. They require a different analysis and are out of scope for this piece. I believe these paradigms are unlikely to be important to AI progress in the next 10 years, as they are far less mature than digital computing. Beyond that point, however, they might become cost-competitive with digital computing, and I am uncertain as to whether they could speed up AI progress by a significant amount.

1.1 Logic, Memory and Interconnect

For this piece, we can refer to compute as either arithmetical or non-arithmetical operations. An example of an arithmetic operation is adding two numbers (a ← b + c) or multiplying two numbers (a ← b × c), whereas non-arithmetic operations are, for example, comparisons: “Is a greater than b (a > b)?” Based on the result of such a comparison, we then continue with a selection of arithmetic operations. Such a selection of operations is called a program or application.

Therefore, when referring to compute used, we simply count how many of those basic operations the computer has done. Compute used is a quantity of operations. A floating point operation (FLOP) is such an example of an operation on floating point numbers. Those metrics and their nuances are discussed in Part 4 - Appendix B.

For an application, such as calculating the quickest route from A to B, we require a clearly defined number of operations, e.g., 10,000,000 of such operations. This number does not give any insights into how long it takes to compute this. Think of it as a distance; how long it will take depends on your vehicle and many other external factors.
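To make the distance analogy concrete, here is a minimal sketch: the same fixed quantity of operations takes a different amount of time depending on the rate of the machine executing it. The two processor rates are hypothetical values chosen for illustration, not measurements, and the model assumes the logic is never stalled.

```python
# Compute used is a quantity (operations); computational power is a rate
# (operations per second). Runtime follows from dividing one by the other.


def runtime_seconds(operations: float, ops_per_second: float) -> float:
    """Idealized runtime, assuming the logic never waits on memory or interconnect."""
    return operations / ops_per_second


ROUTE_OPERATIONS = 10_000_000  # the example quantity from the text

# Hypothetical processor rates, for illustration only.
slow_rate = 1e6  # 1 million operations per second
fast_rate = 1e9  # 1 billion operations per second

slow_time = runtime_seconds(ROUTE_OPERATIONS, slow_rate)
fast_time = runtime_seconds(ROUTE_OPERATIONS, fast_rate)

print(f"slow processor: {slow_time:.2f} s")
print(f"fast processor: {fast_time:.2f} s")
```

The quantity of operations stays the same in both cases; only the rate, and therefore the runtime, changes.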

Now that we have defined that compute consists of a number of operations, we need something which executes those operations: a computer.

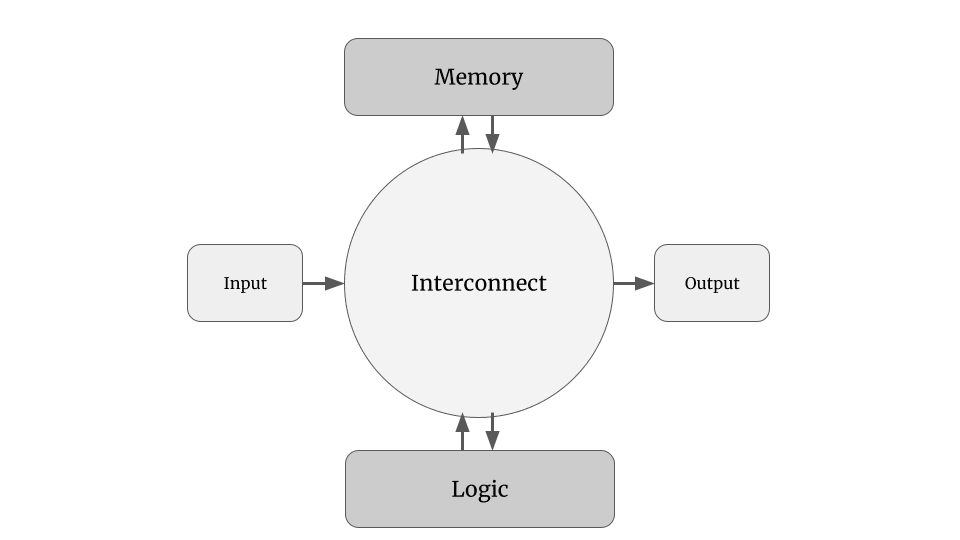

The three essential elements of a computer are: Logic, Memory, Interconnect.

- The logic component is where we conduct the arithmetical and non-arithmetical operations.

- The memory supplies the logic, via the interconnect, with the information on which the logic operates. It is also the place where we store our results.

- The interconnect supplies the logic and memory with the information that needs to be processed or just got processed.

Whereas many people often only think about the logic unit as part of a computer, the memory and interconnect are as important. We need to transport the manipulated information and store it.

Consequently, all of those three components are influencing one another:

- If our memory capacity is limited, we cannot save all of the results.

- If the interconnect does not supply the logic fast enough with more information, the logic is waiting and stalled.

This is a basic model which applies to a wide variety of computers. Your brain can also be conceptualized this way. There are places where you store information (memory); you can recall this information, if lucky (interconnect), then manipulate it (logic), and potentially save it again via the interconnect. Depending on your task, you might be bottlenecked by any one of those components. For example, you might not be able to process as much information as is coming in: your interconnect is faster than your logic, and you are overutilized (>100%).

In contrast, modern computers are often underutilized (<100%): The logic can theoretically process more information per second than the interconnect supplies.
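The over- and underutilization described above can be sketched as a simple ratio: how many operations' worth of information the interconnect delivers per second, divided by how many operations the logic could perform per second. This is a strongly simplified model; the function name `logic_load` and all numbers are hypothetical and only serve to illustrate the two cases.

```python
def logic_load(peak_ops_per_second: float,
               interconnect_bytes_per_second: float,
               bytes_per_operation: float) -> float:
    """Ratio of work supplied by the interconnect to work the logic can do.

    > 1.0: more information arrives than the logic can process (overutilized).
    < 1.0: the logic waits for the interconnect (underutilized).
    """
    supplied_ops_per_second = interconnect_bytes_per_second / bytes_per_operation
    return supplied_ops_per_second / peak_ops_per_second


# Hypothetical "modern computer": fast logic, comparatively slow interconnect.
computer_load = logic_load(
    peak_ops_per_second=1e12,
    interconnect_bytes_per_second=1e11,
    bytes_per_operation=4,
)

# Hypothetical overloaded system: information arrives faster than it is processed.
overloaded_load = logic_load(
    peak_ops_per_second=1e9,
    interconnect_bytes_per_second=8e9,
    bytes_per_operation=4,
)

print(f"computer load:   {computer_load:.1%}")
print(f"overloaded load: {overloaded_load:.1%}")
```

In the first case, making the logic faster would not help, because the interconnect is the bottleneck; in the second, it would.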

Consequently, solely improving one component of our model does not lead to better performance. In general, during the last decades, we have seen innovations that fall into either one of those three buckets to increase performance:

- Increasing the processing speed of the logic: more operations per second [OP per second].

- Increasing the memory capacity: we can recall and save more information that needs to be or has been processed [Unit of information].

- Increasing the interconnect speed: the amount of information we can supply to our logic or memory per second [Unit of information per second].

Examples of such innovations are pipelining, multicore, heterogeneous architectures, or specialized processors. In the future, I plan to publish a more extended introduction to compute — understanding those innovations helps us to understand caveats of performance metrics and forecast their trends.

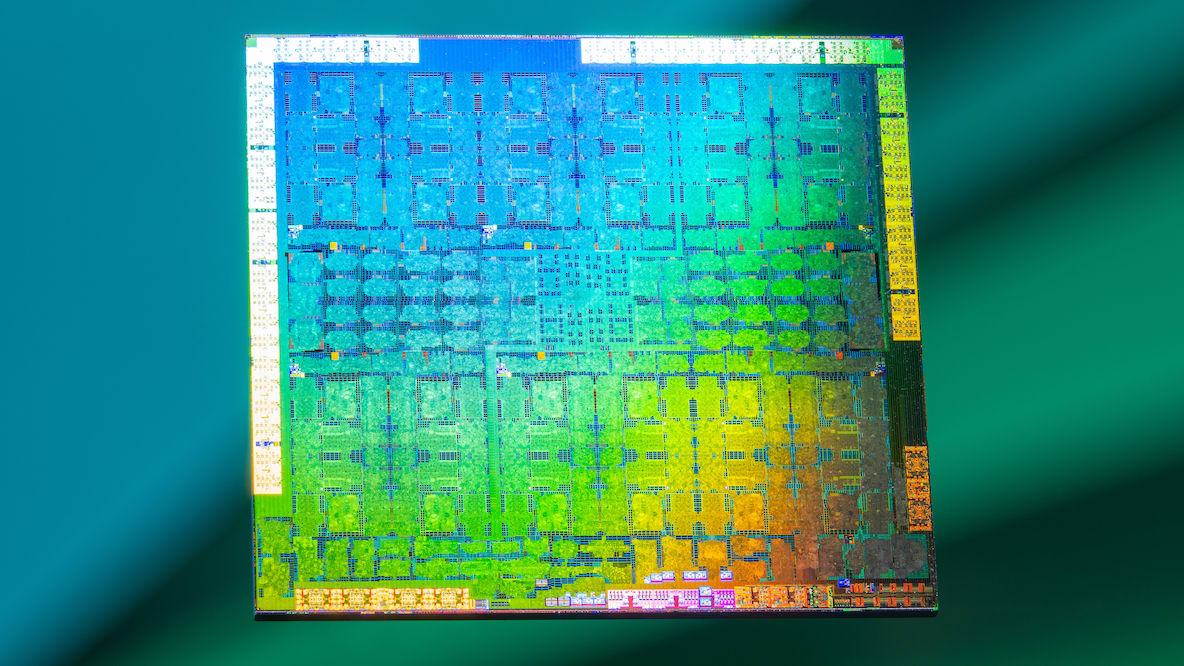

1.2 Chips or Integrated Circuits

Our theoretical model for a computer then gets embedded into a substrate we call a chip or an integrated circuit.

I would consider this the most complex machine we humans have ever created. I will not get into the details on how we get our theoretical computer model onto an actual working chip. For an introduction to chips, especially AI chips, I can recommend the piece: “AI Chips: What They Are and Why They Matter” (Khan 2020).

2. Compute in AI Systems

Highlights

- Compute is required for training AI systems. Training requires significantly more compute than inference (the process of using a trained model).

- Next to algorithmic innovation and available training data, available compute for the final training run is a significant driver of more capable AI systems.

- OpenAI observed in 2018 that since 2012 the amount of compute used in the largest AI training runs has been doubling every 3.4 months.

- In our updated analysis (n=57, 1957 to 2021), we observe a doubling time of 6.2 months between 2012 and mid-2021.

Computation has two obvious roles in AI systems: training and inference. This section discusses those and presents compute trends.

2.1 Computing in AI Systems

Training

Training a neural network, also referred to as learning, describes the process of determining the value of the weights and biases in the network. It is a fundamental component of ML systems, be it supervised, unsupervised or reinforcement learning.[3]

When we refer to compute used for training, we usually refer to the amount of compute used for the final training run. Common metrics are operations (OPs), floating point operations (FLOPs) or Petaflop/s-days[4]. Once we have trained an AI system, we theoretically can give a quantity of operations conducted — this is the metric we are interested in.
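As a small sketch of how these units relate, the following converts between petaflop/s-days and a plain count of floating point operations. The helper names are my own, and the example quantity of 100 petaflop/s-days is hypothetical, not an estimate for any real system.

```python
import math

PETA = 1e15                       # a petaflop/s is 10^15 floating point operations per second
SECONDS_PER_DAY = 24 * 60 * 60    # 86,400 seconds

# One petaflop/s sustained for one day, expressed as a quantity of operations.
FLOP_PER_PFS_DAY = PETA * SECONDS_PER_DAY


def pfs_days_to_flop(pfs_days: float) -> float:
    """Convert petaflop/s-days into a total number of floating point operations."""
    return pfs_days * FLOP_PER_PFS_DAY


def flop_to_pfs_days(flop: float) -> float:
    """Convert a total number of floating point operations into petaflop/s-days."""
    return flop / FLOP_PER_PFS_DAY


example_flop = pfs_days_to_flop(100)  # hypothetical training run of 100 petaflop/s-days
print(f"1 petaflop/s-day = {FLOP_PER_PFS_DAY:.3e} FLOP")
print(f"100 petaflop/s-days = {example_flop:.3e} FLOP")
```

Note that a petaflop/s-day is a quantity of operations, not a rate: the "per second" and the "day" cancel out.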

Nonetheless, during the development stage, we also require training runs for trial and error where we tweak the architecture, hyper-parameters and others. Consequently, the amount of compute for the final training run might be useful as a proxy for the capabilities of the AI system, but the total development cost can be significantly higher.

Inference

Once a network is trained, the output can be computed by running the computation, determined by the weights and network architecture. This process is referred to as inference or forward pass. A trained network can be distributed and then deployed for applications. At this point, the network is static: all computations and intermediate steps are defined. Only an input is necessary to carry out inference. An inference is done over and over. Examples are doing a single Google search, asking a voice assistant such as Siri or Alexa, and others.

Training is computationally more complex than inference. There are two primary reasons: First, the training of the weights and biases is an iterative process – usually, thousands of backward passes are required for a single input to obtain the desired result. Secondly, for the training process, at least for supervised learning, the labeled training data needs to be available to the computation system, which requires memory capacity. Therefore, combining those two points, training is a significantly more complex process regarding memory and computational resources, as one requires thousands of backward passes for a single input of the enormous training set.[5]
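The asymmetry between training and inference can be illustrated with a toy example. This is only a sketch: the one-parameter model, the three data points, the learning rate, and the number of epochs are all made up, and real systems differ in many ways. It merely counts how many passes training needs compared to a single inference.

```python
# Toy model y = w * x, trained on data generated with w = 3 (hypothetical data).
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
counts = {"forward": 0, "backward": 0}


def forward(w: float, x: float) -> float:
    """Forward pass: compute the model output for one input."""
    counts["forward"] += 1
    return w * x


def backward(w: float, x: float, y_true: float) -> float:
    """Backward pass: gradient of the squared error (w*x - y_true)**2 w.r.t. w."""
    counts["backward"] += 1
    return 2 * (forward(w, x) - y_true) * x


# Training: iterate over the whole dataset many times, updating the weight.
w = 0.0
learning_rate = 0.01
for epoch in range(200):
    for x, y_true in data:
        w -= learning_rate * backward(w, x, y_true)

training_forward = counts["forward"]
training_backward = counts["backward"]

# Inference: the weight is now fixed; one input needs one forward pass.
prediction = forward(w, 4.0)
inference_forward = counts["forward"] - training_forward

print(f"training:  {training_forward} forward, {training_backward} backward passes")
print(f"inference: {inference_forward} forward pass, prediction = {prediction:.3f}")
```

Training also has to keep the dataset available, whereas inference only needs the learned weight and one input.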

This also leads to important consequences for the field of compute governance, as once an actor acquires a trained model, the required amount of compute for running the inference is significantly less, which can lead to so-called model theft (Anderljung and Carlier 2021).

We have discussed the two distinct computations of AI systems: training and inference. For this piece, I am mostly concerned about the required compute for the final training run[6], as we have observed meaningful trends for this metric over time, and there seems to be an agreement that the amount of compute used plays a role for the capabilities of the AI systems (Section 3 discusses this connection).

For the rest of the piece, my usage of the term “compute used” is synonymous with “the amount of compute used for the final training run of an AI system”.

2.2 Compute Trends: 2012 to 2018

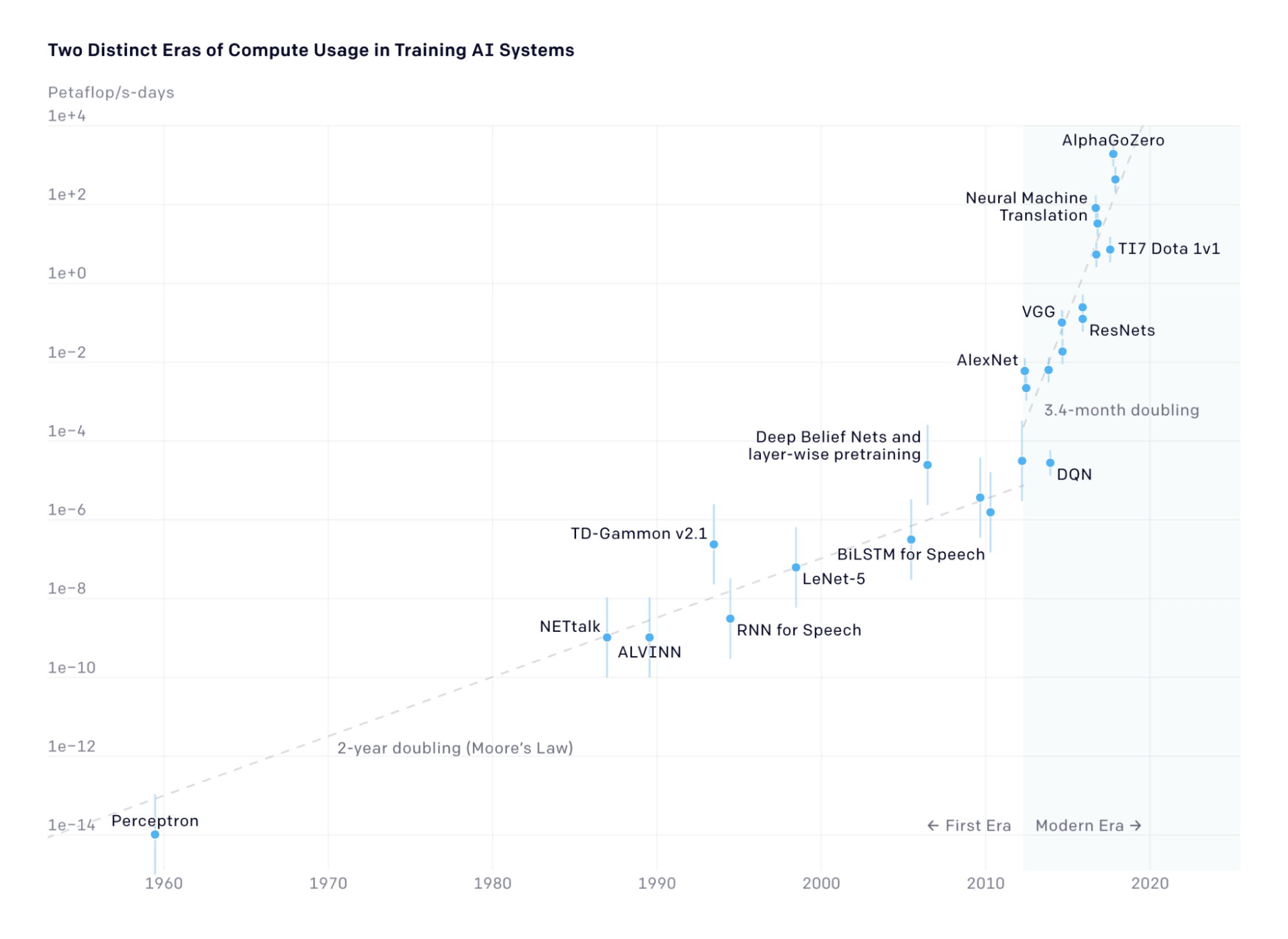

In the blogpost “AI and Compute”, Amodei and Hernandez analyze the compute used for final training runs (Amodei and Hernandez 2018). At this point, it remains the best available analysis.

We can summarize the main insights as follows:

- We can divide the history of compute used for AI systems based on the trend lines into two eras: the first and modern era.

- The modern era started with AlexNet in 2012. AlexNet was the first publication that leveraged graphics processing units (GPUs) for the training run (we discuss this development in Part 2 - Section 4.2).

- From 2012 to 2018, the amount of compute used in the largest AI training runs has been increasing exponentially, with a doubling time of 3.4 months.

- Since 2012 this metric has grown by more than 300,000x.
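As a rough consistency check on the last two points, one can ask how many doublings a 300,000x increase corresponds to, and how long that takes at a 3.4-month doubling time. This is my own back-of-the-envelope sketch, not a calculation from the blogpost.

```python
import math

growth_factor = 300_000       # total growth reported in "AI and Compute"
doubling_time_months = 3.4    # doubling time reported in "AI and Compute"

# Number of doublings needed to grow by the given factor: 2**doublings = growth_factor.
doublings = math.log2(growth_factor)

# Time that many doublings take at the reported doubling time.
months_needed = doublings * doubling_time_months
years_needed = months_needed / 12

print(f"doublings:    {doublings:.1f}")
print(f"time needed:  {months_needed:.0f} months ({years_needed:.1f} years)")
```

Roughly 18 doublings over about five years is in line with a trend that starts in 2012 and runs to the systems available when the analysis was written.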

The authors do not comment on the breakdown of this trend: is it dominated by increased spending or hardware improvements? Other people at the time noted that this trend has to be due to increased spending on compute since this growth was far faster than growth in computing capabilities per dollar (Carey 2018; Garfinkel 2018; Crox 2019). Eventually, this would lead to massive spending.

The blogpost closes with an outlook in which the authors could imagine this trend continuing, e.g., due to continued hardware improvements by hardware startups.[7] As the latest AI system in that piece was AlphaZero, published in December 2017, we tried to crowdsource more compute estimates and take an updated look.

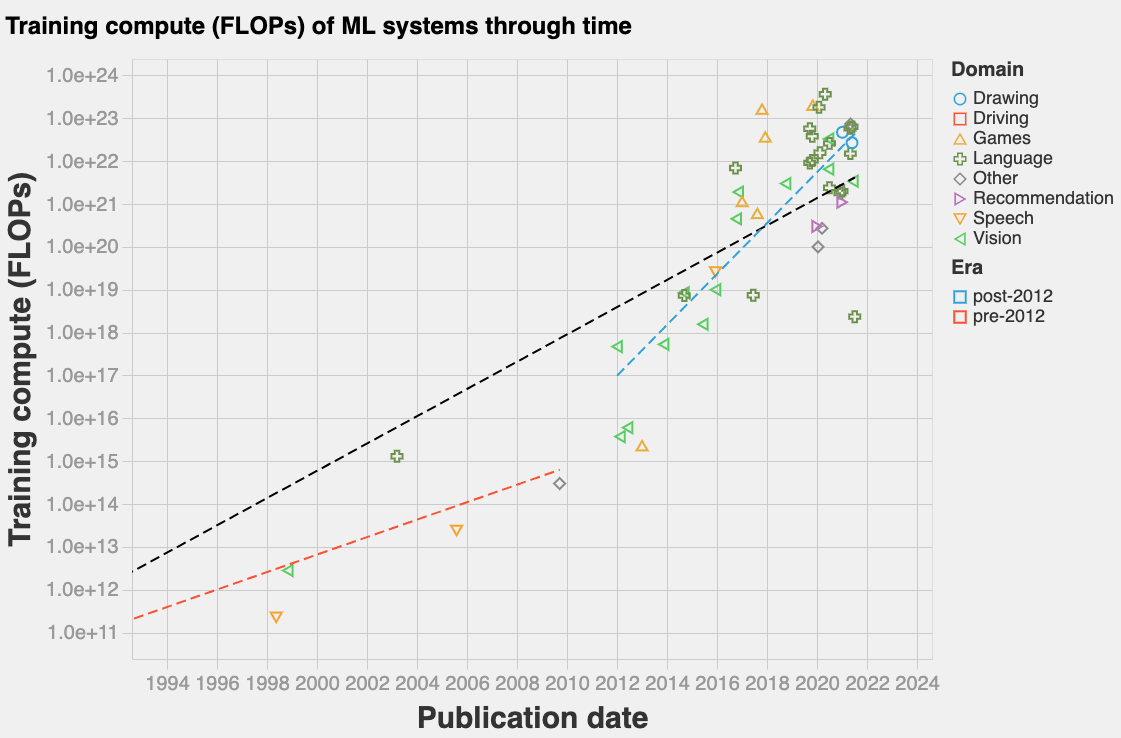

2.3 Compute Trends: An Update[8]

We are not the first to take an updated look at this trend. Lyzhov sketched this (Lyzhov 2021) and also Branwen discussed this (Branwen 2020). Also, there are several posts that commented on AI and Compute (Carey 2018; Garfinkel 2018; Crox 2019). I will reference some interpretations in Part 2 - Section 4: Forecasting Compute.

Our dataset (Sevilla et al. 2021) contains the same results as the one used in “AI and Compute” and includes a total of 57 models. We have added some recent notable systems such as GPT-3, AlphaFold, PanGu-α, and others. Note that those estimates are either our or others’ best guesses. Most publications do not disclose the amount of compute used. You can find the calculations and sources in the note of the corresponding cell. The public dataset is available and continuously updated as its own independent project. We would appreciate any suggestions and comments. An interactive version of the graph below is available here.

The key insights are:

- If we analyze the same period, as in the AI and Compute piece, from AlexNet to AlphaZero (12-2017), we observe a doubling time of 3.6 months (versus 3.4 months (Amodei and Hernandez 2018)).

- Our data includes 28 additional models which have been released since then.

- Groundbreaking AI systems, such as AlphaFold, required less compute than previous systems.

- Only three systems used more compute than the leader of the original “AI and Compute”, AlphaGo Zero. Those systems are AlphaStar, Meena, and GPT-3.

- In our dataset, we find the following doubling times:

  - 1957 to 2021 doubling time: 11.5 months

  - Pre 2012 doubling time: 18.0 months

  - Post 2012 doubling time: 6.2 months

- However, note that the results and trends should be taken with caution, as this model relies on an incomplete dataset due to an ambiguous selection process and the compute numbers are mostly estimates.
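Doubling times can be hard to compare intuitively. As an illustrative sketch, the following converts the doubling times listed above, plus the 3.4 months from “AI and Compute”, into growth factors per year. The conversion assumes a clean exponential trend, which the caveat above suggests should not be taken for granted.

```python
def annual_growth_factor(doubling_time_months: float) -> float:
    """Growth per year implied by a doubling time, assuming exponential growth."""
    return 2 ** (12 / doubling_time_months)


doubling_times_months = {
    "1957 to 2021": 11.5,
    "pre 2012": 18.0,
    "post 2012": 6.2,
    "AI and Compute (2012 to 2018)": 3.4,
}

growth = {
    period: annual_growth_factor(months)
    for period, months in doubling_times_months.items()
}

for period, factor in growth.items():
    print(f"{period:32s} ~{factor:.1f}x per year")
```

Under this assumption, the difference between a 3.4-month and a 6.2-month doubling time is the difference between roughly 11.5x and roughly 3.8x growth per year.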

For now, we do not interpret those trends. I do not want to make strong predictions that new state-of-the-art AI systems will not end up using more compute. Nonetheless, we should also not assume that the incredibly fast doubling rate seen in “AI and Compute” will occur in the future, either. For some discussion around this update, see Lyzhov’s post “‘AI and Compute’ trend isn't predictive of what is happening” (Lyzhov 2021).

We also think that the selection of the models in the dataset plays an important role. Our heuristic criterion for inclusion was along the lines of: “important publication within the field of AI OR lots of citations OR performance record on common benchmark.” Also, it is unclear on which models we should base this trend. The piece “AI and Compute” also briefly discusses this in its appendix. Given the recent trend of efficient ML models due to emerging fields such as Machine Learning on the Edge, I think it might be worthwhile discussing how to integrate and interpret such models in analyses like this; ignoring them cannot be the answer.

We have discussed the historical trends of compute for final training runs and seen a tremendous increase in compute used — but has this resulted in more capable AI systems? We explore this question in the next section.

3. Compute and AI Alignment

Highlights

- Compute is a component (an input) of AI systems that led to the increasing capabilities of modern AI systems. There are reasons to believe that progress in computing capabilities, independent of further progress in algorithmic innovation, might be sufficient to lead to a transformative AI[9].

- Compute is a fairly coherent and quantifiable feature — probably the easiest input to AI progress to make reasonable quantitative estimates of.

- Measuring the quality of the other inputs, data and algorithmic innovation, is more complex.

- Strongly simplified, compute only consists of one input axis: more or less compute — where we can expect that an increase in compute leads to more capable and potentially unsafe systems.

- According to “The Bitter Lesson”, progress arrives via approaches based on scaling computation by search and learning, and not by building knowledge into the systems. Human intuition has been outpaced by more computational resources.

- The strong scaling hypothesis states that we only need to scale a specific architecture to achieve transformative or superhuman capabilities; this architecture might already be available. Scaling an architecture implies more compute for the training run.

- Cotra's report on biological anchors forecasts the computational power/effective compute required to train systems that resemble, e.g. the human brain’s performance. Those estimates can provide compute milestones for transformative capabilities of AI systems.

We have seen an enormous upwards trend in the used compute for AI systems. It is also well known that modern AI systems are more capable at various tasks. However, the relative importance of three driving factors (algorithmic innovation, data, and compute) for this progress is unclear.

This section explores the role of compute for more capable systems and discusses hypotheses that favor compute to be a predominant factor in the past, and the future. In my opinion, there seems to be consensus amongst experts that compute has played an important role (at least as important as the other two factors) in AI progress over the last decade and will continue doing so.

My best guess is that compute is not an input that we can leverage to create safe AI systems. Strongly simplified, compute only consists of one input axis: more or less compute — where we can expect that an increase in compute leads to more capable and potentially unsafe systems (as I will discuss in this section). Data and algorithms have more than one input axis which could, in theory, be used to affect the safety of an AI system. Algorithms could be designed to understand human intent and be aligned, and data could be more or less biased, leading to wrong interpretations and learnings.

Consequently, within the field of AI governance, compute is a unique sub-domain, and its importance could be harnessed to achieve better AI alignment outcomes, e.g., by regulating access to compute. I will discuss this in Part 3 - Section 6.

The following subsections will explore different hypotheses about the role of compute for AI systems capabilities and their safety: “The Bitter Lesson”, the scaling hypothesis, qualitative assessments and compute milestones using biological anchors.

3.1 The Bitter Lesson

Rich Sutton wrote the commonly-cited piece “The Bitter Lesson”. He argues that algorithmic innovation mostly matters insofar as it creates general-purpose systems capable of making use of great amounts of compute. He bases this lesson on a historical observation:

- AI researchers tried to integrate knowledge into their agents/systems.

- This has helped in the short-term.

- However, in the long run, this progress fades and it even inhibits further progress.

- Breakthrough progress eventually then comes by a contrary approach that relies on scaling computation.

It is a bitter lesson because the latter approach is more successful than the human-centric approach, which relies on intuition from the researchers and is often more personally satisfying (Sutton 2019):

“One thing that should be learned from the bitter lesson is the great power of general purpose methods, of methods that continue to scale with increased computation even as the available computation becomes very great. The two methods that seem to scale arbitrarily in this way are search and learning.”

He closes with:

“We want AI agents that can discover like we can, not which contain what we have discovered.”

3.2 Scaling Hypothesis

The scaling hypothesis is similar to the bitter lesson. Gwern describes it as follows (Branwen 2020):

“The strong scaling hypothesis is that, once we find a scalable architecture like self-attention or convolutions, which like the brain can be applied fairly uniformly, we can simply train ever larger NNs and ever more sophisticated behavior will emerge naturally as the easiest way to optimize for all the tasks & data.

More powerful NNs are ‘just’ scaled-up weak NNs, in much the same way that human brains look much like scaled-up primate brains.”

Consequently, to achieve more capabilities, we only need to scale a specific architecture (where it is still open if we already have this architecture). Scaling an architecture implies more parameters and requires more compute for training this network. According to Gwern “the scaling hypothesis has only looked more and more plausible every year since 2010” (Branwen 2020).[10]

GPT-2 (Radford et al. 2019) to GPT-3 (Brown et al. 2020) is an example of scaling and is seen as evidence for the scaling hypothesis. Finnveden extrapolates this trend and discusses this in his forum post “Extrapolating GPT-N performance” (Finnveden 2020).

3.3 AI and Efficiency

We have discussed that increased compute has led to more capable AI systems, but how does its relevance compare to the two other main factors: data and algorithmic innovation?

The best available piece investigating algorithmic innovation is “AI and Efficiency” by Hernandez and Brown from OpenAI. This analysis examines the algorithmic improvement of recent years by holding a benchmark constant (AlexNet performance on ImageNet) and training modern AI systems until they achieve similar performance. The algorithmic improvement then shows up as a reduction in the amount of compute needed to achieve that performance. Their analysis shows a 44x gain in algorithmic efficiency, i.e., 44x less compute to reach the same performance. This result is more likely to be an underestimate for various reasons; see their paper for a discussion (Hernandez and Brown 2020).

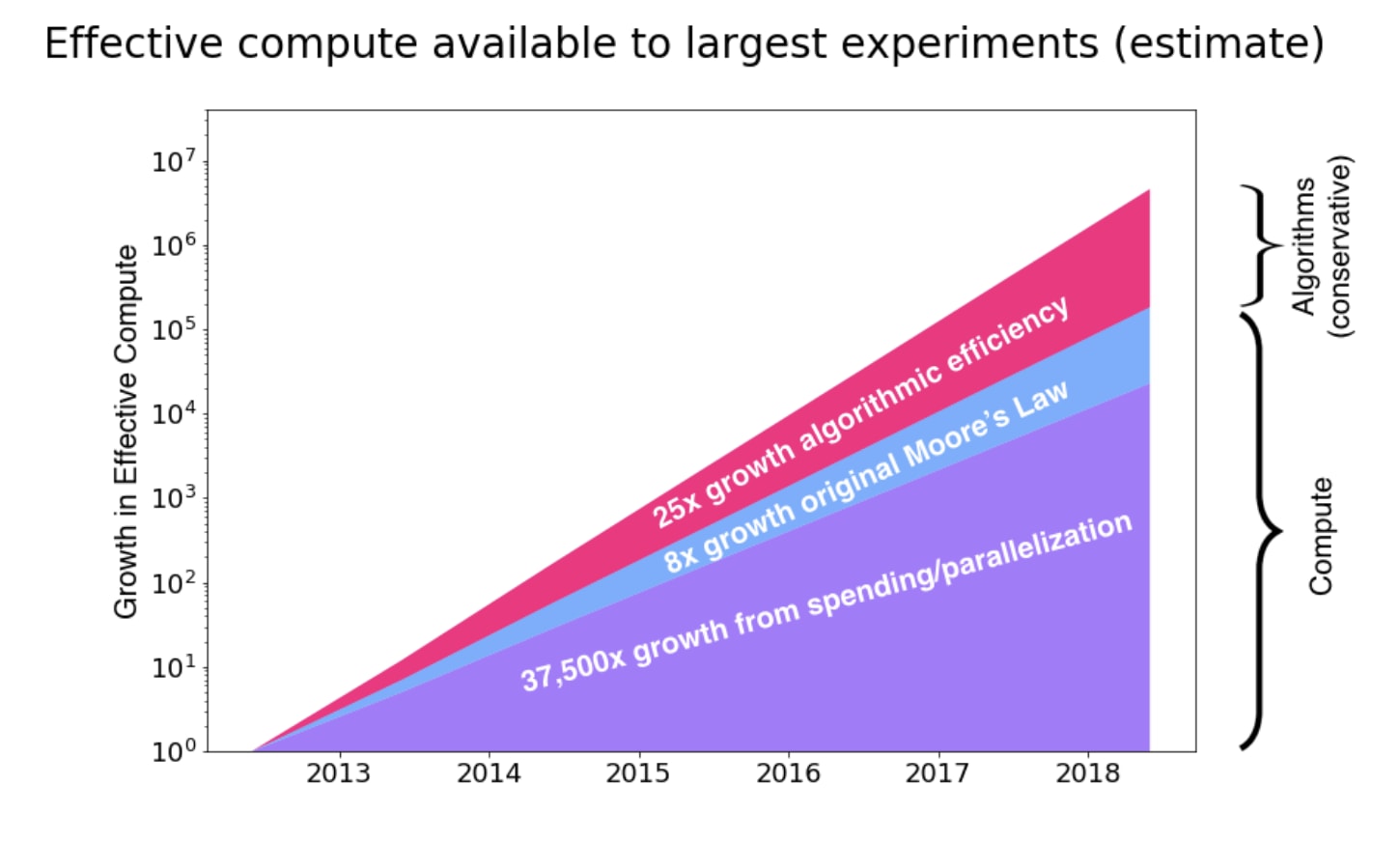

Those insights are important to decompose the effective compute available (Figure 3.1).

This analysis is highly speculative, according to the authors. Nonetheless, it also highlights our lack of decomposition in the purple part, “37’500x growth from spending/parallelization”.[11]

“We’re uncertain whether hardware or algorithmic progress actually had a bigger impact on effective compute available to large experiments over this period.”

Consequently, effective compute is a critical concept for measuring progress. The idea is also used in the timeline by Cotra, which we will discuss in Part 2 - Section 4.1.

3.4 Qualitative Assessment

Lastly, in the 2016 Expert Survey on Progress in AI, leading AI researchers were asked how much less progress in AI they would expect to have seen if each of the following inputs had improved less over the same period: (1) computing cost, (2) algorithmic progress, (3) training data, (4) research effort, and (5) funding.

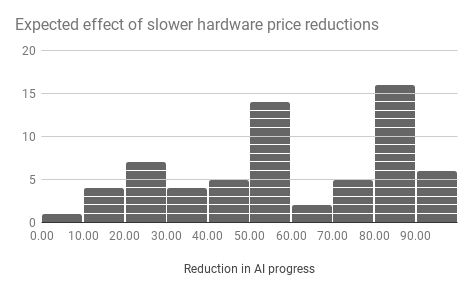

For (1) computing cost, the question was the following:

“Over the last 10 years the cost of computing hardware has fallen by a factor of 20. Imagine instead that the cost of computing hardware had fallen by only a factor of 5 over that time (around half as far on a log scale). How much less progress in AI capabilities would you expect to have seen? e.g. If you think progress is linear in 1/cost, so that 1-5/20=75% less progress would have been made, write ’75’. If you think only 20% less progress would have been made write ’20’.”

For this question, the median answer was 50% and the following distribution:

The other factors were at most as important or less important than the reduction in hardware prices. For a comparison to the other factors, see the blog post (Grace et al. 2016).[12]
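The two numbers embedded in the survey question about computing cost can be checked with a few lines of arithmetic. This sketch only reproduces the figures given in the quoted question; it makes no claim about how respondents actually reasoned.

```python
import math

actual_cost_reduction = 20         # hardware cost fell by a factor of 20 (from the question)
counterfactual_cost_reduction = 5  # imagined smaller reduction (from the question)

# "Progress is linear in 1/cost": the share of progress lost in the counterfactual.
linear_loss = 1 - counterfactual_cost_reduction / actual_cost_reduction

# "Around half as far on a log scale": the counterfactual reduction as a
# fraction of the actual reduction, measured in logarithms.
log_fraction = math.log(counterfactual_cost_reduction) / math.log(actual_cost_reduction)

print(f"progress lost if linear in 1/cost: {linear_loss:.0%}")
print(f"fraction of the way on a log scale: {log_fraction:.2f}")
```

The median answer of 50% therefore sits between "progress is linear in 1/cost" (75%) and much weaker dependence on hardware cost.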

3.5 Compute Milestones

Compute milestones describe estimates and forecasts of the computational power required to match well-known capable computational systems, such as the human brain. The idea is that we know of computational systems, such as the human brain or evolution, which have resulted in general intelligence. So far, we have not created an artificial system that matches those capabilities. Consequently, we could be missing some fundamental algorithmic insights and/or our existing AI systems do not yet match their computational requirements.

If we hit those milestones without transformative capabilities having been unlocked, we can be fairly sure that the missing pieces are algorithmic. So far it seems like a few algorithmic advances have helped modern AI improve, but a great deal of progress has been due to scaling alone. In some ways, the resultant systems already outperform humans, despite requiring far less training and compute than, e.g., human evolution. If one accepts the scaling hypothesis, one might believe that using massively more compute will get us to general intelligence, and processes that have led to intelligent behavior in previous systems, such as biological systems, are a natural reference point. For a discussion on this, I refer to the book Superintelligence by Bostrom.

There are various analogies to draw with the components of our basic model of a computer: logic, interconnect, and memory. We are unsure whether the human brain's capabilities in logic, interconnect, and memory have all been matched, but an individual component may already have been.[13] Whereas I’m uncertain about the interconnect within the brain, the interconnect between different brains has likely been surpassed. While we humans have a hard time interfacing with others due to limited-bandwidth communication, such as written text or speech, the interconnect between computer systems seems to have significantly higher bandwidth and does not have the same restrictions: connecting and scaling compute systems is easier than it is for human brains.

Various reports introduce these milestones by using biological anchors, most notably “How Much Computational Power Does It Take to Match the Human Brain?” (Carlsmith 2020), “Semi-informative priors over AI timelines” (Davidson 2021), and the draft report on AI timelines (Cotra 2020). Those milestones can provide meaningful insights for forecasting transformative AI. We will discuss this in Part 2 - Section 4.1.

Those reports list the following milestones (Davidson 2021; Cotra 2020; Carlsmith 2020):

- (1) The compute required to simulate the evolution of the human brain (Evolutionary hypothesis)

  - Evolution has created intelligent systems, such as the mammalian brain. This milestone estimates the amount of compute which happened inside the nervous systems throughout evolution.

- (2) The compute required to simulate the total computation done over a human lifetime (Lifetime learning hypothesis)

  - This milestone is based on the learning process of a human child’s brain until they reach the age of 30.

- (3) The computational power of the human brain

  - When could computers perform any cognitive task that the human brain can?

  - “How Much Computational Power Does It Take to Match the Human Brain?” (Carlsmith 2020).

- (4) The amount of information in the human genome

  - This milestone estimates that our system would require as much information in its parameters as there is in the human genome, in addition to being computationally as powerful as the brain.

All of the milestones rely on some kind of estimate on the required amount of (effective) compute.

Therefore, depending on the details of this estimate, capabilities matching those biological counterparts could be unlocked before we reach their computational requirements. We may already have developed more efficient algorithms, we may create better ones, or other modules of the system, such as memory capacity or the machine-to-machine interconnect, could unlock these milestones earlier. Consequently, those estimates could provide some kind of upper bound on when we will reach capabilities similar to the biological counterparts, assuming that it is a purely computational process and we have the right algorithms.

3.6 Conclusion

We have learned that compute is one of the key contributors to today’s more capable AI systems[14]. Compute is, next to data and algorithmic innovation, important, and increased compute has led to more capable AI systems. There are reasons to believe (milestones and the scaling hypothesis) that a continued trend in compute could lead to capable, if not transformative, AI systems. I do not see the list of milestones as complete; rather, those are the ones I stumbled across. There are also empirical investigations on scaling laws available (Kaplan et al. 2020; Hestness et al. 2017).

During my limited research time, the scaling hypothesis and “The Bitter Lesson” became more plausible to me. As Sutton describes: It is a historical observation — where I would see the recent years as additional confirmation (e.g., GPT-3). I would be interested in the opinions of AI experts on this.

Compute is a quantity, and computational power is a rate, which is clearly defined and measurable — only the stacked layers of abstractions make it not easily accessible (discussed in Part 2 - Section 5.1). However, comparing it to the quality of data, or algorithmic innovation, I am more optimistic for compute measurement than for the others. Therefore, compute is an especially interesting component for analyzing the past and the present, but also forecasting the future. Controlling and governing it can be harnessed to achieve better AI alignment outcomes (see Part 3 - Section 6: Compute Governance). The next section explores forecasting compute.

Next Post: Forecasting Compute

The next post "Forecasting Compute [2/4]" will attempt to:

- Discuss the compute component in forecasting efforts on transformative AI timelines (Part 2 - Section 4).

- Propose ideas for better compute forecasts (Part 2 - Section 5).

Acknowledgments

You can find the acknowledgments in the summary.

References

The references are listed in the summary.

One could argue the universe is a computer as well: pancomputationalism. ↩︎

You can read some thoughts on quantum computing in the series “Forecasting Quantum Computing” by Jaime Sevilla. ↩︎

Compute produces the data as an interactive environment for reinforcement learning. Therefore, more compute leads to more available training data. ↩︎

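A petaflop/s equals $10^{15}$ floating point operations per second. A day has $8.64 \times 10^{4}\,\text{s} \approx 10^{5}\,\text{s}$. Therefore, a petaflop/s-day equals $8.64 \times 10^{19} \approx 10^{20}$ floating point operations. ↩︎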

Nonetheless, according to estimates, most compute overall is probably used for deployed AI systems, i.e., inference. Whereas, as outlined, the training process is computationally more complex, the repeated use of inference once a system is deployed leads to more compute used overall (compute per training run >> compute per inference, but number of inferences >> number of training runs) (Amodei and Hernandez 2018). In the future, those resources could be repurposed for training, if we do not see different hardware for training and inference (discussed in Part 2 - Section 4.2). ↩︎

The final training run refers to the last training of an AI system before stopping updating the learned weights and biases and deploying the network for inference. There are usually dozens to hundreds of training runs of AI systems to tweak the architecture and hyper-parameters optimally. While this metric is relevant for the development costs, it is not an optimal proxy for the systems’ capabilities. ↩︎

“We think it’d be a mistake to be confident this trend won’t continue in the short term.” (Amodei and Hernandez 2018). ↩︎

The data used in this section comes from a project by Jaime Sevilla, Pablo Villalobos, Matthew Burtell and Juan Felipe Cerón. We collaborated to add more compute estimates to the public database. I can recommend their first analysis: “Parameter counts in Machine Learning”. ↩︎

Transformative AI, as defined by Open Philanthropy in this blogpost: “Roughly and conceptually, transformative AI is AI that precipitates a transition comparable to (or more significant than) the agricultural or industrial revolution.” ↩︎

For more thoughts and a discussion on this, I can recommend “The Scaling Hypothesis” by Gwern (or the summary in the AI Alignment Newsletter #156). ↩︎

I would also describe the purple part as an open research question. How can we decompose this — differentiating between parallelization, an engineering effort, and spending, where it is easier to find upper limits? ↩︎

I would be interested in an update on this. However, I also did not spend time looking for an update on this in the recent AI experts surveys. ↩︎

I initially made the claim that there are reasons to believe that the available memory capacity of compute systems might match the human brain or at least be sufficient (at least the information we can consciously recall and access). However, while thinking more about this claim, I became uncertain. I started wondering if the brain also has something similar to a memory hierarchy as it is the default for compute systems (different levels of memory capacities which can be accessed at different speeds). I would be interested in research on this. ↩︎

In general, computational power is key to our modern society, and might also be the foundation of life in the future: digital minds. The future of humanity could be computed on digital computers — see “Digital People Would Be An Even Bigger Deal” by Holden Karnofsky or “Sharing the World with Digital Minds” by Bostrom. ↩︎

I really appreciated the extension on "AI and Compute". Do you have a sense of the extent to which the difference between your estimate of the doubling time and the one in "AI and Compute" stems from differences in selection criteria vs. new data since its publication in 2018? Have you done analysis on what the trend looks like if you only include data points that fulfil their inclusion criteria?

For reference, it seems like their criteria is "... results that are relatively well known, used a lot of compute for their time, and gave enough information to estimate the compute used." Whereas yours is "important publication within the field of AI OR lots of citations OR performance record on common benchmark". "... used a lot of compute for their time" would probably do a whole lot of work to select data points that will show a faster doubling time.

I have been wondering the same. However, given that OpenAI's "AI and Compute" inclusion criteria are also a bit vague, I'm having a hard time telling which of our data points would fulfill their criteria.

In general, I would describe our dataset matching the same criteria because:

n=100). I'd be interested in discussing more precise inclusion criteria. As I say in the post:

Thanks! What happens to your doubling times if you exclude the outliers from efficient ML models?

The described doubling time of 6.2 months is the result when the outliers are excluded. If one includes all our models, the doubling time is around 7 months. However, the number of efficient ML models was only one or two.
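For intuition on how close these two doubling times are, a doubling time can be converted into an annual growth factor. The following is a minimal sketch assuming steady exponential growth; the function name is hypothetical and this is not the fitting procedure used for the dataset.

```python
def annual_growth_factor(doubling_time_months):
    """Growth factor over 12 months, assuming compute doubles every `doubling_time_months`."""
    return 2 ** (12 / doubling_time_months)


# Doubling times mentioned in this thread (in months).
for months in (6.2, 7.0):
    factor = annual_growth_factor(months)
    print(f"doubling every {months} months -> about {factor:.1f}x per year")
```

Under this assumption, both doubling times correspond to compute growing by somewhat more than 3x and somewhat less than 4x per year.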

@lennart apologies if this is a silly question, but either there's an error in footnote 4, or I misunderstand something fundamental:

Shouldn't this read something like (in verbatim spoken words)

"A petaflop per second is ten to the power of fifteen floating point operations per second. A day has [...] 10 to the power of five seconds. Therefore, a 'petaflop-per-second' DAY is 10 to the power of twenty floating point operations."

You've said a petaflop/s is x flop/s for one day, which seems like a typo maybe?

Would you say "petaflop-per-second" days if reading out loud?

You're right. Corrected, thanks!