Cross-posted on my blog

There are a few pieces of information that are required to properly analyze the value of Giving What We Can’s membership.

They’re necessary for GWWC’s managers to evaluate different strategies. If GWWC were an object-level charity, we wouldn’t donate to it without knowing these numbers. And if GWWC were a public company, investors would not provide funding without such disclosure. As such, hopefully these metrics are already being collected internally, in which case sharing them publicly should not be very difficult and would be very valuable. If not, GWWC should start collecting them!

GWWC already publishes the number of members it has at any given point and the total amount pledged. From this it’s easy to derive how many joined in any given year. However, it’s hard to judge what these people did later – how many fulfilled the pledge, and how much did they donate? Worse, this makes it hard to forecast the value of a new member, so we can’t tell how much effort we should put into extensive growth. As far as I can see (sorry if I just couldn’t find the data), we do not currently release the data required to make this analysis.

As part of its annual report, GWWC should release data on each cohort: how many of that cohort fulfilled the pledge by donating 10%; how many were ‘excused’ from donating 10% (e.g. by being students); how many failed to abide by the pledge, donating less than 10% despite having an income; and how many did not respond.

Example Disclosure

In case it’s confusing what exactly I’m suggesting GWWC release, here’s an example (with totally made-up numbers). As part of its 2014 annual report, GWWC could report:

- 2011 cohort:
  - Of the 107 who joined in 2011…
    - 75 donated over 10% in 2014
    - 15 were students and did not donate 10% in 2014
    - 10 had incomes but did not donate 10% in 2014
    - 7 could not be contacted in 2014
    - Total of $450,000 donated in 2014
- 2012 cohort:
  - Of the 107 who joined in 2012…
    - 50 donated over 10% in 2014
    - 53 were students and did not donate 10% in 2014
    - 2 had incomes but did not donate 10% in 2014
    - 2 could not be contacted in 2014
    - Total of $300,000 donated in 2014
- 2013 cohort:
  - etc.

While in the 2013 annual report, GWWC would have reported:

- 2011 cohort:
  - Of the 107 who joined in 2011…
    - 45 donated over 10% in 2013
    - 56 were students and did not donate 10% in 2013
    - 3 had incomes but did not donate 10% in 2013
    - 3 could not be contacted in 2013
    - Total of $250,000 donated in 2013
- 2012 cohort:
  - Of the 107 who joined in 2012…
    - 16 donated over 10% in 2013
    - 89 were students and did not donate 10% in 2013
    - 1 had an income but did not donate 10% in 2013
    - 1 could not be contacted in 2013
    - Total of $100,000 donated in 2013

This would allow us to see how each cohort matures over time, helping us answer some very important questions:

- How much is a member worth, after taking into account the risk of non-fulfillment?

- How does the donation profile of a member change over time – does it rise as they progress in their career or fall as members drop out?

- How much does the value of a member differ with the discount rate we use?

- Are the cohorts improving or deteriorating in quality? Are the members who joined in 2012 more likely to still be members in good standing in 2014 than the 2010 cohort was in 2012? Do they donate more or less?
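To make these questions concrete, here is a rough sketch of the sort of analysis that cohort data would permit. It uses only the made-up numbers from the example disclosure above. The class and function names are my own inventions, counting non-respondents as not having fulfilled the pledge is a deliberately pessimistic assumption, and the 5% discount rate is purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CohortReport:
    """One cohort's figures as disclosed in one annual report (hypothetical format)."""
    cohort_year: int      # year the members joined
    report_year: int      # year the donations were made
    joined: int           # size of the cohort
    fulfilled: int        # donated over 10%
    students: int         # excused from donating 10%
    lapsed: int           # had incomes but did not donate 10%
    no_response: int      # could not be contacted
    total_donated: float  # dollars donated by the cohort that year

    def __post_init__(self) -> None:
        # Sanity check: the four categories should account for every member.
        counted = self.fulfilled + self.students + self.lapsed + self.no_response
        if counted != self.joined:
            raise ValueError("categories do not sum to the cohort size")

    @property
    def age(self) -> int:
        """Years since the cohort joined."""
        return self.report_year - self.cohort_year

    @property
    def donated_per_member(self) -> float:
        return self.total_donated / self.joined

    @property
    def fulfilment_rate(self) -> float:
        """Share of non-excused members who gave 10%.

        Assumption: members who could not be contacted count as not fulfilling.
        """
        return self.fulfilled / (self.joined - self.students)


# The totally made-up numbers from the example disclosure.
REPORTS = [
    CohortReport(2011, 2013, 107, 45, 56, 3, 3, 250_000),
    CohortReport(2011, 2014, 107, 75, 15, 10, 7, 450_000),
    CohortReport(2012, 2013, 107, 16, 89, 1, 1, 100_000),
    CohortReport(2012, 2014, 107, 50, 53, 2, 2, 300_000),
]


def observed_value_per_member(reports, cohort_year, discount_rate):
    """Per-member donations in the reported years, discounted back to the joining year.

    This covers only the years we have reports for; it is not a lifetime forecast.
    """
    return sum(
        r.donated_per_member / (1 + discount_rate) ** r.age
        for r in reports
        if r.cohort_year == cohort_year
    )


for r in REPORTS:
    print(f"{r.cohort_year} cohort at age {r.age}: "
          f"fulfilment {r.fulfilment_rate:.0%}, "
          f"${r.donated_per_member:,.0f} per member")

for rate in (0.0, 0.05):
    value = observed_value_per_member(REPORTS, 2011, rate)
    print(f"2011 cohort, discount rate {rate:.0%}: ${value:,.0f} per member")
```

Comparing reports with the same `age` (for example the 2011 cohort in 2013 against the 2012 cohort in 2014) is what would let us say whether newer cohorts are improving or deteriorating.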

There are some other numbers that might be nice to know, for example breaking the data down by age, sex, nationality, or even CEA employee vs non-employee, but it’s important not to impose too high a reporting burden.

Why this is not idle speculation

This might seem a bit ambitious. Yes, it would be nice if GWWC released this data. But is it really a pressing issue?

I think it is.

Bank problems: Extend and Pretend

Sometimes banks will make a series of bad loans – loans which are repaid at a significantly lower than expected rate, perhaps because the bank was trying to grow aggressively. When the first signs of this emerge, like people being late on payments, banks have two alternatives. The honest one is to admit there is a problem and ‘write down’ the loan – take a loss to profits. The perhaps less honest one is to extend and pretend – give the borrowers more time to repay and pretend to yourself/auditors/investors that they will come good in the end. This doesn’t actually create any value; it just delays the day of reckoning. Worse, it propagates bad information in the meantime, causing people to make bad decisions.

Unfortunately they neglected the Litany of Gendlin:

What is true is already so.

Owning up to it doesn’t make it worse.

Not being open about it doesn’t make it go away.

And because it’s true, it is what is there to be interacted with.

Anything untrue isn’t there to be lived.

People can stand what is true,

for they are already enduring it.

—Eugene Gendlin

GWWC: Dilutive Growth?

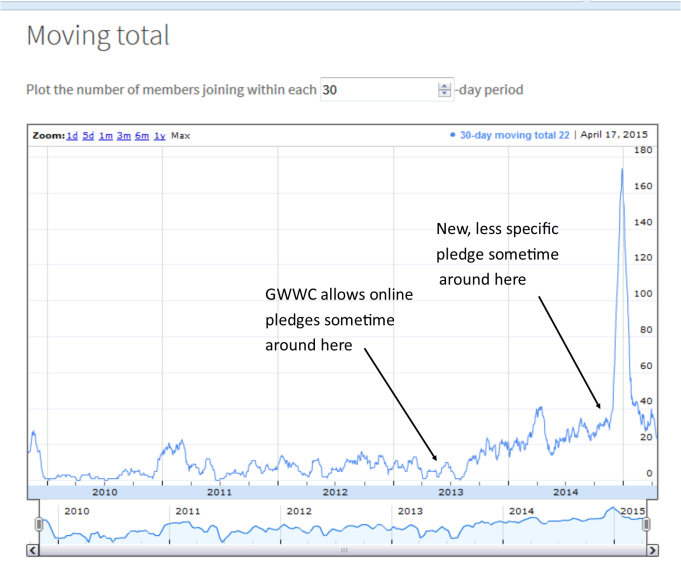

About a year ago, people were concerned that GWWC’s growth was slowing – only growing linearly, rather than exponentially. This would be pretty bad, and people were justifiably concerned. However, GWWC made a few changes with the aim of promoting growth. Most pertinently:

- Allowing people to sign up online, rather than having to mail in a hand-signed paper form. This happened between April and June 2013.

- Adjusting the pledge to become more cause-neutral, rather than just about global poverty. This happened in late 2014.

The concern is that, though these measures have increased the number of members, they may have done so by reducing the average quality of members. Making it easier to join means more marginal people, with less attachment to the idea, can join. This is still good if their membership adds value, but they dilute the membership, which means we shouldn’t count the average member signing up now as being as valuable as the members who joined in 2010. Additionally, the reduction in pomp and circumstance might reduce the gravitas of the pledge, making people take it less seriously and increasing drop-out rates. If so, moving to paperless pledges might have reduced the value of sub-marginal members as well as diluting them. This makes it harder to forecast the value of members, and might lead to over-investment in acquiring new ones.

The comparison with banks should be pretty clear – a bank that’s struggling to grow starts accepting less creditworthy applicants so it can keep putting up good short term numbers, but at the cost of reducing the long-run profitability. Similarly GWWC, struggling to grow, starts accepting lower quality members so it can keep putting up good short term numbers, but at the cost of reducing the long-run donations.

This seems potentially a big risk, and it’s the sort of issue that this data would allow us to address. Of course, there are many other applications of the data as well.

And GWWC in fact has even stronger reasons than banks to report this data. The bank might be wary of giving information to its competitors, but GWWC has no such concerns. Indeed, if releasing more data makes it easier for someone else to launch a competing, better version of GWWC, all the better!

If you liked this you might also like: Happy 5th Birthday, Giving What We Can and GiveWell is not an Index Fund

Hi Dale, Thanks for your post. It’s great to see someone take such a deep interest, and thank you for the specificity of your suggestions. As it happens, we’re about to put up an impact evaluation. I’m sorry it’s been a little while since the previous one (done by Nick Beckstead). I was hoping to do it a bit earlier, but was tied up finishing my PhD. It will address various points like what we might expect the value of a new member to be, and how this changes with different discount rates. I’ll take your comments, like about wanting to be able to follow individual cohorts, into consideration. We’ve also recently taken on a Community Director (Alison Woodman) who focuses on members – both to improve membership retention and to increase our understanding of our membership for impact evaluation purposes. So hopefully in the future our impact evaluations will be more thorough and frequent.

Jacob’s already addressed the most pressing points. I just had a couple of other considerations: As he said, the main thing in December was the Pledge event, which was amazing. Giving season and new year’s resolutions always cause a bit of a bump in membership too.

I don’t think that we should think of members who joined under the newer pledge as ‘lower quality’. The new pledge is more general, but it is so in the sense that it is cause neutral. People who plan to donate to animal charities because they think that they can help fellow creatures even more by doing so seem every bit as serious in helping others effectively as those donating to eradicate extreme poverty. We’ve even had a couple of people join because of the change in the pledge who donate to poverty eradication charities, but who thought that we might in future find an even more effective cause than that, and wanted to make sure if they took a lifelong pledge they would definitely be able to keep it. These people seem at least as likely to continue donating as others.

While I agree that it’s easier to join online than by sending off a letter, I’m less convinced that the new method of joining is going to be one which makes it less likely people feel engaged. We still send people a physical pledge form, to maintain the feeling of solidity. But doing it online means that an email immediately gets sent to the person, and we now make more effort to follow-up with and get to know members (which we have more capacity for because of the reduction in admin moving to the online system).

By the way, you seem very comfortable with numbers, knowledgeable and engaged – how would you feel about working with our research team? We’d be very grateful for the help!

Thanks for the response.

Glad to hear it! I look forward to seeing the data.

Well, that's true unless you don't think animals count as 'others'. Presumably we should discount their value by whatever credence we place in animals counting as moral agents. A wide range of new focuses are now acceptable to GWWC members, and regardless of one's specific personal beliefs, it seems likely that some of those members will focus on something you don't personally think is very valuable. For example, it seems plausible that some people might (falsely) think that donating to a political party was the best way of helping people. Yet presumably no-one would think that increasing donations to both Democrat and Republican parties by $100 is equal to $200 going to AMF!

However, my complaint wasn't really about the target of their donations - I assume that in practice most will mainly donate to AMF etc. anyway - but that they'll see a higher dropout rate. I don't think this concern can really be verified or refuted without seeing the data.

This seems like a bit of a misnomer. People were always requesting a signup form online - you could combine paper forms in the post with an email saying 'Welcome ... the forms are on their way'.

Your point about the overhead costs is a good one though. My impression is this could be outsourced - at least in the US I'm pretty sure there are firms that will deal with your mailing for you, but that still costs money.

Very kind of you. Unfortunately I work a more-than-full-time job, so can't really commit to anything. However, I get the impression that the EA community has a fair number of math-literate people who would be willing to work on relevant questions just out of interest if the data was available. In this case I'd probably analyse the cohort data if it was available - and the same probably goes for object-level issues as well. This would have a couple of advantages:

The relevance of this isn't clear. Both before and after the change, every particular GWWC donor is giving to a charity that the donor likes but that some people don't consider maximally valuable. The question is whether the change made things worse. On average, I expect it made things better, since I expect cause neutrality to be helpful.

Perhaps your point is that previously this impact was valuable to one constituency--people who prioritize development--whereas now the value is spread to a wider range of donors with a wider range of values. I can see why this might make fundraising harder, since it's a classic example of a public goods problem, but it doesn't seem to make the project less impactful overall.

I expect that GWWC's dropout rate is increasing over time. I don't see why this change in particular would be expected to lead to a higher dropout rate. At face value, one might expect a broader pledge to have a lower dropout rate.

I'm implicitly assuming that global poverty is an unusually uncontroversial cause.

I guess in the limit if the pledge became sufficiently expansive it would become impossible to fail to comply!

I think locally the effect is likely to be negative, though I don't have much confidence either way till we see the data. Nor do I see much value in speculating on this, save to encourage the release of such data.

Thanks! We actually have had quite a few people with expertise go over the impact evaluation - as you say, it's pretty fun. I was thinking of other things we could do with more researchers on. Sounds like you're already plenty busy though!

Here is that impact analysis. It's well worth a read, particularly the section 'Overview of GWWC’s performance so far' as this speaks to many of Dale's questions.

Your link just points to this post?

Fixed.

Thanks very much. Some interesting points:

It occurs to me that, if true, this would have important implications for GWWC's marketing, namely that we should deemphasize students, as many of them would have dropped out before they start making bank, and increase the emphasis on mature targets.

Thank you for this post. I agree with its central premise and I know that Michelle is already working on an impact evaluation that will contain a lot of this sort of information.

However, your post contains a couple of misleading points that I thought would be worth correcting.

For future reference, it may have been courteous to contact someone at Giving What We Can before posting this. In case that sounds intimidating I can assure you they are all very friendly :)

(Disclosure: I manage Giving What We Can's website as a volunteer.)

Thanks very much for this!

Evaluating impact is very difficult, and Michelle has a really hard and thankless job to do. I'm excited for the GWWC impact report and I'd be very understanding if it isn't entirely perfect, as ideas for measuring are hard to come by and even harder to execute. I think it would have been more polite to suggest this to GWWC privately and get their feedback before publishing it here.

I do think seeing cohort data would be pretty interesting, though, and I'm glad that you brought it up.

Personally, my top concern is instead about counterfactuals. Ravi Patel's Facebook campaign is amazing -- can you really move millions of dollars to charity through an FB event?! It would seem unlikely. I wonder if when GWWC opened the pledge, many people already giving 10% joined, as now they don't feel constrained by cause area. (Of course these people may have been influenced by GWWC years before, especially if GWWC played an important role in the normalization of 10% as the EA giving Schelling point.) I know GWWC tracks "how much would you have given if not for GWWC" data, and I'm interested to see how that fits into the report.

That seems clearly what'd happen, and from what I hear is what most people think. People who'd favoured non-poverty causes and who join in the months after that change are unlikely to be giving as a result of GWWC's work in those months after all, going out and convincing people to give to them from scratch. (Not to say that it's not valuable for them to record their giving, or that the moves away from poverty have been a mistake.)

That would have been my guess, but a surprising number of these new members came through Ravi's work, and wouldn't have even known about the change to the pledge. Michelle could give a more exact share.

Oh, does the GWWC central team know how many of these members were non-poverty people? What was Ravi’s work, was it something the GWWC team did to follow up changing the pledge?

Hi. I recently joined Giving What We Can as Director of Community and am very happy to answer member-related questions!

Of the 83 people who became members between Dec 2014 - Jan 2015 after clicking 'attend' or 'maybe' on the Facebook event, 7 of these have said upon joining that they intend to give to non-poverty causes. There are an additional 7 members or so who joined during that period (bearing in mind the pledge change happened in early December) who also said they intend to give to non-poverty causes.

So it seems the spike over Dec-Jan is attributable more to the Facebook event than to the broadening of the pledge, although that certainly had a noticeable effect. Having spoken to quite a few of those who joined because of the event, I'd guess that Rob is right that most of these were likely not aware of the pledge change.

The pledge change and Ravi's work just happened to coincide - we hadn't heard from Ravi beforehand. He and a group of friends came up with the idea of a New Year pledging event and did most of the work themselves, though the GWWC team helped out.

I believe the updated pledging form asks people to specify the broad cause(s) they will donate to (eg poverty, animal suffering, existential risk), so that information would be readily available to the GWWC team.

Ravi Patel is a medical student in Cambridge who independently led the enormously successful campaign to get new pledges as a New Year's resolution. It was hosted as a Facebook event, where you can see people posting as they joined: https://www.facebook.com/events/1581545938749145/ This coincides with the Dec/Jan spike on the chart above.

It's not addressed to any one individual, but there was this. Maybe I should have made it more personalized.

Thanks for sticking your head out with this post, I've heard a lot of people express similar or stronger concerns but say they're too frightened about prompting a pile-on (or in some cases organised and tactical retaliation). One thing some of these people have said is that internal knowledge at and research by CEA reveals unflattering facts about the issues you've raised, but that CEA hides this from impact evaluations and isn't honest about it with donors. For example, people not donating and staying on the member lists, including prominent EAs.

Concerns like these should be made publicly. If true, the wider community should know; if false, CEA should have the opportunity to refute them.

I absolutely agree, like I suggested when complimenting Dale for sticking his head out. (If that is the phrase? Google is ambiguous between “head” and “neck”.) I like to think I would state them myself, given the anonymity this forum allows, and I wouldn’t pay much social cost anyway as I don’t talk to EAs much any more now that I’m distant from the main EA centers. But like I said I’ve heard them second hand from a lot of people who wouldn’t want the sources to be guessed at. I’ll ask them if there’s anything I can post on their behalf in this thread.

The one thing I have heard from people other than these people is about some of the EAs who don’t donate but stay on the member lists. That was still second hand however, from other people who weren’t criticising but might not like the implication that they were. I’m not sure it’s right to name individuals in this venue either, beyond identifying classes like “employees or trustees of organisations”. Ambiguity about what counts as a donation vs. self-serving may also be at play.

Hi Impala, I'm sorry you feel this way! I assure you we're actually all nice and not at all scary, so do feel free to contact us to discuss whatever you'd like. I don't know precisely what you're talking about, but I don't know of anyone you might be talking about as 'prominent EAs' who are both members of Giving What We Can and not donating. There are definitely people like Paul Christiano who support saving to donate later, because they think that the savings rate at the moment is unusually high and we're currently in an unusually good position to learn more, so that donating in the future will be even more valuable than now. But Paul is not a member, for that very reason.

Like I said to Gregory, I am limited in what I can say without violating confidences, but I personally wouldn’t find saying other things scary if it’s anonymous. Is there an anonymous way to send messages to you which doesn’t reveal my email (which contains the username I use around the Web)?

Impala, you can trivially set up a new gmail account to send anonymous email.

Upvoted for morally admirable concern for people's confidences.

This is starting to sound like it would be scary for me. I think Hauke may have made an anonymous feedback form for this purpose. I'll ask him.

Yes, fortunately I am both quite autistic and also don't know many EA people in person.

Yeah, there's an obvious problem whereby organizations with 'dirty secrets' will tend not to share them. As a result, in investment we tend to work out what the key metrics are and then assume the worst if companies don't release them. (Sovereigns can get a bit more leeway because they are incompetent and big.) My understanding is that GiveWell uses a similar methodology of assuming the worst from the charities it looks at.

o.O

I just want to comment on the issue of new cohorts being more likely to drop out because they e.g. signed up online.

I'm not sure if that will happen or not, but even if it does, I don't think it's such a bad thing. Let's say the retention rate for members who join on paper is 80%, and that for those who would only ever join online is 50%. While it would be a shame to have those online members start dropping out, that is still potentially a lot of extra members who we never would have had otherwise. If they never signed up at all, presumably fewer still would continue to give in the long term, because they would not have made any public commitment or received regular reminders from us about giving and updates on the latest research from us and GiveWell.

In the early days of GWWC, having members 'drop out' was regarded as very bad. While I understand the reasons behind that, I think this was a mistake. Being too cautious about accepting new members would prevent us from encouraging a large number of people to give more and give more effectively.

Yup, I agree:

but just am concerned that this means our forecasts might overestimate the value of adding another member, causing relative over-investment.

My understanding is that GWWC are working on an impact evaluation which will look at issues of membership attrition, so will hopefully cover the first bullet point. I'd guess the other ones will remain matters of conjecture until enough time has passed. Given the changes you are worried about happened in 2013-2014, they'd have to be disastrous to see much of a signal 1-2 years post. I agree the longitudinal data will be interesting over time.

(If there is a drop off in 'member quality', though, it might be difficult to find out why: there's a plausible selection effect whereby the more committed people are likely to discover (and join) GWWC earlier. I'd guess the secular trend - regardless of strategic choices GWWC makes - is that later members are less likely to fulfil their pledges.)

Although the change in the pledge precedes the massive spike in pledges, it played little causal role. A far more important event was the hugely successful recruitment event run by Ravi Patel and others, which recruited hundreds of people around the Christmas season. (https://www.facebook.com/events/1581545938749145/)

(For disclosure: I'm a member of GWWC, formerly volunteered for them, and continue to donate to them directly. I'm not officially involved though, so please don't take my remarks as anything official - given how inept I generally am at remarking, I'm sure GWWC would want you to do the same!)

I actually did roughly this analysis last year and have shared the results with some donors. At the time we didn't release it because we were still chasing up giving data from 2013 and without having completed donation reporting for that year we didn't have that many years' worth of data to report (i.e. it couldn't say anything about switching to allowing online sign-ups). It also simply takes a lot of work to polish up that kind of analysis to make sure you aren't publishing errors.

It should be public at some point (as part of the impact evaluation Michelle is working on).

Glad to hear it!

This should make publishing the raw/cohort data more appealing - CEA doesn't have to actually do the analysis itself, just maintain data integrity, which it should be doing as a matter of course.