An approach to reconsidering a prediction

- separate what you believe about the situation from what it might actually be.

- ignore the relative likelihood of the situation's consequences.

- consider the list of consequences (events) independently of each other, as endpoints of separate pathways.

- backtrack from those separate pathways to new ideas of the Actual Situation.

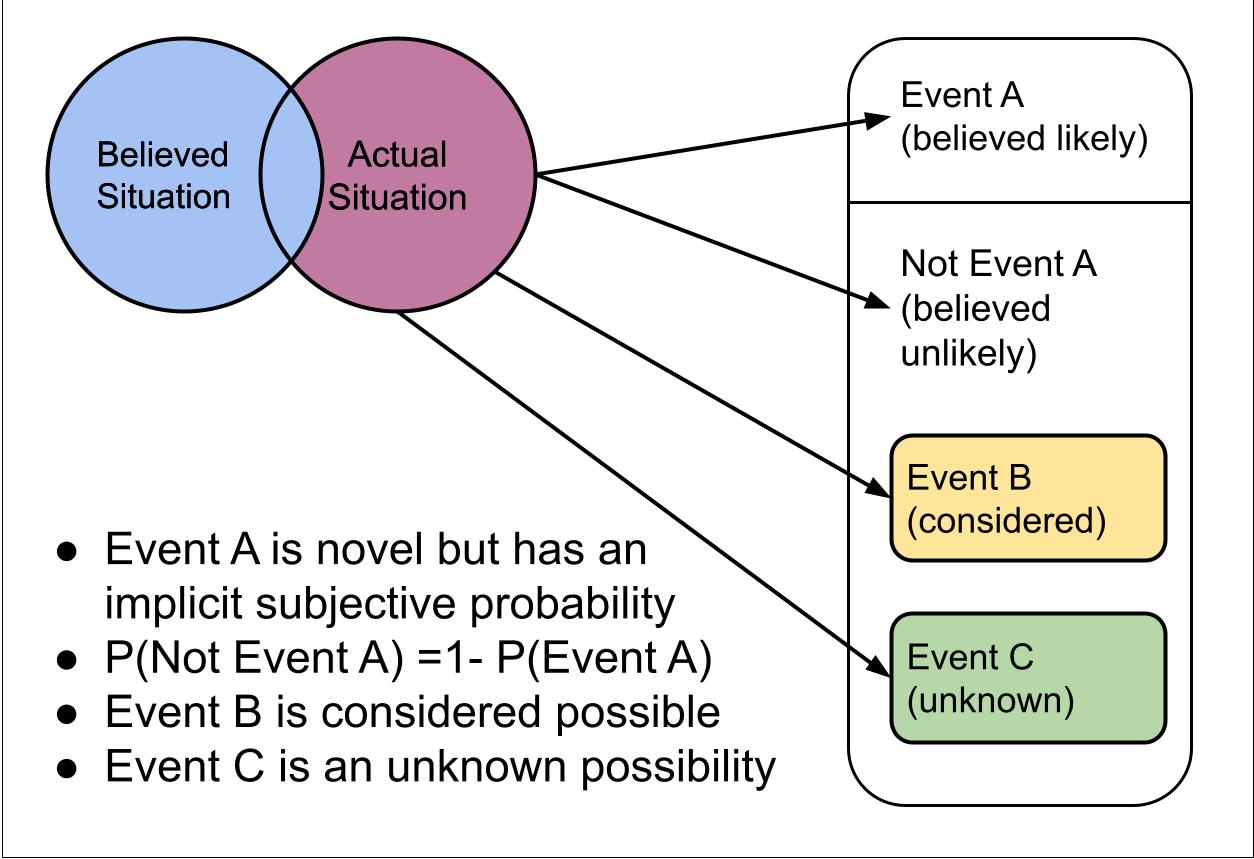

You can use the summary graphic below to help you follow those steps.

A graphic representing a prediction

Here's my simple model of the result of a prediction. EA folks have very sophisticated models for making predictions, and this is my simple summary of their result.

Caveats if you use this approach as a cognitive aid

Ignore whether Event A:

- occurs over short or long periods of time

- is anchored to a calendar date

- is repeated after the Believed Situation is believed to recur

The most you can know, if Event A is novel, is that:

- Event A or an event Not A will occur.

- Event A's actual likelihood is unknown.

- Event Not A could be any of several events of unknown likelihood.

A few EA-relevant predictions to reconsider

EA-relevant predictions that suit this approach include:

- Believed Situation: companies working on automating human cognitive and physical abilities through (robot-enabled) advanced AGI develop the technology.

  - Event A: society adjusts successfully to the enormous prosperity and technological capability that advanced AI enables.

  - Event B: AGI kills us all.

- Believed Situation: society reaches a point where CO2 emissions and other anthropogenic forces of environmental destruction pose an existential threat.

  - Event A: governments respond in a timely and effective fashion to prevent additional (and greater) extremes of climate events and biodiversity loss through regulation, economic incentives, geo-engineering, and other advanced technology.

  - Event B: climate change destroys civilization.

- Believed Situation: a worldwide pandemic, more dangerous than SARS-CoV-2, threatens to emerge from natural or man-made sources.

  - Event A: governments respond with regulation and other tools (all applied knowledgeably and responsibly) to prevent pandemics from all sources.

  - Event B: the horrible virus causes a massive pandemic.

- ...and so on

Application to an Extreme Heat Wave Scenario

Here is a scenario:

- Believed Situation: extreme heat waves hit China and kill 10 million people in a year.

- Event A: world governments rally to reduce anthropogenic emissions to zero quickly.

- Event B: the world focuses on cheap body-cooling tech for people without air conditioning.

I made up an Event B and will pretend that most people believe it is the less likely alternative to Event A. Who knows what most people would actually consider less likely? But what about an Event C?

There are many possibilities you or I can imagine for what happens after such a terrible heat wave. As part of speculating, you can change your beliefs about the actual situation.

- Event C: China covers up the true extent of the deaths. The actual number is close to 15,000,000 dead, but half of those died in botched disaster relief efforts caused by medical supply chain issues and lousy policy recommendations made in the first days of the heat wave.

or

- Event C: 10,000,000 people died in one year's heat waves despite heroic, wise and strong efforts by the Chinese government (many more people would have died if not for the government's effective response), so China deploys aerosol geo-engineering locally, creating a conflict with another country (maybe India).

or

- Event C: China plans a return to coal production to guarantee power after the combination of a drought and killer heat wave led to migration out of some manufacturing hubs. The coal production provides additional power needed for more AC in those cities and China works to bring people back. Outright hostile UN discussions begin over how to reduce impacts of climate change and several countries inform the global community that they will unilaterally employ aerosol geo-engineering as a short-term heat wave mitigation measure.
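For readers who like to keep such lists in code, here is a minimal Python sketch of the bookkeeping, under stated assumptions: each event is stored as the endpoint of its own pathway with no probability attached, and "backtracking" just means writing down, by hand, the revised Actual Situation that would lead to that event. The names `Scenario`, `Pathway`, `add_event`, and `alternative_situations` are hypothetical, made up for illustration; the judgment involved in backtracking still comes from you, not from the code.

```python
from dataclasses import dataclass, field


@dataclass
class Pathway:
    """One event, treated as the endpoint of its own pathway (no probability)."""
    event: str
    # Revised ideas of the Actual Situation that would lead to this event,
    # written down by hand while backtracking (hypothetical field).
    implied_situations: list[str] = field(default_factory=list)


@dataclass
class Scenario:
    believed_situation: str
    pathways: list[Pathway] = field(default_factory=list)

    def add_event(self, event: str, *implied: str) -> None:
        # Each event gets its own pathway; events are never ranked or weighted.
        self.pathways.append(Pathway(event, list(implied)))

    def backtrack(self) -> dict[str, list[str]]:
        # Map each event back to the situations that would explain it.
        return {p.event: p.implied_situations for p in self.pathways}

    def alternative_situations(self) -> list[str]:
        # Every revised idea of the present that differs from the belief,
        # in the order recorded, without duplicates.
        found: list[str] = []
        for p in self.pathways:
            for s in p.implied_situations:
                if s != self.believed_situation and s not in found:
                    found.append(s)
        return found


# Usage with the heat wave scenario above (wording shortened).
heat_wave = Scenario("Extreme heat waves hit China and kill 10 million people in a year.")
heat_wave.add_event("World governments rally to cut emissions to zero quickly.")
heat_wave.add_event("The world focuses on cheap body-cooling tech.")
heat_wave.add_event(
    "China covers up the true extent of the deaths.",
    "About 15 million died, half of them in botched disaster relief.",
)
heat_wave.add_event(
    "China deploys aerosol geo-engineering locally.",
    "10 million died despite a heroic, effective government response.",
)
heat_wave.add_event(
    "China plans a return to coal production.",
    "A drought plus the heat wave drove migration out of manufacturing hubs.",
)

for situation in heat_wave.alternative_situations():
    print(situation)
```

Leaving out any probability field is the point of this design: the structure cannot rank the events, so the only thing it can show you is which ideas of the present you have written down besides the one you started with.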

Conclusion: you can reconsider your risk predictions anytime

So, EA folks typically have an Event B that is an existential or extinction threat. For most effective altruists, these Event B alternatives are unlikely (for example, a 1/10 chance of extinction because AGI runs amok).

Here are my suggestions for broadening effective altruist models of risk. It might help EA folks to:

- look skeptically at their Event A, the likely (and positive) future, and find its flaws, supposing that the actual situation were different from what they believe.

- make up some Event C's, alternatives to Event A and Event B, supposing that you don't know the likelihood of any Event, but want to consider all plausible ones.

- Finally, suppose that an Event C were the most likely event and then explore what that implies about the current actual situation.

A few final words

The cognitive aid I offered here is just meant to make you think a little harder, not about likelihood values but about the list of events you consider potential consequences of the current situation. Broadening that list can help you feel greater uncertainty, and that feeling can guide you away from the obvious mistakes that unwarranted confidence in the future causes.

Yes, usually people with strong beliefs are thought to be the overconfident ones, but I think that exploring the possible without the constraint of partial credences can also offer relief from unwarranted confidence. You might worry that responding to such uncertainty without constraining its alternatives numerically (even if the probability numbers are made up) results in distress and indecision. However, real situations of broad uncertainty are precisely when denial tends to kick in and opportunities for positive change are ignored.

Concentrating on how to feel more positive expectations of the future is actually dangerous in situations of broad uncertainty. Concentrating on mapping out the steps to the alternatives and continuing to check whether you're still on the pathway to one or another alternative is wiser. You're looking for ways to get your pathway to quickly diverge away from negative futures and toward positive ones rather than remain ambiguous. Your uncertainty about which pathway you are on is your motive to find a quick and early divergence toward a positive future. So long as there's great uncertainty, there's great motivation.

I have offered a few ideas of how to respond with unweighted beliefs, for anyone interested. This post's material will show up in that document.

Can you give examples of how these techniques helped you be better at forecasting?

In lieu of this, my own opinion is that this is not a good way to become a better forecaster. I'd recommend that people instead a) read notes on Superforecasting, b) read posts with the forecasting tag on the Forum, c) concretely practice forecasting skills by actually doing forecasts, d) do calibration exercises online, and e) build various Fermi models for questions of interest.

Forecasting is at a pretty nascent and preparadigmatic stage, so there's still a lot of uncertainties left. Nonetheless, I do not think we're entirely lost, and my best guess is that most people who want to be better at forecasting can gain more from reading the existing literature and doing the known exercises than not doing this.

An answer to forecasting

Hmm, what has helped me be a better forecaster is developing a small, useful ontology in areas that interest me and then researching to validate the specifics of it that lead quickly to useful predictions. I match ontological relations to research information to develop useful expectations about my current pathway.

As far as predictions relevant to EA's bigger-picture doomy stuff, uhh, I have done a bit of forecasting (on climate at gjopen; you'll have to browse my history there) and a bit of backcasting (with results that might not fit the forum's content guidelines), but without having any impact by doing so, as far as I know.

Linch, if you look at what I'm describing, it's not far from combining forward chaining to scenarios and backward chaining from scenarios, and people do that all the time. As far as how it helps my forecasting abilities, it doesn't. It helps my planning abilities.

That said, I'm drawn to convenient mathematical methods to simplify complex estimates and want to learn more about them, and I am not trying to subvert forecasters or their methods. However, overall, the use of probability in forecasting mostly upsets me. I see the failure that it has meant for climate change policy, for example, and I get upset[1]. But I think it's cool for simpler stuff with better evidence that doesn't involve existential or similar risks.

The rest of this is not specifically written for you, Linch, though it is a general response to your statements. I suspect that your words reflect a consensus view and common misinterpretation of what I wrote. Some EA community members are focused on subjective probabilities and forecasting of novel one-off extreme negative events. They take it seriously. I get that. If my ideas matter so little, I'm confident that they will ignore them.

EAs can use their forecast models to develop plans

To me, a prediction is meant to convey confidence in a particular outcome, that is, confidence that one event from a list of several will occur, given the current situation. Considering all possible future events as uncertain (unknown in their relative probability) is useful, as I wrote, for creating a broad uncertainty about the future. Doing so is not forecasting, obviously. However, for EAs, what I suggest does apply to the whole point of your work in forecasting.

You folks work on serious forecasts of novel events (like an existential threat to humanity, what you sometimes call a "tail risk") because you want to avoid them. Without experience of such situations in the past, you can only rely on models (ontologies) to guide your forecasts. Those models have useful information for planning in them, indirectly.[2]

Remember to dislike ambiguous pathways into the future

The problem I think any ethical group (such as the EA community) working to save humanity faces is that it can seem committed, in some respects, to ambiguous pathways that allow for both positive and negative futures.

As is, our pathways into the future are ambiguous, or are they? If they are not, then belief in some future possibilities drops to (effectively) zero or rises to one hundred percent. That's how you know that your pathway is unambiguously going toward some specific future. Apparently, for EA folks, our pathways remain ambiguous with respect to many negative futures. You have special names for them: extinction risks, existential risks, suffering risks, and so on.

Use ontologies that inform your forecasts, but use them to create plans

My post was getting at how to use the ontologies that inform your forecasts, but in as simple terms as possible. But here is another formulation:

To do that, you might have to change your understanding of what you believe about the current situation and the likelihood of alternative futures (for example, by arbitrarily reversing those likelihoods and backtracking).[3]

An EA shortcut to broad uncertainty about the future

A lot of outcomes are not considered "real" for people until they have some sort of pivotal experience that changes their perspective. By flip-flopping the probabilities that you expect (for example, 98% chance of human extinction from AGI), you give yourself permission to ask:

"What would that mean about the present, if that particular future were so likely?[4]"

Now, I think the way that some people would respond to that question is:

"Well, the disaster must have already happened and I just don't know about it in this hypothetical present."

If that's all you've got, then it is helpful to keep a present frame of reference, and go looking for different ideas of the present and the likely future from others. Your own mental filtering and sorting just won't let you answer the question well, even as a hypothetical, so find someone else who really thinks differently than you do.

"Broad uncertainty" does not imply that you think we are all doomed. However, since the chronic choice in EA is to think of unlikely doomish events as important, broad uncertainty about the future is a temporary solution. One way to get there is to temporarily increase your idea of the likelihood of those doomish events, and backtrack to what that must mean about the present[5]. By responding to broad uncertainty you can make plans and carry out actions before you resolve any amount of the uncertainty.

Using precondition information to change the future, in detail

Once you collect information about preconditions for the various positive or negative events:

Then, ideally, you, your group, your country, your global society, make that pathway change instead of procrastinating on that change and stressing a lot of people out.

Conclusion

So, what I presented earlier was just shortform-quality work on what could be called modeling, but is not forecasting. It is much simpler.

It's just a way to temporarily (only temporarily, because I know EAs love their subjective probabilities) withdraw from assigning probabilities to novel future events, events without earlier similar events to inform you of their likelihood.

It is important to return to your forecasts, take the list of compared alternative events in those forecasts, and learn more about how to avoid unfavorable alternatives, all while ignoring their likelihood. Those likelihood numbers could make you less effective, either by relieving you or discouraging you when neither's appropriate.

[1] Policy-makers choose present and short-term interests repeatedly over longer-term concerns. In the case of climate change and environmental concerns in general, the last 50 years of policy have effectively doomed humanity to a difficult 21st century.

[2] Not the prediction probabilities; those should be thrown out, for the simple reason that, even if they are accurate, when you're talking about the extinction of humanity, for example, the difference between a 1% and a 10% chance is not relevant to planning against the possibility.

[3] Like most people, you could wonder what the point is of pretending that the unlikely is very likely. Well, what is the likelihood, in your mind, of having poor-quality information about the present situation?

[4] Rather than having such an experience yourself (for example, in the case of climate change, helplessly watching a beloved family member perish in a heat wave), you just temporarily pretend that future extreme climatic events are actually very likely.

[5] When you backtrack, you are going through your pathway in reverse, from the future to the present. You study how you got to where you ended up from where you are right now. You can collect this information from others. If they predict doom, how did it happen? From that, you learn about what steps not to take going forward.