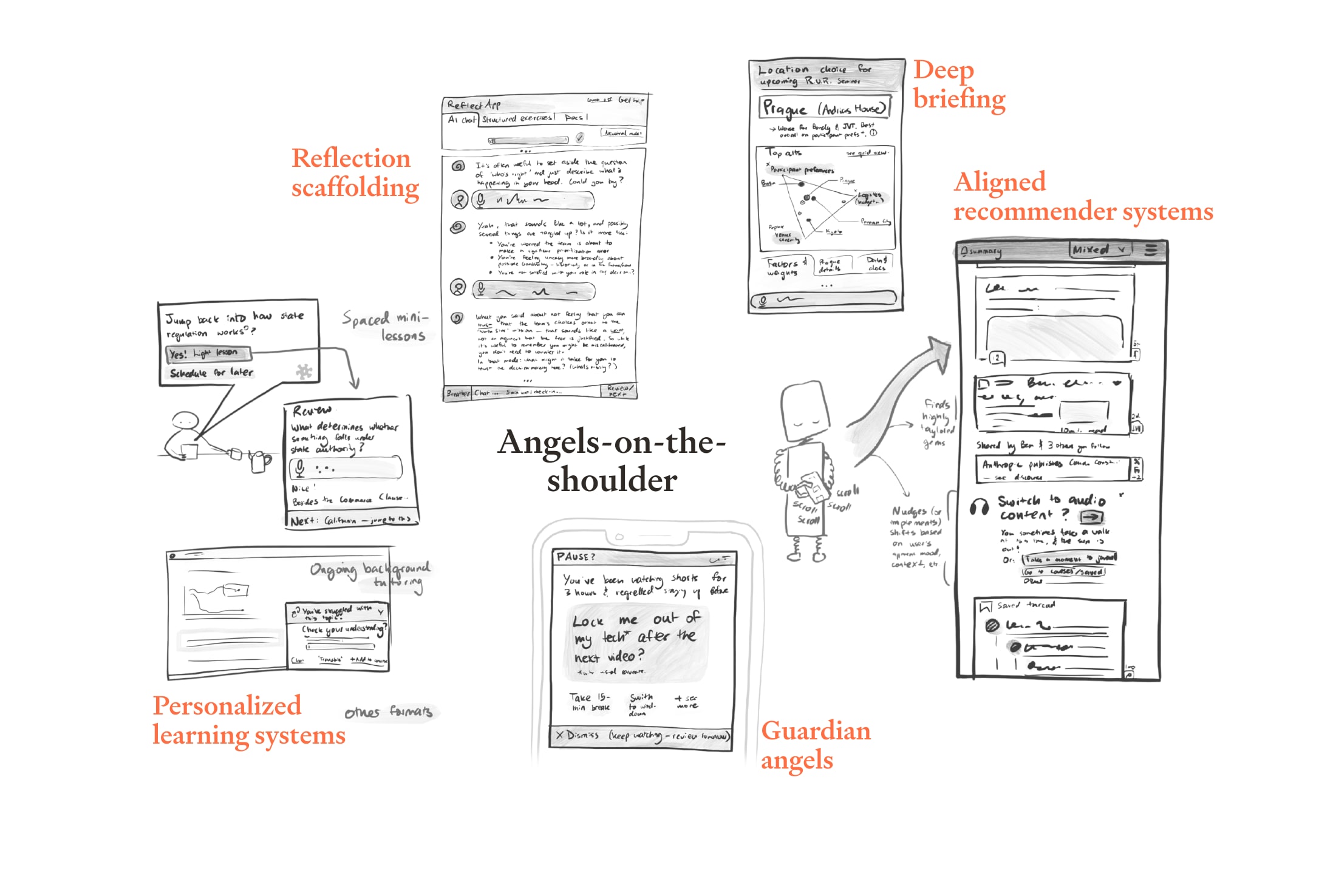

We’ve recently published a set of design sketches for technological analogues to ‘angels-on-the-shoulder’: customized tools that leverage near-term AI systems to help people better navigate their environments and handle tricky situations in ways they’ll feel good about later.

We think that these tools could be quite important:

- In general, we expect angels-on-the-shoulder to lead to more endorsed decisions and fewer unforced errors.

- In the context of the transition to more advanced AI systems that we now face, this could be a huge deal. We think that people who are better informed, more situationally aware, more in touch with their own values, and less prone to obvious errors are more likely to handle the coming decades well.

We’re excited for people to build tools that help make this happen, and we hope that our design sketches will make this area more concrete and inspire people to get started.

The (overly-)specific technologies we sketch out are:

- Aligned recommender systems — Most people consume content recommended to them by algorithms trained not to drive short-term engagement, but to earn long-term user endorsement and serve users’ considered values

- Personalised learning systems — When people want to learn about (or keep up-to-date on) a topic or area of work, they can get a personalised “curriculum” (that’s high quality, adapted to their preferences, and built around gaps in their knowledge) integrated into their routines, so learning is effective and feels effortless

- Deep briefing — Anyone facing a decision can quickly get a summary of the key considerations and tradeoffs (in whichever format works best for them), of the kind an expert, high-context assistant would compile, with the ability to double-click on the parts they most want to know more about

- Reflection scaffolding — People who are thinking through situations they find tricky, or who want to better understand themselves or pursue personal growth, can do so with the help of an expert system that acts as an infinitely patient, always-available Socratic coach: it picks up on what may matter to the person from their choice of words or tone of voice, asks probing questions, and pushes back where that would be helpful

- Guardian angels — Many people use systems that flag when they might be about to do something they could seriously regret, and that help them think through what they endorse and actually want to do (as an expert coach might)

If you have ideas for how to implement these technologies, issues we may not have spotted, or visions for other tools in this space, we’d love to hear them.

This article was created by Forethought. Read the full article on our website.

Very cool. I’m a perpetual optimist about the potential of AI tools to improve decision-making and how people reason about and interact with the world.

There is a huge risk of incentive misalignment with any such tools (i.e. good reasoning and error reduction often aren’t well rewarded in most professional contexts).

For these to work, I strongly believe the integration method is critical. A standalone platform or app, or anything that people have to proactively engage with, is going to struggle, I fear.

Something that works with organisations and groups to build better incentives would, I feel, be high impact.