Thank you to Tyler Johnson for his comments. All mistakes are my own or Shoshana Zuboff's. Though mainly mine.

Executive Summary

This piece analyzes lessons for AI Governance research, based on the case studies of Google and Facebook in the early 2000s. I draw particularly on Zuboff’s The Age of Surveillance Capitalism, which I think is a flawed but useful book.

Looking at Google/Facebook in the early 2000s is a useful analogy for AI development because:

(I) similar “Big Tech” firms have significant control over AI Labs;

(II) today’s AI entrepreneurs have remarkably similar incentives and worldviews to the internet/social media pioneers of the 2000s.

Findings:

- Google’s business model shifted towards targeted advertising (in part) because of financial pressures after the Dot-Com Crash. This seems to parallel OpenAI’s move towards commercialisation in 2019. Technology companies founded with aspirational intentions can be sensitive to financial pressures.

- 9/11 and ‘national security’ rhetoric contributed to (I) expanded state control over the internet; and (II) weaker consumer protections. The former seems to already be occurring with AI development; I worry the latter may occur too.

- ‘Best practices’ for M-Health/Facial Recognition seem inadequate for protecting consumer privacy, though they may have had a positive counterfactual impact relative to no regulation at all.

Introduction

Recently, I read The Age of Surveillance Capitalism by Shoshana Zuboff. I think it has some interesting lessons for AI Governance researchers.

Zuboff looks at the twin case studies of Google and Facebook, and argues that their business models are driven by novel “surveillance imperatives”. In this piece, I try to draw lessons for AI Governance researchers from the book, whilst side-stepping a critical examination of the book’s key claims. I do not intend this piece to be a book review. Surveillance Capitalism was released several years ago; there are already excellent book reviews (e.g. Morozov) and even a review of reviews by Jansen.

This is not to say that, given the book’s critical acclaim, we can simply assume its conclusions. There are several key problems with the book.

Zuboff describes the loss of privacy from targeted advertising in extremely strong, emotive terms (e.g. Facebook users are like abandoned elephant carcasses). But she doesn’t draw a clear line between “surveillance capitalism” and ordinary (nice) capitalism, which uses data to improve goods/services. So, when Amazon uses Kindle data to recommend books to users, are they being nice capitalists, or evil surveillance capitalists? It’s unclear. Additionally, while the book is almost 700 pages, the policy solutions are pretty thin. A couple of pages are dedicated to describing artistic movements. And finally, Zuboff doesn’t seem to engage much with prior relevant scholarship.

So in spite of all these problems, why is the book useful for AI Governance researchers? Surveillance Capitalism provides useful (and detailed) case studies into Google and Facebook in the early 2000s. I think this is a useful analogy because of two (fairly independent) reasons:

(I) similar “Big Tech” firms have significant control over AI Labs;

(II) today’s AI entrepreneurs have remarkably similar incentives and worldviews to the internet/social media pioneers of the 2000s.

Let me outline both.

I) Big Tech’s Influence Over AI Labs

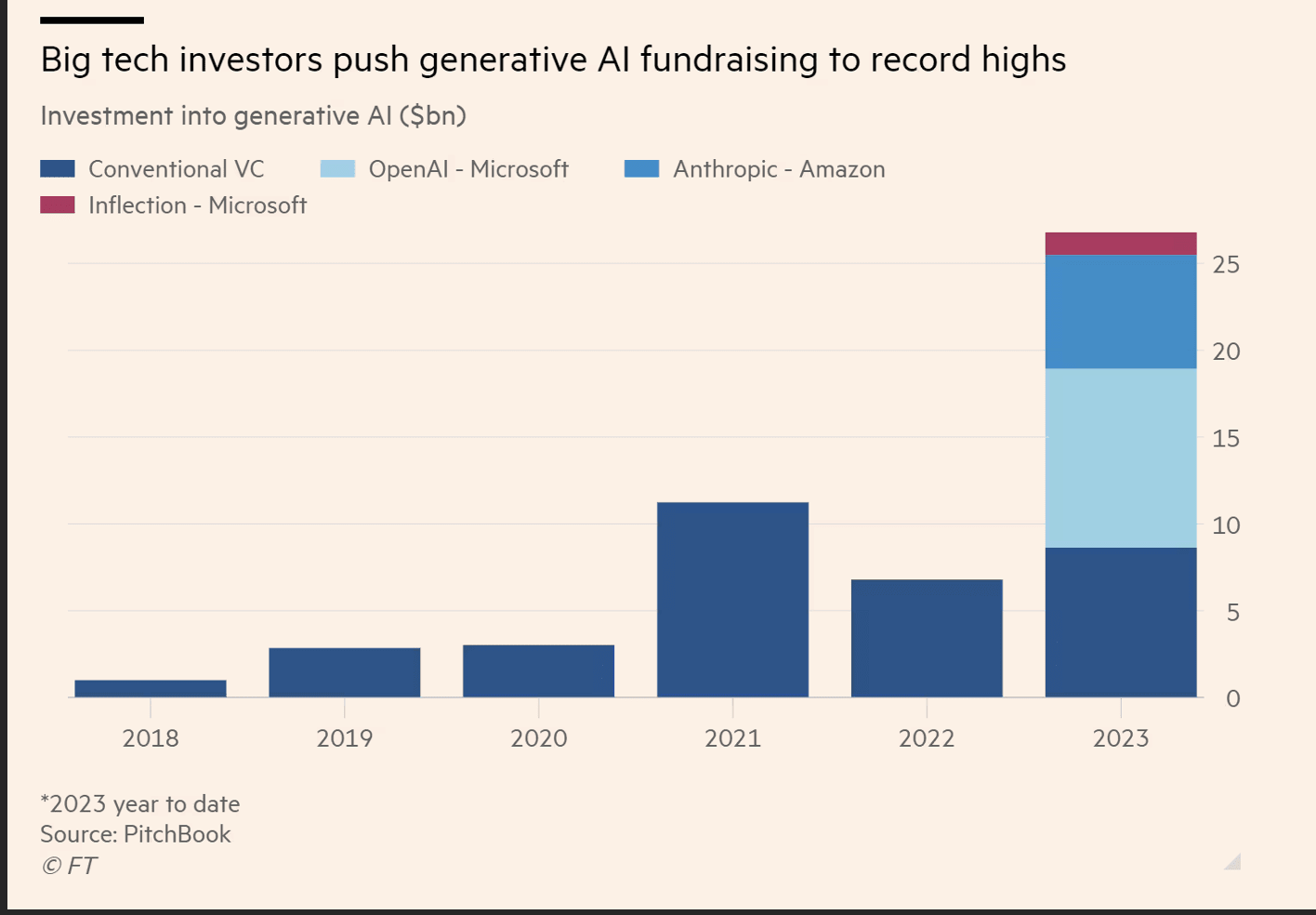

Microsoft’s influence over OpenAI was pretty visible in the recent Sam Altman saga (more on this below). The fraction of investment from Microsoft and Amazon into Generative AI is increasing and now exceeds conventional Venture Capital fundraising. I recommend Meredith Whittaker’s piece for a strong formulation of this claim.

Perhaps Google/Facebook have relatively less influence than Microsoft or Amazon. Though, perhaps they all fit into a similar reference class of ‘large technology firms’. So, by studying Google/Facebook in the early 2000s, we can learn how Microsoft/Amazon are likely to direct AI development.

From: https://www.ft.com/content/c6b47d24-b435-4f41-b197-2d826cce9532

II) AI entrepreneurs and internet pioneers are similar agents

In addition, AI entrepreneurs and internet pioneers are similar agents, with similar commercial incentives surrounding similar technologies.

Firstly, the internet is a useful analogue for AI. Both are described as ‘general-purpose technologies’ (GPTs). Elsewhere I have argued that looking at other GPTs isn’t a very useful way to make analogies to AI development. Many GPTs had very different incentives behind their development: for example, there was no paleolithic Sam Altman who pioneered the domestication of animals. Instead, I think the salient fact about AI development is that there are strong commercial and geopolitical incentives driving it. Similar incentives were at play for Google and Facebook in the early 2000s. Google’s revenue was almost $1 billion in 2003. Security services became particularly interested in the internet as a strategic asset after 9/11 (see below).

Additionally, AI developers and internet pioneers had similar worldviews. Today’s developers often describe the emergence of AI as an inevitability. Mustafa Suleyman’s book describes AI development as “The Coming Wave”. In an interview with the Economist, Sam Altman suggested there’s no “magic red button” to stop AI. As described by Zuboff, “inevitabilism” was also prominent amongst the executives of the nascent internet. For example, former Google executives Eric Schmidt and Jared Cohen write that “soon everyone on Earth will be connected”, and warn against “bemoaning the inevitable”. Mark Zuckerberg described Facebook’s/Meta’s mission in evolutionary terms: the next phase of social evolution will be a “global community,” facilitated by Facebook.

Further, AI developers envision AI development as potentially heralding utopia, notwithstanding existential risk. (Interestingly, inevitability rhetoric seems to be a general feature of modern utopianism.)[1] OpenAI’s mission statement imagines a utopian future: “a world where all of us have access to help with almost any cognitive task”. Satya Nadella describes the default scenario of continued AI development simply: “it’s utopia”. Zuboff describes the same worldview among Facebook/Google executives. Eric Schmidt argues, “Technology is now on the cusp of taking us into a magical age”; Larry Page suggests future society will enjoy total “abundance”; and Mark Zuckerberg wrote “a lot more of us are gonna do what today is considered the arts”.

Even if you don’t believe that “Big Tech” firms have any significant control over AI, it seems difficult not to agree that today’s AI entrepreneurs are similar agents in similar circumstances to the internet/social media pioneers. Overall, therefore, I expect that studying Facebook/Google should yield some useful lessons for how AI development will unfold, whether directed by AI developers or by “Big Tech”. Hopefully, this qualitative research provides a useful complement to existing quantitative research on AI competition (e.g. from Armstrong et al., here). (Another interesting question is whether private surveillance contributes to suffering risks, or risks from stable totalitarianism. This won’t be discussed here.)

This piece proceeds as follows: first, I describe the case study of Google after the ‘dot-com crash’; next, I describe how the heightened “securitised” political landscape influenced Google; finally, I describe how Facebook/Google approached voluntary safeguards.

My research here is pretty provisional. Please leave comments if you believe I have made any oversights.

A) Aspirational Tech Companies Have Slippery Business Models

Zuboff argues that asking Google to start honoring consumer privacy is “like asking Henry Ford to make each Model T by hand or asking a giraffe to shorten its neck.” We should be skeptical of this maximalist rhetoric.[2] However, a weaker version of Zuboff’s claim – e.g. financial pressures provide strong incentives for technology companies to move towards certain business models – is plausible. The case study of Google in the early 2000s is particularly clear.

Google was founded with aspirational intentions: early Google executives believed internet technology would bring about a techno-utopia (see above). The motto “Don’t Be Evil”, which later formed part of Google’s Corporate Code of Conduct, had its roots in the early 2000s: according to Google employee Paul Buchheit, the slogan was "a bit of a jab at a lot of the other companies … who at the time, in our opinion, were kind of exploiting the users to some extent".

For the first couple of years after its founding in 1998, Google’s revenues came mainly from licensing deals – providing web services to Yahoo! or BIGLOBE – with modest revenue from sponsored ads linked to keywords in searches. Competitors like Overture and Inktomi had different models, and allowed advertisers to pay for high-ranking listings. Founders Sergey Brin and Larry Page seemed opposed to an advertising-funded business model: in their seminal 1998 paper, which introduced the Google search engine, they wrote, "We expect that advertising funded search engines will be inherently biased towards the advertisers and away from the needs of the consumers... it is crucial to have a competitive search engine that is transparent and in the academic realm."

What changed? Zuboff identifies the dot-com crash (March 2000) as a key turning point. According to one commentator, "The VCs were screaming bloody murder": Google was running out of cash. In response, Google’s founders tasked the AdWords team (AdWords being the predecessor to Google Ads) with finding ways of increasing revenue. Brin and Page hired Eric Schmidt as CEO to pivot towards profit via a “belt-tightening” program. The outcome? Targeted advertising. Instead of linking adverts to keywords, AdWords linked adverts to users, with pricing depending upon the ad’s position on the search results page and the likelihood of clicks.

Zuboff’s explanation here is slightly unclear. She wants to be analytical in describing the influence of “imperatives” of surveillance capitalism on Google, but as noted elsewhere, her writing sometimes seems descriptive or tautological. [3]

Again, however, Zuboff’s argument can be saved by being watered down slightly. A more general interpretation here could be that without the Dot-Com Crash, it is less likely that Google would have transitioned to targeted advertising, or that any transition would have occurred later. So, the overall story is of a large digital company moving away from the aspirational intentions of its founders, based on financial realities.

Does this sound familiar?

OpenAI was founded as a non-profit in 2015 by Sam Altman and a group of Silicon Valley investors, including Elon Musk. However, by 2019, only around a tenth of the initial $1bn pledged had materialized. OpenAI needed more capital. So in 2019, it created a “capped-profit” subsidiary (OpenAI LP) in order to attract top AI talent and increase its compute investments. OpenAI’s techno-utopian “mission”, a word that appears 14 times in the announcement post, features front and center: “ensuring the creation and adoption of safe and beneficial AGI for all”.

OpenAI’s move towards commercialisation had significant knock-on effects during the Sam Altman Saga of November 2023. (For those who are somehow unaware, Altman was fired as CEO of OpenAI for not being “consistently candid”; after backlash from workers and investors, Altman was reinstated 5 days later).

Firstly, OpenAI’s commercialisation gave significantly more influence to Microsoft. Microsoft had committed to invest $13 billion into OpenAI, of which OpenAI had likely received only a fraction by November. Microsoft used this pending investment as leverage to call for Altman’s reinstatement. Additionally, from 2019 Microsoft possessed rights to OpenAI’s intellectual property for pre-AGI technology. Given this IP control, Microsoft CEO Satya Nadella could credibly threaten to recreate OpenAI within Microsoft.

Secondly, commercialisation of OpenAI gave its employees more financial interest in higher profitability – OpenAI started giving stock options to employees. Within days of Altman’s firing, 738 out of OpenAI’s around 770 employees had signed an open letter demanding his return. It seems like loyalty to Altman’s leadership and to OpenAI’s mission were important contributors. However, financial incentives likely also played some role. OpenAI was in discussions to sell existing employees’ shares at an $86 billion valuation. As one OpenAI staffer posted, "Everyone knew that their money was tied to Sam. They acted in their best interest."

The commercialisation of OpenAI in 2019 seems to have had a significant counterfactual impact on Altman’s reinstatement as CEO, and the subsequent restructuring of OpenAI’s independent board. In contrast, in conversations, EAs seem to emphasize different contingent factors. Perhaps the key problem was the Board’s “PR”, and if only they had publicly explained what not being “consistently candid” actually meant. Or perhaps it was down to bad corporate design. Both factors seem plausible. But, the ‘inside view’ should also include the historical influence of OpenAI’s move towards commercialisation in 2019. (This is in no way a new point).

The more novel point I wish to make is that OpenAI’s corporate restructuring is not unprecedented for a technology firm founded with aspirational goals. Financial pressures pushed Google towards targeted advertising. I’m not entirely sure what follows from this. Google only constitutes a reference class of 1. Perhaps the more general pattern is that, for companies with quasi-utopian goals, the ends can justify the means: major, unintended changes in direction are necessitated by the broader benefits for all of humanity. If this is true, and if existing corporate structures (e.g. OpenAI’s Operating Agreement) become major impediments to profit, perhaps we should expect further changes to OpenAI’s structure.

B) Beware Securitisation

Alongside the Dot-Com Crash, Zuboff cites a second crucial factor which encouraged Google to shift towards a targeted advertising business model, unconstrained by regulation: 9/11. Prior to 9/11, the Federal Trade Commission (FTC) was edging towards privacy-protecting legislation: a report from 2000 recommended federal legislation, as “self-regulatory initiatives to date fall far short”. However, as noted by Peter Swire, "everything changed" after 9/11, with privacy provisions disappearing overnight. The Patriot Act and other measures expanded collection of personal information. FTC privacy guidelines shifted from the broader concerns of privacy rights to a “harms-based” strategy: in effect, privacy rights only mattered if there were concrete physical harms (e.g. identity theft) which could be identified.

These changes were part of a broader trend, in which the internet became increasingly interconnected with perceptions of “national security” and security services. This fits the model of “securitization” from International Relations: a process in which state actors transform subjects from regular political issues into matters of "security". Former CIA Director Michael Hayden describes the "militarization of the world wide web" post-9/11: “What 9/11 did was to produce socially negative consequences that hitherto were the stuff of repressive regimes and dystopian novels.... The suspension of normal conditions is justified with reference to the ‘war on terrorism.’” Alongside a weakening of privacy regulation, there was cross-pollination between the private and public sectors: Google was awarded contracts for search technology by the NSA and CIA, and its systems were used for information sharing between intelligence agencies; and the NSA started to develop its own “Google-like” tools, including ICREACH, a search tool for analyzing metadata. The US PRISM program was implemented, using automated processes to identify terrorist content online using data from US internet and social media companies. As Burton writes, these trends illustrate “some of the key tenets of securitization theory: that states will use and implement special measures, including technological solutions, to what are seen as existential threats to the state”.

Concerningly, AI is increasingly being framed in terms of existential security, both in the West and China. A study from CSET analyzes the rhetorical framing of AI, using English-language articles from 2012 to 2019 which referenced “AI competition.” It found, “since 2012, a growing number of articles … have included the competition frame, but prevalence of the frame as a proportion of all AI articles peaked in 2015.” This national security framing is increasingly prevalent amongst US policymakers.

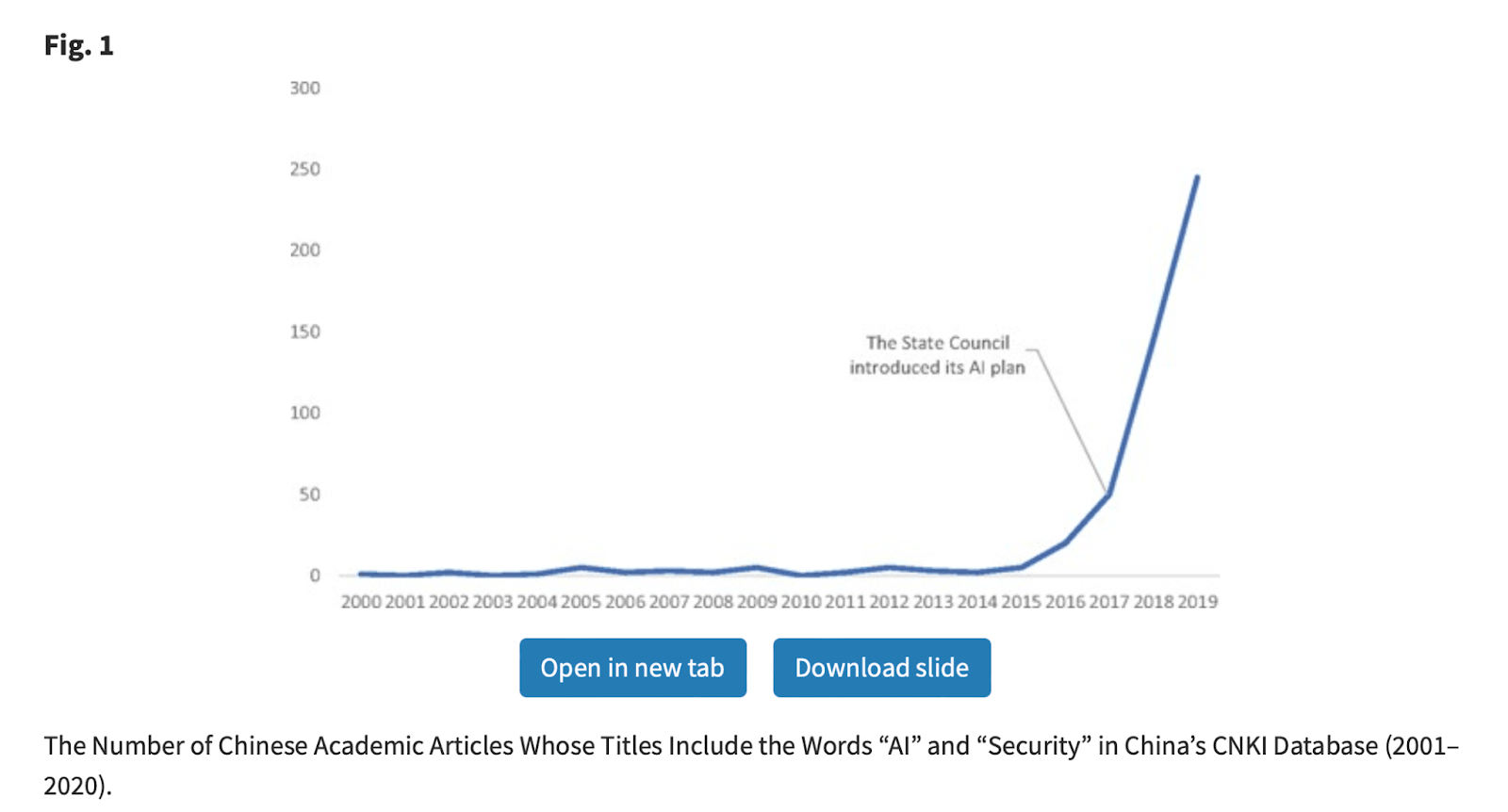

Similarly, national security rhetoric around AI has been particularly prominent since the State Council of China’s “New Generation Artificial Intelligence Development Plan” in 2017. This document cites “national security” 8 times, "national defense" 11 times, and “security” 48 times (Zheng). This language is also increasing rapidly in Chinese academic articles.

From: https://academic.oup.com/cjip/article/14/3/417/6352222?login=true

The ‘securitisation’ of the internet in the early 2000s, in part, expanded state control/influence over the internet. State investment into AI has been increasing in recent years, even if it falls short of the commonly used “arms-race” framing. Chinese state investment into AI was estimated at several billion dollars in 2019; the US Chips Act, which aimed to boost domestic US AI capability, cost $280 billion. Similarly to the ‘securitisation’ of the internet, it seems plausible that ‘national security’ rhetoric has contributed to increased state investment. Perhaps this isn’t intrinsically concerning, and all else equal, US federal investment into AI is good.

However, the ‘securitisation’ of the internet not only boosted state control, but also stopped progress on consumer-friendly regulation from the FTC. Could the securitisation of AI also lead to weaker protections for consumers? Some technology CEOs have already used national security rhetoric to argue against breaking apart their companies. Facebook CEO Mark Zuckerberg once warned that Chinese firms, who “do not share the values that we have”, would come to dominate, if US regulators try to “clip the wings of these [American] companies and make it so that it’s harder for them to operate in different places or they have to be smaller”. AI entrepreneurs don’t seem to universally repeat the same rhetoric: e.g. Sam Altman has called for increased global cooperation. (Although, in January 2024, OpenAI agreed to start collaborating with the Pentagon.)

What can we learn from the ‘securitisation’ of the internet? It shows that rhetoric around ‘national security’ can contribute to (I) expanded state control over technology; and (II) weaker consumer protections. The former seems to already be occurring with AI development. Perhaps the latter may occur too.

C) ‘Best practices’ have a mixed track record for protecting privacy

Another important takeaway from Surveillance Capitalism is a pair of prominent cases in which self-regulation failed to protect consumer privacy.

Take ‘M-health’ (Mobile-Health) tools. By 2016, there were over 100,000 m-health apps but no federal regulation surrounding their use. In April 2016, the FTC released ‘Best Practices’, encouraging developers to “make sure your app doesn’t access consumer information it doesn’t need,” “let consumers select particular contacts, rather than having your app request access to all user contacts,” and let users “choose privacy-protective default settings.” Zuboff argues these ‘best practices’ failed to stop M-Health companies sharing consumer data. A study, conducted by the Munk School, found that most fitness trackers sent activity data to servers. Similarly, an investigation of diabetes apps found they allowed automatic data collection by third parties, activated cameras and microphones, and had ineffective or absent privacy policies. The M-Health example shows companies failing to follow ‘best practices’ after they were agreed.

Zuboff also describes inadequate outcomes within the development of ‘best practices’. In 2015, the National Telecommunications and Information Administration (NTIA) tried to create guidelines for biometric information for facial recognition, consulting with various consumer advocacy groups and corporate groups. The corporate groups insisted on using facial recognition: as one lobbyist told the press, “Everyone has the right to take photographs in public... if someone wants to apply facial recognition, should they really need to get consent in advance?” Consumer advocates eventually left the talks. NTIA’s “Privacy Best Practice Recommendations” were eventually released in 2016. The results were pretty weak: companies who use facial recognition technology in “physical premises… [are] encouraged to provide … notice to consumers”. The Consumer Federation of America describes the recommendations as offering “no real protection for individuals.”

These two examples provide pretty clear instances of ‘best practices’ for digital companies providing less than adequate protection for consumers. Several countries have started to use ‘best practices’ to regulate AI: e.g. Japan's focus on "soft law"; or voluntary safeguards in the US. At first glance, the history of digital self-regulation/best practices might make you less optimistic about ‘best practices’ for AI. This seems to be the view of several consumer protection advocates. Several caveats are needed though. First, neither case directly involved “Big Tech” companies.[4] Secondly, they only represent two (potentially cherry-picked) examples. Notwithstanding these caveats, I cautiously agree that the historical examples make me more skeptical of ‘best practices’ for digital technologies: that is, the gulf between ‘best practices’ and actual regulation for consumer protection is wider than I assumed. But this doesn’t suggest that ‘best practices’ have no benefits relative to worlds in which they are not implemented at all.

A fairly clear indication of this comes from perhaps the part of Surveillance Capitalism which was most shocking to me, in which Zuboff describes Facebook’s mass experimentation into social networks and voting. In 2012, Facebook researchers published a study titled “A 61-Million-Person Experiment in Social Influence and Political Mobilization.” You can read it today. It involved manipulating the news feeds of Facebook users, to measure effects of “social contagion” on voting in the 2010 midterms. Facebook published a similar study in 2014, “Experimental Evidence of Massive-Scale Emotional Contagion Through Social Networks”, which altered the news feeds of almost 700,000 people to test whether this would change their own posting behavior. Between 2007 and 2014, Facebook's Data Science group ran hundreds of tests, with “few limits”.

Wild stuff. Does this show that ‘best practices’ have no benefits? You could argue the contrary. Prior to any public commitments, Facebook experimented at will. Following public backlash to the release of the 2014 study, Facebook announced a ‘new framework’ for research, which included internal guidelines and a review panel. I don’t think Facebook has carried out (public) mass-scale social experimentation since. Zuboff wants to present this case study as a failure of self-regulation – which it may have been, all things considered. (These internal ethics guidelines haven’t stopped Facebook Targeting Ads at Sad Teens, for example). But this doesn’t belie the claim that ‘best practices’ in 2014 might have had a positive counterfactual impact.

However, it's possible that ‘best practices’ might worsen the overall regulatory environment for consumers, if they legitimate undesirable behaviors, and make more stringent regulation less likely to happen. For example, NTIA guidelines for acceptable ‘commercial facial recognition’ might weaken public norms around the technology, and reduce the likelihood of a federal ban. This seems different for AI development, where AI labs have considered future conditional pauses to AI development – which seems more ambitious than current regulators’ plans, with regard to long-term AI governance.

In short, the examples of ‘best practices’ surrounding M-Health tools and facial recognition make me more skeptical of self-regulation relative to actual regulation. Whether or not, counterfactually, ‘best practices’ improve the situation for consumers depends on how ‘best practices’ influence actual regulation.

[1] Zuboff cites Frank and Fritzie Manuel who write, “since the end of the eighteenth century the predictive utopia has become a major form of imaginative thought... the contemporary utopia... binds past, present, and future together as though fated.” Karl Marx, described as the last great utopian thinker, wrote in the Communist Manifesto, “What the bourgeoisie, therefore, produces, above all, is its own grave-diggers. Its fall and the victory of the proletariat are equally inevitable.”

[2] There are some examples of Big Tech companies responding to public or internal pressure: e.g. YouTube’s adjustment to its algorithms in 2019, or Google stepping away from Project Maven in 2018.

[3] These three interpretations respond to 3 “Theses” from Morozov. https://thebaffler.com/latest/capitalisms-new-clothes-morozov

Interpretation I (Descriptive): Surveillance capitalism is a description of current data practices by companies like Google and Facebook, with limited theoretical ambition.

E.g. “In the years following its IPO in 2004, Google’s spectacular financial breakthrough first astonished and then magnetized the online world … When Google’s financial results went public, the hunt for mythic treasure was officially over”

Interpretation II (Analytical): A more radical hypothesis that surveillance capitalism represents a new, dominant form of capitalism, changing the basic mechanisms and imperatives of the economic system.

E.g. the word “imperative” appears over 100 times in Surveillance Capitalism

Interpretation III (Tautological): Surveillance capitalists engage in surveillance capitalism because the system's imperatives demand it.

[4] Facebook/Google likely did not have major interests in M-Health in 2016. Similarly, industry groups which were involved in the NTIA biometric guidelines, e.g. the International Biometrics Industry Association, don’t seem closely tied to Big Tech.

Thanks for writing this Charlie, this was great

Thank you Nathan!!