Disclaimer:

This piece came out of a Summer Research Project with the Existential Risk Alliance. This section was intended to be part of an introduction for an academic article, which mutated into a stand-alone piece. My methodology was shallow dives (2-4 hours of research) into each case study. I am not certain about my reading of these case studies, and there may be some important historical factors which I’ve overlooked. If you have expertise about any one of these case studies, please tell me what I’ve missed! For disclosure: I have taken part in several AI protests, and have tried to limit the effects of personal bias on this piece.

I am particularly grateful to Luke Kemp for mentoring me during the ERA program, and to Joel Christoph for his help as research manager. I’d also like to thank Gideon Futerman, Tyler Johnston, Matthijs Maas, and Alistair Stewart for their useful comments.

1. Executive Summary:

Main Research Questions:

- How should we look for historical analogies for restraining AI development?

- Are there any successful historical precedent(s) for AI protests?

- What general lessons can we learn from these case studies?

Research Significance:

- Unclear whether there are any relevant historical analogues for restraining AI development

- Efficacy is a crucial consideration for AI activism, yet little research has been done

Findings

Based on a new framework for identifying analogues for AI restraint, and shallow historical dives (2-5 hours) into 6 case studies, this project finds that:

- General-Purpose-Technologies are a flawed reference class for thinking about restraining AI development.

- Protests and public advocacy have been influential in restraining several technologies with comparable ‘drivers’ and ‘motivations for restraint’

In particular, protests have had counterfactual influence in the following cases:

- Cancellation of prominent SAI geo-engineering experiments (30-60% influence* for SCoPEx, 10-30%* for cancellation of SPICE)

- De-nuclearization in Kazakhstan in early 1990s (5-15%*) and in Sweden in late 1960s (1-15%*)

- Reagan’s move towards a ‘nuclear freeze’ in the 1980s, and subsequent international treaties (20-40%* for former, 5-15%* for latter)

- Changing UK’s climate policies in 2019 (40-70%* for specific emissions reductions)

- Germany’s phase-out of nuclear power from 2011 to 2023 (10-30%*)

- Domestic bans on CFCs in late 1970s (5-25%*), and influential for stricter international governance from 1990 (1-10%*)

- Europe’s de-facto moratorium on GMOs in late 1990s (30-50%*)

* Each range is my estimate of counterfactual influence = Probability of event occurring given protests - Probability of event occurring without protests
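The starred ranges are differences between two probabilities. As a minimal sketch of that arithmetic, the snippet below computes a low and a high estimate. The function name and the input probabilities are hypothetical and chosen only for illustration; they are not estimates from this piece.

```python
def counterfactual_influence(p_with_protests: float, p_without_protests: float) -> float:
    """Return P(event | protests) - P(event | no protests)."""
    for p in (p_with_protests, p_without_protests):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_with_protests - p_without_protests


# Hypothetical inputs: a pessimistic and an optimistic guess at how likely
# an outcome was with protests, against one guess for the no-protest world.
low = counterfactual_influence(0.60, 0.30)
high = counterfactual_influence(0.90, 0.30)
print(f"Estimated influence: {low:.0%} to {high:.0%}")
```

A range like this reflects uncertainty about both probabilities, so it should be read as a rough judgment rather than a statistical interval.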

More General Lessons:

- Geopolitical Incentives are not Overriding: activists influenced domestic and international policy on strategically important technologies like nuclear weapons and nuclear power.

- Protests can Shape International Governance: Nuclear Freeze campaign helped enable nuclear treaties in 1980s, and protests against CFCs helped enable stricter revision of Montreal Protocol

- Inside and Outside-Game Strategies are Complementary: different ‘epistemic communities’ of experts advising governments pushed for stricter regulation of CFCs in 1980s and for denuclearization in Kazakhstan in 1990s; both groups were helped by public mobilisations.

- Warning Shots are Important: use of nuclear weapons in WW2, Fukushima power station meltdown, and discovery of Ozone Hole all important for success of respective protests.

- Activists can Overwhelm Corporate Interests: protests succeeded in spite of powerful commercial drivers for fossil fuels, nuclear power, and GMOs.

- Technologies which pose perceived Catastrophic Risks can be messaged saliently: there was significant media coverage of proposed SAI experiments that focused on ‘injustice’ not risk.

2. Introduction

Mustafa Suleyman, in his recent book, ‘The Coming Wave’, asks whether there is any historical precedent for containing the proliferation of AI: “Have We Ever Said No?” (p38). His answer is clear. For ‘General Purpose Technologies’ like AI, “proliferation is the default” (p30). Given the history of technology, in addition to the particular dynamics at play for AI – increasing nationalism, prevalence of open-sourcing, high economic rewards available – we should expect proliferation to continue, like “one big slime mold slowly rolling toward an inevitable future” (p142). Eventually these technologies will proliferate across society. This is the ‘Coming Wave’ of the book’s title.

Trying to hold back the ‘coming wave’ would be as ineffectual as Cnut, the King who tried to order back the tide: “The option of not building, saying no, perhaps even just slowing down or taking a different path isn’t there” (p143). Suleyman’s policy proposals, like those in other high-profile publications such as The Precipice [1], don’t include slowing down AI development. Instead, they feature an Apollo Program for AI safety and mandates for AI companies to fund more safety research.

Given the inevitability of the 'coming wave', protest groups who advocate for a moratorium on powerful AI models are bound to fail. Suleyman doesn’t mention emerging groups like the 'Campaign for AI Safety' or 'PauseAI'. While coalitions between developers and civil society groups can create “new norms” around AI – just as abolitionists and the suffragettes did – these new norms don’t include slowing down development. In short, a historical perspective about technological inevitability suggests that slowing down AI development, including by protests, is unlikely to succeed.

In this piece, I try to challenge this historical narrative. There are several cases in which we have said no to powerful corporate and geopolitical incentives. And protests have been influential in several of them.

I think that this research is significant because while efficacy is a crucial consideration for AI protests, little research has been done. There have been several projects looking at the possibility of slowing down AI, including here and here. However, my other post, an in-depth study of GM protests, is the only other extended piece I know of which looks specifically at the efficacy of AI protests.

EAs seem to think that slowing AI development is not tractable.[2] They often focus on the ‘inside-view’, including the strong economic and geopolitical drivers behind AI development. However, as noted by others, it is important to complement our inside view of AI development with an outside-view understanding of technological history.

I’d also like to make a brief caveat to say that there are many areas which I do not address, including the ethics of protest; the desirability of slowing or pausing AI development either unilaterally or multilaterally; and other downside risks from protests. Instead, I am simply looking at the efficacy of protests and technological restraint.

This piece will proceed as follows. In the first section, I set out a framework for analogies for restraining AI development. I then identify 6 relevant and useful case studies involving ‘protests’ (broadly defined to include public advocacy, as well as public demonstrations). In the next section, I go through each case study, and analyze what role protest had. Finally, I conclude and draw general meta-lessons for protests, including those against AI.

3. Have We Ever Said No?

To support his claim that “proliferation is default”, Mustafa Suleyman mostly looks at our attempts to contain other General-Purpose-Technologies (GPTs), technologies which spawn other innovations and become widespread across the economy. He acknowledges that there are some short-term successes: for example, the Ottoman Empire did not possess a printing press until 1727; the Japanese shogunate shut out the world for almost 300 years; and imperial China refused to import manufactured British technology. Yet, in the long-run, “none of it worked” (p40): all of these societies were forced to adopt new technologies. Similarly, our modern attempts at containment are rare and flawed because international agreements are not effectively enforced: e.g. consider bans on chemical weapons which are routinely violated. The one potential exception, the case of nuclear weapons, has several caveats, including its extremely high barriers to development and our close shaves with nuclear war. Modern and historical failures of containment suggest that pausing AI isn’t “an option”. The wave is coming, and Suleyman predicts we will have ‘Artificial Capable Intelligences’ in the next 5 years.

(A quick aside: this view echoes theories of technological determinism, the view that technology develops and proliferates according to its own inner logic. Nick Bostrom’s “Technological Completion Conjecture” suggests that if scientific progress does not stop, then humanity will eventually develop all possible technological capabilities which are basic and important. Allan Dafoe suggests that military-economic competition exerts a powerful selection pressure on technological development.)

I think that there are two main problems with Suleyman’s reasoning.

Firstly, even if all technologies proliferate in the long-run, this doesn’t mean that short-term restraint is impossible. It may be true that on a big-picture history scale of analysis, technologies with strong strategic advantages will almost certainly be built, per Dafoe’s position. (This seems plausible to me!) However, as Dafoe himself recognises, this doesn’t deny that at the micro level, social groups have quite a lot of flexibility in steering different technologies. Suleyman himself gives many examples, from imperial Japan to the Ottoman Empire. There are additional cases involving GPTs, including: funding cuts hampering progress in nanotechnology in the early 2000s, the canceled Soviet internet of the 1960s, and prevailing perceptions around masculinity favoring the development of internal combustion engines over electric alternatives in the early 20th century. Even if AI does proliferate in the long-run, how long is the long-run? A year, a decade, or 300 years? Even a short pause to AI development might be vital for securing humanity’s survival.

More fundamentally, I think that GPTs are not the best reference class for thinking about slowing down AI. AI might indeed be, or soon become, the “new electricity”. (Some scholars think it’s premature to call AI a GPT.) However, even if GPTs are the best reference class for AI as a technology, this does not mean that they are the best reference class for restraining AI development.

This is because, firstly, AI, electricity, and other GPTs all had vastly different ‘drivers’. Frontier AI models are being built by a handful of multi-billion-dollar corporations, within a political climate of increasing US-China competition. In contrast, electricity and other modern GPTs were developed by a few individual ‘tinkerers’: individuals like Benjamin Franklin for electricity; Gutenberg for the printing press; Alexander Graham Bell for the telephone; Carl Benz for the internal combustion engine. They weren’t part of large multinationals, or, as far as I’m aware, conducting scientific research because of perceived ‘arms races’. Or consider older GPTs. There was no paleolithic Sam Altman who pioneered the domestication of animals, the invention of fire, or the smelting of iron. Several GPTs first started being used even before the existence of states. Our ability to restrain different technologies depends on the different forces which are driving their development. Different ‘drivers’ matter.

In addition, there are various motivations for restraining different GPTs. Groups like PauseAI are mainly motivated by the perceived catastrophic risks from AI systems in the future. Few PauseAI protestors are campaigning because of immediate risks to their jobs, unlike the Luddites. The motivations to restrain AI development are more comparable to protests against nuclear weapons. But there are also important disanalogies: AI has not yet had a ‘warning shot’ comparable to the dropping of nuclear bombs on Hiroshima and Nagasaki. Perhaps differences in motivations for restraint are less significant than differences in ‘drivers’. However, I think they are still important in order to avoid inappropriate analogies like, “PauseAI are neo-Luddites”.

Thus, my criteria for analogues of restraining AI development include:

1. Drivers for Development

- Who is developing the technology? (Private sector, governments, universities, individuals)

- What academic/intellectual value did the technology have?

- What strategic/geopolitical value did the technology have?

- What commercial value does the technology have? What lobbying power did firms have?

- Relatedly, what public value did the technology bring? Was technological restraint personally costly for citizens?

2. Motivations for Restraint

- Did the technology pose current or future perceived risks?

- Did the technology pose perceived catastrophic risks?

- Were there clear ‘warning shots’, events demonstrating the destructive potential of this technology?

- Did the technology offer significant benefits to the public?

In addition, I am looking for cases where ‘protests’ – defined broadly to include public demonstrations, actions by social movement organizations, or public advocacy – might have been influential for restraining the technology.

This framework has been simplified, with several factors left out.[3] However, I hope it serves as a rough starting point for identifying useful and relevant analogues for technological restraint, including cases in which protests are involved.

4. Protests Which Said No

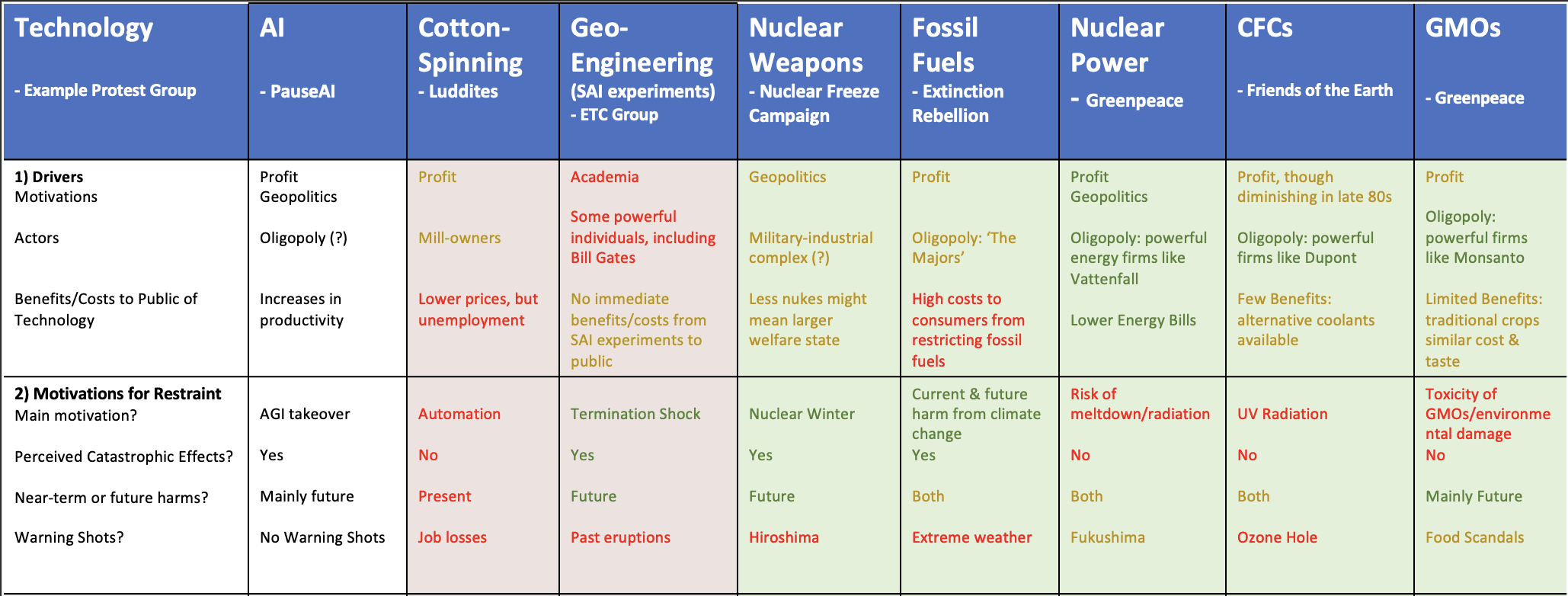

Based on these criteria, I found 7 potential analogues for restraining AI, all of which had corresponding protest movements.

The different 'drivers' and 'motivations for restraint' for each technology are colour-coded in terms of closeness to AI: green (close fit); orange (medium fit); red (low fit).

I have set aside many other interesting examples of technological restraint, either because they didn’t significantly involve protest, or because the technologies had substantially different drivers.[4] Please add comments if you think I’ve missed a particularly relevant example of technological restraint which had accompanying protests!

In the sub-sections that follow, I give a brief overview of each of these case studies, detailing their analogies and disanalogies with AI restraint, as well as whether protests were influential.

A. Geo-engineering

One analogue for AI protests could be protests against geo-engineering experiments. Public advocacy groups have targeted low environmental impact outdoor experiments into Stratospheric Aerosol Injection (SAI), a proposed intervention which would involve spraying reflective particles into the stratosphere to reflect sunlight and cool the Earth. Proponents suggest that it could be immensely valuable for society as a whole, saving society trillions of dollars per year by mitigating the costs of global warming.

The two most prominent SAI experiments have both been cancelled.

SCoPEx, or Stratospheric Controlled Perturbation Experiment, was first planned in 2017, and is led by Harvard University with significant funding from several billionaires and private foundations. A small group of researchers had planned to conduct a ‘dry-run’ – without any particles released – in northern Sweden in 2021. However, in February 2021, a group of Swedish environmental organizations and the Indigenous Saami Council published a letter demanding that the project be cancelled, citing “moral hazard” and “risks of catastrophic consequences” from SAI. The letter received significant media attention. Around a month later, the Harvard advisory committee put the test on hold until 2022. No further SCoPEx experiments have occurred or have been planned since. Given the timing of the cancellation – only weeks after the open letter – public advocacy (which I define ‘protests’ to encompass here) was likely the key reason.

The second prominent experiment which was cancelled was SPICE (Stratospheric Particle Injection for Climate Engineering). After being announced in mid-September 2011, SPICE received negative media attention, and was later postponed on 26th September to allow for more “deliberation and stakeholder engagement”. Later that same day, the ETC Group published an open letter, ‘Say No to the “Trojan Hose”: No SPICE in Our Skies’, which was eventually signed by over 50 NGOs. SPICE was cancelled in April 2012.

I think that public pressure was less significant than in the SCoPEx case. The decision to postpone the project in September 2011 was made in part because of anticipated NGO opposition, but also because of a potential conflict of interest (COI): several engineers on SPICE had applied for a patent for a stratospheric balloon. Matthew Watson, the principal scientist at SPICE, cited the COI issue as the reason for the cancellation in 2012. Jack Stilgoe, in his book “Experiment Earth”, de-emphasizes the role of public pressure, saying, “the decision [was] made by the SPICE team themselves”. Public protests may have counterfactually contributed to the cancellation of SPICE, though this is more uncertain than the case of SCoPEx.

More generally, however, the norms against SAI experimentation have created a de-facto moratorium on SAI experimentation by universities (there is no formal moratorium at present). One field experiment took place in Russia in 2009, though this occurred prior to the public backlash to SPICE in 2011, and additionally did not provide much relevant information for future experiments or implementation of SAI. In 2022, the US start-up Make Sunsets released particles via balloons in Mexico. However, Make Sunsets released less than 10 grams of sulfur per flight (commercial flights emit about 100 grams per minute), and experiments were banned in Mexico in January 2023. Other experiments occurred in secret in the UK in October 2021 and September 2022, under the name Stratospheric Aerosol Transport and Nucleation (SATAN). However, SATAN was also a small-scale project (with hardware costs less than $1,000), conducted by an independent researcher, and did not attempt to monitor effects on atmospheric chemistry.

Does this case study suggest AI protests might be effective? To a degree. Protests have been influential in canceling major outdoor SAI experiments, particularly SCoPEx. The perception of uncertain, catastrophic risks from SAI experiments is similar to the perception of risks from ‘Giant AI Experiments’.

However, the drivers behind SAI experiments are very different to AI research, which is led by private corporations. Make Sunsets is a commercial venture, which sells ‘carbon credits’, but it operates at a much smaller scale: it has received $750,000 in VC funding, in contrast to the billions for AI labs. Both high-profile successes of SAI protests involved university-run projects. Universities are likely to be more sensitive to public pressure than large corporations who have significant sunk investment in AI projects.

Despite these key differences, there are several lessons which can be drawn. One lesson is that protests can have high leverage over a technology in early stages of development, particularly when universities are involved: e.g. CRISPR. However, for AI, this would suggest that the best time for AI protests might have been in the 2010s, when universities dominated AI research.

One positive lesson is that future catastrophic risks from technologies can be messaged in a salient way. The risks from SAI experiments might seem difficult to message. However, the proposed SCoPEx experiment received outsized media attention, with a common ‘hero-villain’ narrative: Indigenous groups versus a powerful American university, backed by Bill Gates (e.g. here, here, here). This supports my findings elsewhere that promoting emotive ‘injustice’ frames is vital for advocacy groups protesting future catastrophic risks.

Additionally, the coalition opposed to SCoPEx was broad – involving the Saami Council, the Swedish Society for Nature Conservation, Friends of the Earth, and Greenpeace. The literature suggests that diverse protest groups are more likely to succeed. While the SAI case study is not the closest analogue for AI protests, it holds practical lessons in terms of messaging and coalition-building.

B. Nukes

Another protest movement relevant for AI protests could be protests against nuclear weapons. The two technologies share striking similarities: both pose catastrophic risks, and both emerged from a nascent field of science. One New York Times piece challenges readers to tell apart quotes referring to each technology. It’s quite difficult.

Protests against nuclear weapons have had particular success in Kazakhstan, Sweden, and the US.

I) Kazakhstan

Kazakhstan inherited a large nuclear arsenal after gaining independence from the USSR in 1991. Initially, Kazakhstan agreed to denuclearize. However, by 1992, President Nazarbayev suggested Kazakhstan should be a temporary nuclear state, citing fears of Russian imperialism and technical difficulties disarming. Eventually, Kazakhstan signed important international treaties, alongside Ukraine and Belarus, such as START I, the Lisbon Protocol, and the NPT, and gave up its nuclear weapons in 1995.

Why did this happen? US diplomatic efforts were undoubtedly important. The creation of the Cooperative Threat Reduction (CTR) initiative, a Department of Defense program, was vital for pushing Kazakhstan back towards denuclearisation. It provided financial and technical assistance, aiding the difficult technical task of removing and destroying nuclear weapons. A network of American and Soviet experts, who shared similar views about the importance of non-proliferation, convinced two US Senators – Sam Nunn (D-GA) and Richard Lugar (R-IN) – to create the CTR. Such groups of experts, who share similar worldviews and shape political outcomes by giving information and advice to politicians, are called ‘epistemic communities’ in the literature. Thus, US diplomacy and ‘epistemic communities’ were undoubtedly important for de-nuclearisation in Kazakhstan.

However, solely focusing on US diplomatic efforts is too simplistic: domestic opposition was also significant. The Nevada-Semipalatinsk Movement (NSM), led by the poet Olzhas Suleimenov, began protesting nuclear testing in 1989, and forced authorities to cancel 11 out of the 18 nuclear tests scheduled that year. Two years later, the Semipalatinsk test site – which had been the largest nuclear test site in the USSR – was closed. As President Nazarbayev himself acknowledged, NSM protests were key to its closure. In short, ‘epistemic communities’ of non-proliferation experts who were providing technical support to Kazakhstan’s government were aided by public mobilization in achieving denuclearisation.

II) Sweden

Similarly, protest movements may have been important for Sweden’s moves towards denuclearisation. From the 1940s to the 1960s, Sweden actively pursued nuclear weapons, and possessed the requisite capability by the late 1950s. However, by 1968, Sweden formally abandoned the program.

Why did this happen? Firstly, the small nuclear program in development was seen as offering limited deterrent value. Instead, it might have just pulled Sweden into a war. Secondly, the ruling Social Democrats (SDs) believed that the costs were too high, given other fiscal demands on the welfare state. Thirdly, public opinion was significant. Sweden moved from a situation in the 1950s in which most Swedes and leading SDs favored the nuclear program, to widespread opposition in the 1960s. Going ahead with the nuclear weapons program would have led to an implosion in the SD Party. Grassroots movements in Sweden, including the Action Group against Swedish Nuclear Bombs, strengthened anti-nuclear public and elite sentiments, contributing to eventual denuclearisation. However, I have not looked into this case in detail, so I am fairly uncertain about the counterfactual impact of protests.

III) Nuclear Freeze Campaign

Further, nuclear protest movements influenced foreign policy and international policymaking. The Nuclear Freeze campaign of the 1980s, which called for halting the testing, production, and deployment of nuclear weapons, grew into a mass movement with widespread support: petitions with several million signatures, referendums passed in 9 different states, and a House resolution passed. In response, Reagan dramatically changed his rhetoric, from suggesting protestors had been manipulated by the Soviets, to saying, “I’m with you”. Public opinion was a clear motivator for Reagan: in 1983, he suggested to his secretary of state that, “If things get hotter and hotter and arms control remains an issue, maybe I should go see [Soviet leader Yuri] Andropov and propose eliminating all nuclear weapons.” Reagan’s rhetorical and diplomatic shift paved the way for several international agreements, including the 1987 INF Treaty and the 1991 START Treaty reducing strategic nuclear weapons.

What lessons can we draw from protests against nuclear weapons? First, there is the trivial point that the use of nuclear weapons in WW2, and the clear demonstration of their catastrophic potential, was a key motivator for all of these cases. ‘Warning shots’ are helpful for mobilizing public opinion.

Increasingly, AI is becoming a geopolitical battleground: for example, the US has sought to boost its domestic AI capabilities through the CHIPS Act, and China explicitly aims to be the world's primary AI innovation center by 2030. Yet, the examples in Sweden and Kazakhstan suggest that activists can influence domestic government policy towards a geopolitically important technology. The Nuclear Freeze Campaign in the US suggests that protests can influence international policymaking.

C. Fossil Fuels

Environmental protests provide a pertinent analogue to protests against advancements in artificial intelligence (AI). Both technologies have comparable ‘motivations for restraint’: both climate change and AI are perceived to threaten civilisational collapse, although AI risk is often considered more speculative and poses less obvious concrete harms today.

Further, both technologies have similar drivers. Like the dominance of 'Big Tech' in the AI landscape – Microsoft via OpenAI, Google via DeepMind, now Amazon via Anthropic – a handful of global fossil fuel giants, commonly referred to as 'the Majors', wield significant influence. These corporations, like ExxonMobil, BP, Chevron, etc., control substantial portions of the sector's reserves (12%), production (15%), and emissions (10%). Fossil fuel companies wield formidable lobbying power, employing an array of tactics, including disseminating doubts about climate science (Oreskes & Conway, 2010).

AI-lobbying is increasing and is particularly visible in lobbying over the EU AI Act. However, the extent of Big Tech lobbying on AI policy is still small in comparison to Fossil Fuels. Big Tech may have greater lobbying potential than ‘the Majors’, given their greater market capitalisation: almost $5 trillion for Amazon/Google/Microsoft combined, versus around $1 trillion for ‘the Majors’. Yet, individual AI labs have significantly fewer resources to marshal: OpenAI's valuation is estimated at around $30 billion, while Anthropic is valued at approximately $5 billion. While the overall level of AI-lobbying is unclear – estimates tie together all ‘digital lobbying’ – I expect it to be less than the current spending on climate lobbying, at over £150 million per year.

Further, transitioning away from fossil fuels confronts more formidable challenges in terms of collective action. Fossil fuels constitute a staggering 84% of global energy consumption. Some even liken society’s entrenched reliance on fossil fuels to the role slavery played prior to its abolition. In contrast, AI models have narrower applications, primarily in product enhancement and analytics, and are utilized by hundreds of millions, not billions, of users.

Despite confronting a powerful corporate lobby and threatening to raise costs on consumers, protests have influenced climate policies. Extinction Rebellion (XR), for instance, played a crucial role in galvanizing more ambitious climate policies within the UK government. From its launch in July 2018 until May 2019, Extinction Rebellion likely contributed to local authorities pushing forward net zero targets to 2030 from 2050, a more ambitious nationally determined contribution from the UK government, and the 2050 net zero pledge being implemented 1-3 years earlier. Additionally, XR may have had an influence at an international level: in the wake of XR protests, publics across the world became more worried about climate change, and many countries (including the EU and 10 others) declared a climate emergency – however, these effects are less well documented.

It might be tempting to look at Big Tech’s size and willingness to lobby on AI regulation with despair. The success of XR gives reasons for hope.

D. Nuclear power

Protests against nuclear power offer another precedent for AI existential risk protests.

Anti-nuclear protests have achieved partial successes in the US, where stringent regulation effectively blocks any new power stations; several other countries, including Italy, have abandoned or never introduced nuclear power, despite importing nuclear-generated electricity from their neighbours.

One particularly noteworthy case study is Germany, which fully phased out nuclear power between 2011 and April 2023. Activists may have had a role in this policy reversal. In the 1970s, the Green party formed on an anti-nuclear platform, pushing the SPD to oppose nuclear energy too. An SPD-Green coalition government from 1998-2005 decided to slowly phase out nuclear power, although this decision was reversed after Merkel came to power. After Fukushima in 2011, massive anti-nuclear protests broke out across the world, including in Germany. Facing electoral threats from the Greens in state elections, Merkel announced a nuclear moratorium and an ethics review. The ethics committee's recommended phase-out was overwhelmingly approved, reflecting anti-nuclear sentiment.

Nuclear protests achieved phase-outs in Germany despite strong economic and strategic ‘drivers’ which parallel AI. Like the high potential profits from AI, there are strong financial incentives behind nuclear power. Legislation which extended plant lifetimes in 2010 offered around €73 billion in extra profits for energy companies. After Germany's nuclear reversal, various energy companies sued the government for compensation, with Vattenfall seeking €4.7 billion.

Further, there were clear strategic ‘drivers’ for continued nuclear power. Germany phased out nuclear power in 2023 despite rising energy costs following Russia's invasion of Ukraine. Other countries boosted investments (France), or delayed their phase-outs of nuclear power (e.g. Belgium), following the Russian invasion (ANS, 2023).

The case of nuclear power shows that geopolitical calculus is not an overwhelming determinant of government policy, at least in the short-run. Public pressure, including from protests, was influential. Further, Germany’s anti-nuclear mobilization was catalysed by the Fukushima meltdown in 2011. This suggests that, similarly to other cases, ‘warning shots’ from AI might also be significant.

E. CFCs

Another interesting analogue for AI protests is protests against CFCs. CFCs were first unilaterally banned in the US in the late 1970s, before spreading to other countries. The discovery of the ‘ozone hole’ in 1985 spurred countries to agree to substantial CFC reduction via the Montreal Protocol of 1987.

The 'drivers' of both CFCs and AI share some similarities. CFC production was concentrated in a few powerful chemical firms like DuPont, analogous to the dominance of Big Tech. While CFCs offered some benefits for the public in the 1970s, particularly in refrigeration and foam production, they were not as foundational to the world economy as fossil fuels – a closer comparison with the stage AI is at currently.

What role did activists have in regulating CFCs? Their role in the early CFC bans is fairly clear. In 1974, a coalition of environmental groups helped to publicize the initial ‘Molina-Rowland’ hypothesis that CFCs could destroy ozone molecules. The popularization of the risks, and media campaigns by groups like the Natural Resources Defense Council (NRDC), helped spur the first bans on CFCs in the US in 1977.

Protests may also have influenced international regulation of CFCs. Friends of the Earth ran an extensive corporate campaign encouraging consumers to boycott products with CFCs. It was particularly successful in the UK, where the British aerosol industry reversed its opposition to CFC regulation, which helped enable the strengthening of the Montreal Protocol in 1990. However, I am more uncertain about this: firms may have reversed their opposition to regulation because of changing profit incentives. For example, by the mid-1980s, DuPont realized global regulation could create a more profitable market for substitute chemicals the company was positioned to produce, so actually supported the signing of the Montreal Protocol.

In addition, there were other factors aside from activists which were key. First, there was the role of ‘epistemic communities’ of scientists. As described in the case of de-nuclearization in Kazakhstan, ‘epistemic communities’ are groups of experts who share similar worldviews and shape political outcomes by giving information and advice to politicians. In the CFC case, scientists advising US departments, especially in the EPA and State Department, influenced the US government to advocate for a more stringent treaty, enabling the success of the Montreal Protocol.

Further, US government support for the Montreal Protocol was unilaterally incentivised: if the US had implemented the Montreal Protocol on its own, it would have prevented millions of skin cancer deaths among US citizens, equivalent to roughly $3.5 trillion in benefits, versus $21 billion in costs.

What lessons can be learnt from the case of CFCs?

There are several reasons for optimism. Just like with the Nuclear Freeze Campaign, activists helped shape international policymaking surrounding CFCs. Furthermore, the activists who carried out corporate campaigns benefitted the expert scientists who were working within the US government and pushing for stricter CFC regulation.

However, there are other reasons to be pessimistic about AI protests.

Different countries were unilaterally incentivised to sign the Montreal Protocol. This might be the case for AI, in theory: different countries pursuing continued AI development might be competing in a ‘suicide race’. However, this is not how countries are behaving. Instead, they are pushing ahead with AI capabilities.

Perhaps in the future, a clear AI ‘warning shot’ might change policymakers’ perceptions of the national interest. The discovery of the ‘ozone hole’ was crucial for mobilizing public opinion and policymakers’ attention. A similar ‘warning shot’ from AI could spur stringent, international AI regulation.

Further, stringent international regulation was aided by a diminishing profit incentive behind CFCs. By the late 1980s, alternative products might have been more profitable for DuPont. In contrast, there are no obvious substitutes for 'frontier AI models' like GPT-3, so firms are much less likely to support a ‘phasing out’.

F. GMOs

(I have covered the case of GMOs in detail here. Please skip this section if you’re already familiar!)

In several ways, GMOs are a useful analogy for AI. They were seen as a revolutionary and highly profitable technology, which powerful companies were keen to deploy. Furthermore, GMOs did not have any clear ‘warning shots’, or high-profile events which demonstrate the potential for large-scale harm, unlike the discovery of the ozone hole for CFCs or the meltdown of Fukushima for nuclear power. Yet, within the space of only a few years, between 1996 and 1999, public opinion shifted rapidly against GMOs, due to increasingly hostile media attention surrounding key ‘trigger events’ and heightened public mobilization. There was a de-facto moratorium on new approvals of GMOs in Europe by 1999. Today, only 1 GM crop is planted in Europe, and the region accounts for 0.05% of total GMOs grown worldwide. I am confident that protests had a key counterfactual impact.

There are several lessons from the GMO case. Firstly, ‘trigger events’ which lead to mass mobilization need not be catastrophes. The cloning of Dolly the Sheep, and the outbreak of ‘Mad Cow Disease’ were unrelated to GMOs, and did not pose any immediate catastrophic risks to the public.

Additionally, powerful corporate lobbies can be overwhelmed by activists. In the US, biotech companies spent over $140 million between 1998 and 2003 on lobbying. Monsanto spent $5 million on a single ad campaign in Europe. These levels of lobbying likely exceed current levels of AI lobbying.

The messaging of GMO protests did not focus on risk. Instead, it focused on injustice. Opponents of GMOs thought principally in terms of moral acceptability, not risk. Rhetoric targeted particular companies (e.g. “Monsatan”) not systemic pressures.

Additionally, the anti-GMO movement brought together a broad range of groups, including environmental NGOs, consumer advocacy groups, farmers, and religious organizations. More generally, diverse protests are more likely to succeed.

5. Conclusion

So, have we ever said no? Given the history of technology, should we see AI development like “one big slime mold slowly rolling toward an inevitable future”?

In this piece, I have tried to set out a framework for thinking about these questions. When asking the question, “is slowing AI development possible?”, we should not look towards cases of restraining General Purpose Technologies like electricity or the internet, as the key reference class. Instead, we should look for technologies with similar geopolitical and commercial drivers, and groups who had comparable motivations for restraining them.

And looking at this reference class, there are several relevant cases in which we have said no, including geo-engineering experiments, nuclear weapons programs, and the use of fossil fuels, CFCs, and GMOs. (I am uncertain exactly how influential protests were: these case studies constitute shallow dives (2-4 hours of research), and showing causation is difficult in social science.)

There are several more general ‘meta-lessons’ we can draw from these protests.

Firstly, the role of geopolitical incentives in determining policy outcomes is not overriding. The case studies of nuclear weapons (denuclearisation in Kazakhstan and Sweden; ‘Nuclear Freeze’ in the US in the 1980s) and the phase-out of nuclear power in Germany demonstrate that protests can influence policy outcomes even in the face of powerful geopolitical drivers. Similarly, powerful corporate interests do not always have their way. Powerful lobbies – comparable to corporate interests favoring continued AI development – were overwhelmed by activists in several cases: fossil fuels, nuclear power, and GMOs.

Protests have also demonstrated their capacity to shape international governance, as seen in the Nuclear Freeze campaign and efforts against CFCs. If you believe that international governance is necessary for safe AI governance, protests could help enable this.

Additionally, in the cases of CFCs and de-nuclearisation in Kazakhstan, experts pushing for certain policies within governments benefitted from public mobilisations. This suggests that the community of AI researchers who share similar concerns about AI existential risk, and who have privileged positions in the UK’s AI taskforce, would benefit from public mobilization in achieving more stringent regulation of ‘frontier AI’. More generally, these two case studies lend some support to the broader idea that ‘inside-game’ strategies for creating social change (working within the existing system: e.g., running for office, working for the government) benefit from ‘outside-game’ strategies (community organizing, picketing, civil resistance etc.).

Lastly, the importance of ‘trigger events’ – highly publicised public events which lead to mass mobilisation – should not be understated. The dropping of nuclear weapons in WW2, the Fukushima nuclear disaster, and the discovery of the Ozone Hole were pivotal in galvanizing public awareness and support for these protest movements. However, the case of GMOs suggests that mass mobilisation does not require a catastrophic 'warning shot'.

- ^

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3995225, p11: “The policy recommendations for mitigating [risks from AI] in The Precipice support R&D into aligned artificial general intelligence (AGI), instead of delaying, stopping or democratically controlling AI research and deployment.”

- ^

This is based on personal conversations. A similar claim about slowing AI development was also made here: "Yes, longtermist researchers typically do not advocate for the wholesale pause or reversal of technological progress — because, short of a disaster, that seems deeply implausible. As mentioned above, longtermism pays attention to the “tractability” of various problems and strategies. Given the choice, many longtermists would likely slow technological development if they could."

- ^

I have left three factors out in particular. First, levels of epistemic support or ‘validity’ of different perceptions of risk are excluded; I am focused on the efficacy of protest groups rather than the desirability of their respective goals.

Additionally, I have not selected protest groups with similar demographics/strategies as current AI protests – since these factors are endogenous to protest groups (tactics and allyship are choices for protest groups), and because it seems too soon to make broad generalizations about AI protests.

Finally, I have not made any restrictions on the type of regulation which achieved restraint: i.e. restrictions on development or deployment; whether unilateral or multilateral. This is because there are many policy options for restraining AI: regulating development through a unilateral US-led pause, an international treaty, or restrictions on compute; or regulating the deployment of AI systems, for example, using tort regulation to make developers legally liable for AI accidents. By doing so, I hope to shed light on the various outcomes protest groups can lead to, and the ‘drivers’ they can overcome.

- ^

Boeing 2707 was a proposed supersonic jet which received over $1 billion in funding from the government. Funding was cut in 1971 by the US Senate. While protests likely had some role (over 30 environmental, labor, and consumer groups lobbied Congress to end the program; NYT, 1972), other factors may have been more important, including insurmountable technical challenges and rapidly escalating costs (Dowling, 2016). Operation Popeye was a weather-control system used by the US military between 1967 and 1972 to increase the length of the monsoon season and so prevent the North Vietnamese from using roads. It was halted two days after being exposed by a journalist (Harper, 2008); wide-scale public protests have not been documented, which limits its usefulness as an analogue. Another analogue is Automated Decision Systems (ADS) – algorithms for automating government tasks such as fraud detection, facial recognition, and risk modelling for children at risk of neglect. Redden et al. (2022) present 61 different ADSs which were canceled by Western governments between 2011 and 2020. Whilst protests were key in canceling many different projects, the ‘drivers’ of ADS – often improved government efficiency – seem very different to AI. Operation Ploughshare, a US government project to capture the civilian benefits of nuclear devices, was primarily abandoned due to diminishing geopolitical rationale, lack of commercial viability, and rising costs, rather than public mobilization (Hacker, 1995).

Charlie -- thanks very much for this informative and valuable analysis.

Some EAs might react by thinking 'Well in many of these cases, the protesters were wrong, misguided, or irrational, so we feel like it's weird to learn from protests that were successful, but that addressed the wrong cause areas'. That was my first reaction, esp. regarding protests against nuclear power, GMOs, and geo-engineering (all of which I support, more or less).

So I think it's important to separate goals from strategies, and to learn effective strategies even from protest movements that may have had wrong-headed goals.

Indeed, taking that point seriously, it may be worth broadening our historical consideration of successful protest movements from anti-new-technology protests to other situations in which protesters succeeded in challenging entrenched corporate power and escaping from geopolitical arms races. There again, I think we should feel free to learn effective tactics even from movements with misguided goals.

Hi Geoffrey, I appreciate that: thank you!

I agree with you that taking lessons from groups with goals you might object to seems counter-intuitive. (I might also add that protests against nuclear weapons programs, fossil fuels, and CFCs seemed to have had creditworthy aims.) However, I agree with you that we can learn effective strategies from groups with wrong-headed goals. Restricting the data to just groups we agree with would lose lessons about efficacy/messaging/allyship etc.

(There's also a broader question about whether this mixed reference class should make us worry about bad epistemics in the AI activism community. @Oscar Delaney made a related comment in my other piece. However, I am comparing groups on what circumstances they were in (facing similar geopolitical/corporate incentives), not epistemics.)

I also agree that widening the scope beyond anti-technology protests would be interesting!

I would caution against taking too strong a stance on whether we support something or not without having rigorously assessed it according to EA epistemic standards. I feel this is particularly acute in the case of GMOs and geo-engineering. In the case of GMOs, the OP highlighted the justice aspect of the protests: on the one hand there are powerful corporations, and on the other, sometimes poor farmers. In the case of geo-engineering in Sweden, it should be noted that Sweden has not signed the declaration on the rights of indigenous peoples. Not heeding the needs and perspectives of such marginalized groups has in the past been linked to horrific outcomes, and as EA has its origin in helping these very groups, I would strongly caution against taking any strong stances here without very careful deliberation.

This also goes to another comment on this post about how "the world would be better if EAs influence more policy decisions", which makes me cautious about hubris from EAs about how we can "fix everything". One thing I like about EA is how we focus on very small targeted interventions, understanding them thoroughly before trying to make a change. History seems seldom to judge harshly those who were just seeking to help people clearly in need with limited scope, whereas people or small groups with grand ideas of revolutionizing the world often seem to end up as history's antagonists.

Apologies for the rant, I actually came to this post to raise another point but felt a need to react to some of the sentiment in the other comments.

Ulrik - I agree with you that 'History seems seldom to judge harshly those who were just seeking to help people clearly in need with limited scope, whereas people or small groups with grand ideas of revolutionizing the world often seem to end up as history's antagonists.'

It's important to note that the AI industry promoters who advocate rushing full speed ahead towards AGI are a typical example of 'small groups with grand ideas of revolutionizing the world', e.g. by promising that AGI will solve climate change, solve aging, create mass prosperity, eliminate the need to work, etc. They are the dreamy utopians who are willing to impose huge risks and dangers on everybody else in order to realize their vision of an ideal society.

The people advocating an AI Pause (like me) are focused on a 'very small targeted intervention' of the sort that you support: shut down the handful of companies, involving just a few thousand researchers, in just a few cities, who are rushing towards AGI.

Yes I agree. Apologies for responding to the other comment on this post in the reply to your comment - I think that created unnecessary confusion.

Ulrik - thanks; understood!

Small remark regarding your metric "* 100% minus the probability that the given technological restraint would have occurred without protests" (let's call the latter probability x): this seems to suggest that given the protests the probability became 100% while before it had been x and that hence the protests raised the probability from x to 100%. But the fact that the event eventually did occur does not mean at all that after the protests it had a probability of 100% of occurring. It could even have had the very same probability of occurring as before the protests, namely x, or even a smaller probability than that, if only x>0.

What you would actually want to compare here is the probability of occurring given no protests (x) and the probability of occurring given protests (which would have to be estimated separately).

In short: your numbers overestimate the influence of protests by an unknown amount.

That is a good point, thanks for that Jobst. I've made some edits in light of what you've said.

FYI I was also confused by the probability metric, reading after your edits. I read it multiple times and couldn't get my head round it.

"Probability of event occurring given protests - Probability of event occurring without protests"

The former number should be higher than the latter (assuming you think that the protests increased the chance of it happening) and yet in every case, the first number you present is lower, e.g.:

"De-nuclearization in Kazakhstan in early 1990s (5-15%*)"

(Another reason it's confusing is that they read like ranges or confidence intervals or some such, and it's not until you get to the end of the list that you see a definition meaning something else.)

Sorry that this is still confusing. 5-15% is the confidence interval/range for the counterfactual impact of protests, i.e. p(event occurs with protests) - p(event occurs without protests) = somewhere between 5% and 15%. It does not mean p(event occurs with protests) = 5% and p(event occurs without protests) = 15%, which wouldn't make sense.
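As a minimal worked illustration of this definition, the arithmetic can be written out as below. The symbol Δ and the probabilities 0.60 and 0.50 are assumptions chosen purely to show the calculation; they are not estimates for any case study in the piece.

```latex
% Hypothetical numbers, for illustration only (not estimates from the piece).
% \Delta denotes the counterfactual influence of protests.
\[
\Delta \;=\; P(\text{restraint} \mid \text{protests}) \;-\; P(\text{restraint} \mid \text{no protests})
\]
\[
P(\text{restraint} \mid \text{protests}) = 0.60,
\qquad
P(\text{restraint} \mid \text{no protests}) = 0.50
\quad\Longrightarrow\quad
\Delta = 0.60 - 0.50 = 0.10
\]
```

On this reading, a reported range of 5-15% says that Δ lies somewhere between 0.05 and 0.15. With the same illustrative numbers, the earlier "100% minus x" metric would give 1 - 0.50 = 0.50, which shows how it can overstate the influence of protests.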

Hey Charlie, great post--EAs tend to forget to look at the big picture, or when they do it's very skewed or simplistic (assertions that technology has always been for the best, etc). So it's good to get a detailed perspective on what worked and what did not.

I would simply add that there is a historical/cultural component that determines the chances of success for each protest that should not be forgotten. For example, in Sweden, a highly-functioning democracy, it's no surprise that the government would pay attention to the protests; I'm not sure this would work for example in Iran if people were pushing against nuclear. In Kazakhstan, the political climate at the time was freer than it is now, it was the end of the Soviet Union and there was a wind of freedom and detachment from the Soviet Union that made more things possible.

Adding this component by understanding the political dynamics and the level of freedom or responsiveness of the governments towards a bottom-up level of contest would probably help in advocating or not for protests and even put money into it. EAs might not be sold on activism but if they're shown that there's a decent possibility of impact they might change their minds.

Hi Vaipan, I appreciate that!

I agree that political climate is definitely important. The presence of elite allies (Swedish Democrats, President Nazarbayev), and their responsiveness to changes in public opinion, was likely important. I am confident the same is true for GM protests in the 1990s in Europe: decisions were made by national governments (who were more responsive to public perceptions than the FDA in the USA), and there were sympathetic Green Parties in coalition governments in France/Germany.

I agree that understanding these political dynamics for AI is vitally important – and I try to do so in the GM piece. One key reason to be pessimistic about AI protests is that there aren't many elite political allies for a pause. I think the most plausible TOCs for AI protests, for now, are about raising public awareness/shifting the Overton Window/etc., rather than actually achieving a pause.

I would like to note that none of that had been met with corporations willing to spend potentially dozens of billions of dollars on lobbying, due to the availability of the capital and the enormous profits to be made; and none of these clearly stand out to policymakers as something uniquely important from the competitiveness perspective. AI is more like railroads; it’d be great to make it more like CFCs in the eyes of policymakers, but for that, you need a clear scientific consensus on the existential threat from AI.

It makes sense to study the effectiveness of protests and other activism in general and figure out good strategies to use; but due to the differences, I believe it is too easy to learn wrong lessons from restrictions on GMOs and nuclear and have a substantial net-negative impact.

With AI, we want the policymakers to understand the problem and try to address it; incentivising them to address the public's concerns won’t lead to the change we need.

The messaging from AI activists should be clear but epistemically honest; we should ask the politicians to listen to the experts, we shouldn’t ask them to listen to the loudest of our voices (as the loudest voices are likely to make claims that the policymakers will know to be incorrect or the representatives of AI companies will be able to explain why they’re wrong, having the aura of science on their side).

Studying protests in general is great; studying whatever led to the Montreal Protocol and the Vienna Convention is excellent; but we need to be clearly different from people who had protested railroads.

—

Also, I’m not sure there’s an actual moratorium on GM crops in Europe: many GMOs are officially imported and one GM crop (corn, I think?) is cultivated inside the EU.

I'm so sorry it's taken me so long to respond, Mikhail!

<I would like to note that none of that had been met with corporations willing to spend potentially dozens of billions of dollars on lobbying>

I don't think this is true for GMOs, fossil fuels, or nuclear power. It's important to distinguish total lobbying capacity/potential from the actual amount spent on lobbying. Total annual technology lobbying is on the order of hundreds of millions: the amount allocated for AI lobbying is, by definition, less. This is similar to (or, I suspect, lower than) annual biotechnology lobbying spending for GMOs. Annual climate lobbying is over £150 million per year, as I mentioned in my piece. The stakes are also high for nuclear power. As mentioned in my piece, legislation in Germany to extend plant lifetimes in 2010 offered around €73 billion in extra profits for energy companies, and some firms sued for billions of Euros after Germany's reversal. (Though I couldn't find an exact figure for nuclear lobbying.)

< none of these clearly stand out to policymakers as something uniquely important from the competitiveness perspective >

I also feel this is too strong. Reagan's national security advisors were reluctant about his arms control efforts in the 1980s because of national security concerns. Some politicians in Sweden believed nuclear weapons were uniquely important for national security. If your point is that AI is more strategically important than these other examples, then I would agree with you, though your phrasing is overly strong.

< AI is more like railroads >

I don't know if this is true ... I wonder how strategically important railroads were? I also wonder how profitable they were? Seems to be much more state involvement in railroads versus AI... Though, this could be an interesting case study project!

< AI is more like CFCs in the eyes of policymakers, but for that, you need a clear scientific consensus on the existential threat from AI >

I agree you need scientific input, but CFCs also saw widespread public mobilisation (as described in the piece).

< incentivising them to address the public's concerns won’t lead to the change we need >

This seems quite confusing. Surely, this depends on what the public's concerns are?

< the loudest voices are likely to make claims that the policymakers will know to be incorrect >

This also seems confusing to me. If you believe that policymakers regularly sort the "loudest voices" from real scientists, in general, why do you think that regulations with "substantial net-negative impact" passed wrt GMOs/nuclear?

< Also, I’m not sure there’s an actual moratorium on GM crops in Europe >

Yes, with "moratorium" I'm referring to a de-facto moratorium on new approvals of GMOs 1999-2002. In general, though, Europe grows far fewer GMOs than other countries: 0.1 million hectares annually versus >70 million hectares in the US. I wasn't aware Europe imports GMOs from abroad.

I appreciate the reply!

Widespread public mobilisation certainly helps to get more policymakers on board with regulation, but the mobilisation has to be caused by a scientific consensus and be pointing at the scientific consensus.

I was talking about the loudest of the activist voices: I’m worried policymakers might hear that the public is concerned about a hugely beneficial technology, instead of the public being concerned about the technical reasons for doom and pointing to the scientists who can explain these reasons.

For context, I want lots of narrow AI to be allowed; I also want things with the potential to kill everyone to be prevented from being created, with no chance of someone getting through, anywhere on the planet.

$957m in the US alone in 2023 on tech (less on AI): https://www.cnbc.com/2024/02/02/ai-lobbying-spikes-nearly-200percent-as-calls-for-regulation-surge.html

Thanks for the thorough analysis (you seem to have covered a lot of ground with little time!).

I have been interested in warning shots, albeit from biosecurity, but I think as you point out it could work quite well for AI too as long as take-off is not too fast. I am curious: Did you get a feeling that the movements you studied were able to make more of warning shots from having prepared and organized beforehand? Or could they quite effectively have impact by only retroactively responding to those events afterwards?

I found that for biosecurity, it seems possible that the chance of a warning shot is so high that it might be worthwhile preparing for it, especially if such preparations are likely to pay off. And to perhaps tie my comment a bit more closely to AI, it might be that the warning shot in bio is also a warning shot in AI, if the engineered pathogen was made possible by AI (there seems to be a possibility for quite a lot of catastrophe risk overlap between AI and bio).

Thank you @Ulrik Horn! I think warning shots may very well be important.

From my other piece: building up organizations in anticipation of future ‘trigger events’ is vital for protests, so that they can mobilize and scale in response – the organizational factor which experts thought was most important for protests. I think the same is true for GMOs: pre-existing social movements were able to capitalise on the trigger events of 1997/1998, in part, because of prior mobilisation starting in the 1980s.

I also think that an engineered pathogen event is a plausible warning shot for AI, though we should also broaden our scope of what could lead to public mobilisation. Lots of 'trigger events' for protest groups (e.g. Rosa Parks, Arab Spring) did not stem from warning shots, but from cases of injustice. Similarly, there weren't any 'warning shots' which posed harm for GMOs. (I say more about this in my other piece!)

This is really valuable research, thank you for investigating and sharing!

My take from this is that there are people out there who are very good (and sometimes lucky) at organising effective protests. As @Geoffrey Miller comments below (above?), the protesters were not always right. But this is how the world works. We do not have a perfect world government. We can still learn from what they did that worked!

I believe that the world would be a much better place if EAs influenced more policy decisions. And if protesters were supporting EA positions, it's highly likely that they'd be at least mostly right!

So hoping that this work will help us figure out how to get more EA-aligned protesters.

I will be investigating a related area in my BlueDot Research Project, so may post something more on this later. But this article has already been a great help!

Hi Denis! Thank you for this. I agree that more EA influence on policy decisions would be a good outcome. As I tried to set out in this piece, 'insiders' currently advising governments on AI policy would benefit from greater salience of AI as an issue, which protests could help bring about.

In terms of how we can get more EA-aligned protestors ... a really interesting question, and looking forward to seeing what you produce!

My initial thoughts: rational arguments about AI activism probably aren't necessary or sufficient for broader EA engagement. EAs aren't typically very ideological/political, and I think psychological factors ("even though I want to protest, is this what serious EAs do?") are strong motivators. I doubt many people seriously consider the efficacy/desirability of protests, before going on a protest. (I didn't, really). Once protests become more mainstream, I suspect more people will join. A rough-and-ready survey of EAs & their reasons not to protest would be interesting. @Gideon Futerman mentioned this in passing.

Another constraint on more EAs at protests is a lack of funding. This is endemic to protest groups more generally, and I think is also true for groups like PauseAI. I don't think there are any full-time organisers in the UK, for example.

Charlie - I appreciate your point about the lack of funding for AI-related protests.

There seems to be a big double standard here.

Many EA organizations are happy to spend tens of millions of dollars on 'technical AI alignment work', or AI policy/governance work, in hopes that they will reduce AI extinction risk. Although, IMHO, both have a very low chance of actually slowing AGI development, or resulting in safe alignment (given that 'alignment with human values in general' seems impossible, in principle, given the diversity and heterogeneity of human values -- as starkly illustrated in recent news from the Middle East.)

But the same EA organizations aren't willing, yet, to spend even a few tens of thousands of dollars on 'Pause AI' protests that, IMO, would have a much higher chance of sparking public discourse, interest, and concern about AI risks.

Funding protests is a tried-and-true method for raising public awareness. Technical AI alignment work is not a tried-and-true method for making AI safe. If our goal is to reduce extinction risk, we may be misallocating resources in directions that might seem intellectually prestigious, but that aren't actually very effective in the real world of public opinion, social media, mainstream media, and democratic politics.

Lightspeed Grants and my smaller individual donors should get credit for funding me to work on advocacy which includes protests full-time! Sadly afaik that is the only EA/adjacent funding that has gone toward public advocacy for AI Safety.

Thanks for writing this! I had one thought regarding how relevant saying no to some of the technologies you listed is to AGI.

In the case of nuclear weapons programs, the use of fossil fuels, CFCs, and GMOs, we actively used these technologies before we said no (FFs and GMOs we still use despite 'no', and nuclear weapons we have and could use at a moment's notice). With AGI, once we start using it, it might be too late. Geo-engineering experiments are the most applicable out of these, as we actually did say no before any (much?) testing was undertaken.

I agree restraining AGI requires "saying no" prior to deployment. In this sense, it is more similar to geo-engineering than fossil fuels: there might be no 'fire alarm'/'warning shot' for either.

Though, the net-present value of AGI (as perceived by AI labs) still seems very high, as evidenced by high investment in AGI firms. So, in this sense, the commercial incentives for continued development are similar to those for continued deployment of GMOs/fossil fuels/nuclear power. I think the GMO example might be the best, as it had both strong profit incentives and no 'warning shots'.

First of all - great concept and great execution. Lots of interesting information and a lucid, well-supported conclusion.

My initial, and I feel insufficiently addressed, concern is that successful protests will of course be overlooked, because in retrospect the technology they were protesting will seem "obviously doomed" or "not the right technology" etc. Additionally, successful protests are probably a lot shorter than the unsuccessful ones (which go on for years, continuing to try to stop something that is never stopped). I'm not sure this is evidence that the successful protests "don't count" because they were "too small."

I see you considered some of this in your (very interesting) section on "other interesting examples of technological restraint" but I would emphasize these and others like them are quite relevant as long as they obey your other constraints of similar-enough motivation and technology.

I was not able to come up with any examples to contribute. I feel there is a significant subset of things (pesticides, internet structural decisions, nonstandard incompatible designs?) that were attractive but would have resulted in a worse future had they not been successfully diverted.

Thank you!

I think your point about hindsight bias is a good one. I think it is true of technological restraint in general: "Often, in cases where a state decided against pursuing a strategically pivotal technology for reasons of risk, or cost, or (moral or risk) concerns, this can be mis-interpreted as a case where the technology probably was never viable."

I haven't discounted protests which were small – GMO campaigns and SAI advocacy were both small scale. The fact that unsuccessful protests are more prolonged might make them more psychologically available: e.g. Just Stop Oil campaigns. I'm slightly unsure what your point is here?

I also agree that other examples of restraint are relevant – particularly if public pressure was involved (like for Operation Popeye, and Boeing 2707).