Epistemic status: very uncertain about everything. Based on hunches, not very thought out, and tested on a small sample size using a survey that could have been designed much better. Done for a college project.

Summary: People might think moral patients matter less if it is moderately difficult to help them. I did some basic surveying on MTurk to test this, and it seems like it might be true in the context of donations.

Intro

The world is filled with moral catastrophes. An estimated 356 million children live in extreme poverty, an estimated 72 billion land animals are slaughtered for meat annually, and Toby Ord estimates existential risk in this century at 1 in 6. I’m pretty sure that most people agree that these things are very bad, yet do not spend much time working to fix them. I’ve been thinking about why this is the case and did some surveying on MTurk to test my hypothesis.

Moral sour grapes

A famished fox was frantically jumping

To get at some grapes that grew thickly,

Heavy and ripe on a high vine.

In the end he grew weary and gave up, thwarted,

And muttered morosely as he moved away:

"They're not worth reaching—still raw and unripe;

I simply can't stomach sour grapes."

Imagine Alex, who holds the following two beliefs:

- Belief 1: X is bad.

- Belief 2: Working on fixing X would require a lot of personal sacrifice.

Held together, these two beliefs do not imply much about personal action, as most people seem to have a threshold beyond which things are outside the realm of duty. Now, imagine that Alex comes across information that contradicts belief 2 and brings fixing X into the realm of duty.

- For X = “children dying of malaria”, they might find out that a human life can be saved for less than five thousand dollars.

- For X = “animal farming”, they might find that they could save hundreds of animals by going vegan.

Then they would either have to change their behavior or face the cognitive dissonance of thinking that something is bad, being able to make a small sacrifice to have a big impact, and yet not making the small sacrifice. I propose that people resolve this cognitive dissonance by either:

- rejecting belief 1 (by thinking that X isn’t that bad after all), or

- refusing to discard belief 2 (by being overly skeptical about ways to have an impact).

I call this effect moral sour grapes, but it’s very similar to Nate Soares’ tolerification.

Testing

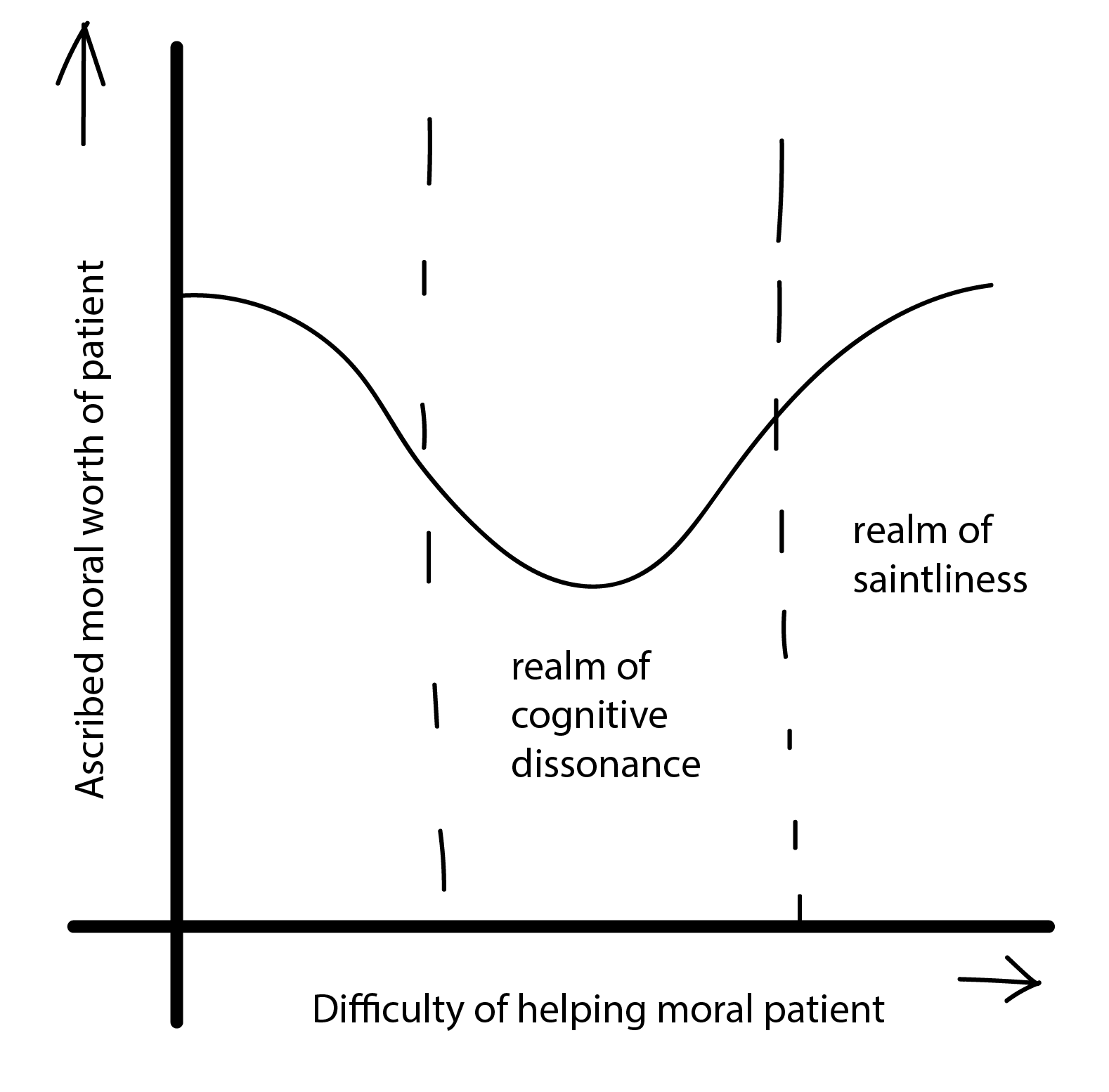

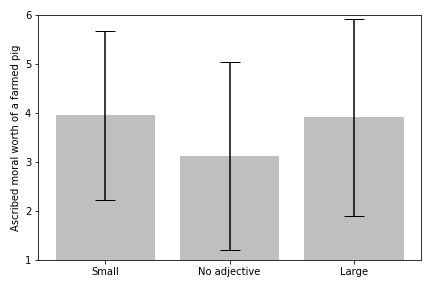

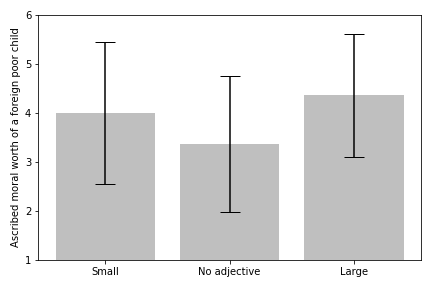

I decided to test this by surveying people on MTurk, asking them to rate the moral worth of the affected moral patients on a 7-point integer scale, where higher numbers represented more moral worth (4 being equivalent to the average moral worth of a human life). I varied the difficulty of helping them across groups of participants. If my hypothesis were true, then a graph of ascribed moral worth against difficulty would have a V shape. In other words, ascribed moral worth would drop as difficulty rose, but only up to a certain threshold: past the point where actions leave the realm of duty, there is no dissonance to resolve, so ascribed moral worth would recover. I did this in the context of lifestyle changes (N = 106) and donations (N = 85). For the lifestyle-change group there was no noticeable effect, while the donation results seem to match my hypothesis quite well, with a fairly prominent V shape.
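A minimal sketch of how per-group summaries like those in the figures might be computed, assuming each difficulty level's responses are stored as a list of integer ratings from 1 to 7. The difficulty levels, the ratings, and the helper names `summarize` and `is_v_shaped` are hypothetical placeholders, not the actual survey data or analysis. It reports the mean, the sample standard deviation, and the standard error of the mean (SD divided by √n), then applies a crude check for whether the lowest mean falls at an interior difficulty level.

```python
import math
from statistics import mean, stdev

# Hypothetical placeholder ratings on the 1-7 scale (4 = average human life),
# keyed by an illustrative difficulty level. NOT the actual survey responses.
ratings_by_difficulty = {
    1: [4, 5, 4, 6, 5],
    2: [3, 4, 3, 4, 2],
    3: [5, 4, 5, 6, 4],
}


def summarize(ratings):
    """Return (mean, sample standard deviation, standard error of the mean)."""
    n = len(ratings)
    sd = stdev(ratings)  # sample SD; requires at least two ratings
    return mean(ratings), sd, sd / math.sqrt(n)


def is_v_shaped(means):
    """Crude check: the lowest mean sits strictly between the two endpoints,
    and both endpoints are higher than it. Says nothing about significance."""
    lowest = min(range(len(means)), key=lambda i: means[i])
    interior = 0 < lowest < len(means) - 1
    return interior and means[0] > means[lowest] and means[-1] > means[lowest]


summaries = {d: summarize(r) for d, r in sorted(ratings_by_difficulty.items())}
for d, (m, sd, se) in summaries.items():
    print(f"difficulty {d}: mean={m:.2f} sd={sd:.2f} se={se:.2f}")

print("V-shaped means:", is_v_shaped([m for m, _, _ in summaries.values()]))
```

Standard-error bars are narrower than standard-deviation bars by a factor of √n, so the choice of error bar changes how wide the figures look. Neither the error bars nor this V check on their own say whether a dip is statistically significant.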

This could imply that making alternative meat less expensive could make people care more about animals, and that asking people to donate small amounts (highlighting the impact of a small donation) could make them care more about poor foreign children. Or at least, it could keep people from caring about them less, by causing less cognitive dissonance. I also wonder how the whole notion that “some actions are outside the realm of duty” could be dispelled, since some people (a large proportion of effective altruists, I would assume) don’t hold this belief. So, do moral sour grapes exist? Maybe!

Really cool! +1 for an interesting hypothesis and collecting real data to test it.

Are the bars standard errors or confidence intervals? In either case they seem rather wide. While the theory seems plausible, I don’t think you can make strong inferences from this data.

They're standard deviations; I've updated the figures, thanks! I strongly agree, this is weak evidence at best.