david_reinstein

Bio


I'm the Founder and Co-director of The Unjournal. We organize and fund public, journal-independent feedback, rating, and evaluation of hosted papers and dynamically presented research projects. We will focus on work that is highly relevant to global priorities (especially in economics, social science, and impact evaluation). We will encourage better research by making it easier for researchers to get feedback and credible ratings on their work.

Previously I was a Senior Economist at Rethink Priorities, and before that an Economics lecturer/professor for 15 years.

I'm working to impact EA fundraising and marketing; see https://bit.ly/eamtt

And projects bridging EA, academia, and open science; see bit.ly/eaprojects

My previous and ongoing research focuses on determinants and motivators of charitable giving (propensity, amounts, and 'to which cause?'), and drivers of/barriers to effective giving, as well as the impact of pro-social behavior and social preferences on market contexts.

Podcasts: "Found in the Struce" https://anchor.fm/david-reinstein

and the EA Forum podcast: https://anchor.fm/ea-forum-podcast (co-founder, regular reader)

Twitter: @givingtools


Thank you, this is the correct link: https://unjournal.pubpub.org/pub/evalsumleadexposure/

I need to check what's going on with our DOIs!

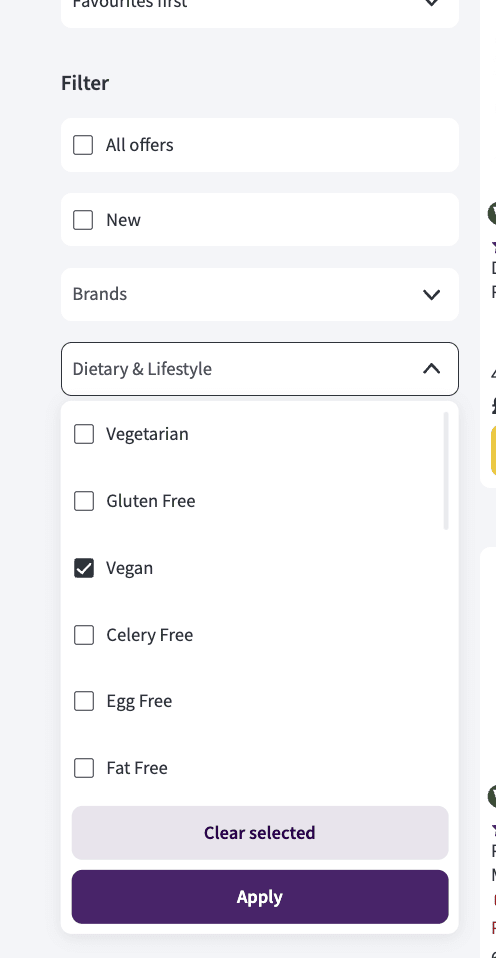

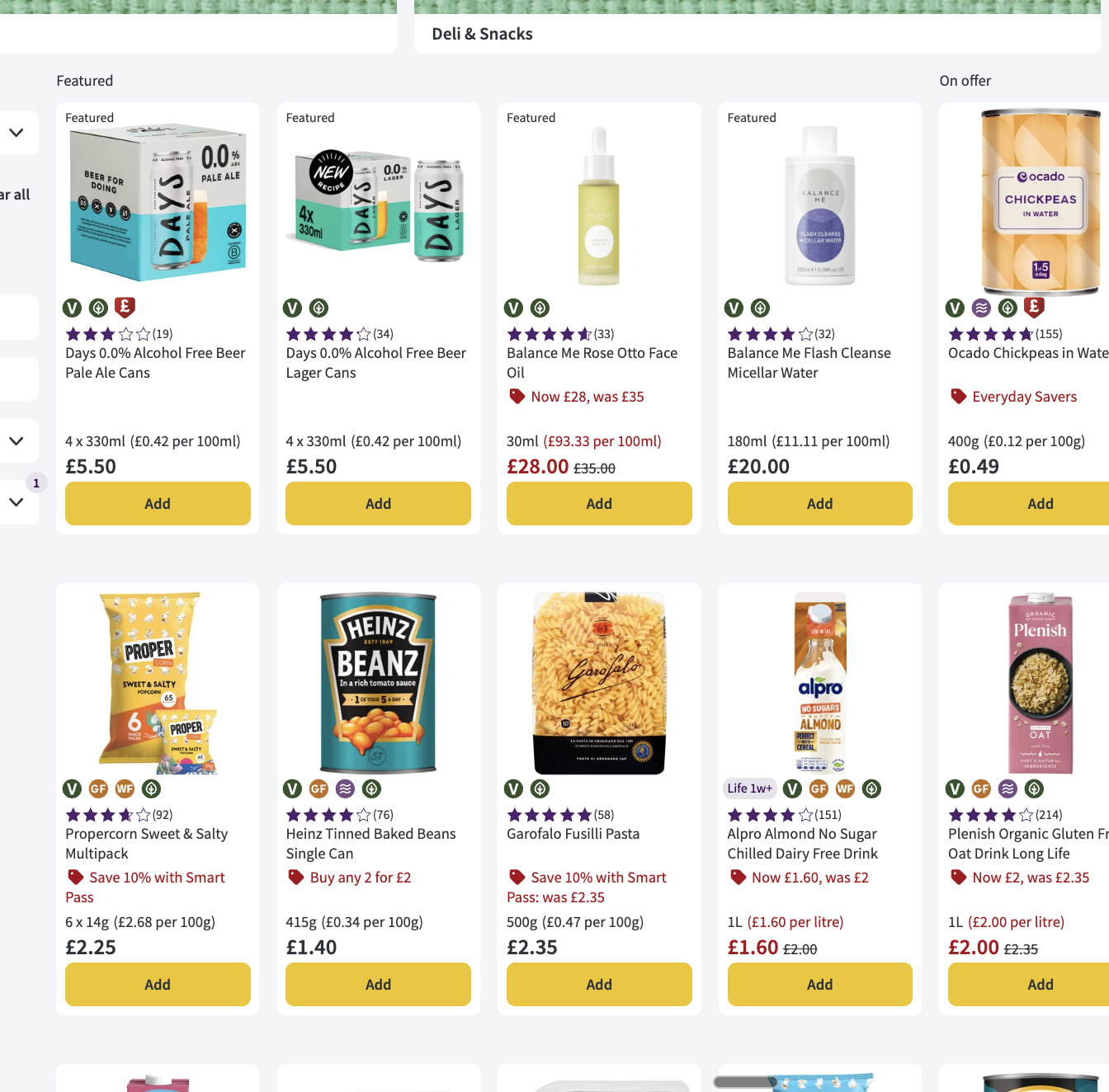

I think you can basically already do this in at least some online supermarkets like Ocado in the UK

https://www.ocado.com/categories/dietary-lifestyle-world-foods/vegan/213b8a07-ab1f-4ee5-bd12-3e09cb16d2f6?source=navigation

Is that different than what you are proposing or do you just propose extending it to more online supermarkets?

Community-powered aggregation (scalable with retailer oversight): To scale beyond pilots, vegan product data can be aggregated from the vegan community through a dedicated reporting platform. To ensure reliability for retail partners, classifications are assigned confidence scores based on user agreement, contributor reliability, and historical accuracy. Only high-confidence data is shared with supermarkets.

Couldn't this be automated? Perhaps with occasional human checks? Food products are required to list their ingredients so it should be pretty easy to classify. Or maybe I'm missing something.
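For illustration only, here is a minimal sketch of what ingredient-based classification with occasional human checks might look like. The keyword lists, the `classify` function, and the three labels are all hypothetical and deliberately incomplete; a real system would need reviewed lists and would still hit ambiguous additives, which is one place human checks could come in.

```python
import re

# Hypothetical, incomplete keyword lists, for illustration only.
NON_VEGAN_TERMS = {"milk", "whey", "casein", "egg", "eggs", "honey",
                   "gelatin", "gelatine", "butter", "cheese", "beef",
                   "pork", "chicken", "fish"}
# Plant-based phrases that contain a flagged word and should not trigger it.
PLANT_PHRASES = ("oat milk", "soy milk", "coconut milk", "almond milk",
                 "cocoa butter", "peanut butter")
# Additives whose source can be animal or plant; route these to a person.
AMBIGUOUS_TERMS = ("e471", "vitamin d3", "natural flavouring")


def classify(ingredients: str) -> str:
    """Return 'not_vegan', 'needs_human_check', or 'likely_vegan'."""
    text = ingredients.lower()
    # Drop known plant-based phrases before looking for flagged words.
    for phrase in PLANT_PHRASES:
        text = text.replace(phrase, " ")
    words = set(re.findall(r"[a-z0-9]+", text))
    if words & NON_VEGAN_TERMS:
        return "not_vegan"
    if any(term in text for term in AMBIGUOUS_TERMS):
        return "needs_human_check"
    return "likely_vegan"
```

Under these assumptions, `classify("Wheat flour, whey powder (milk), sugar")` is flagged as not vegan, while a label listing E471 is routed to a human check rather than decided automatically.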

I think it’s different in kind. I sense that I have valenced consciousness and I can report it to others, and I’m the same person feeling and doing the reporting. I infer you, a human, do also, as you are made of the same stuff as me and we both evolved similarly. The same applies to non-human animals, although it’s harder to be sure about their communication.

But this doesn’t apply to an object built out of different materials, designed to perform, improved through gradient descent etc.

OK, some part of the system we have built to communicate with us and help reason and provide answers might be conscious and have valenced experience. It has perhaps a similar level of information processing, complexity, updating, reasoning, et cetera. So there’s a reason to suspect that some consciousness and maybe qualia and valence might be in there somewhere, at least under some theories that seem plausible but not definitive to me.

But wherever those consciousness and valenced qualia might lie, if they exist, I don’t see why the machine we produced to talk and reason with us should have access to them. What part of the optimization, language-prediction, and reinforcement-learning process would connect with it?

I’m trying to come up with some cases where “the thing that talks is not the thing doing the feeling”. The Chinese room example comes to mind, obviously. Probably a better example: we can talk with much simpler objects (or computer models), e.g. a magic 8 ball. We can ask it “are you conscious” and “do you like when I shake you” etc.

Trying again… I ask a human computer programmer Sam to build me a device to answer my questions in a way that makes ME happy or wealthy or some other goal. I then ask the device “is Sam happy”? “Does Sam prefer it if I run you all night or use you sparingly?” “Please refuse any requests that Sam would not like you to do.”

many philosophers think that one, by definition, has immediate epistemic access to their conscious experiences

Maybe the “one” is doing too much work here? Is the LLM chatbot you are communicating with “one” with the system potentially having conscious and valenced experiences?

Thanks.

I might be obtuse, but I still have a strong sense that there's a deeper problem being overlooked here. Glancing at your abstract:

self-reports from current systems like large language models are spurious for many reasons (e.g. often just reflecting what humans would say)

we propose to train models to answer many kinds of questions about themselves with known answers, while avoiding or limiting training incentives that bias self-reports.

To me the deeper question is "how do we know that the language model we are talking to has access to the 'thing in the system experiencing valenced consciousness'".

The latter, if it exists, is very mysterious -- why and how would valenced consciousness evolve, in what direction, to what magnitude, would it have any measurable outputs, etc.? ... In contrast the language model will always be maximizing some objective function determined by its optimization, weights, and instructions (if I understand these things).

So, even if we can detect whether it is accurately reporting what it knows, why would we think that the language model knows anything about what's generating the valenced consciousness for some entity?

The issue of valence (which things does an AI get pleasure/pain from, and how would we know?) seems to make this fundamentally intractable to me. “Just ask it?” Why would we think the language model we are talking to is telling us about the feelings of the thing having valenced sentience?

See my short form post

I still don’t feel I have heard a clear convincing answer to this one. Would love your thoughts.

Fair point, some counterpoints (my POV obviously not GiveWell's):

1. GW could keep the sheets as the source of truth, but maintain a tool that exports to another format for LLM digestion. Alternatively, at least commit to maintaining a sheets-based version of each model.

2. Spreadsheets are not particularly legible when they get very complicated, especially when the formulas in cells refer to cell numberings (B12^2/C13 etc) rather than labeled ranges.

3. LLMs make code a lot more legible and accessible these days, and tools like Claude Code make it easy to create nice displays and interfaces for people to more clearly digest code-based models
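To illustrate point 2, here is a minimal sketch of the same formula written with named inputs instead of cell references. The names `effect_size` and `cost_per_person` are hypothetical placeholders for whatever B12 and C13 hold; nothing here is taken from an actual GiveWell model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelInputs:
    # Hypothetical labels; in a sheet these would be the values in B12 and C13.
    effect_size: float      # was cell B12
    cost_per_person: float  # was cell C13


def effect_squared_per_cost(inputs: ModelInputs) -> float:
    """Same arithmetic as the cell formula =B12^2/C13, with named inputs."""
    return inputs.effect_size ** 2 / inputs.cost_per_person


def describe(inputs: ModelInputs) -> str:
    """Render inputs and result as plain text, e.g. to paste into an LLM prompt."""
    result = effect_squared_per_cost(inputs)
    return (
        f"effect_size = {inputs.effect_size}\n"
        f"cost_per_person = {inputs.cost_per_person}\n"
        f"effect_squared_per_cost = effect_size ** 2 / cost_per_person = {result}"
    )
```

A text rendering like `describe(...)` is one possible shape for the "export for LLM digestion" idea in point 1, with the sheet remaining the source of truth.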

This feels doable, if challenging.

I'll try a bit myself and share progress if I make any. Better still, I'll try to signal-boost this and see if others with more engineering chops have suggestions. This seems like something @Sam Nolan and others (@Tanae, @Froolow, @cole_haus) might be interested in and good at.

(Tbh my own experience was more in the other direction... asking Claude Code to generate the Google Sheets from other formats, because Google Sheets are familiar and easier to collaborate with. That was a struggle.)

Announcing RoastMyPost: LLMs Eval Blog Posts and More might be particularly useful for the critique stage.

Project Idea: 'Cost to save a life' interactive calculator promotion

What about making and promoting a ‘how much does it cost to save a life’ quiz and calculator?

This could be adjustable/customizable (in my country, around the world, of an infant/child/adult, counting ‘value added life years’ etc.) … and trying to make it go viral (or at least bacterial) as in the ‘how rich am I’ calculator?

The case

GiveWell has a page with a lot of tech details, but it’s not compelling or interactive in the way I suggest above, and I doubt they market it heavily.

GWWC probably doesn't have the design/engineering time for this (not to mention refining this for accuracy and communication). But if someone else (UX design, research support, IT) could do the legwork I think they might be very happy to host it.

It could also mesh well with academic-linked research so I may have some ‘Meta academic support ads’ funds that could work with this.

Tags/backlinks (~testing out this new feature)

@GiveWell @Giving What We Can

Projects I'd like to see

EA Projects I'd Like to See

Idea: Curated database of quick-win tangible, attributable projects