A witty politician once said, "The world belongs to the old. The young ones just look better." He had a point. Power, seniority, and influence tend to come with age. As I walked around the conference halls at EAG Global in the Bay Area this February, the average age seemed to be in the mid-20s or so. While youthfulness has its benefits, such as fewer family or career commitments, openness to changing careers, and potentially more time for volunteering, I believe we miss out on the power, wisdom, and diversity that older participants could bring. Even people in their mid-to-late 40s appeared quite rare at the conference.

Why is it important to have age diversity and more older participants?

- Power and Influence: Leaders and managers are typically older than their employees. The median age in the U.S. House of Representatives is around 58. Baby Boomers (now mostly in their 60s and 70s) hold about 50% of America's wealth. I assume similar trends apply globally.

- Experience and Wisdom: Older individuals bring experience gained through years of trial and error.

- Diversity: Opinions, perspectives, and priorities naturally evolve with age, so a wider age range brings a wider range of views.

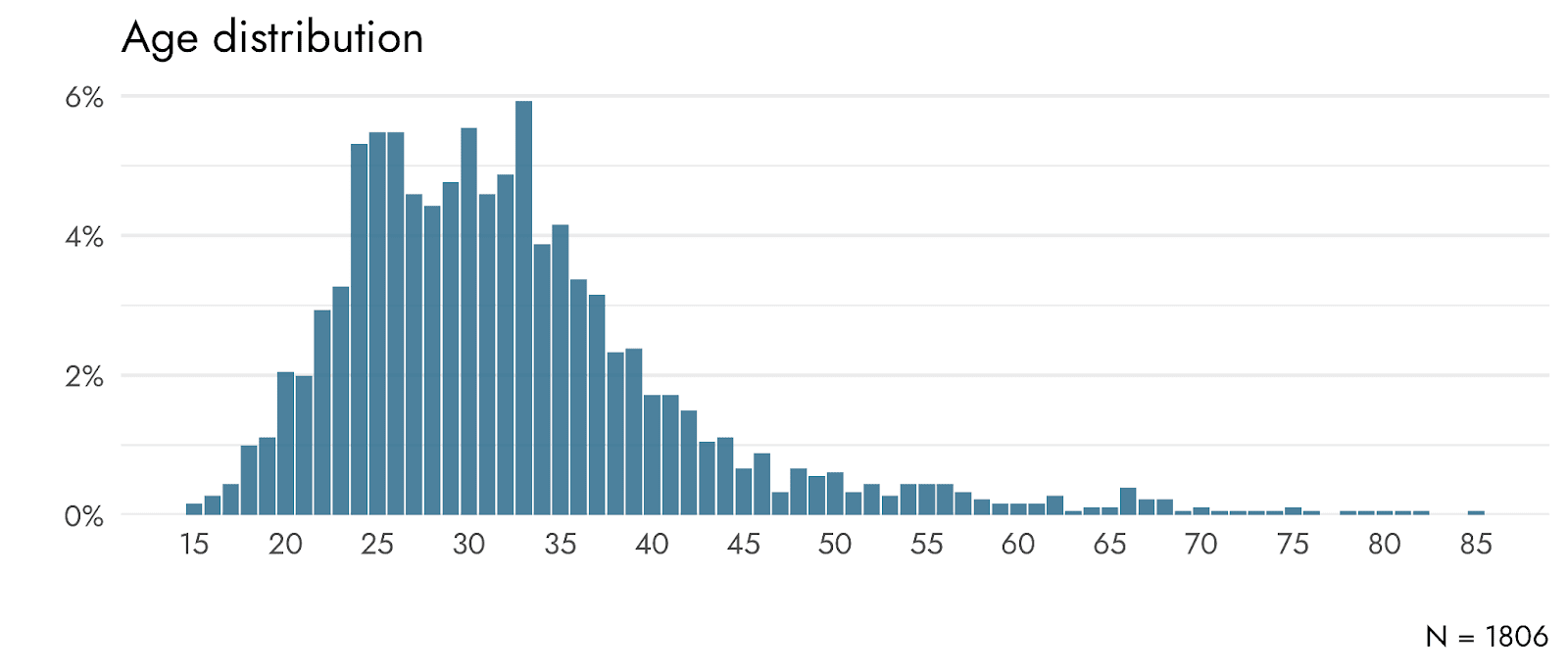

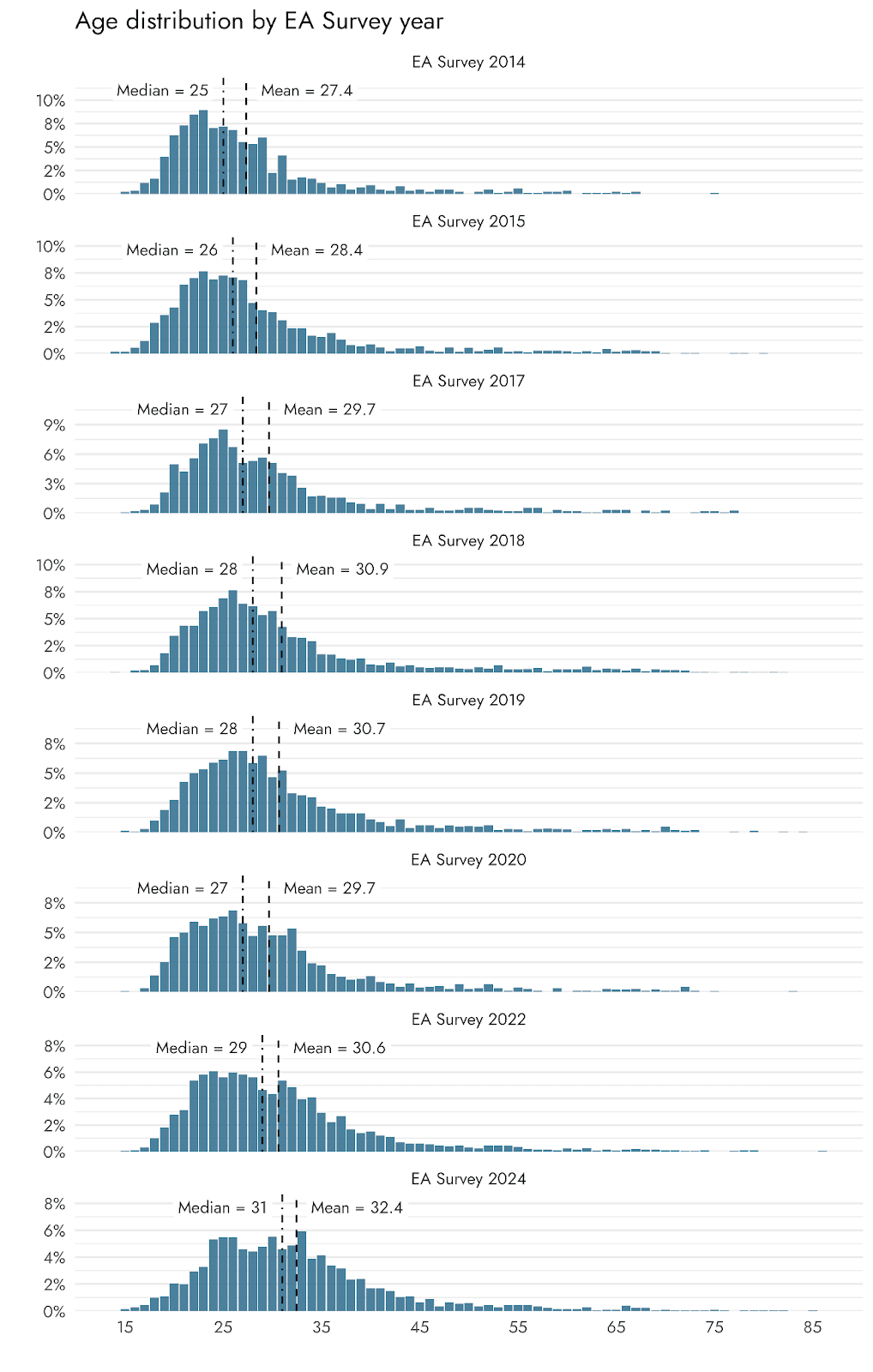

The Effective Altruism movement remains notably youthful. According to the EA Survey 2020, participants had a median age of 27 (mean 29).

Why is our movement, after being around for ~15 years (depending on how you count), less appealing to older participants?

How can Effective Altruism become more attractive to older demographics?

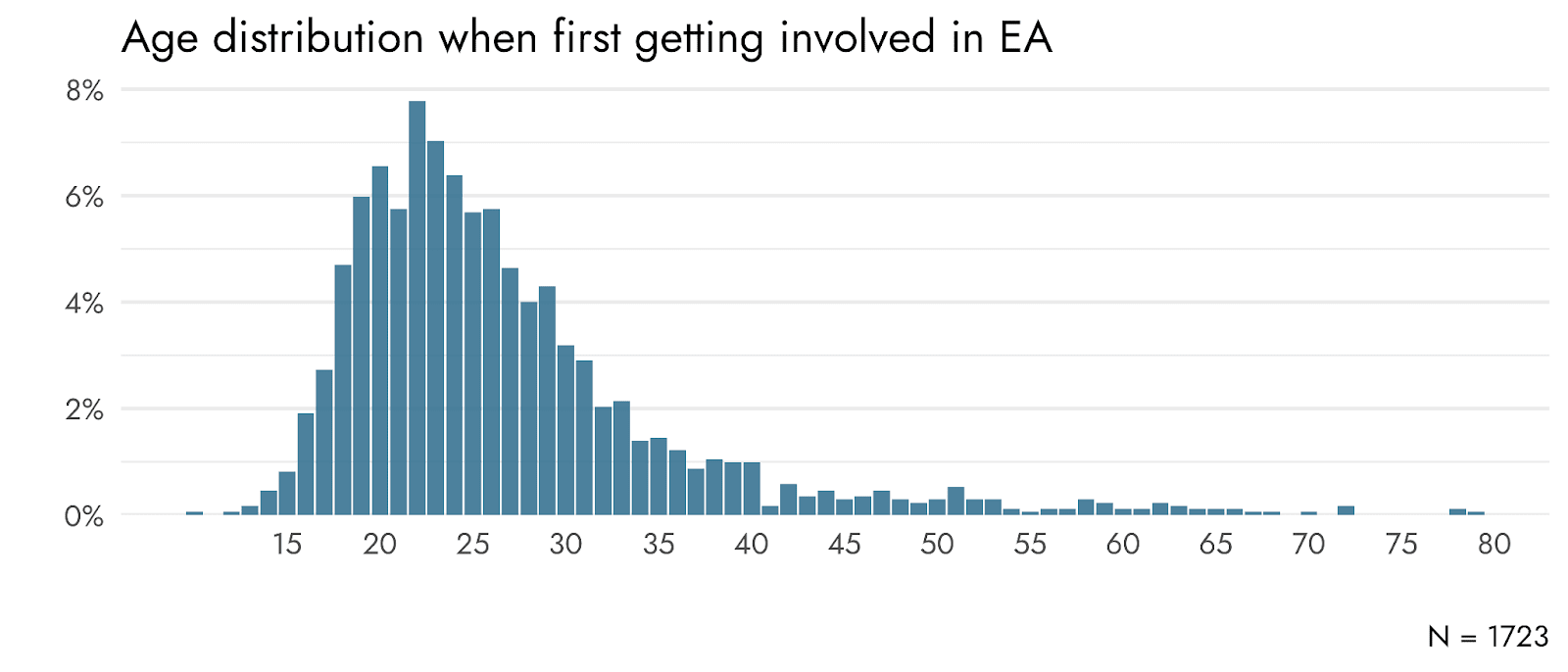

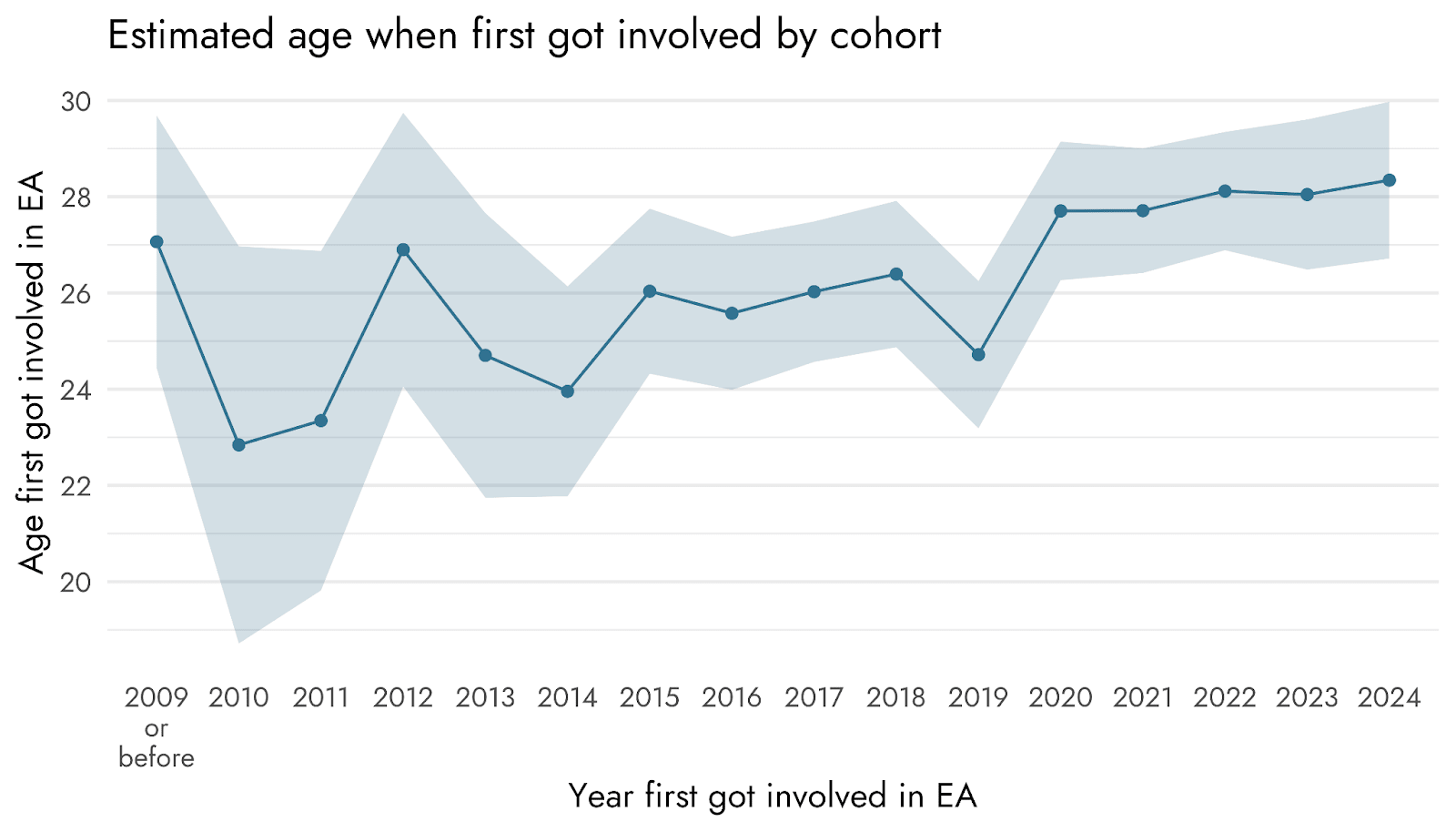

I expect there are some cohort effects (people from more recent generations are more likely to be involved). In particular, many people get into EA via university groups (although that may not be where they 'first heard' about it; see David Moss's reply), and these groups have only been around for a decade or so.

But I also suspect there are some pure age effects (as people age, they leave EA or become less likely to join), perhaps driven by factors like:

1. Homophily/identity/herding: If you see only people unlike you in age, you're less likely to feel you belong. This leads to inertia: a young community keeps attracting mostly young newcomers.

2. Cost and family priorities: EA expects (or at least encourages) people to donate a substantial share of their income, or to do directly impactful work (which may be less well paid or less secure). For older people, the donation share or lost income could seem more substantial, especially if they are used to a certain lifestyle. Or, probably more significantly, for parents it may be harder to do what seems like 'taking money away from their children'.

3. Status and prestige issues: EA leaders tend to be young, and EA doesn't value seniority or credentials as much as many other institutions do (which is probably a good thing). But older people might feel somewhat disrespected by this. Or, as a second-order effect, they might think that their age-peers and colleagues will think less of them if they are following or 'taking direction' from 'a bunch of kids'. E.g., as a junior professor at an academic conference, you might feel insecure if you were seated at the grad students' table.

4. The relevant issues and expertise tend to be 'new stuff' that older people haven't learned or aren't familiar with. AI safety is the biggest example, but there are others, such as Bayesian approaches.

(Identity politics bit: I'm 48 years old, and some of this is based on my own impressions, but not all of it.)