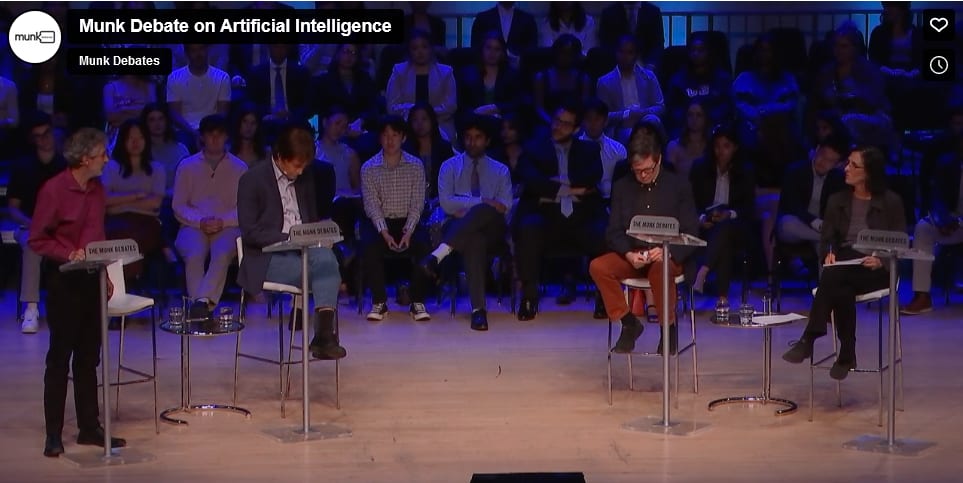

On June 22nd, there was a “Munk Debate”, facilitated by the Canadian Aurea Foundation, on the question of whether “AI research and development poses an existential threat” (you can watch it here, which I highly recommend). On stage were Yoshua Bengio and Max Tegmark as proponents and Yann LeCun and Melanie Mitchell as opponents of the central thesis. This seemed like an excellent opportunity to compare their arguments and the effects they had on the audience, in particular because in the Munk Debate format, the audience votes on the issue both before and after the debate.

The vote at the beginning showed that 67% of the audience supported the existential threat hypothesis and 33% opposed it. Interestingly, the audience was also asked whether they were prepared to change their minds depending on how the debate went, and 92% answered “yes”. The moderator later called this extraordinary and a possible record for the format. While this is of course not representative of the general public, it mirrors the high uncertainty that most ordinary people feel about AI and its impact on our future.

I am of course heavily biased. I would have counted myself among the 8% of people who were unwilling to change their minds, and indeed I’m still convinced that we need to take existential risks from AI very seriously. While Bengio and Tegmark have strong arguments from years of alignment research on their side, LeCun and Mitchell have often made weak claims in public. So I was convinced that Bengio and Tegmark would easily win the debate.

However, when I skipped to the end of the video before watching it, there was an unpleasant surprise waiting for me: at the end of the debate, the audience had seemingly switched to a more skeptical view, with now only 61% accepting an existential threat from AI and 39% dismissing it.

What went wrong? Had Max Tegmark and Yoshua Bengio really lost a debate against two people I hadn’t taken very seriously before? Had the whole debate somehow been biased against them?

As it turned out, things were not so clear. At the end, the voting system apparently broke down, so the audience wasn’t able to vote on the spot. Instead, they were later asked for their vote by email. It is unknown how many people responded, so the difference could well be due to random error. However, it does seem to me that LeCun and Mitchell, although they clearly had far weaker arguments, came across as quite convincing. During a show of hands, a simple count of the people seated behind the stage, who can be seen in the video, results in almost a tie. The moderator’s words also seem to indicate that he couldn’t see a clear majority for either side in the audience, so the actual shift may have been even worse.

In the following, I assume that Bengio and Tegmark were indeed not as convincing as I had hoped. It seems worthwhile to look at this in some more detail to learn from it for future discussions.

I will not give a detailed description of the debate; I recommend you watch it yourself. However, I will summarize some key points and will give my own opinion on why this may have gone badly from an AI safety perspective, as well as some learnings I extracted for my own outreach work.

The debate was well structured and very professionally moderated by Munk Debates chair Rudyard Griffiths. If anything, he seemed sympathetic to the view that AI poses an existential threat; he definitely wasn’t biased against it. At the beginning, each participant gave a 6-minute opening statement, after which each could reply to what the others had said in a brief rebuttal. This was followed by an open discussion of about 40 minutes, after which the participants summarized their viewpoints again in closing statements. Overall, I would say the debate was fair and neither side made significant mistakes or blunders.

I will not repeat all the points the participants made; instead, the following table gives a brief overview of their stances on various issues as I understood them:

| | Tegmark | Bengio | LeCun | Mitchell |
|---|---|---|---|---|
| Is AI R&D an existential risk? | Yes | Yes | No, we will keep AI under control | No, this is just science fiction/not grounded in science |
| What is the probability of an existential risk from AI? | High enough to be concerned (>10%) | Edit: probability of ASI 10%-50% according to people I spoke to | As small as that of being wiped out by an asteroid | Negligible |
| Is ASI possible in the foreseeable future? | Yes | Yes, 5-20 years | Yes, although there are still important elements missing | No |
| Is there an x-risk from malicious actors using AI? | Yes | Yes | No, because the good guys will have superior AI | No, AI will not make already existing threats much worse |
| Is there an x-risk from rogue AI? | Yes | Yes | No, because we won’t build AI that isn’t safe | No, AI will be subhuman for a long time |
| Is there an x-risk from human disempowerment? | Yes | Yes | No, AI will always be docile and make people stronger | No, AI will be subhuman for a long time |
| Will ASI seek power? | Yes | Yes | No, we will make AI docile; intelligence is not correlated with dominance | No, AI has no will of its own |
| Is the orthogonality thesis correct? (The term wasn’t mentioned directly in the debate) | Yes | Yes | No, intelligence is generally beneficial | No, an ASI would be smart enough to understand what we really want |
| What are the pros and cons of taking AI x-risk seriously? | Pro: We need to take it seriously to do what is necessary and prevent worst-case scenarios | Pro: We need to take it seriously to do what is necessary and prevent worst-case scenarios | Contra: Being too cautious stifles innovation and will prevent us from reaping the benefits of AI | Contra: It takes away attention from the real (short-term) risks of AI |
| Specific talking points | We need to be humble, cannot simply assume that ASI will be safe or impossible | I have been working in AI for a long time and was convinced that ASI is a long way off, but I changed my mind after ChatGPT/GPT-4 | Yes, current technology could go wrong, but we can and will prevent that. AI development should be open-source. | This is all just hype/science fiction, there is no evidence for ASI/x-risks, people have always been afraid of technology |

My heavily biased summary of the discussion: Bengio and Tegmark argue based on two decades of alignment research. LeCun and Mitchell, by contrast, merely offer heuristics of the kind “people were scared about technology in the past and all went well, so there is no need to be scared now”, combined with almost ridiculous optimism on LeCun’s part (along the lines of “We will not be stupid enough to build dangerous AI” and “We will be able to build benevolent ASI by iteratively improving it”) and arrogant dismissiveness on Mitchell’s part towards people like Yoshua Bengio and Geoffrey Hinton, whose concerns she called “ungrounded speculations” and even “dangerous”. Neither Mitchell nor LeCun seems very familiar with standard AI safety topics like instrumental goals and the orthogonality thesis, let alone agentic theory.

No big surprises here. But one thing becomes apparent: Bengio and Tegmark have almost identical views, while LeCun and Mitchell disagree on many topics. Somewhat paradoxically, this may have helped the LeCun/Mitchell side in various ways (the following is highly speculative):

- Mitchell’s dismissiveness may have shifted the audience’s Overton window towards the possibility that Bengio and Tegmark, despite their credentials, might somehow be deluded.

- This may have strengthened LeCun’s more reasonable (in my view) stance: he admitted that ASI is possible and could pose a risk, but at the same time dismissed an x-risk on the grounds that no one would be stupid enough to build an unsafe ASI.

- LeCun came across as somewhat “in the middle of the spectrum” between Mitchell’s total x-risk dismissal and Tegmark’s and Bengio’s pro x-risk stance, so people unsure about the issue may have taken his side.

- Mitchell attacked Bengio and Tegmark indirectly multiple times, calling their opinions unscientific, ungrounded speculation, science fiction, etc. In contrast, Bengio and Tegmark were always respectful and polite, even when Tegmark challenged Mitchell. This may have further increased LeCun’s credibility, since there were no attacks on him and he didn’t attack anyone himself.

Although Bengio and Tegmark did a good job of explaining AI safety in layman’s terms, their arguments were probably a bit difficult to grasp for people with no prior knowledge of AI safety. Mitchell’s counter-heuristics, on the other hand (“people have always been afraid of technology”, “don’t trust the media when they hype a problem”), are familiar to almost everyone. Therefore, the debate may have appeared balanced to outsiders, even though, at least to me, it is obvious that one side was arguing from science and rationality while the other was not.

I have drawn a few lessons for my own work and would be interested in your comments on these:

- Explaining AI safety to the general public is even more important than I previously thought, if only to strengthen the arguments of the leading AI safety researchers in similar situations.

- We cannot rely on logical arguments alone. We need to actively address the counter-heuristics and make it clear why they are not applicable and misleading.

- It may be a good idea to enter such a debate with a specific framework to build your arguments on. For example, Tegmark or Bengio could have introduced orthogonality and instrumental goals right from the start and referred back to that framework whenever LeCun and Mitchell argued that ASI would have no reason to do bad things, or that intelligence is always beneficial. I personally would probably have used a frame I call the “game of dominance”, which I use to explain why AI doesn’t have to be human-like or ASI to become uncontrollable.

- It seems like a good idea to have a mix of differing opinions on your side, even somewhat extreme (though grounded in rationality) positions – these will strengthen the more moderate stances. In this specific case, a combination of Bengio and e.g. Yudkowsky may have been more effective.

- Being polite and respectful is important. While Mitchell’s dismissiveness may have helped LeCun, it probably hurt her own reputation, both in the AI safety community and in the general public.

As a final remark, I would like to mention that my personal impression of Yann LeCun did improve while watching the debate. I don’t think he is right in his optimistic views (and I’m not even sure if this optimism is his true belief, or just due to his job as chief AI scientist at Meta), but at least he recognizes the enormous power of advanced AI and admits that there are certain things that must not be done.

Haven't watched it yet, but perhaps we should be cautious about calling some arguments "heuristics" and others "logical arguments". I think the distinction isn't completely clear, and some arguments for AI extinction risk could be considered heuristics.

I agree that we should always be cautious when dismissing another's arguments. I also agree that some pro-x-risk arguments may be heuristics. But I think the distinction is quite important.

A heuristic is a rule of thumb, often based on past experience. If you claim "tomorrow the sun will rise in the east because that's what it has always done", that's a heuristic. If you instead say "Earth is a ball circling the sun while rotating on its axis; reversing this rotation would require enormous forces and is highly unlikely, therefore we can expect that it will look like the sun is rising in the east tomorrow", that's not a heuristic, but a logical argument.

Heuristics work well in situations that are more or less stable, but they can be misleading when the situation is unusual or highly volatile. We're living in very uncommon times, therefore heuristics are not good arguments in a discussion about topics like AI safety.

The claim "people have always been afraid of technology, and it was always unfounded, so there is no need to be afraid of AI x-risk now" is a typical heuristic, and it is highly misleading (and also wrong IMO: the fear of a nuclear war is certainly not unfounded, for instance, and some of the bad consequences of technology, like climate change and environmental destruction, are only now becoming apparent).

The problem with heuristics is that they are unreliable. They prove nothing. At the same time, they are very easy to understand, and therefore very convincing. They have a high potential for misleading people and clouding their judgment. Therefore, we should avoid and counter them wherever we can, or at least make it transparent that they are just rules of thumb based on past experience, not logical conclusions based on a model of reality.

Edit: I'd be interested to hear what the people who disagree with this think. Seriously. This may be an important discussion.

I think the issue is that we are talking about a highly speculative event, and thus the use of heuristics is pretty much unavoidable in order for people to get their bearings on the topic.

Take the heuristic that Tegmark employed in this debate: that the damage potential of human weapons has increased over time. He talks about how we went from sticks, to guns, to bombs, killing dozens, to hundreds, to millions.

This is undeniably a heuristic, but it's used to prime people for his later logical arguments as to why AI is also dangerous, like these earlier technologies.

Similarly, when Yann LeCun employs the heuristic that "in the past, people have panicked about new technology, but it always turned out to be overblown", it's a priming device, and he later goes on to make logical arguments for why he believes the risk to be overblown in this case as well.

I don't believe either heuristic is wrong to use here. I think they are both weak arguments that are used as setup for later points. I do think the OP has been selective in their calling out of "heuristics".

Thank you for clarifying your view!

This is not a heuristic. It would be a heuristic if he had argued "Because weapons have increased in power over time, we can expect that AI will be even more dangerous in the future". But that's not what he did if I remember it correctly (unfortunately, I don't have access to my notes on the debate right now, I may edit this comment later). However, he may have used this example as priming, which is not the same in my opinion.

Mitchell in particular seemed to argue that AI x-risk is unlikely and talking about it is just "ungrounded speculation" because fears have been overblown in the past, which would count as a heuristic, but I don't think LeCun used it in the same way. But I admit that telling it apart isn't easy.

The important point here is not so much whether using historical trends or other unrelated data in arguments is good or bad, it's more whether the argument is built mainly on these. As I see it:

Tegmark and Bengio argued that we need to take x-risk from AI seriously because we can't rule it out. They gave concrete evidence for that, e.g. the fast development of AI capabilities in the past years. Bengio mentioned how that had surprised him, so he had updated his probability. Both admitted that they didn't know with certainty whether AI x-risk was real, but gave it a high enough probability to be concerned. Tegmark explicitly asked for "humbleness": Because we don't know, we need to be cautious.

LeCun mainly argued that we don't need to be worried because nobody would be stupid enough to build a dangerous ASI without knowing how to control it. So in principle, he admitted that there would indeed be a risk if there was a probability that someone could be stupid enough to do just that. I think he was closer to Bengio's and Tegmark's viewpoints on this than to Mitchell's.

Mitchell mainly argued that we shouldn't take AI x-risk seriously because a) it is extremely unlikely that we'll be able to build uncontrollable AI in the foreseeable future and b) talking about x-risk is dangerous because it takes away energy from "real" problems. a) was in direct contradiction to what LeCun said. The evidence she provided for it was mainly a heuristic ("people in the 60's thought we were close to AGI, and it turned out they were wrong, so people are wrong now") and an anthropomorphic view ("computers aren't even alive, they can't make their own decisions"), which I would also count as a heuristic ("humans are the only intelligent species, computers will never be like humans, therefore computers are very unlikely to ever be more intelligent than humans"), but this may be a misrepresentation of her views. In my opinion, she gave no evidence at all to justify her claim that two of the world's leading AI experts (Hinton and Bengio) were doing "ungrounded speculation". b) is irrelevant to the question debated and also a very bad argument IMO.

I admit that I'm biased and my analysis may be clouded by emotions. I'm concerned about the future of my three adult sons, and I think people arguing like LeCun and, even more so, Mitchell are carelessly endangering that future. That is true for your own future as well, of course.

Hey, thanks for engaging. I am also concerned about the future, which is why I think it's incredibly important to get the facts right, and ensure that we aren't being blinded by groupthink or poor judgment.

I don't think your "heuristic" vs "argument" distinction is sufficiently coherent to be useful. I prefer to think of it all as evidence, and talk about the strength of that evidence.

That weapons have gotten more deadly over time is evidence in favour of AI danger, it's just weak evidence. That the AI field has previously fallen victim to overblown hype is evidence against imminent AI danger, it's just weak evidence. We're talking about a speculative event, so extrapolation from the past/present is inevitable. What matters is how useful/strong such extrapolations are.

You talk about Tegmark citing recent advances in AI as "concrete evidence" that a future AGI will be capable of world domination. But obviously you can't predict long-term outcomes from short-term trends. LeCun/Mitchell retort that AI is still incredibly bad at many seemingly easy tasks, and that AI hype has occurred before, so they extrapolate the other way, saying it will stall out at some point.

Who is right? You can't figure that out by the semantics of "heuristics". To get an actual answer, you have to dig into actual research on capabilities and limitations, which was not done by anybody in this debate (mainly because it would have been too technical for a public-facing debate).

I agree in principle. However, I still think that there's a difference between a heuristic and a logical conclusion. But not all heuristics are bad arguments. If I get an email from someone who wants to donate $10,000,000 to me, I use the heuristic that this is likely a scam without looking for further evidence. So yeah, heuristics can be very helpful. They're just not very reliable in highly unusual situations. In German comments, I often read "Sam Altman wants to hype OpenAI by presenting it as potentially dangerous, so this open letter he signed must be hype". That's an example of how a heuristic can be misleading. It is ignoring the fact, for example, that Yoshua Bengio and Geoffrey Hinton also signed that letter.

No. Tegmark cites this as concrete evidence that a future uncontrollable AGI is possible and that we shouldn't carelessly dismiss this threat. He readily admits that there may be unforeseen obstacles, and so do I.

I fully agree.

I feel like people might be interested in my opinions on the debate, as an AI doom skeptic.

I think that this live debate format is not particularly useful for truth-finding, and in this case it mostly ended up with shallow, 101-level arguments (on all sides). Nonetheless, it was a decent discussion, and I think everyone was polite and arguing in good faith. I definitely disagree with the OP that Mitchell was being "dismissive" for stating her honest belief that near-term AGI is unlikely. This is a completely valid position held by a significant portion of AI researchers.

I thought Bengio was the best debater of the bunch, as he was calm and focused on the most convincing element of the AI risk argument (that of malicious actors misusing AI). I respect that he emphasised his own uncertainty a lot.

LeCun did a lot better than I was expecting; I think he laid out his case pretty well and made a lot of good arguments. I think he might have misled the audience a little by not stating that his plan for controllable AI was speculative in nature.

I found Tegmark to be quite bad in this debate. He overrelied on unsupported speculation and appeals to authority, and didn't really respond to his opponents in his rebuttals. I found his repeated asking for probabilities to be a poor move: this may be the norm in EA spaces, but it looks very annoying to outsiders and is barely an argument. I think he is unused to communicating with the general public; I spotted a few occasions where he used the terms "alignment" and "safety" in ways that would not have been obvious to an onlooker.

Mitchell was generally fine and did bring up some good arguments (like that intelligent AI would be able to figure out what we wanted), but she seemed a little unprepared and wasn't good at responding to the others' arguments. I think she would have done well to research the counterarguments to her points in order to address them better.

Overall, I thought it was generally fine as an intro to the layman.

I didn't state that. I think Mitchell was "dismissive" (even aggressively so) by calling the view of Tegmark, Bengio, and indirectly Hinton and others "ungrounded speculations". I have no problem with someone stating that AGI is unlikely within a specific timeframe, even if I think that's wrong.

I agree with most of what you wrote about the debate, although I don't think that Mitchell presented any "good" arguments.

It probably would, but that's not the point of the alignment problem. The problem is that even if it knows what we "really" want, it won't care about it unless we find a way to align it with our values, needs, and wishes, which is a very hard problem (if you doubt that, I recommend watching this introduction). We understand pretty well what chickens, pigs, and cows want, but we still treat them very badly.

I think calling their opinions "ungrounded speculation" is an entirely valid opinion, although I would personally use the more diplomatic term "insufficiently grounded speculation". She acknowledges that they have reasons for their speculation, but does not find those reasons to be sufficiently grounded in evidence.

I do not like that her stating her opinions and arguments politely and in good faith is being described as "aggressive". I think this kind of hostile attitude towards skeptics could be detrimental to the intellectual health of the movement.

As for your alignment thoughts, I have heard the arguments and disagree with them, but I'll just link to my post on the subject rather than drag it in here.

I disagree on that. Whether politely said or not, it disqualifies another's views without any arguments at all. It's like saying "you're talking bullshit". Now, if you do that and then follow up with "because, as I can demonstrate, facts A and B clearly contradict your claim", then that may be okay. But she didn't do that.

She could have said things like "I don't understand your argument", or "I don't see evidence for claim X", or "I don't believe Y is possible, because ...". Even better would be to ask: "Can you explain to me why you think an AI could become uncontrollable within the next 20 years?", and then answer to the arguments.

I think we'll just have to disagree on this point. I think it's perfectly fine to (politely) call bullshit, if you think something is bullshit, as long as you follow it up with arguments as to why you think that (which she did, even if you think the arguments were weak). I think EA could benefit from more of a willingness to call out emperors with no clothes.

Agreed.

That's where we disagree - strong claims ("Two Turing-award winners talk nonsense when they point out the dangerousness of the technology they developed") require strong evidence.

Hi Karl,

Thanks for your post. You noted that it seems less of the audience was convinced by the end of the debate. I attended that debate, and the page where participants were supposed to vote about which side they were on was not loading. So, I expect far fewer people voted at the end of the debate than at the beginning. The sample of people who did vote at the end, therefore, may very well not be representative. All to say: I wouldn't weight the final vote too heavily!

Thank you for this insight! I agree, as I wrote, that the result is inconclusive. I still think the debate could have had much "better" results in the sense that it could have made people more concerned about AI x-risks. I think that Bengio and Tegmark failed at this at least in part, though I'm not sure whether my explanation for this is correct.

Quick addition: it's also up on their YouTube channel with an (automatic) transcript here

I don't think 'won' or 'lost' is a good frame for these debates. Instead, given that AI safety and existential/catastrophic concerns are now a mainstream issue, debates like these are part of a consensus-making process for both the field and the general public. I think the value of these debates lies in normalising this issue as a legitimate topic for debate in the public sphere. These debates aren't confined to LessWrong or in-the-know Twitter sniping anymore, and I think that's unironically a good thing. (It's also harder to dunk on someone to their face than on their Twitter profile, which is an added bonus.)

For what it's worth, the changes were only a few percentage points, and the voting system was defective, as you mention. Both sides can claim victory (the pro side: "people still agree with us"; the anti side: "we changed more minds in the debate"). I think you're a bit too hasty in extrapolating what the 'AI Safety side' strategy should be. I also think that the debate will live on in the public sphere after the event, and I don't think that the 'no' side really came off as substantially better than the 'yes' side, which should raise people's concerns along the lines of "wait, so if it is a threat, we don't have reliable methods of alignment??"

However, like everyone, I do have my own opinions and biases about what to change for future debates, so I suppose it'd be honest to own up to them:

This isn't valuable to truth-seeking, but it does have an impact on perceptions of legitimacy etc.

I agree.

That may well be true.

Yes, he definitely would have been a good choice.

Thanks for this post, good summary!

I recall reading that in debates like this, the audience usually moves against the majority position.

There's a simple a priori reason one might expect this: if to begin with there are twice as many people who agree with X as disagree with it, then the anti- side has twice as many people available who they can plausibly persuade to switch to their view.

If 10% of both groups change their minds, you'd go from 66.6% agreeing to 63.3% agreeing.
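A minimal sketch of the arithmetic above, assuming (as in the example) that the same fraction `x` of each camp switches sides. The function name is purely illustrative:

```python
def after_symmetric_switch(p_agree: float, x: float) -> float:
    """Share agreeing with X after a fraction x of *each* camp switches sides.

    Hypothetical helper for illustration: the agreeing camp loses p_agree * x
    of the audience to the other side and gains (1 - p_agree) * x back from it.
    """
    if not (0.0 <= p_agree <= 1.0 and 0.0 <= x <= 1.0):
        raise ValueError("p_agree and x must lie in [0, 1]")
    return p_agree - p_agree * x + (1 - p_agree) * x


if __name__ == "__main__":
    # Start from a 2:1 split, as in the example above.
    for x in (0.0, 0.10, 0.25, 0.50):
        share = after_symmetric_switch(2 / 3, x)
        print(f"{x:.0%} of each side switches -> {share:.1%} agree")
```

With `x = 0.10` this reproduces the drop from about 66.7% to 63.3%. The majority shrinks as `x` grows, but it only disappears entirely at `x = 0.5`.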

(Note that even a very fringe view benefits from equal time in a debate format, in a way that isn't the case in how much it gets covered in the broader social conversation.)

Would be neat if anyone could google around and see if this is a real phenomenon.

Interesting! This does sound pretty plausible, and it could explain a good share of the move against the original majority position.

Still, this seems unlikely to fully explain a move to "almost a tie", in case that's what actually happened. If you start with vote shares p and 1−p and half of each group switches to the other side, you end up with 0.5p+0.5(1−p)=0.5 in each group. Half of each group switching seems pretty extreme,[1] and anything less than that (with the same share of each group switching) would preserve the majority position.
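Writing this out for a general switching fraction x makes the threshold explicit. This assumes, as above, that the same share x of each group switches sides. The original majority's new share p′ is:

$$
p' = p(1 - x) + (1 - p)\,x = p(1 - 2x) + x = \tfrac{1}{2} + \left(p - \tfrac{1}{2}\right)(1 - 2x)
$$

For 0 ≤ x < ½, the factor (1 − 2x) is positive, so p′ − ½ has the same sign as p − ½ and the majority survives. At x = ½ the result is exactly a tie. Only for x > ½ would the original majority flip, which is the "inversely correlated" case.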

More than half from each switching sounds crazy: their prior positions would be inversely correlated with their later positions.

Sorry, I thought the difference was more like 4%? 67-33 to 63-37.

If Robert_Wiblin was right about the proposed causal mechanism, which I'm fairly neutral on, then you just need 0.67x − 0.33x = 0.04, i.e. x ≈ 12% (a relative shift from each side), which is very close to Robert's originally proposed numbers.
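The same calculation can be solved for `x`. Here is a small sketch; the helper name is hypothetical, and it assumes the symmetric-switching mechanism proposed upthread together with the 67% → 63% figures quoted in this comment:

```python
def switch_fraction_needed(p_majority: float, drop: float) -> float:
    """Fraction x of each camp that must switch so the majority share falls by `drop`.

    Under symmetric switching the majority loses p*x and gains (1 - p)*x,
    so the net drop is p*x - (1 - p)*x = (2p - 1) * x.
    """
    margin = 2 * p_majority - 1  # e.g. 0.67 - 0.33 = 0.34
    if margin <= 0:
        raise ValueError("p_majority must be above 0.5 for a majority to shrink")
    return drop / margin


x = switch_fraction_needed(0.67, 0.04)
print(f"x = {x:.3f}")  # about 0.118, i.e. roughly 12% of each side
```

Dividing by the margin (2p − 1) rather than hard-coding 0.34 makes the same helper usable for other before/after vote splits.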

Yes, sorry, I don't mean to 'explain away' any large shift (if it occurred); the anti side may just have been more persuasive here.

I have thought something similar (without having read about it before), given the large percentage of people who were willing to change their minds. But I think the exact percentage of the shift, if there was one at all, isn't really important. I think you could say that since there wasn't a major shift towards x-risk, the debate wasn't going very well from an x-risk perspective.

Imagine you're telling people that the building you're in is on fire, the alarm didn't go off because of some technical problem, and they should leave the building immediately. If you then have a discussion and afterwards even just a small fraction of people decides to stay in the building, you have "lost" the debate.

In this case, though I was disappointed, I don't think the outcome is "bad", because it is an opportunity to learn. We're just at the beginning of the "battle" about the public opinion on AI x-risk, so we should use this opportunity to fine-tune our communications. That's why I wrote the post. There's also this excellent piece by Steven Byrnes about the various arguments.

I haven't watched a recording of the debate yet and I intend to, though I feel like I'm familiar enough with arguments on both sides that I may not learn much. This review was informative. It helped me notice that the greatest learning value from the debate may lie in understanding the nature of public dialogue about, and public understanding of, AGI risk.

I agree with all the lessons you've drawn from this debate and laid out at the end of this post. I've got other reactions lengthy enough I may make them into their own top-level posts, though here's some quicker feedback.

I'd distinguish here between how extreme the real positions someone takes are, and how extreme their rhetoric is. For example, even if Yudkowsky were to take more moderate positions, I expect he'd still come across as an extremist based on the rhetoric he often invokes.

While my impression is that Yudkowsky is not as hyperbolic in direct conversations, like on podcasts, his reputation as among the more disrespectful and impolite proponents of AGI risk persists. I expect he'd conduct himself in a debate like this much the way Mitchell conducted herself, except in the opposite direction.

To be fair, there are probably some more bombastic than Yudkowsky. Yet I'd trust neither them nor him to do better in a debate like this.

I'm writing up some other responses in reaction to this post, though I've noticed a frustrating theme across my different takes. There are better and worse arguments against the legitimacy of AGI risk as a concern, and it's maddening that LeCun and Mitchell mostly stuck to making the worse arguments.

Good summary, and the table was especially helpful for presenting the differing views succinctly. I'm looking forward to watching this debate and wasn't aware it was out. It's really a shame that they had a glitch that might have affected how much we can rely on the final scores to represent what really happened with the audience's views.