Humans are social animals, and as such we are influenced by the beliefs of those around us. This simulation explores how beliefs can spread through a population, and how indirect relationships between beliefs can lead to unexpected correlations. The interactive simulation featured here only works in the original post, so I recommend visiting it to explore the ideas fully.

STRANGE BED-FELLOWS

There are some strange ideological bed-fellows in the realm of human beliefs. Social scientists grapple with the strong correlation between Christianity and gun ownership, even though the “Prince of Peace” lived in a world without guns. Similarly, atheism correlates with support for globalisation, pro-regulation leftists tend also to be pro-choice, and the anti-vax movement has found a foothold on both the far left and the far right of politics.

Does this all mean that people are just confused?

The simulation explores the network effects of belief transmission and runs on the principle that humans adopt beliefs that align with their pre-existing beliefs, seeking cognitive coherence over cognitive dissonance.

“A receiver of a belief either accepts the incoming belief or not based on the context of their own belief system (internal coherency)…”

- Rodriguez et al.*

Each belief in this simulation has a valence with every other belief: pairs with a positive valence are complementary ideas, and pairs with a negative valence are dissonant. The simulation doesn’t model bias directly, but apparent bias emerges as a property of the system.
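To make the valence idea concrete, here is a minimal Python sketch. It assumes the valences are stored in a symmetric matrix and that a node’s coherence is simply the sum of the valences between every pair of beliefs it holds. The belief names `A`, `B`, `C` and all the values are hypothetical, not the post’s sample table.

```python
from itertools import combinations

# Hypothetical beliefs and valences, for illustration only.
BELIEFS = ["A", "B", "C"]
MATRIX = [
    [0, 40, 30],    # A is complementary with both B and C
    [40, 0, -80],   # B and C are strongly dissonant
    [30, -80, 0],
]

def valence(a, b):
    """Look up the symmetric valence between two beliefs by name."""
    return MATRIX[BELIEFS.index(a)][BELIEFS.index(b)]

def coherence(held):
    """Sum the valence of every pair of beliefs a node holds."""
    return sum(valence(a, b) for a, b in combinations(sorted(held), 2))

print(coherence({"A", "B"}))       # 40
print(coherence({"A", "B", "C"}))  # 40 + 30 - 80 = -10
```

Under this scoring a node holding A and B is comfortable, while adding C drags its total below zero: exactly the kind of dissonance the simulation has to resolve.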

INSTRUCTIONS

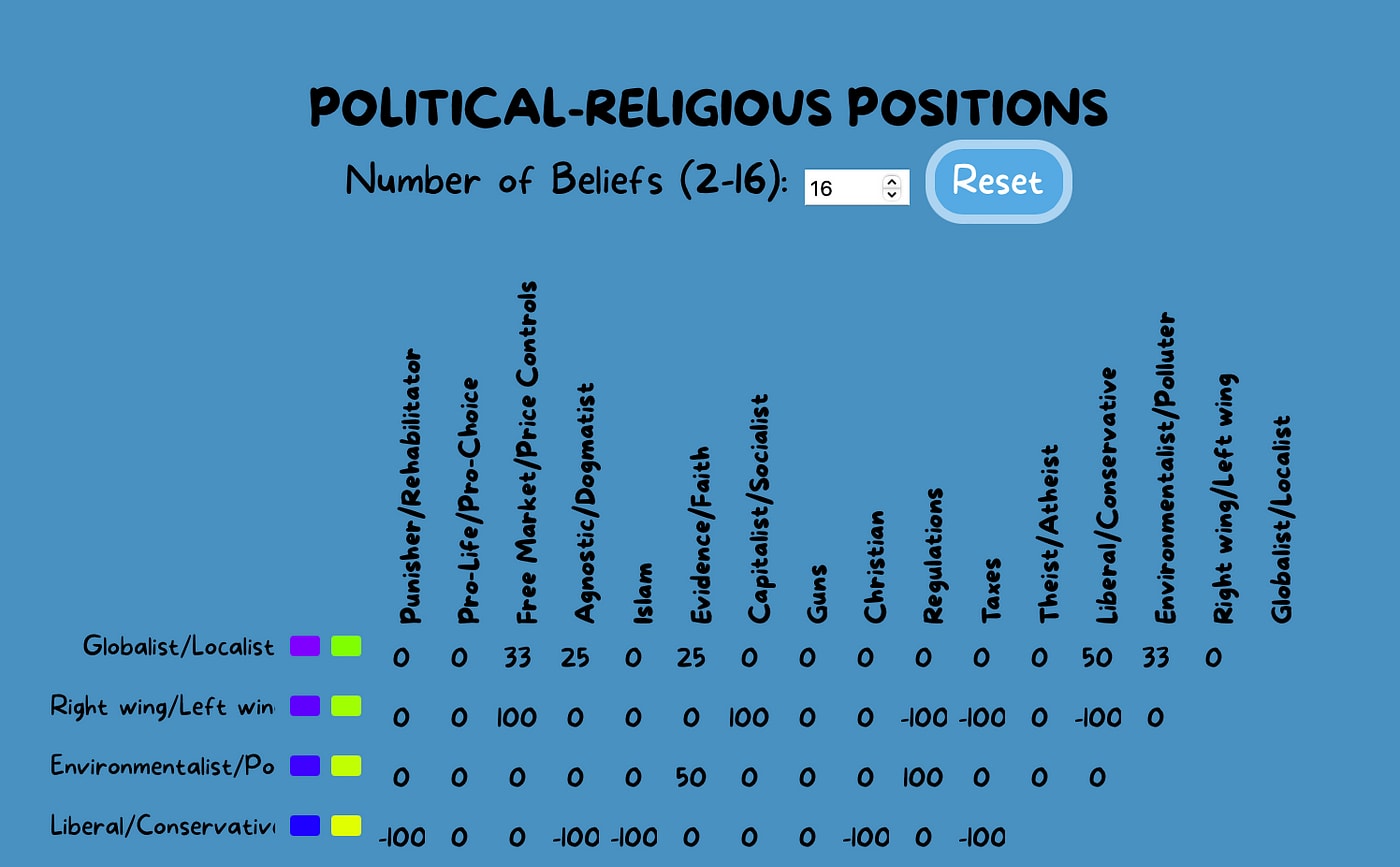

The opening sample simply reflects my own intuitions about the logical relationships some religious and political beliefs have with one another on . I have purposefully left any pair I do not see as directly connected at zero. You can edit these valence values or categories to reflect your own intuitions or the issues important to you.

Thinking in valences takes a bit of getting used to, because each belief here is actually a pair: the belief and its opposite. So if you have a single issue like “taxes”, it will be interpreted as “Pro-Tax”/“Anti-Tax”. When relating it to another factor like “Right-Wing”/“Left-Wing”, you are looking for one value that describes both how aligned “Pro-Tax” and “Right-Wing” are and how aligned “Anti-Tax” and “Left-Wing” are. Since both of those pairings are somewhat at odds, you might say -75 in this case.
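One way to read the “one value covers both sides” convention is to encode each issue as +1 or -1 and multiply. The sketch below assumes that encoding, plus 0 for an issue a node has no stance on; the issue keys `"Tax"` and `"Wing"` are hypothetical labels for the Pro-Tax/Anti-Tax and Right-Wing/Left-Wing pairs.

```python
from itertools import combinations

# Hypothetical issues: "Tax" (+1 = Pro-Tax, -1 = Anti-Tax) and
# "Wing" (+1 = Right-Wing, -1 = Left-Wing). One valence describes the pair.
VALENCE = {frozenset(("Tax", "Wing")): -75}

def pair_score(issue_a, side_a, issue_b, side_b):
    """Valence of holding side_a of issue_a together with side_b of issue_b."""
    return VALENCE.get(frozenset((issue_a, issue_b)), 0) * side_a * side_b

def coherence(stances):
    """Total valence of a node whose stances map issue -> +1, -1 or 0 (no stance)."""
    return sum(pair_score(a, stances[a], b, stances[b])
               for a, b in combinations(sorted(stances), 2))

print(pair_score("Tax", +1, "Wing", +1))  # Pro-Tax with Right-Wing: -75
print(pair_score("Tax", -1, "Wing", -1))  # Anti-Tax with Left-Wing: -75
print(pair_score("Tax", +1, "Wing", -1))  # Pro-Tax with Left-Wing: 75
```

Flipping both sides leaves the product unchanged, which is why a single -75 covers both the Pro-Tax/Right-Wing and Anti-Tax/Left-Wing pairings, while the mixed pairings come out at +75.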

VALENCE MATRIX

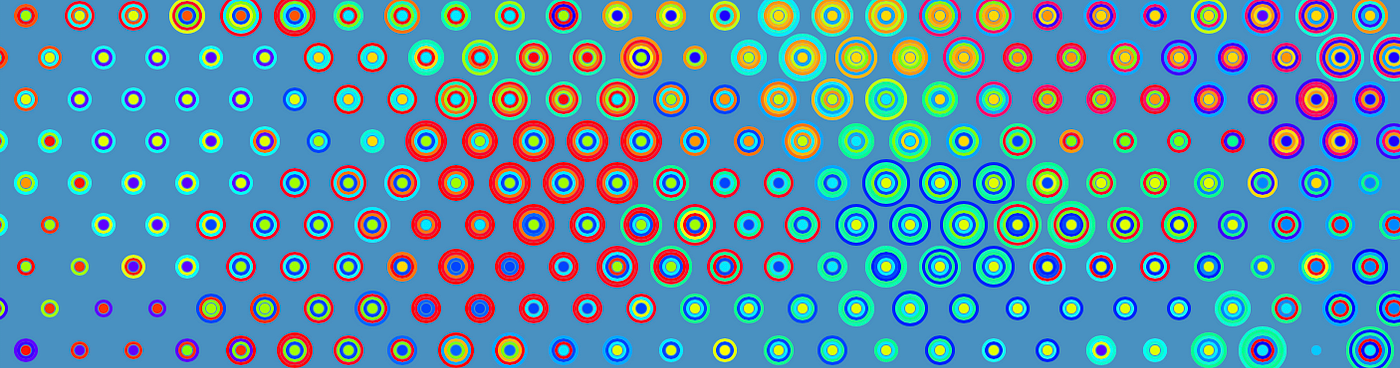

The simulation depicts nodes transmitting ideas (shown as coloured rings). If an incoming idea increases a node’s total valence, it is adopted. If not, the node adopts the most coherent set of beliefs available to it, which might mean rejecting the incoming idea or ejecting a pre-existing belief.
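The adoption rule can be sketched in a few lines of Python. This is a hypothetical reconstruction, not the page’s own code: it assumes coherence is the sum of pairwise valences, stores valences in a dict keyed by unordered pairs, and finds “the most coherent set” by brute force over every subset of the old beliefs plus the new one, which is only practical for a handful of beliefs.

```python
from itertools import combinations

def coherence(beliefs, valence):
    """Sum the valence of every pair in a set of beliefs (missing pairs count as 0)."""
    return sum(valence.get(frozenset(pair), 0)
               for pair in combinations(sorted(beliefs), 2))

def receive(held, incoming, valence):
    """Return a node's beliefs after it is exposed to an incoming belief."""
    held = frozenset(held)
    if incoming in held:
        return held
    with_new = held | {incoming}
    # Rule 1: adopt the incoming belief outright if it raises total valence.
    if coherence(with_new, valence) > coherence(held, valence):
        return with_new
    # Rule 2: otherwise keep the most coherent subset of old + new beliefs.
    # Larger subsets are tried first, so among equally coherent options the one
    # keeping the most beliefs wins; current beliefs stay unless strictly beaten.
    best, best_score = held, coherence(held, valence)
    candidates = sorted(with_new)
    for size in range(len(candidates), -1, -1):
        for subset in combinations(candidates, size):
            score = coherence(subset, valence)
            if score > best_score:
                best, best_score = frozenset(subset), score
    return best

V = {frozenset(("A", "B")): 2, frozenset(("A", "C")): -10, frozenset(("B", "C")): 8}
print(sorted(receive({"A", "B"}, "C", V)))  # ['B', 'C']: C is adopted, A is ejected
```

In the usage example, adding C alone would lower the node’s total from 2 to 0, but dropping A lifts it to 8, so the node swaps A for C rather than simply rejecting the newcomer.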

Each dot is coloured according to its node’s most aligned (strongest) belief.
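The post doesn’t define “strongest” precisely. One plausible reading, sketched below purely as an assumption, is the held belief with the greatest total valence towards the node’s other beliefs. The colour palette and function names are hypothetical.

```python
# Hypothetical colour per belief; the real page's palette is not shown here.
COLOURS = {"A": "red", "B": "blue", "C": "green"}

def strongest_belief(held, valence):
    """Held belief with the largest summed valence to the node's other beliefs."""
    if not held:
        return None

    def support(belief):
        return sum(valence.get(frozenset((belief, other)), 0)
                   for other in held if other != belief)

    # Sorting first makes ties resolve alphabetically rather than by set order.
    return max(sorted(held), key=support)

def dot_colour(held, valence, default="grey"):
    """Colour of a node's dot, or a default colour if it holds no beliefs."""
    return COLOURS.get(strongest_belief(held, valence), default)
```

With this definition a belief that is lukewarm on its own but strongly supported by its neighbours in the node can still set the dot’s colour.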


FINDING (CIRCUITOUSLY CAUSED) CORRELATIONS

Here you can explore the correlations between beliefs that emerge across the population, revealing how many familiar correlations arise even between beliefs that have no valence ascribed to each other directly.
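The post doesn’t say how its correlation list is computed. A standard choice for yes/no data is the phi coefficient (the Pearson correlation of two 0/1 variables), so the sketch below assumes each node is a set of held beliefs and ranks every pair of beliefs by the strength of its phi coefficient. The function names are hypothetical.

```python
from itertools import combinations
from math import sqrt

def phi(population, a, b):
    """Phi coefficient (Pearson correlation of 0/1 indicators) between beliefs a and b.

    population is a list of belief sets, one per node. Returns None when either
    belief is held by every node or by none, since the correlation is undefined.
    """
    n11 = sum(1 for node in population if a in node and b in node)
    n10 = sum(1 for node in population if a in node and b not in node)
    n01 = sum(1 for node in population if a not in node and b in node)
    n00 = len(population) - n11 - n10 - n01
    denom = sqrt((n11 + n10) * (n01 + n00) * (n11 + n01) * (n10 + n00))
    if denom == 0:
        return None
    return (n11 * n00 - n10 * n01) / denom

def correlation_list(population, beliefs):
    """Every pair of beliefs with its phi coefficient, strongest correlations first."""
    rows = [(a, b, phi(population, a, b)) for a, b in combinations(sorted(beliefs), 2)]
    rows = [row for row in rows if row[2] is not None]
    return sorted(rows, key=lambda row: abs(row[2]), reverse=True)
```

Because this measure only looks at who holds what, it will pick up correlations between beliefs whose mutual valence is zero, for example when both are complementary with some third belief.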

Depending on how many beliefs or factors you’re using, this can make for a fairly long list. Below it is the comments section, where I hope you’ll post notes on your own explorations.

SO…

I’ve kept this post as simple as possible, but I intend to refine the model and write a much more detailed analysis of the methodology, informed by your feedback. Please drop a comment with anything interesting you discover.

RELATED MATERIAL

- * Collective Dynamics of Belief Evolution under Cognitive Coherence and Social Conformity, by Rodriguez et al., is a fascinating, evidence-based look at the way ideas are transmitted, with accessible diagrams and explanations.

- For the uber-nerds who want to send in their own tables: whenever you run the simulation, the current table is logged in CSV format to the console of your web inspector. Copy and paste it to me, and I’ll check it out and integrate it into the page.

- If you’re interested in the spread of ideas, check out our post on Genes, Memes and Susan Blackmore’s concept of Temes, or our post on originality, Taking Credit.
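For anyone preparing a table to send in, the exact layout of the console CSV isn’t documented here, so the parser below assumes a square table: a header row of belief names after one blank corner cell, then one row per belief starting with its name. It is a hypothetical helper for sanity-checking a table, for instance that it is symmetric, before pasting it over.

```python
import csv
import io

def parse_valence_csv(text):
    """Parse an assumed square CSV valence table into (names, {(a, b): value})."""
    rows = list(csv.reader(io.StringIO(text.strip())))
    names = [name.strip() for name in rows[0][1:]]
    table = {}
    for row in rows[1:]:
        a = row[0].strip()
        for b, cell in zip(names, row[1:]):
            table[(a, b)] = float(cell)
    # A valence describes a pair, so (a, b) and (b, a) should always agree.
    mismatched = sorted((a, b) for (a, b), v in table.items() if table.get((b, a)) != v)
    if mismatched:
        raise ValueError(f"asymmetric or missing entries: {mismatched}")
    return names, table

sample = ",Tax,Wing\nTax,0,-75\nWing,-75,0"
names, table = parse_valence_csv(sample)
print(names, table[("Tax", "Wing")])  # ['Tax', 'Wing'] -75.0
```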

Thank you very much! I think you will find this interesting:

https://forum.effectivealtruism.org/posts/aCEuvHrqzmBroNQPT/the-evolution-towards-the-blank-slate

The second epigraph is where it gets interesting for you:

This article surveys the evolutionary and game theoretical literature and suggests a new synthesis in the nature-nurture controversy. Gintian strong reciprocity is proposed as the main synthetic theory for evolutionary anthropology, and the thesis here defended is that the humanization process has been mainly one of “de-instinctivation”, that is, the substitution of hardwired behavior by the capabilities to handle cultural objects.

Thanks, Arturo, and yes, I found it very interesting. The passage you’ve pulled out is particularly interesting in terms of AGI, which seems to be following the same notion of "de-instinctivation": replacing narrow hardwiring with the ability to adapt to general situations.

I also found the critique of utilitarian impartiality interesting, and it helped clarify my thinking about the simulation. This model is actually founded on cognitive bias, treating it as a feature rather than a bug. Incoming beliefs are only ever adopted when they align with pre-existing beliefs, yet the model is still impartial in that, given a conflicting belief, an agent will always choose the strongest coalition of beliefs, freely letting go of beliefs with negative marginal value. This is a very reasonable way to adopt beliefs (we can only ever make decisions based on our prior knowledge), and yet it naturally produces the kind of bias we observe in humans.
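The idea of letting beliefs with negative marginal value go can be sketched as a greedy loop. This is a swapped-in simplification, not necessarily how the simulation itself works: it drops the most dissonant belief one at a time, which is cheaper than checking every possible coalition but is not guaranteed to find the strongest one. Valences are assumed to live in a dict keyed by unordered pairs, and the function names are hypothetical.

```python
def marginal_value(belief, held, valence):
    """How much a belief adds to a node's coherence, given its other held beliefs."""
    return sum(valence.get(frozenset((belief, other)), 0)
               for other in held if other != belief)

def shed_negative_beliefs(held, valence):
    """Greedily drop the belief with the most negative marginal value until none remain.

    Each removal raises total coherence by the size of that negative marginal value,
    so the loop always terminates, though it may stop short of the global optimum.
    """
    held = set(held)
    while held:
        worst = min(sorted(held), key=lambda b: marginal_value(b, held, valence))
        if marginal_value(worst, held, valence) >= 0:
            break
        held.remove(worst)
    return held
```

Every belief that survives the loop contributes non-negatively to the node’s coherence, which is the “coalition” reading of the rule described above.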

We are designed for social computation, not for individual rationality. Beyond the papers I discuss in the pre-print, this book offers a modern synthesis of Cultural Evolution Theory:

Cultural Evolution: How Darwinian Theory Can Explain Human Culture and Synthesize the Social Sciences

https://www.amazon.es/Cultural-Evolution-Darwinian-Synthesize-Sciences/dp/0226520447

Thank you for your reference to Gonzalez's paper.