Edit: No longer as excited. Per this comment:

I also think it is astronomically unlikely that a world splitting exercise like this would make the difference between 'at least one branch survives' and 'no branches survive'. The reason is just that there are so, so many branches

and per this comment:

An ingenious friend just pointed out a likely much larger point of influence of quantum particle-level noise on humanity: the randomness in DNA recombination during meiosis (gamete formation) is effectively driven by a single molecular machine, and the individual crossovers etc. likely depend strongly on Brownian-level noise. This would mean that some substantial part of people would have slightly different genetic makeup, from which I would expect substantial timeline divergence over 100s of years at most (measuring differences on the level of human society).

Explanation of the idea

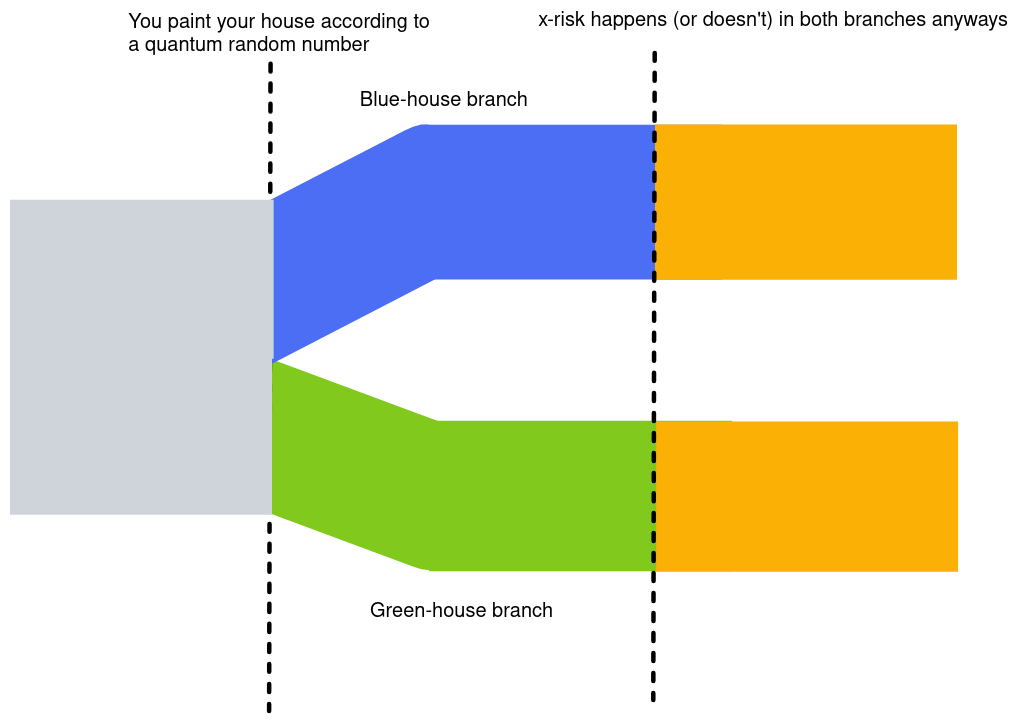

Under my understanding of the many-worlds interpretation of quantum mechanics, we have a probability measure, and every time there is a quantum event, the measure splits into mutually unreachable branches.

This in itself doesn't buy you much. If you choose which color to paint your house based on quantum randomness, you still split the timeline. But this doesn't affect the probability in any of the branches that an AI, asteroid or pandemic comes and kills you and everything you care about.

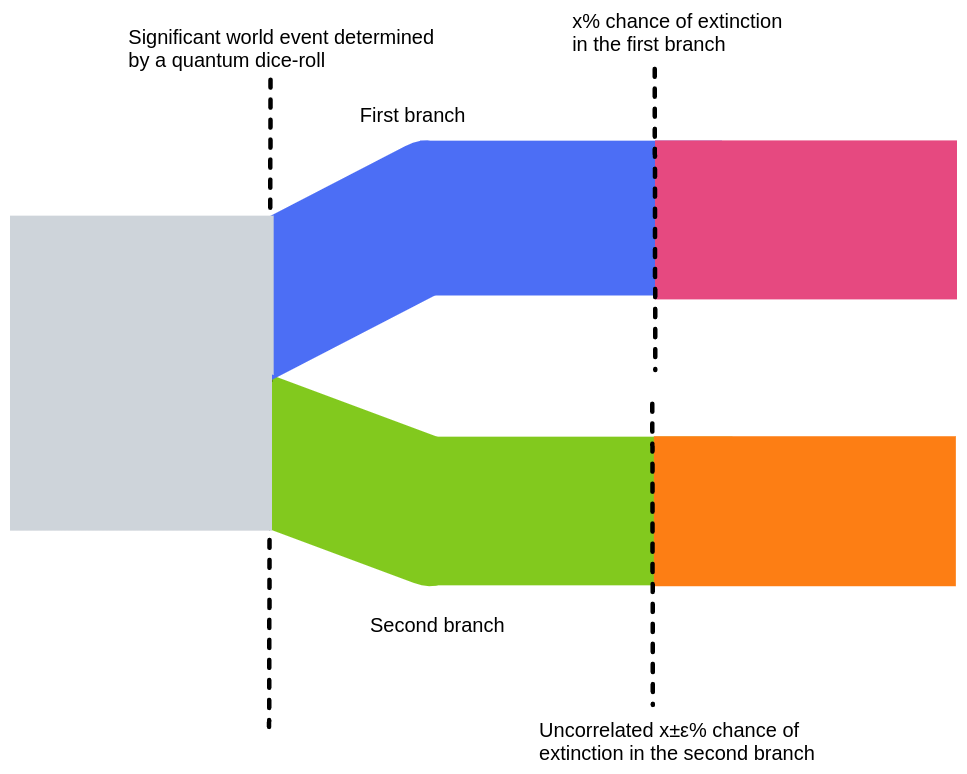

But we could also split the timeline such that the chances of extinction risk happening are less than perfectly correlated in each of the branches.

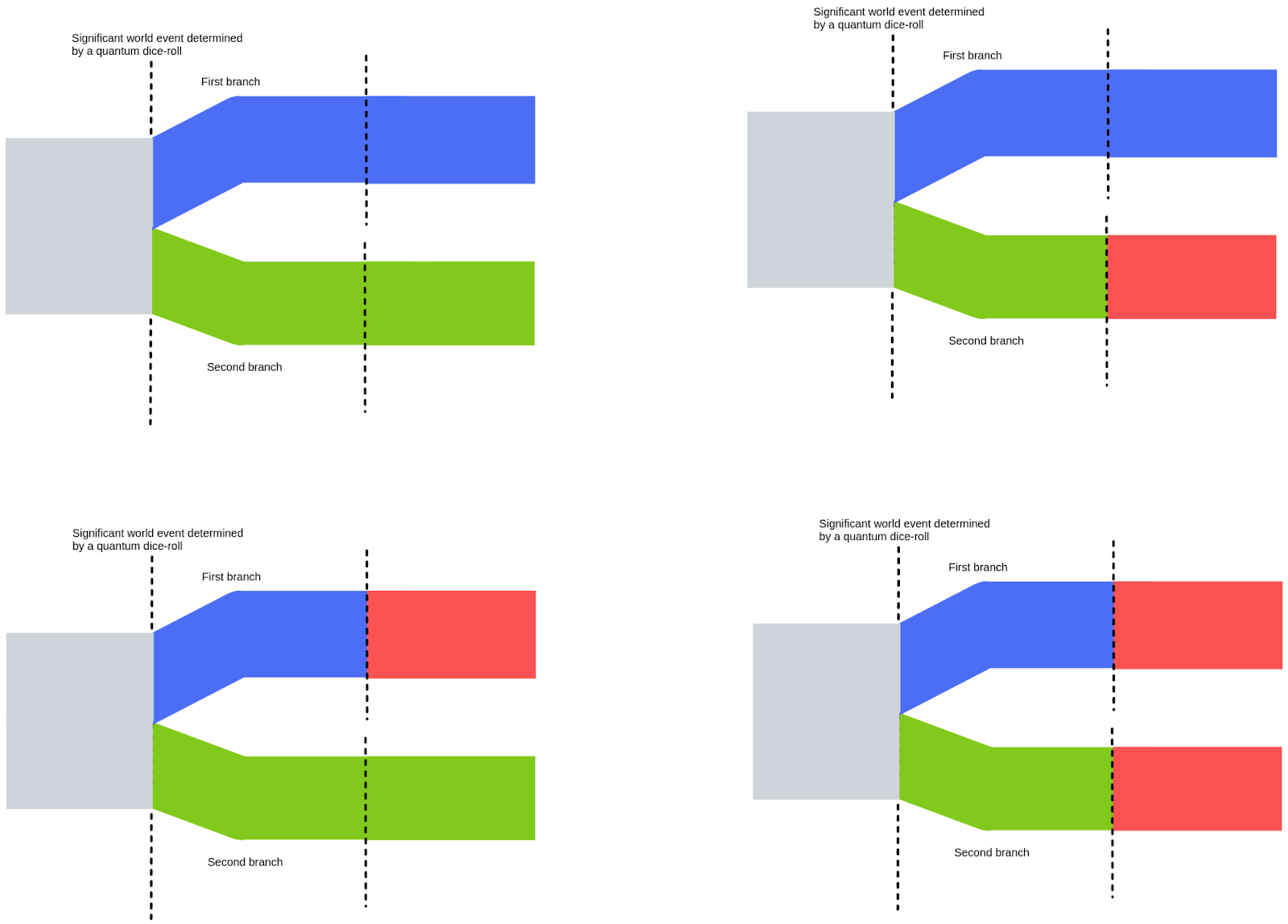

This second option doesn't buy you a greater overall measure. Or, in other words, if you're inside a branch, it doesn't increase your probability of survival. But it does increase the probability that at least one branch will survive (For instance, if the probability of x-risk is 50%, it increases it from 50% to 75%).
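The 50% to 75% figure can be checked with a few lines of arithmetic. The sketch below assumes branches of equal measure whose extinction outcomes are fully independent, which is the idealized best case; the function names are illustrative and not taken from any library.

```python
def p_some_branch_survives(p_extinction: float, n_branches: int) -> float:
    """Probability that at least one of n branches survives, assuming
    extinction is independent across branches (an idealization)."""
    return 1.0 - p_extinction ** n_branches


def surviving_measure(p_extinction: float) -> float:
    """Expected fraction of measure that survives. It does not depend on
    how many times the timeline is split."""
    return 1.0 - p_extinction


for n in (1, 2, 10):
    print(n, p_some_branch_survives(0.5, n), surviving_measure(0.5))
```

With one branch the result is 0.5, with two it is 0.75, and the surviving measure stays at 0.5 throughout, which is the distinction the paragraph above draws.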

Implementation details

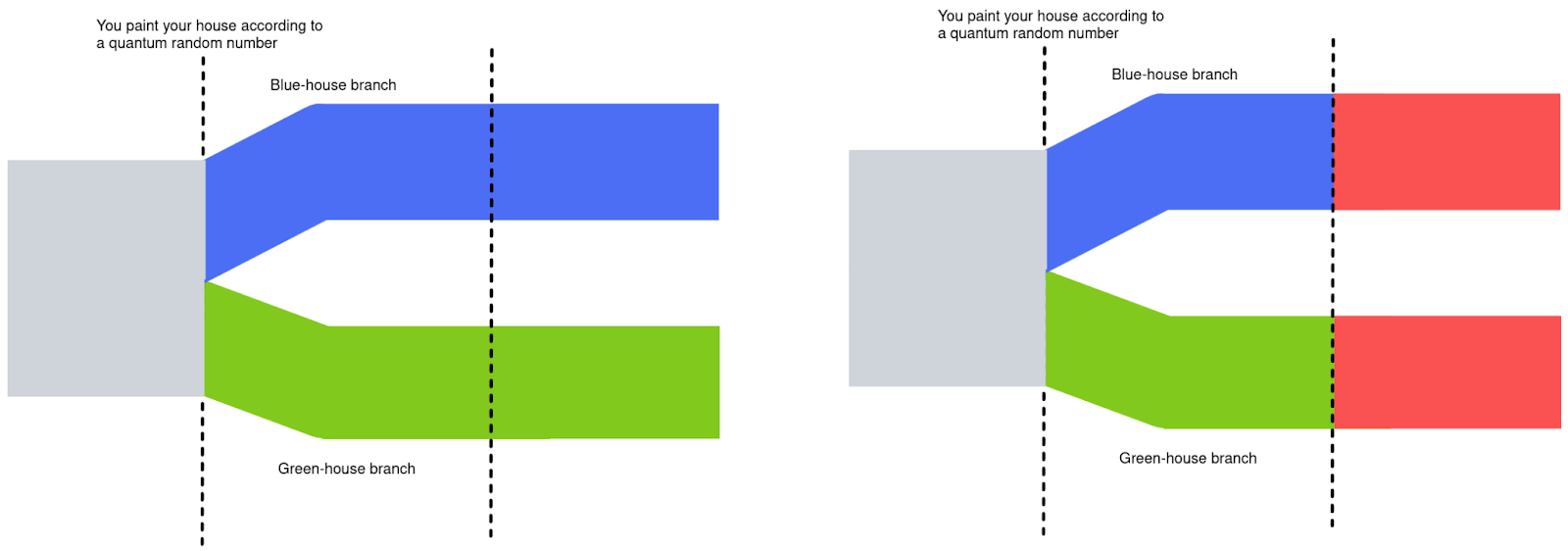

I don't really buy the "butterfly effect" as it corresponds to extinction risk. That is, I think that to meaningfully affect chances of survival, you can't just quantum-randomize small-scale events. You have to randomize events that are significant enough that the chances of survival within each of the split timelines become somewhat uncorrelated. So the butterfly effect would have to be helped along.

Or, in other words, it doesn't much matter if you quantum-randomize the color of all houses, you have to randomize something that makes extinction-risk independent.

Further, note that the two branches don't actually have to be independent. You can still get partial protection through partial uncorrelation.
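To make "partial protection through partial uncorrelation" concrete, here is a minimal sketch for two branches. It assumes each branch goes extinct with the same probability and that the two extinction indicators have a correlation `rho` between 0 (independent) and 1 (perfectly correlated); both the parametrization and the function name are my assumptions.

```python
def p_some_branch_survives_correlated(p_extinction: float, rho: float) -> float:
    """P(at least one of two branches survives) when the two extinction
    indicators each have probability p_extinction and correlation rho."""
    if not 0.0 <= rho <= 1.0:
        raise ValueError("this sketch only covers 0 <= rho <= 1")
    q = p_extinction
    # For two identically distributed Bernoulli variables with correlation rho:
    # P(both extinct) = q^2 + rho * q * (1 - q)
    p_both_extinct = q * q + rho * q * (1.0 - q)
    return 1.0 - p_both_extinct


for rho in (1.0, 0.5, 0.0):
    print(rho, p_some_branch_survives_correlated(0.5, rho))
```

At a 50% extinction probability this gives 0.5 for perfectly correlated branches, 0.625 at a correlation of 0.5, and 0.75 for independent branches, so the benefit shrinks smoothly as the branches become more correlated.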

In practice, I'm thinking of splitting our timeline many, many times, rather than only once.

General randomness vs targeted randomness

General randomness

Still, one way in which one could get enough independence would in fact be to indiscriminately add quantum randomness, and hope that some of it sticks and buys you some uncorrelation.

For instance, instead of asking his Twitter followers, Elon Musk could have quantum-randomized whether or not to sell $10 billion of stock. This in itself doesn't seem like it would affect extinction risk. But accumulating enough changes like that could add up.

Another way of creating a lot of indiscriminate quantum randomness might be by having random.org output random numbers based on quantum effects, in a similar manner to how this site does, presumably thus affecting the actions of a lot of people.

One could also build the infrastructure for, e.g., cryptocurrency contracts to access quantum randomness, and hope and expect that many other people build upon them (e.g., to build quantum lotteries that guarantee you'll be the winner in at least some branch).

These latter interventions seem like they could plausibly cost <10 million of seed investment, and produce significant amounts of quantum splitting. Cryptocurrencies or projects in some hyped-up blockchain seem like they could be great at this, because the funders could presumably recoup their investment if newly rich crypto-investors or users find the idea interesting enough.

General, indiscriminate randomness also seems like it would protect against (anthropogenic) risks we don't know about.

Targeted randomness

Instead of indiscriminate splitting, one could also target randomness to explicitly maximize the independence of the branches. For instance, if at some point some catastrophe looms large on the horizon and there are mutually exclusive paths which seem like they are roughly equal in probability, we could quantum-randomize which path to take.

An example intervention of the sort I'm thinking about might involve, for example, OpenPhil deciding whether to invest or not in OpenAI, or doubling down or not on their initial investment, depending on the result of a quantum roll of the dice. Large donor lotteries also seem like they could be a promising target for quantum randomization. One issue is that we don't really know how significant an event has to be to meaningfully change/make independent some probabilities of survival. I would lean on the large side. Something like, e.g., shutting down OpenAI seems like it could be a significant enough change in the AI landscape that it could buy some uncorrelation, but it doesn’t seem that achievable.

Targeted randomness might provide less protection against risks we don't know much about, but I'm not sure.

Downsides

One big cost could be just the inconvenience of doing this. There are a lot of cases where we know what the best option is, so adding randomness would on average hurt things. It would be good to have a better estimate of benefit (or broad ways of calculating benefit), so that we can see what we’re trading off.

This proposal also relies on the many-worlds interpretation of quantum mechanics being correct, and this could be wrong or confused. If this was the case, this proposal would be totally useless. Nonetheless, the many-worlds interpretation just seems correct, and I'm ok with EA losing a bunch of money on speculative projects, particularly if the downside seems bounded.

I haven't really thought all that much about how this would interact with existential risks other than extinction.

Acknowledgments

This article is a project by the Quantified Uncertainty Research Institute.

Thanks to Gavin Leech and Ozzie Gooen for comments and suggestions. The idea also seems pretty natural. Commenters pointed out that Jaan Tallinn and/or Jan Kulveit came up with a similar idea. In particular, I do know that the acceptance of some students to ESPR in ¿2019? was quantum-randomized.

Thanks for writing this — in general I am pro thinking more about what MWI could entail!

But I think it's worth being clear about what this kind of intervention would achieve. Importantly (as I'm sure you're aware), no amount of world slicing is going to increase the expected value of the future (roughly all the branches from here), or decrease the overall (subjective) chance of existential catastrophe.

But it could increase the chance of something like "at least [some small fraction]% of 'branches' survive catastrophe", or at the extreme "at least one 'branch' survives catastrophe". If you have some special reason to care about this, then this could be good.

For instance, suppose you thought whether or not to accelerate AI capabilities research in the US is likely to have a very large impact on the chance of existential catastrophe, but you're unsure about the sign. To use some ridiculous play numbers: maybe you're split 50-50 between thinking investing in AI raises p(catastrophe) to 98% and 0 otherwise, or investing in AI lowers p(catastrophe) to 0 and 98% otherwise. If you flip a 'classical' coin, the expected chance of catastrophe is 49%, but you can't be sure we'll end up in a world where we survive. If you flip a 'quantum' coin and split into two 'branches' with equal measure, you can be sure that one world will survive (and another will encounter catastrophe with 98% likelihood). So you've increased the chance that 'at least 40% of the future worlds will survive' from 50% to 100%.[1]
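The play numbers above can be reproduced with a short sketch. It assumes the 50-50 credence between the two hypotheses, a fair coin, and that a branch's surviving share can be read off as one minus its catastrophe probability; the dictionary keys and function names are hypothetical labels introduced only for illustration.

```python
from itertools import product

# p(catastrophe) for each hypothesis and action, using the play numbers above.
P_CATASTROPHE = {
    "investing_is_bad": {"invest": 0.98, "abstain": 0.0},
    "investing_is_good": {"invest": 0.0, "abstain": 0.98},
}
CREDENCE = {"investing_is_bad": 0.5, "investing_is_good": 0.5}
ACTIONS = ("invest", "abstain")
THRESHOLD = 0.40  # "at least 40% of the future worlds will survive"


def expected_catastrophe() -> float:
    """Expected chance of catastrophe; the same for either kind of coin."""
    return sum(CREDENCE[h] * 0.5 * P_CATASTROPHE[h][a]
               for h, a in product(CREDENCE, ACTIONS))


def classical_chance_enough_survive() -> float:
    """A classical coin picks one action, so all measure follows that action."""
    return sum(CREDENCE[h] * 0.5
               for h, a in product(CREDENCE, ACTIONS)
               if 1.0 - P_CATASTROPHE[h][a] >= THRESHOLD)


def quantum_chance_enough_survive() -> float:
    """A quantum coin sends half of the measure down each action."""
    total = 0.0
    for h in CREDENCE:
        surviving = sum(0.5 * (1.0 - P_CATASTROPHE[h][a]) for a in ACTIONS)
        if surviving >= THRESHOLD:
            total += CREDENCE[h]
    return total


print(expected_catastrophe())             # about 0.49
print(classical_chance_enough_survive())  # 0.5
print(quantum_chance_enough_survive())    # 1.0
```

The expected chance of catastrophe is the same in both cases; only the chance of clearing the 40% threshold moves.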

In general you're moving from more overall uncertainty about whether things will turn out good or bad, to more certainty that things will turn out in some mixture of good and bad.

Maybe that sounds good, if for instance you think the mere fact that something exists is good in itself (you might have in mind that if someone perfectly duplicated the Mona Lisa, the duplicate would be worth less than the original, and that the analogy carries).[2]

But I also think it is astronomically unlikely that a world splitting exercise like this would make the difference[3] between 'at least one branch survives' and 'no branches survive'. The reason is just that there are so, so many branches, such that —

It's like choosing between [putting $50 on black and $50 on red] at a roulette table, and [putting $100 on red].

But also note that by splitting worlds you're also increasing the chance that 'at least 40% of the future worlds will encounter catastrophe' from 48% to 99%. And maybe there's a symmetry, where if you think there's something intrinsically good about the fact that a good thing occurs at all, then you should think there's something intrinsically bad about the fact that a bad thing occurs at all, and I count existential catastrophe as bad!

Note this is not the same as claiming it's highly unlikely that this intervention will increase the chance of surviving in at least one world.

Because you are making at most a factor-of-two difference by 'splitting' the world once.

Yes, I agree

This analogy isn't perfect. I'd prefer the analogy that, in a trolley problem in which the hostages were your family, one may care some small amount about ensuring at least one family-member survives (in opposition/contrast to maximizing the number of family members which survive)

Yeah, when thinking more about this, this does seem like the strongest objection, and here is where I'd like an actual physicist to chip in. If I had to defend why that is wrong, I'd say something like:

Seems to me that pretty much whenever anyone would actually be considering 'splitting the timeline' on some big uncertain question, then even if they didn't decide to split the timeline, there are still going to be fairly non-weird worlds in which they make both decisions?

But this requires a quantum event (or events) to influence the decision, which seems more and more unlikely the closer you are to the decision. Though per this comment, you could also imagine that different people were born and would probably make different decisions.

I think I like the analogy below of preventing extinction being (in a world which goes extinct) somewhat akin to avoiding the industrial revolution/discovery of steam engines, or other efficient sources of energy. If you are only allowed to do it with quantum effects, your world becomes very weird.

What makes you think that? So long as value can change with the distribution of events across branches (as perhaps with the Mona Lisa) the expected value of the future could easily change.

I think this somehow assumes two types of randomness: quantum randomness and some other (non-quantum) randomness, which I think is an inconsistent assumption.

In particular, in a world with quantum-physics, all events either depend on "quantum" random effects or are (sufficiently) determined*. If e.g. extinction is uncertain, then there will be further splits, some branches avoiding extinction. In the other (although only hypothetical) case of it being determined in some branch, the probability is either 0 or 1, voiding the argument.

*) In a world with quantum physics, nothing is perfectly determined due to quantum fluctuations, but here I mean something practically determined (and also allowing for hypothetical world models with some deterministic parts/aspects).

I think it is confusing to think about quantum randomness as being only generated in special circumstances - to the contrary, it is extremely common. (At the same time, it is not common to be certain that a concrete "random" bit you somehow obtain is quantum random and fully independent of others, or possibly correlated!)

To illustrate what I mean by all probability being quantum randomness: While some coin flips are thrown in a way that almost surely determines the result (even air molecule fluctuations are very unlikely to change the result - both in quantum and classical sense) - some coin flips are thrown in such a way that the outcome is uncertain to the extent to be mostly dependent on the air particles bumping into the coin (imagine a really long throw); let's look at those.

The movements of individual particles are to a large extent quantum random (on the particle level, also - the exact micro-state of individual air molecules is to a large extent quantum-random), and moreover influenced by quantum fluctuations. As the coin flies through the air, the quantum randomness influence "accumulates" on the coin, and the flip can be almost-quantum-random without any single clearly identifiable "split" event. There are split events all along the coin movement, with various probabilities and small influences on the outcome - each contributing very small amounts of entropy to the target distribution. (In a simplified "coin" model, each of the quantum-random subatomic-level events would directly contribute a small amount of entropy (in bits) to the outcome.)

With this, it is not clear what you mean by "one branch survives", as in a fully quantum world, there are always some branches that survive; the probability may just be low. My point is that there (fundamentally) is no consistent way to say what constitutes the branches we should care about.

If you instead care about the total probability measure of the branches with an extinction event, that should be the same as old-fashioned probability of extinction - my (limited) understanding of quantum physics interpretations is that by just changing the interpretation, you won't get different expected results in a randomly chosen timeline. (I would appreciate being corrected here if my general understanding is lacking or missing some assumption.)

Re 1.: Yeah, if you consider "determined but unknown" in place of the "non-quantum randomness", this is indeed different. Let me sketch a (toy) example scenario for that:

We have fixed two million-bit numbers A and B (not assuming quantum random, just fixed arbitrary; e.g. some prefix of pi and e would do). Let P2(x) mean "x is a product of exactly 2 primes". We commit to flipping a quantum coin and on heads, we destroy humanity iff P2(A), on tails, we destroy humanity iff P2(B). At the time of coin-flip, we don't know P2(A) or P2(B) (and assume they are not obvious) and can only find out (much) later.

Is that a good model of what you would call "deterministic but uncertain/unknown"?

In this model (and a world with no other branchings - which I want to note is hard to conceive as realistic), I would lean towards agreeing that "doing the above" has higher chance of some branch surviving than e.g. just doing "we destroy humanity iff P2(A)".
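The toy scenario above can be sketched in code, with small integers standing in for the million-bit A and B (for which P2 would not be practically computable this way). I am assuming "a product of exactly 2 primes" counts prime factors with multiplicity, so that 4 = 2 × 2 qualifies; the helper names are hypothetical.

```python
def count_prime_factors(n: int) -> int:
    """Count the prime factors of n with multiplicity, by trial division."""
    count = 0
    d = 2
    while d * d <= n:
        while n % d == 0:
            n //= d
            count += 1
        d += 1
    if n > 1:
        count += 1  # whatever remains is itself a prime factor
    return count


def p2(n: int) -> bool:
    """P2(n): n is a product of exactly two primes (assumed: with multiplicity)."""
    return count_prime_factors(n) == 2


def some_branch_survives(a: int, b: int) -> bool:
    """Quantum coin: heads destroys humanity iff P2(a), tails iff P2(b)."""
    return not (p2(a) and p2(b))


def survives_without_split(a: int) -> bool:
    """No coin: destroy humanity iff P2(a)."""
    return not p2(a)


print(survives_without_split(15))    # 15 = 3 * 5, so humanity is destroyed
print(some_branch_survives(15, 12))  # 12 = 2 * 2 * 3, so the tails branch survives
```

Each outcome is fully determined, yet splitting on the coin can only help the "some branch survives" criterion: it fails only when both P2(A) and P2(B) hold.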

(FWIW, I would assume the real world to be highly underdetermined in outcome just through quantum randomness on sufficient time-scales, even including some intuitions about attractor trajectories; see just below.)

Re 2.: The rate of accumulation of (ubiquitous, micro-state level) quantum effects on the macro-state of the world is indeed uncertain. While I would expect the cumulative effects on the weather to be small (over a year, say?), the effects on humanity may already be large: all our biochemistry (e.g. protein interactions, protein expression, ...) is propelled by Brownian motion of the molecules, where the quantum effects are very strong, and on the cellular scale, I would expect the quantum effects to contribute a substantial amount of noise e.g. in the delays and sometimes outcomes. Since this also applies to the neurons in the brain (delays, sometimes spike flipped to non-spike), I would assume the noise would have effects observable by other humans (who observed the counterfactual timeline) within weeks (very weak sense of the proper range, but I would expect it to be e.g. less than years).

This would already mean that some crucial human decisions and interactions would change over the course of years (again, scale uncertain but I would expect less rather than more).

(Note that the above "quantum randomness effect" on the brain has nothing to do with questions whether any function of the brain depends on quantum effects - it merely talks about nature of certain noise in the process.)

An ingenious friend just pointed out a likely much larger point of influence of quantum particle-level noise on humanity: the randomness in DNA recombination during meiosis (gamete formation) is effectively driven by a single molecular machine, and the individual crossovers etc. likely depend strongly on Brownian-level noise. This would mean that some substantial part of people would have slightly different genetic makeup, from which I would expect substantial timeline divergence over 100s of years at most (measuring differences on the level of human society).

My impression is that you're arguing that quantum randomness creates very large differences between branches. However, couldn't it still be the case that even more differences would be preferable? I'm not sure how much that first argument would impact the expected value of trying to create even more divergences.

Yeah, this makes sense, thanks.

It seems to me like quantum randomness can be a source of creating legitimate divergence of outcomes. Let's call this "bifurcation". I could imagine some utility functions for which increasing the bifurcation of outcomes is beneficial. I have a harder time imagining situations where it's negative.

I'd expect that interventions that cause more quantum bifurcation generally have other costs. Like, if I add some randomness to a decision, the decision quality is likely to decrease a bit on average.

So there's a question of the trade-offs of a decrease in the mean outcome, vs. an increase in the amount of bifurcation.

There's a separate question on if EAs should actually desire bifurcation or not. I'm really unsure here, and would like to see more thinking on this aspect of it.

Separately, I'd note that I'm not sure how important it is if there's already a ton of decisive quantum randomness happening. Even if there's a lot of it, we might want even more.

One quick thought; often, when things are very grim, you're pretty okay taking chances.

Imagine we need 500 units of AI progress in order to save the world. In expectation, we'd expect 100. Increasing our amount to 200 doesn't help us, all that matters is if we can get over 500. In this case, we might want a lot of bifurcation. We'd much prefer a 1% chance of 501 units, than a 100% chance of 409 units, for example.

In this case, lots of randomness/bifurcation will increase total expected value (which is correlated with our chances of getting over 500 units, more so than it is correlated with the expected units of progress).

I imagine this mainly works with discontinuities, like the function described above (Utility = 0 for units 0 to 499, and Utility = 1 for units of 500+)
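A small sketch of that step utility function, using the numbers from the example. The second half goes beyond the comment: it assumes, purely for illustration, that progress is normally distributed around the expected 100 units, and asks how the chance of clearing 500 changes with the spread.

```python
from statistics import NormalDist

THRESHOLD = 500  # units of progress needed


def utility(units: float) -> float:
    """Step utility: 0 below the threshold, 1 at or above it."""
    return 1.0 if units >= THRESHOLD else 0.0


def expected_utility(lottery) -> float:
    """lottery is a list of (probability, units) pairs."""
    return sum(p * utility(u) for p, u in lottery)


sure_thing = [(1.0, 409)]             # a 100% chance of 409 units
long_shot = [(0.01, 501), (0.99, 0)]  # a 1% chance of 501 units

print(expected_utility(sure_thing))  # 0.0
print(expected_utility(long_shot))   # 0.01


def p_over_threshold(mean: float, spread: float) -> float:
    """Chance of reaching the threshold if progress were normally
    distributed (an assumption made only for this illustration)."""
    return 1.0 - NormalDist(mu=mean, sigma=spread).cdf(THRESHOLD)


for spread in (50, 200, 400):
    print(spread, p_over_threshold(100, spread))
```

Under the step utility the long shot beats the sure thing despite its far lower expected number of units, and with the mean held at 100 a wider spread strictly raises the chance of clearing 500.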

My intuitions don't tell me to care about the probability that at least one branch survives.

This seems like a quite strong empirical claim. It may be the case that tiny physical differences eventually have large counterfactual effects on the world. For example, if you went back in time 1000 years and painted someone's house a different color, my probability distribution for the weather here and now would look like the historical average for weather here, rather than the weather in the original timeline. Certainly we don't have reason to believe in a snowball effect, where if you make a small change, the future tends to look significantly different in (something like) the direction of that change -- but I think we do have reason to believe in a butterfly effect, in which small changes have unpredictable, eventually-large effects. The alternative is a strong empirical claim that the universe is stable (not sure how to make this precise), I think.

I think the crux here may not be the butterfly effect, but the overall accumulated effect of (quantum) randomness: I would expect that if you went 1000 years back and just "re-ran" the world from the same quantum state (no house painting etc.), the world would be different (at least on the human-perceivable level; not sure about weather) just because so many events are somewhat influenced by (micro-state) quantum randomness.

The question is only the extent of the quantum effects on the macro-state (even without explicit quantum coin flips) but I expect this to be quite large e.g. in human biology and in particular brain - see my other comment (Re 2.) (NB: independent from any claims about brain function relying on quantum effects etc.).

(Note that I would expect the difference after 1000 years to be substantially larger if you consider the entire world-state to be a quantum state with some superpositions, where e.g. the micro-states of air molecules are mostly unobserved and in various superpositions etc., therefore increasing the randomness effect substantially ... but that is merely an additional intuition.)

Good point, and that's a crux between two not-unreasonable positions, but my intuition is that even if the universe was deterministic, if you (counterfactually) change house color, the day-to-day weather 1000 years later has essentially no correlation between the two universes.

You could make this precise by thinking of attractor states. E.g., if I'd scored less well in any one exam as a kid, or if some polite chit-chat had gone slightly differently, I think the difference gets rounded down to 0, because that doesn't end up affecting any decisions.

I'm thinking of some kinds of extinction risk as attractor states that we have to exert some pressure (and thus some large initial random choice) to avoid. E.g., unaligned AGI seems like one of those states.

This would surprise me, but I could just have wrong intuitions here. But even assuming that is the case, small initial changes would have to snowball fast and far enough to eventually avert an x-risk.

Hmm. At the very least, if you have some idealized particles bouncing around in a box, minutely changing the direction of one causes, as time goes to infinity, the large counterfactual effect of fully randomizing the state of the box (or if you prefer, something like redrawing the state from the random variable representing possible states of the box).
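As a rough illustration of this kind of sensitivity, here is a sketch using the logistic map, a standard textbook chaotic system. It is a stand-in and not a simulation of particles in a box, and it says nothing about whether the real world behaves this way or is dominated by attractor states.

```python
def logistic_trajectory(x0: float, steps: int, r: float = 4.0) -> list:
    """Iterate x -> r * x * (1 - x), which is chaotic for r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting points that differ by one part in a trillion.
a = logistic_trajectory(0.2, 100)
b = logistic_trajectory(0.2 + 1e-12, 100)
gaps = [abs(x - y) for x, y in zip(a, b)]

print(f"gap after 10 steps: {gaps[10]:.2e}")
print(f"largest gap over 100 steps: {max(gaps):.2f}")
```

The gap stays tiny for the first few steps and then grows until the two trajectories are effectively unrelated, which matches the intuition of "redrawing the state" in the idealized box.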

I'd be surprised if our world was much more stable (meaning something like: characterized by attractor states), but this seems like a hard and imprecise empirical question, and I respect that your intuitions differ.

Like, suppose that instead of "preventing extinction", we were talking about "preventing the industrial revolution". Sure, there are butterfly effects which could avoid that, but it seems weird.

From this Twitter thread, maybe one thinks that the world is net-good, and one wants to have more branches (would be the same as a clone of Earth). Like, it isn't clear to me why one should value worlds according to their quantum-probability mass.

For what it's worth Sean Carroll thinks the weights are needed

Thanks. Some of the relevant passages:

I think it's bunk that we get to control the number of splits, unless your value function is really weird and considers branches which are too similar to not count as different worlds.

Come on people, the whole point of MWI is that we want to get rid of the privileged role of observers!

It's unclear to me why this would be so weird

Not sure I agree.