TLDR

I present a proof-of-concept for the feasibility of predicting the cost-effectiveness of behavioral science research. The results suggest that funding EA-aligned behavioral science research could be more cost-effective at promoting well-being than investing in deploying the best existing interventions for promoting global (mental) health.

Introduction

We now live in a time when people’s decisions are more influential than ever before (Ord, 2020). On the one hand, bad decisions could destroy the future of humanity (e.g., through nuclear war, engineered pandemics, or misaligned advanced AI). On the other hand, good decisions could enable a vast future that is substantially better than the present (e.g., by averting existential risks and ending extreme poverty). Behavioral science can help improve those decisions by elucidating the underlying cognitive and affective mechanisms and the conditions under which they lead to good versus bad decisions. Applying behavioral science to crucial questions could therefore put us into a much better position to develop, improve, select, and deploy effective interventions preventing catastrophic decisions and fostering impactful altruistic contributions (Lieder & Prentice, 2022; Lieder, Prentice, & Corwin-Renner, 2022).

Consistent with this argument, we recently found that funding research projects that are designed to enable or develop better interventions can be substantially more cost-effective than investing everything into interventions that are already available today. This general finding could be especially true for high-quality behavioral science research on crucial questions. Much of the previous behavioral science research did not live up to this standard (Simmons, Nelson, & Simonsohn, 2011). This means that behavioral science can become substantially more effective at generating impactful insights and interventions than it has been in the past. This requires asking better questions and answering them with better methods. Thus far, most efforts to improve behavioral science have focused on how questions are answered (Hales, Wesselmann, & Hilgard, 2019). I believe that asking the right questions is at least as important as answering them well. I therefore think that we should be at least as methodical about choosing our questions as we are about answering them. So far, there are virtually no principled methods for choosing good scientific questions. The Research Priorities Project aims to change that by developing a method that helps behavioral scientists choose more impactful research questions.

A good scientific question is one whose investigation leads to a large gain in knowledge that has impactful applications that substantially increase the well-being of humanity. This requires that the question has three properties (Gainsburg et al., 2022): i) it has to be important, ii) it has to be tractable, and iii) it has to be neglected. The Research Priorities Project’s team of behavioral scientists and effective altruists is currently developing a method for identifying such questions.

A general method for identifying impactful behavioral science research questions

So far, our method proceeds in four steps:

- Ask many researchers from a wide range of research areas within psychology what the most important questions of psychology might be.

- Select a subset of the questions that score the highest on importance, tractability, and neglectedness.

- Estimate the expected increase in human well-being that will be enabled by researching each topic per dollar invested.

- Rank the potential topics by their expected cost-effectiveness.

We are currently working on Step 3 of our first iteration of this process. Our plan for Step 3 is to apply our general model of the cost-effectiveness of scientific research to perform quantitative cost-effectiveness analyses of the positive social impact of researching different topics. Concretely, we aim to develop Guesstimate models of how effective the resulting interventions can be expected to be and how beneficial the behaviors promoted by those interventions are for the well-being of humanity. These models will allow us to probabilistically simulate how impactful the deployment of the resulting interventions might be. Combining this information with the cost of deploying those interventions yields an estimate of their cost-effectiveness, which we can compare to the cost-effectiveness of the best existing intervention. Based on this comparison, we can then evaluate how likely it is that the new intervention will be more cost-effective and how those potential benefits compare to the cost of the research.

Proof of Concept: the value of research on promoting prosocial behavior

As a proof of concept, we applied this approach to the research topic of how people find and adopt a prosocial purpose. The following sections describe the model we developed to estimate the cost-effectiveness of such research and the results of this analysis.

A simple model

The logic of our model of the impact of research on promoting prosocial behavior is as follows. Understanding how people can find a highly beneficial prosocial purpose might improve the effectiveness of existing online interventions for fostering purpose (Baumsteiger, 2019). Deploying the improved interventions might increase the frequency of kindness, which in turn increases the well-being of the people who commit the acts of kindness and their beneficiaries.

I have built a first probabilistic model using Guesstimate (https://www.getguesstimate.com/models/21450). Each of the rectangles in this model specifies the probability distribution of a variable, such as the frequency of prosocial behavior, given the values of other variables and data from the literature. The arrows show which variables influence which other variables. This model has three parts. The first part models the effect of the research on the effectiveness of the intervention, the resulting increase in kindness, and its effect on well-being. The second part models the cost of deploying the improved intervention. And the third part of the model combines the estimates generated by the first two parts to estimate the cost-effectiveness of conducting the research as opposed to investing all the money in the deployment of the best existing intervention. We now briefly describe each of the three parts of the model in turn.

Figure 1. Screenshot from the part of the probabilistic model concerning the effect of the intervention on well-being.

Predicting the effect of the intervention resulting from the research

As illustrated in Figure 1, the first part of the model describes how the research might lead to increased well-being. This part has two components: i) the effect of the intervention on prosocial behavior, and ii) the effect of prosocial behavior on well-being. We combine the predictions of these two parts to obtain a probabilistic prediction of the total increase in well-being we can expect to achieve per person who is reached by the intervention. The model measures this benefit by the number of hours for which a person’s subjective well-being was increased by one standard deviation. We call this measure hours of happiness.

The first component describes how much the newly developed intervention might increase the frequency of prosocial behavior and how long that effect might last. These two predictions are combined into an overall estimate of the number of additional acts of prosocial behavior that a person who has been exposed to the intervention will perform because of it. We make the conservative assumption that, for the new intervention, those quantities will not be systematically better or worse than they were for previous interventions. Our model, therefore, samples their values from the corresponding empirical distribution of effect sizes reported in meta-analyses (Menting et al., 2013; Mesurado et al., 2019; Shin & Lee, 2021) and an additional relevant recent study they overlooked (Baumsteiger, 2019). We thereby use the variability in the effectiveness of recently developed interventions as an estimate of the upside potential of developing a new intervention.

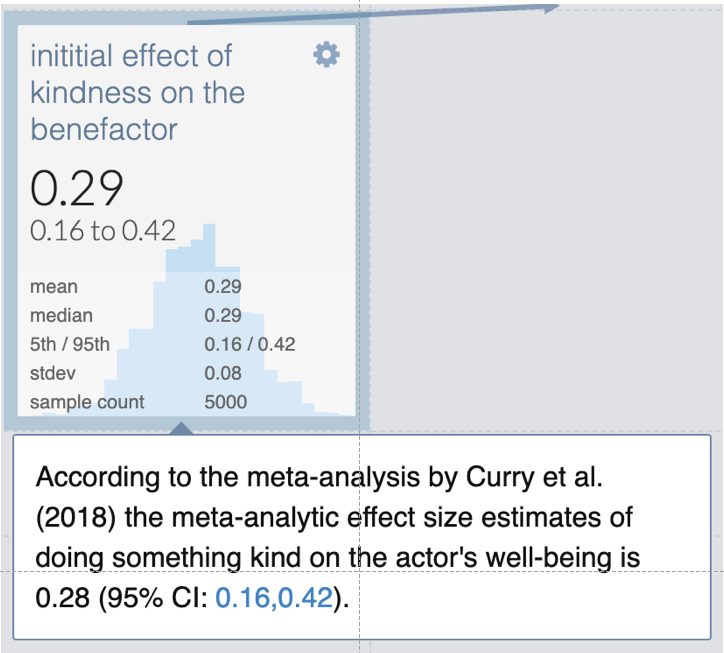

The second component estimates the additional hours of happiness that each act of prosocial behavior brings to its beneficiaries and the person who performs it. This estimate combines the intensity of the positive emotions people experience when they act prosocially (Curry et al., 2018) or benefit from someone’s prosocial behavior (Pressman et al., 2015) with how long those positive emotions typically last (Verduyn et al., 2009). Figure 2 shows one of the model’s building blocks, namely the effect of one act of kindness on the happiness of the person who performs it. As you can see, this is a simple Normal distribution that reflects the confidence interval on the corresponding effect size from the meta-analysis by Curry et al. (2018).

Figure 2. Probabilistic model of the initial effect of performing an act of kindness on well-being. The values in the box are the empirical estimates based on 5000 samples. They may therefore slightly deviate from the expected value of the corresponding distribution.
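The structure of this first part of the model can be sketched in a few lines of Python. This is only a minimal illustration of how the two components combine, not the Guesstimate model itself: every number and distribution below is a hypothetical placeholder rather than a value taken from the cited meta-analyses, and the function and variable names are invented for this sketch.

```python
import math
import random

# Hypothetical placeholder values standing in for an empirical distribution
# of intervention effects (additional prosocial acts per day).
PLACEHOLDER_DAILY_INCREASES = [0.0, 0.02, 0.05, 0.10, 0.25]


def simulate_hours_per_person(n_samples=5000, seed=0):
    """Sample hours of happiness created per person reached (toy inputs)."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        # Component i): additional acts = increase in frequency x duration of the effect.
        extra_acts_per_day = rng.choice(PLACEHOLDER_DAILY_INCREASES)
        effect_duration_days = rng.lognormvariate(math.log(180.0), 0.8)  # placeholder
        extra_acts = extra_acts_per_day * effect_duration_days

        # Component ii): hours of happiness per act = intensity (in SDs of
        # subjective well-being) x how long the emotion lasts (in hours),
        # summed over the actor and the beneficiary.
        actor_intensity_sd = max(rng.gauss(0.3, 0.1), 0.0)        # placeholder
        beneficiary_intensity_sd = max(rng.gauss(0.3, 0.1), 0.0)  # placeholder
        emotion_duration_hours = rng.lognormvariate(0.0, 0.5)     # placeholder
        hours_per_act = (actor_intensity_sd + beneficiary_intensity_sd) * emotion_duration_hours

        samples.append(extra_acts * hours_per_act)
    return samples


if __name__ == "__main__":
    hours = simulate_hours_per_person()
    print("mean hours of happiness per person:", sum(hours) / len(hours))
```

Sampling the intervention effect from a list of previously observed values mirrors the assumption that a new intervention is neither systematically better nor worse than earlier ones.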

Predicting the cost of deploying the intervention

We assumed that the most cost-effective intervention resulting from the research on promoting prosocial behavior would be a digital intervention similar to the one developed by Baumsteiger (2019). We therefore modeled the cost of deploying the intervention per person who completes it as the cost of online advertising per install divided by the proportion of people who complete the intervention upon starting it. This led to an estimated cost of $11 per person who completes the intervention.
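As a small worked example of this cost calculation: because only a fraction of installs ends in a completed intervention, the cost per completion is the cost per install divided by the completion rate. The function name and the input values below are hypothetical and are not the inputs behind the $11 estimate.

```python
def cost_per_completion(cost_per_install, completion_rate):
    """Advertising cost per person who completes the intervention.

    cost_per_install: dollars of advertising spent per install.
    completion_rate: proportion of people who complete the intervention
        after starting it (must be in (0, 1]).
    """
    if not 0.0 < completion_rate <= 1.0:
        raise ValueError("completion_rate must be in (0, 1]")
    return cost_per_install / completion_rate


if __name__ == "__main__":
    # Hypothetical inputs: $2 per install, 25% of starters complete.
    print(cost_per_completion(2.0, 0.25))
```

Halving the completion rate doubles the cost per completion, which is one reason why cost-effectiveness estimates of this kind tend to be sensitive to that proportion.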

Predicting the relative cost-effectiveness of investing in the research

We modeled the cost-effectiveness of the new intervention as the ratio between the hours of happiness created per person who completes the intervention and the deployment cost per person who completes the intervention. Similarly, the cost-effectiveness of research (CE_research) is the quotient of the value of research (VOR) and the research costs (RC), that is, CE_research = VOR/RC.

Building on the value of information framework (Howard, 1966), we model the VOR as the expected value of the best opportunity we will have to invest money in good causes after the research has been completed minus the expected value of the opportunities that are already available. To obtain a lower bound on the value of research, we assume that its only benefit will be to add a new intervention to the repertoire of interventions that EAs and EA organizations can fund. We model the value of either decision situation as the amount of human happiness that can be created with the remaining amount of money. The amount of happiness we can create without the research is the product of the budget and the cost-effectiveness of the best intervention that already exists (i.e., budget*CE_prev). The amount of human happiness that can be created after a research project has produced a new intervention with cost-effectiveness CE_new is the product of the amount of money that is left after the research has been completed (budget-RC) and the maximum of the cost-effectiveness of the new intervention and the best previous intervention (i.e., max (CE_prev, CE_new)). Under these assumptions, the value of research is

VOR = E[(budget-RC)*max(CE_prev, CE_new)] - E[budget*CE_prev],

where E[X] denotes the expected value of X.

This assumes that the intervention resulting from the research will be deployed if and only if it is more cost-effective than the best existing intervention. Moreover, the present analysis modeled the total amount of funds that will be deployed to increase human well-being as a log-Normal distribution with a 95% confidence interval ranging from 10 million dollars to 1 billion dollars.
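The following Python sketch shows how the VOR formula can be estimated by Monte Carlo simulation. It is a simplified illustration under stated assumptions, not a reimplementation of the Guesstimate model: CE_prev is treated as a fixed number, the research cost of $1 million and the spread of CE_new are hypothetical placeholders, the budget and CE_new are sampled independently, and the scalability limit on the new intervention is ignored. Only the budget's 95% interval is taken from the text above.

```python
import math
import random


def value_of_research(budgets, ce_new_samples, ce_prev, research_cost):
    """VOR = E[(budget - RC) * max(CE_prev, CE_new)] - E[budget * CE_prev]."""
    if len(budgets) != len(ce_new_samples):
        raise ValueError("need one CE_new sample per budget sample")
    n = len(budgets)
    with_research = sum(
        (b - research_cost) * max(ce_prev, ce_new)
        for b, ce_new in zip(budgets, ce_new_samples)
    ) / n
    without_research = sum(b * ce_prev for b in budgets) / n
    return with_research - without_research


def simulate(n_samples=5000, seed=0, ce_prev=88.0, research_cost=1e6):
    """Return (VOR, CE_research) for toy inputs; see the assumptions above."""
    rng = random.Random(seed)
    # Log-normal budget whose central 95% interval is $10 million to $1 billion.
    mu_budget = 0.5 * (math.log(1e7) + math.log(1e9))
    sigma_budget = (math.log(1e9) - math.log(1e7)) / (2 * 1.96)
    budgets = [rng.lognormvariate(mu_budget, sigma_budget) for _ in range(n_samples)]
    # Hypothetical long-tailed distribution for CE_new (hours of happiness per dollar).
    ce_new = [rng.lognormvariate(math.log(6.6), 1.6) for _ in range(n_samples)]
    vor = value_of_research(budgets, ce_new, ce_prev, research_cost)
    return vor, vor / research_cost  # CE_research = VOR / RC


if __name__ == "__main__":
    vor, ce_research = simulate()
    print("VOR (hours of happiness):", vor)
    print("CE_research (hours of happiness per research dollar):", ce_research)
```

Because of the max(CE_prev, CE_new) term, samples in which the new intervention turns out worse than the existing one cost only the research budget, whereas samples in the right tail of CE_new raise the value of the entire remaining budget.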

Building on McGuire and Plant (2021), we used delivering task-shifted CBT group therapy for depression to women in Africa as our placeholder for the most effective intervention. McGuire and Plant (2021) found this intervention to be 9 to 12 times as cost-effective as cash transfers. Moreover, we assume that the budget that can be invested into the new intervention is the minimum of the amount of money that could be cost-effectively invested in the intervention (scalability) and the portion of the budget remaining after the research has been funded. We model the scalability of the intervention as the product of the number of people who have internet access, the proportion of them who would click on an ad for the intervention if it is presented to them often enough, and the cost per click of online advertising.
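The scalability cap described above can be written as a short function. This is a sketch with hypothetical names and toy inputs; the actual values of the number of internet users, the click proportion, and the cost per click are not reproduced here.

```python
def deployable_budget(internet_users, click_proportion, cost_per_click,
                      budget, research_cost):
    """Money that can be invested in the new intervention.

    scalability = internet users x proportion who would click x cost per click;
    the deployable amount is the smaller of the scalability and what is left
    of the budget after the research has been funded.
    """
    scalability = internet_users * click_proportion * cost_per_click
    remaining = max(budget - research_cost, 0.0)
    return min(scalability, remaining)


if __name__ == "__main__":
    # Toy inputs: 1,000 reachable users, half of whom would click, $2 per click.
    print(deployable_budget(1000, 0.5, 2.0, budget=5000.0, research_cost=100.0))
```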

Preliminary Results

Running Monte Carlo simulations with our probabilistic model allowed us to estimate i) the cost-effectiveness of interventions that might result from research on promoting prosocial behavior and ii) the cost-effectiveness of conducting such research. We found that the expected value of the cost-effectiveness of the interventions that further research on promoting prosocial behavior might produce is about 24 hours of happiness per dollar, and its median is about 6.6 hours of happiness per dollar (see Figure 3a). The uncertainty about the cost-effectiveness of new interventions for promoting prosocial behavior is very high (standard deviation: 53.1 hours of happiness per dollar). Importantly, the probability distribution of the cost-effectiveness of the resulting interventions has a long right tail. Therefore, there is a very high upside potential (95th percentile: 116.8 hours of happiness per dollar).

Figure 3. Results of our cost-effectiveness analysis of research on promoting prosocial behavior.

(a) Predicted cost-effectiveness of interventions resulting from the research.

(b) Cost-effectiveness of funding research and then selecting either the new intervention or the best previous intervention.

(c) Ratio of the cost-effectiveness of funding research and then selecting the best intervention vs. investing everything into already existing interventions.

The expected cost-effectiveness of the best existing intervention for promoting global mental health (88 hours of happiness per dollar) is higher than the expected value of this distribution, but significantly lower than its 95th percentile. This suggests that research on promoting prosocial behavior has the potential to lead to an intervention that is even more cost-effective. Crucially, we only have to deploy the new intervention if it is more cost-effective than the previous one. If it turns out to be less cost-effective, then we continue deploying the best previous intervention, and we only lose a small amount of money for conducting the research. Moreover, because online interventions are highly scalable and the total amount of money that EA funding organizations have earmarked for increasing human well-being is many orders of magnitude larger than the cost of a research project, an online intervention whose cost-effectiveness is even slightly higher than the cost-effectiveness of the best existing intervention could have a massive positive impact. Our model takes this into account and therefore predicts that the cost-effectiveness of conducting the research and then deciding which intervention to deploy is about 7.5 times the cost-effectiveness of the best existing intervention (see Figure 3b-c). Moreover, our estimate of the cost-effectiveness of research on promoting prosocial behavior is about 3 times as high as our estimate of the cost-effectiveness of strategically investing in scientific research in general. This suggests that research on promoting prosocial behavior is especially cost-effective compared to both interventions and general scientific research.

Our model makes a number of assumptions. To determine which of these assumptions are critical for our conclusions, we performed a sensitivity analysis. We found that our estimate of the cost-effectiveness of research on prosocial behavior is not overly sensitive to any of the individual assumptions. Some of the more important assumptions are the proportion of people who follow through with the intervention upon starting it and the effect of kindness on the well-being of the beneficiary. If the proportion of people who complete the intervention upon encountering it were only half as high, then the cost-effectiveness of the research would also be only half as high. Future research should therefore refine our assumptions about these variables based on a more thorough review of existing research findings. By contrast, many other assumptions, such as the cost of the research, were not crucial within the range of possible values we considered.

Discussion

The proof-of-concept cost-effectiveness analysis suggests that it is possible to quantitatively estimate the positive social impact of psychological research on a given topic. Moreover, it indicates that, despite the high uncertainty, such estimates can be useful for deciding whether a potential research topic is worth pursuing. Encouragingly, we found that psychological research could have a larger positive social impact than some of the best existing interventions for improving global (mental) health.

Perhaps the most important takeaway from this case study is that scientific research and R&D could be more cost-effective than investing directly in interventions when it has a non-negligible chance to produce new or improved interventions that are substantially more cost-effective than the best existing interventions. This can be the case even if the interventions that result from the research are less cost-effective than the best existing intervention on average. What matters most is that there is high upside potential. That is, the crucial factor is whether there is a non-negligible chance that the research projects will lead to at least one new intervention that is (much) more cost-effective than the best existing intervention. Our case study suggests that some research on motivating and enabling people to engage in (effective) altruism could have this property.

We should keep in mind that the cost-effectiveness analysis presented in this post was just the very first exploration of the feasibility of making predictions about the potential impact of behavioral science research. It is therefore difficult to assess how much credence we should put into its conclusions. Constructive criticism and future improvements to the model are likely to change our estimate moving forward. We would be in a much better position to ascertain how much trust we should put in the cost-effectiveness estimate if it were based on a systematic, objective method whose reliability had already been established empirically.

Next steps

To address these limitations, our next step will be to translate what we have learned into a general method for predicting the cost-effectiveness of (behavioral science) research in hours of happiness per dollar invested. Once we have developed the method, we will test if it is sufficiently objective (“Does it lead to similar estimates when different teams apply it?”) and sufficiently accurate (“Can it reliably discern highly impactful research from less impactful research?”).

We hope that once we have developed a method that is sufficiently objective and sufficiently accurate to be useful, we will be able to apply it to identify the most important scientific questions in increasingly larger research areas, culminating in a prioritized list of the most important research topics of the behavioral sciences along with cost-effectiveness estimates. This will also make it possible to compare the cost-effectiveness of behavioral science research on specific topics to the cost-effectiveness of the best existing interventions in established cause areas, such as the charities recommended by GiveWell.

I hope that, in the long run, this line of work will shift the priorities of the behavioral sciences and the criteria of academic funding agencies towards research that is highly beneficial for the long-term flourishing of humanity. This is a big and audacious project that will require the joint efforts of methodologists and domain experts. We invite you to join us in estimating the cost-effectiveness of behavioral science research and helping behavioral scientists ask more impactful research questions. There are many opportunities for you to contribute that cover a range of skill sets and interests, and it is conceivable that funding for part-time or full-time roles might become available in the future. So if you are potentially interested in collaborating on this project, please contact us.

Acknowledgements

This analysis is part of the Research Priorities Project, which is an ongoing collaboration with Izzy Gainsburg, Philipp Schönegger, Emily Corwin-Renner, Abbigail Novick Hoskin, Louis Tay, Isabel Thielmann, Mike Prentice, John Wilcox, Will Fleeson, and many others. The model is partly inspired by Jack Malde’s earlier post on whether effective altruists should fund scientific research. I would like to thank Joel McGuire and Michael Plant from the Happier Lives Institute, Emily Corwin-Renner, Cecilia Tilli, and Andreas Stuhlmüller for helpful pointers, feedback, and discussions. I would also like to thank Rachel Baumsteiger and Isabel Thielmann for sharing information on research projects that this analysis is based on.

References

- Baumsteiger, R. (2019). What the world needs now: An intervention for promoting prosocial behavior. Basic and applied social psychology, 41(4), 215-229.

- Curry, O. S., Rowland, L. A., Van Lissa, C. J., Zlotowitz, S., McAlaney, J., & Whitehouse, H. (2018). Happy to help? A systematic review and meta-analysis of the effects of performing acts of kindness on the well-being of the actor. Journal of Experimental Social Psychology, 76, 320-329.

- Gainsburg, I., Pauer, S., Abboub, N., Aloyo, E. T., Mourrat, J., & Cristia, A. (2022). How Effective Altruism can help psychologists maximize their impact. Perspectives on Psychological Science.

- Hales, A. H., Wesselmann, E. D., & Hilgard, J. (2019). Improving psychological science through transparency and openness: An overview. Perspectives on Behavior Science, 42(1), 13-31.

- Howard, R. A. (1966). Information value theory. IEEE Transactions on systems science and cybernetics, 2(1), 22-26.

- Lieder, F., & Prentice, M. (in press). Life Improvement Science. In F. Maggino (Ed.), Encyclopedia of Quality of Life and Well-Being Research, 2nd Edition, Springer. https://doi.org/10.13140/RG.2.2.10679.44960

- Lieder, F., Prentice, M., & Corwin-Renner, E. R. (2022). An interdisciplinary synthesis of research on understanding and promoting well-doing. OSF Preprint

- McGuire, J., & Plant, M. (2021, October). Strong Minds: Cost-Effectiveness Analysis. Technical Report. Happier Lives Institute. https://www.happierlivesinstitute.org/report/psychotherapy-cost-effectiveness-analysis/

- Menting, A. T., de Castro, B. O., & Matthys, W. (2013). Effectiveness of the Incredible Years parent training to modify disruptive and prosocial child behavior: A meta-analytic review. Clinical Psychology Review, 33(8), 901-913.

- Mesurado, B., Guerra, P., Richaud, M. C., & Rodriguez, L. M. (2019). Effectiveness of prosocial behavior interventions: a meta-analysis. In Psychiatry and neuroscience update (pp. 259-271). Springer, Cham.

- Ord, T. (2020). The Precipice. Bloomsbury Publishing.

- Pressman, S. D., Kraft, T. L., & Cross, M. P. (2015). It’s good to do good and receive good: The impact of a ‘pay it forward’ style kindness intervention on giver and receiver well-being. The Journal of Positive Psychology, 10(4), 293-302.

- Shin, J., & Lee, B. (2021). The effects of adolescent prosocial behavior interventions: a meta-analytic review. Asia Pacific Education Review, 22(3), 565-577.

- Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant. Psychological Science, 22(11), 1359-1366.

- Verduyn, P., Delvaux, E., Van Coillie, H., Tuerlinckx, F., & Van Mechelen, I. (2009). Predicting the duration of emotional experience: two experience sampling studies. Emotion, 9(1), 83.

My comment is gonna be pretty negative, but I think it's excellent that you included a cost-effectiveness estimate (CEE). IMO, CEEs should pretty much be mandatory for cause area proposals, and it bugs me every time I see a post without one. Writing a CEE makes you easier to pick on (which is why I'm picking on you), but having a flawed CEE is far preferable to being not even wrong. And your CEE is pretty structurally solid, and I'm glad you made it. I like how you look at not just the EV but the variance because variance matters—a higher variance means the new intervention has a better chance of being better than the baseline intervention. I also like that you're comparing consistent units (happy hours per dollar).

Ok, here's the negative part:

The CEE in the linked Guesstimate looks optimistic to the point of being impossible. Given the quoted numbers of 32 acts of kindness per day with each act producing an average of 0.7 happy hours, that's 22 happy hours produced per person-day of acts of kindness. If you said people's acts of kindness increased overall happiness by 10%, I'd say that sounds too high. If you say it produces 22 happy hours, when the average person is only awake for 17 hours...well that's not even possible.

I am also very skeptical of the reported claim that a one-time intervention of "watching an elevating video, enacting prosocial behaviors, and reflecting on how those behaviors relate to one’s value" (Baumsteiger 2019) can produce an average of 1600 additional acts of kindness per person. That number sounds about 1000x too high to me.

In general, psych studies are infamous for reporting impossibly massive effects and then failing to replicate. The given cost-effectiveness involves a conjunction of several impossibly massive effects, producing a resulting cost-effectiveness that I would guess is about 100,000x too high.

This still doesn't tell us the cost-effectiveness of the proposed research project, which is what you were trying to estimate. The upside to the research project basically entirely comes from the small probability that the intervention turns out to be way more cost-effective than I think it is, and I think the chance of that is much higher than 1 in 100,000, but I still think the Guesstimate is significantly overestimating the cost-effectiveness of further research in this area.

This isn't to say no behavioral intervention could be cost-effective; I understand that this was just one idea, and it's a relatively simple model. I do think it's important to have a promising preliminary cost-effectiveness estimate before putting a lot of funding into a cause area.

I have investigated the issues you highlighted, diagnosed the underlying errors, and revised the model accordingly. The root of the problem was that I had sourced some of the estimates of the frequency of prosocial behavior from studies on social behavior under special, unrepresentative conditions, such as infants interacting with adults for 10 min while being observed by researchers and prosocial behavior in TV series. I have removed those biased estimates of the frequency of prosocial behavior in the real world. As a consequence, the predicted lifetime increase in the number of kind acts per person reached by the intervention dropped from 1600 to 64. The predicted cost-effectiveness of the research dropped from 110 times the cost-effectiveness of StrongMinds to 7.5 times the cost-effectiveness of StrongMinds.

In producing this revised version, I also made a few additional improvements. The most consequential of those was to base the estimated cost of deploying the intervention on empirical data on the effectiveness of online advertising in $ per install.

I am currently using Squiggle to program a much more rigorous version of this analysis. That version will include additional improvements and rigorously document and justify each of the model’s assumptions.

Thanks for following up! Those sound like good changes.

Another thing you might do (if it's feasible) is list the studies you're using on something like Replication Markets.

Thank you for your feedback, Michael, and thank you very much for making me aware of those specialized prediction platforms. I really like your suggestion. I think making predictions about the likely results of replication studies would be helpful for me. It would push me to critically examine and quantify how much confidence I should put in the studies my models rely on. Obtaining the predictions of other people would be a good way to make that assessment more objective. We could then incorporate the aggregate prediction into the model. Moreover, we could use prediction markets to obtain estimates or forecasts for quantities for which no published studies are available yet. I think it might be a good idea to incorporate those steps into our methodology. I will discuss that with our team today.

Thank you for engaging with and critiquing the cost-effectiveness analysis, Michael! There seem to be a few misunderstandings I would like to correct.

The value you calculated is the sum of the additional happiness of all the people to whom the person was kind. This includes everyone they interacted with that day in any way: the strangers they smiled at, the friends they messaged, the colleagues they helped at work, the customers they served, their children, their partner, and their parents and other family members. If you consider that the benefit of the kindness might be distributed over more than a dozen people, then 22 hours of happiness might amount to no more than 1-2 hours per person. Moreover, the estimates also take into account that a person who benefits from your kindness today might still be slightly happier tomorrow.

The intervention by Baumsteiger (2019) was a multi-session program that lasted 12 days and involved planning, performing, and documenting one's prosocial behavior for 10 days in a row. The distribution of effect sizes in the Guesstimate model is based on many different studies, some of which were even more intensive.

Most of the estimates are based on meta-analyses of many studies. The results of meta-analyses are substantially more robust and more reliable than the result of a single study.

I think you are right that this first estimate was too optimistic. In particular, the probability distribution of the frequency of prosocial behavior is currently based on four estimates from different studies. One of those studies led to an estimate that appears to be far too high. This might be because they defined prosocial behavior more liberally because it involved interactions with children, or because participants knew that they were being observed. I will think about what the more general problem might be and how it can be addressed systematically.