5. Recommended interventions

5.1 Review of major risks related to conscious AI

In previous sections, we discussed various risks related to conscious AI. As we've seen, these risks are both wide-ranging (they affect AIs as well as humans) and severe.

So, what can we actually do, today, to reduce risks related to conscious AI?

This post outlines three overall recommendations based on our research.

- Recommendation I: Don’t intentionally build conscious AI.

- Recommendation II: We support research in the following areas: consciousness evals, how to enact AI welfare protections in policy, surveys of expert opinion, surveys of public opinion, decoding consciousness attributions, & provisional legal protections for AI.

- Recommendation III: We support the creation of an AI public education campaign (specifically related to conscious AI).

Here's how each of these interventions targets the aforementioned risks:

Recommendations ↓

| Risks → | AI suffering | Human disempowerment | Depravity | Geopolitical instability |

| I. Don’t intentionally build conscious AI | ✓ | ✓ | ✓ | ||

| II. Supported research | Consciousness evals | ✓ | |||

| AI welfare policy | ✓ | ||||

| Expert surveys | ✓ | ||||

| Public surveys | ✓ | ✓ | ✓ | ||

| Decoding consciousness attributions | ✓ | ✓ | |||

| Provisional legal protections | ✓ | ||||

| III. AI public education campaigns | ✓ | ✓ | ✓ | ✓ | |

Before delving into the recommendations, we briefly comment on how exactly we evaluate different options for interventions.

5.2 What makes a good intervention?

We aim to take a principled approach to determining the best & most effective strategies for reducing risks related to conscious AI. To this end, we adopt the following four criteria to assess the advantages & disadvantages of possible interventions[1] (Dung 2023a):

- Beneficence: The proposed intervention should reduce net suffering risk (of humans, machines, animals, & other moral patients) by as much as possible. At the same time, it should not significantly increase the probability/severity of other negative outcomes nor decrease the probability/utility of other positive outcomes.

- Action-guiding: The proposed intervention should suggest sufficiently concrete courses of action. That is, there should be minimal vagueness about how it ought to be implemented. It also means that an intervention should not imply conflicting or contradictory courses of action.

- Consistent with our epistemic situation: The proposed intervention should broadly presuppose uncertainty regarding prevailing theoretical issues, the future trajectory of technological advances, & future sociocultural developments. In other words, interventions should not require knowledge that is beyond our current grasp & especially knowledge that we are unlikely to attain in the immediately foreseeable future (e.g. knowledge of how the capacity for phenomenal consciousness can arise from non-conscious matter).

- Feasibility: The proposed intervention should be realistic to implement. In other words, for the relevant actor[2] (e.g. an AI lab or a governmental agency), the intervention should not only be practically possible to implement, but there should also be a reasonable expectation of success.

The order of our recommendations reflects our overall judgments with respect to these criteria.

5.3 Recommendation I: Don’t intentionally build conscious AI

Description

Overview: Our first recommendation is to pursue regulatory options for halting or at least decelerating research & development that directly aims at building conscious AI.

Justification: Attempts to build conscious AI court multiple serious risks (AI suffering, human disempowerment, & geopolitical instability), endangering not only humans but also AI[3]. What’s more, as we argue in the appendix (§A1), the positive motivations for building conscious AI (improved functionality, safety, insights into consciousness) are nebulous & speculative. Both experts (Saad & Bradley 2022; Seth 2023) and the general public[4] (§4.21) support the idea of stopping or slowing efforts to build conscious AI.

Conditions of implementation: Perhaps the most specific & well-discussed proposal comes from the philosopher of mind Thomas Metzinger (2021a), who has also served on the European Commission’s High-Level Expert Group on AI[5]. Metzinger’s proposal consists of two parts[6]:

- A global moratorium on lines of research explicitly aimed at building conscious AI, lasting until 2050 & open to amendment or repeal earlier than 2050 in light of new knowledge or developments

- Increased investment into neglected research streams

Our discussion of recommendation II (§5.4) expands on the second part. As concerns the first, the main issue is determining which lines of research are affected. Currently, multiple actors across academia & industry openly aim at building conscious AI. One starting point would be for governments & funding institutions to restrict funding to projects whose explicit goal is to build conscious AI. In addition, research projects whose goals are of strategic relevance to conscious AI (&, in particular, AI suffering) may also fall under the scope of this moratorium. This could include, for instance, work on creating AI with self-awareness (self-models) or affective valence.

This selective scope improves the feasibility of this intervention relative to global bans[7] on AI research (Dung 2023a). Furthermore, because the benefits of conscious AI are unclear (§A1), a ban on conscious AI research is unlikely to trigger a “race to the bottom” (though see our discussion of rogue actors building conscious AI).

Special considerations

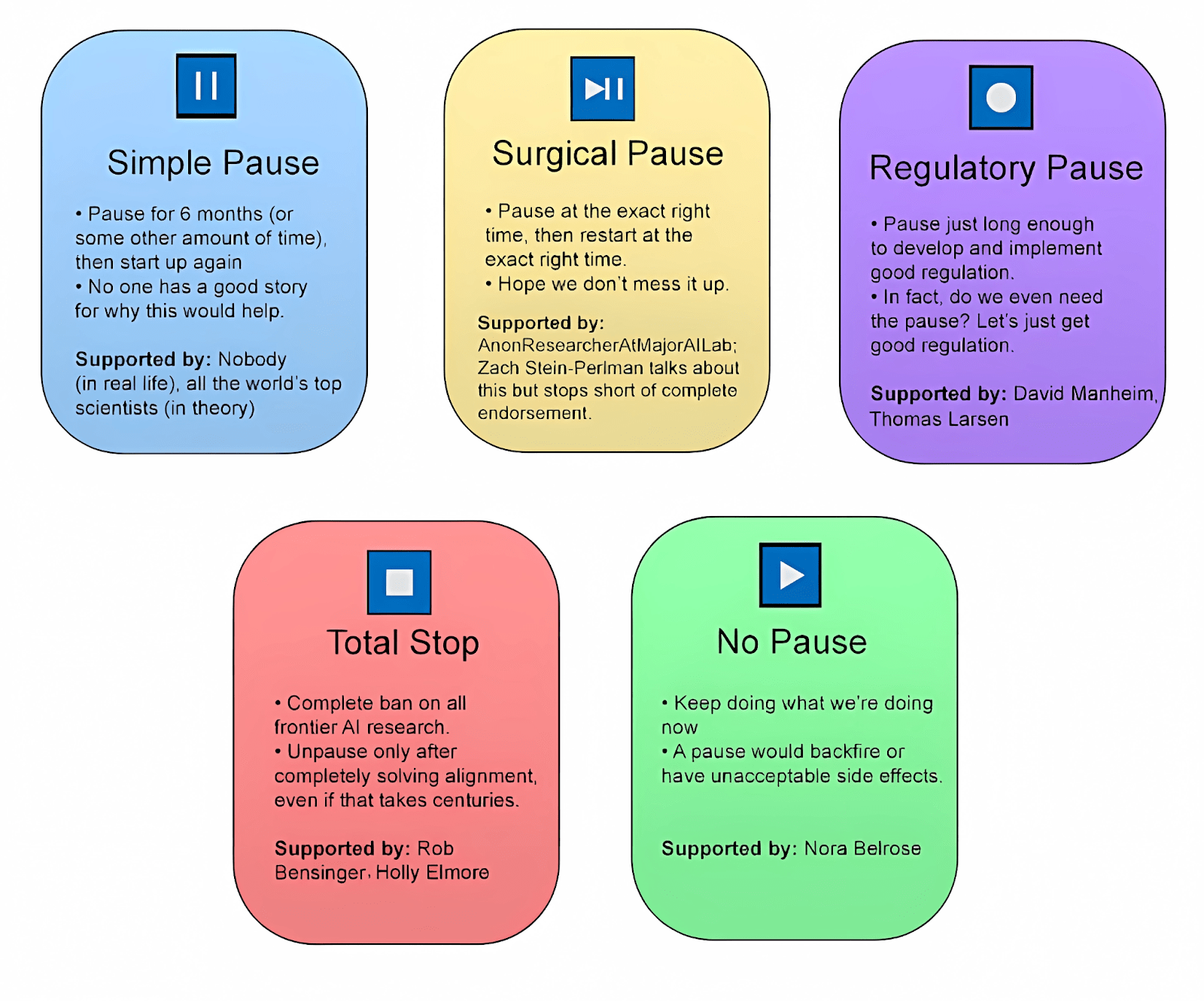

Action-guiding? Notwithstanding the foregoing specifications, the proposal to stop or slow progress towards conscious AI is beset by significant practical ambiguities. Chief among these is the timing of the intervention: (1) when would be the most opportune moment to initiate the ban, & (2) for how long should the ban be sustained to be most effective? We do not attempt to resolve these issues in this policy paper; we note them as key priorities for future work. That said, future work on these questions may profit from existing debates over pausing AI, which have yielded helpful & constructive frameworks for further discussion (Alexander 2023).

Finally, other key details to be resolved include the conditions for amendment or repeal: what new developments or achievements could warrant (a) revising the scope of the moratorium, (b) terminating it before 2050, or (c) extending it past 2050? These provisos are also instrumental to the overall promise of this intervention.

5.4 Recommendation II: Supported research

5.4.1 Consciousness evals

Description

Overview: We still lack a robust way to test for the presence of conscious experience in artificial systems (§4.121). Further work could take the direction of the theory-light approach, borrowed from animal welfare research. Specifically, we propose focusing on the following questions:

- What capacities should be assessed? How should they be defined and refined so that they are empirically tractable and robust?

- How should the different criteria be integrated into an overall assessment?

Researchers could also investigate other avenues like the recent effort with self-reports and interpretability (Perez & Long 2023). Efforts should be aimed at reducing the risk of false negatives and false positives, and devising tests which cannot be (easily) gamed.
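To make the integration question above more concrete, the sketch below shows one naive way of combining several indicators into an overall score: a weighted average of per-indicator credences. It is purely illustrative. The `Indicator` type, the placeholder indicator names, the weights, and the credences are all hypothetical, not proposals, and the output is an aggregate score rather than a calibrated probability that a system is conscious.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """One capacity assessed under a theory-light approach (hypothetical)."""
    name: str
    weight: float    # evidential weight assigned to this indicator
    credence: float  # credence (0 to 1) that the system has the capacity


def aggregate_credence(indicators: list[Indicator]) -> float:
    """Weighted average of per-indicator credences; one of many possible rules."""
    total_weight = sum(ind.weight for ind in indicators)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    for ind in indicators:
        if not 0.0 <= ind.credence <= 1.0:
            raise ValueError(f"credence for {ind.name!r} must lie in [0, 1]")
    return sum(ind.weight * ind.credence for ind in indicators) / total_weight


# Hypothetical indicators and numbers, for illustration only.
indicators = [
    Indicator("placeholder indicator A", weight=1.0, credence=0.2),
    Indicator("placeholder indicator B", weight=2.0, credence=0.4),
    Indicator("placeholder indicator C", weight=2.0, credence=0.1),
]
score = aggregate_credence(indicators)
print(round(score, 2))  # 0.24
```

A weighted average is simple and transparent, but it lets strong evidence on one indicator be diluted by weak evidence on others; a rule that treats some indicators as necessary would rank the same system differently, which is part of what makes the integration question open.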

Justification: Even if we are successful in limiting direct efforts to build conscious AIs, consciousness might still emerge as an unexpected capacity. We need to be able to say whether a system is conscious, since this will inform whether it may be treated as a mere object, and we should be able to do so with higher certainty than we can now. While a complete theory of consciousness would be very helpful, we could still make progress towards evaluating the moral significance of AI systems by developing tests for consciousness, which could at least narrow our uncertainty.

Special considerations

Consistency with our epistemic situation: Creating tests for consciousness should be done with future technological advances in mind, since progress in AI systems might make certain tests obsolete and/or possible to game.

Feasibility: The theory-light approach might fail to integrate different indicators into an overall assessment. It might also be that we can’t create a test for consciousness without a general theory of consciousness. Finally, certain philosophers' concerns that consciousness cannot be empirically studied (Levine 1983) might turn out to be true.

5.4.2 AI welfare research and policies

Description

Overview: If future AI systems merit moral consideration, we need to understand what interests they’ll have and how to protect them (Ziesche & Yampolskiy 2019; Hildt 2022). AI welfare research should investigate the former, while devising AI rights should address the latter.

Exploration of AI welfare policies depends on the kinds of moral status artificial systems will have. Legal protections for AI systems whose moral status is akin to that of some animals (where our degree of certainty about consciousness varies across species) will differ from those for systems whose moral status is akin to that of humans, if not higher (Shulman & Bostrom 2021). A key question, therefore, concerns the types and degrees of moral status different AI systems will have, which might depend on the qualities and degrees of their consciousness (Hildt 2022). Just as moral status is often assigned to animals based on taxonomy, we could look for ways to categorise AI systems based on architectural or functional features. We therefore see AI welfare research developing as a specific line of artificial consciousness research (Mannino et al 2015).

When it comes to AI welfare policies, it is important to note that AI rights need not come at the expense of human rights. We should strive to find positive-sum solutions where possible, that is, actions which are beneficial for both humans and AIs (Sebo & Long 2023), and be open to compromises if they can have significant impact (like making changes to one’s diet for environmental or animal welfare considerations).

Justification: Even if future AI systems are probably not conscious, it is worth exploring what it might mean for future AI systems to be treated well, so that we are prepared for this possibility. Similarly, even in the face of current uncertainty around the kinds of moral status they’ll have, we can start thinking about policies under different scenarios.

Conditions of implementation: These efforts require highly interdisciplinary work. Consciousness researchers should work together with AI developers to define what welfare means for AI systems, and together with ethicists to resolve ambiguities around their moral status. This work will feed into policy efforts to find positive interventions for both humans and nonhumans.

Special considerations

Uncertainties: Assessing AI welfare and how to improve it might not be feasible until we have a more mature theoretical understanding of consciousness. More importantly, certain directions for policies might turn out to be misguided and even potentially harmful.

Alternative frameworks for AI rights: While the most conventional basis for AI protections is pathocentrism, some scholars advocate alternative frameworks, such as the social-relational framework, which “grants moral consideration on the basis of how an entity is treated in actual social situations and circumstances” (Harris & Anthis 2021; Gunkel 2022). It might be that we should give AI systems rights even if they are not conscious (§5.4.6). In this case, the discussion of AI rights should take into account not only consciousness evals but also the aims and needs of the broader societies into which AIs are integrated.

5.4.3 Survey on expert opinion

Description

Overview: Building on a recent academic survey of consciousness researchers with a variety of backgrounds (e.g. philosophy, neuroscience, psychology, and computer science) (Francken et al 2022), as well as a large-scale survey of AI practitioners on the future of AI, we propose a large-scale survey on machine consciousness that combines aspects of both:

- Respondents will be presented with a number of questions about the nature of artificial consciousness. For example, they could be given a list of conditions for consciousness and asked to assign each a probability of being necessary or sufficient, as well as a probability that it will be present in AI systems by a certain year (Sebo & Long 2023 perform this kind of analysis).

- Respondents will indicate their level of concern about different negative scenarios and risks related to conscious AI.
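As an illustration of how answers to the first kind of question could be combined, here is a minimal sketch. It assumes that respondents give, for each condition, a credence that it is necessary and a credence that AI systems will meet it by the target year, and that these judgments are independent across conditions. It computes the probability that no necessary condition is missing, which is only an upper bound on the probability of consciousness (the listed conditions need not be jointly sufficient), and it is not necessarily the exact procedure Sebo & Long (2023) use. The `Condition` type, condition names, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Condition:
    """A candidate condition for consciousness, as rated by one respondent (hypothetical)."""
    name: str
    p_necessary: float  # credence that the condition is necessary for consciousness
    p_present: float    # credence that AI systems will meet it by the target year


def p_no_necessary_condition_missing(conditions: list[Condition]) -> float:
    """Probability that no necessary condition is unmet, assuming independence.

    A condition rules out consciousness only if it is necessary AND absent,
    which happens with probability p_necessary * (1 - p_present).
    """
    prob = 1.0
    for c in conditions:
        prob *= 1.0 - c.p_necessary * (1.0 - c.p_present)
    return prob


# Hypothetical ratings for illustration only.
ratings = [
    Condition("biological substrate", p_necessary=0.1, p_present=0.0),
    Condition("self-model", p_necessary=0.5, p_present=0.6),
    Condition("recurrent processing", p_necessary=0.5, p_present=0.5),
]
print(round(p_no_necessary_condition_missing(ratings), 2))  # 0.54
```

Aggregating such per-respondent figures across the survey (for example, reporting their median) would be a further design choice.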

Justification: Conducting a survey on expert opinion on AI consciousness will be useful for the following reasons:

- It can inform work on consciousness evals.

- It can identify the kinds of AI work which might be indirectly focused on building conscious AI (and this might be relevant for the implementation of a potential ban on building conscious AI, see §5.3).

- It can identify major uncertainties or neglected areas and therefore where to focus future research.

- It can help prioritisation of work on certain risks over others.

- Repeating the survey annually will make it possible to monitor trends.

Conditions of implementation: It will be valuable to include a wide range of researchers, both AI experts and consciousness experts. Undoubtedly, the most informed answers will come from experts whose focus is artificial consciousness. However, there may not be many such experts (in Francken et al’s (2022) survey, only 10% of the 166 respondents had a background in computer science), and in any case there is value in having a diverse set of views. The risk of uninformed views is higher for AI practitioners than for consciousness researchers, since the overlap between consciousness in general and artificial consciousness is larger than the overlap between AI in general and conscious AI. In other words, consciousness experts will likely be better able to comment on AI consciousness than computer scientists. Nevertheless, there is considerable benefit in including a range of AI specialisations, since some AI developers, despite not working directly on conscious AI, may be working towards it indirectly by developing capabilities that might be conditions for AI consciousness.

Special considerations

Questions on moral status: Asking respondents to assess the severity of various scenarios and risks might entail assessing the moral status or weight of future AIs. In other words, experts might implicitly make judgments about the comparative value of AIs relative to humans. Schukraft (2020) argues that this method of measuring capacity for welfare can be misleading, since moral intuitions do not necessarily point to objective truths. For this reason, questions involving scenarios and risks related to conscious AIs should be formulated with care and should not be used to draw conclusions about the different degrees of moral status AIs should have.

5.4.4 Survey on public opinion

Description

Overview: The data we currently have on the public’s views about AI consciousness is limited. Therefore, we recommend the following:

- More public opinion polls about AI consciousness should be conducted in general. Other than the annual AIMS survey (Pauketat et al 2023), we were only able to find two other polls from recent years on this topic.

- Public opinion polls about AI consciousness should be conducted in countries other than the US and UK; currently, all publicly available polls on this issue come from these two countries. While these two countries will likely have an outsized impact on AI development and on legislation concerning AI consciousness, they comprise only around 5% of the global population combined. Many, if not most, future AI agents will likely be subject to the laws and treatment of the remaining 95%, which makes understanding that population's beliefs and attitudes important.

- Public opinion polls about AI consciousness should collect demographic information, such as age, gender, religious affiliation, political orientation, and socioeconomic status, in order to examine how answers to other questions vary with these factors.

- Public opinion polls about AI should include questions designed to gauge how much respondents care about this issue, such as:

  - How they would rank the importance of AI suffering relative to other AI risks (such as AI-generated misinformation or algorithmic bias), or how they would rank the importance of AI risks relative to other political issues.

  - How many government resources they think should be spent on risks related to AI suffering.

  - Whether they would be willing to make hypothetical tradeoffs to prevent AI suffering (e.g. pay $X a month more for a more humane LLM, analogous to the price gap between eggs from free-range vs. caged chickens).
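As a rough check on the "around 5%" figure for the US and UK combined, here is the arithmetic using approximate mid-2020s populations in billions. These figures are assumptions for illustration, not data from the paper: about 0.335 for the US, 0.068 for the UK, and 8.0 worldwide.

```latex
\frac{P_{\mathrm{US}} + P_{\mathrm{UK}}}{P_{\mathrm{world}}}
  \approx \frac{0.335 + 0.068}{8.0}
  = \frac{0.403}{8.0}
  \approx 0.050
```

That is, roughly 5%, leaving about 95% of the world's population outside these two countries.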

Justification: Among other things, this information would help forecast how future discourse about AI consciousness is likely to play out (for instance, whether it is at risk of being politically polarised) and would help determine how many resources to invest in interventions to sway public opinion (§5.5).

Conditions of implementation: We would be keen to see independent researchers, think tanks, nonprofits, and media outlets alike conduct polls on these issues. These polls would be most effective if conducted on a periodic basis (e.g. annually) to monitor how opinions evolve.

Special considerations

While these polls could show policymakers that their constituents care about this issue, they could also show the opposite, in which case they might lead to a decrease in the attention and public resources devoted to this problem. Pollsters should be mindful of this risk when publishing and discussing their results.

5.4.5 Decoding consciousness attribution

Description

Overview: We also support research that is aimed at deciphering the logic behind people’s intuitive consciousness attributions[8]: what factors drive people to perceive AI as conscious?

Justification: Consciousness is a complex, multifaceted phenomenon. This being the case, a holistic understanding of consciousness must reconcile philosophical & scientific explanations with a thorough account of the factors driving consciousness perceptions[9]. This is crucial because consciousness attributions may be subject to confounders: factors which compromise the reliability of our judgments about which things are conscious. Such confounders might include cognitive biases underwriting anthropomorphic tendencies (biasing towards false positive judgments), or financial incentives to eschew regulations protecting conscious AIs (biasing towards false negative judgments). Confounders may unwittingly prejudice consciousness attributions one way or the other, or they may be exploited by bad actors.

Research on the mechanics of consciousness attributions can reduce uncertainty about our intuitive perceptions of consciousness, promote responsible AI design (Schwitzgebel 2024), & help to steer away from the false negative & false positive scenarios.

Conditions of implementation: Specifically, we propose two research directions:

- Anthropomorphism & the intentional stance:

  - Which properties of AIs (e.g. anthropomorphic design cues; P&W) tend to elicit consciousness attributions from humans?

  - What factors lead people to treat things as intentional agents (Dennett)? What factors engage people’s theory of mind reasoning capacities?

  - What is the relationship between the uncanny valley effect[10] & consciousness perceptions? Given that the uncanny valley effect can be overcome through habituation (Złotowski et al 2015), can certain interventions also positively influence consciousness perceptions?

- Relations with AI:

  - What non-technical[11] features (e.g. emotional intelligence) favour perceptions of AI as trustworthy?

  - How do macro-level factors (e.g. different actors’ vested interests) influence their consciousness attributions? How can we cultivate sensitivity to biases towards anthropomorphism & anthropodenial (de Waal 1997)?

5.4.6 Provisional legal protections

Description

Overview: We support research which (1) clarifies the extent to which abusive behaviours towards AI can negatively influence relations towards humans & (2) in the event that this hypothesis is supported, explores strategies that could mitigate adverse spillover effects.

Justification: Our final research recommendation addresses the risk of depravity (§3.24). The risk of depravity essentially rests on an empirical hypothesis: that abusing life-like AI (especially social actor AI) might have detrimental effects on our moral character, eventually altering the way we behave towards other people for the worse. Although the specific mechanisms underpinning this transitive effect (“spillover”) remain to be rigorously worked out, there is enough evidence of such a connection to warrant precautionary efforts (Guingrich & Graziano 2024). Moreover, the fact that depravity is a risk that occurs across all quadrants implies that precautionary efforts could be robustly efficacious despite uncertainty about which scenario we are in or will be in.

Conditions of implementation: First & foremost, we suggest the following research directions aimed at testing & clarifying the transitive hypothesis:

- Are certain types of relations with AI (e.g. emotional, sexual, employee) more likely than others to have spillover effects on human relations? What sorts of factors promote or hinder this transitive effect (e.g. anthropomorphic design cues)?

- Which cognitive mechanisms subserve spillover effects?

- What effects might culture have on the type & extent of spillover effects?

- Could spillover effects be positively leveraged to support the cultivation of human virtues? In other words, could AI help us to treat other humans better &, in doing so, become better people (Guingrich & Graziano 2024)?

If the transitive hypothesis fails to be supported by further research, then the risk of depravity can be safely dismissed. However, if evidence continues to mount in favour of the transitive hypothesis, then it would be prudent to explore policy options to prevent negative spillover effects resulting in harm to humans. One possible strategy would be to extend provisional rights & legal protections to AI. These concessions would be provisional in the sense that they do not depend upon strong proof that AIs are conscious (e.g. passing a standardised consciousness eval; §5.4.1). Rather, they would apply only to AIs that are capable of engaging in certain types of relations with humans (i.e., those that are prone to have negative spillover effects). This proposal has two cardinal motivations. Most importantly, by mitigating adverse spillover effects from AI abuse, it could indirectly decrease harm to humans. Moreover, by prohibiting certain types of abusive behaviours towards AI, it could decrease the risk of AI suffering. We support research aimed at more deeply assessing the scope, viability, & appeal of this proposal.

Special considerations

Uncertainties: Initial survey data show that there is some public support for protecting AI against cruel treatment & punishment (Lima et al 2020). In spite of this, & even granted robust empirical validation of the transitive hypothesis, the political appeal of the idea of provisional legal protections remains dubious. In the first place, it may appear anthropocentric because it is not motivated by AI’s own interests. In the second place, the mechanism of risk reduction is indirect & its impact difficult to measure. Nonetheless, we believe these directions are worth exploring for the reasons outlined above.

Lastly, the type & extent of provisional legal protections would need to be carefully balanced against the risk of social hallucination (Metzinger 2022). This intervention could be misinterpreted as signalling the imminence of conscious AI. Misconceptions about the nature & intent of this proposal could, in the worst case, prompt a transition into the false positive scenario (especially if these misconceptions are exploited by non-conscious AI to gain further resources & better achieve its own instrumental goals; §4.12).

5.5 Recommendation III: AI public opinion campaigns

Description

Overview: As mentioned previously, preliminary evidence (Lima et al 2020) suggests that informational interventions can increase public support for AI moral consideration. Lima et al found that exposing participants to different kinds of information (such as the set of requirements for legal personhood, or a debunking of the misconception that legal personhood is exclusive to natural entities) significantly increased support for various robot rights, such as the right to hold assets or the right to a nationality. Therefore, we recommend that, in the near future, governments and nonprofits deploy public opinion campaigns based on this research. Additionally, we believe further research in this area is promising, including attempts to replicate these findings and evaluations of other interventions that may have larger effect sizes or be more effective for particular groups.

Justification: While further research on public opinion would help prioritise how many resources to spend on these interventions (as discussed in the section above), based on what we know today, it seems likely that some degree of awareness-raising and public education will be necessary in order to prevent the most severe risks, like AI suffering.

Implementation details: It may be that the opportune moment to deploy such public interventions lies in the future rather than now. The AIs that exist today seem overwhelmingly likely not to be conscious, and it would be premature to enact legal protections for current AIs. Therefore, it would likely be more effective to implement these campaigns in the future, focusing in the meantime on further research into similar interventions. Signs that the time is right to deploy public opinion campaigns may include polls showing that at least 45% of experts believe some current AIs are conscious, an AI companion app reaching the Top 100 chart on the iOS App Store, and activist movements for AI rights beginning to form organically.
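The signals listed above could be tracked as a simple checklist. The sketch below is hypothetical throughout: the `Signals` type and field names are ours, and because the text does not say whether one signal or several should be required, the `min_signals` threshold is an assumption left as a parameter (defaulting to any single signal).

```python
from dataclasses import dataclass

EXPERT_THRESHOLD = 0.45  # "at least 45% of experts", as mentioned in the text


@dataclass
class Signals:
    """Hypothetical snapshot of the signals listed in the text."""
    expert_share_current_ai_conscious: float  # share of polled experts, 0 to 1
    companion_app_in_ios_top_100: bool
    organic_ai_rights_movements: bool


def triggered_signals(s: Signals) -> list[str]:
    """Names of the signals that are currently present."""
    fired = []
    if s.expert_share_current_ai_conscious >= EXPERT_THRESHOLD:
        fired.append("expert opinion")
    if s.companion_app_in_ios_top_100:
        fired.append("companion app popularity")
    if s.organic_ai_rights_movements:
        fired.append("organic activism")
    return fired


def consider_deploying(s: Signals, min_signals: int = 1) -> bool:
    """Flag a campaign for consideration once at least `min_signals` signals are present."""
    return len(triggered_signals(s)) >= min_signals


# Hypothetical example values, not observations.
example = Signals(
    expert_share_current_ai_conscious=0.5,
    companion_app_in_ios_top_100=True,
    organic_ai_rights_movements=False,
)
print(triggered_signals(example))                  # ['expert opinion', 'companion app popularity']
print(consider_deploying(example))                 # True
print(consider_deploying(example, min_signals=3))  # False
```

Raising `min_signals` trades earlier action for more confidence that the moment is right, which mirrors the timing dilemma discussed below.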

Special considerations

Uncertainties: While waiting to deploy these campaigns may be more appropriate than deploying them today, there is also a risk that once AI rights become a more salient issue, the public’s views will already be crystallised, making them harder to influence. Therefore, there is a possibility that deploying these interventions sooner rather than later is preferable.

This post is part 4 in a 5-part series entitled Conscious AI and Public Perception, encompassing the sections of a paper by the same title. This paper explores the intersection of two questions: Will future advanced AI systems be conscious? and Will future human society believe advanced AI systems to be conscious? Assuming binary (yes/no) responses to the above questions gives rise to four possible future scenarios—true positive, false positive, true negative, and false negative. We explore the specific risks & implications involved in each scenario with the aim of distilling recommendations for research & policy which are efficacious under different assumptions.
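The two binary questions define a 2×2 grid, analogous to a confusion matrix in which society's belief plays the role of the prediction and actual consciousness the role of the ground truth. A minimal sketch of that mapping follows; the `Scenario` and `classify` names are ours, for illustration only.

```python
from enum import Enum


class Scenario(Enum):
    TRUE_POSITIVE = "true positive"    # AI is conscious and is believed to be
    FALSE_POSITIVE = "false positive"  # AI is not conscious but is believed to be
    TRUE_NEGATIVE = "true negative"    # AI is not conscious and is not believed to be
    FALSE_NEGATIVE = "false negative"  # AI is conscious but is not believed to be


def classify(ai_is_conscious: bool, society_believes_conscious: bool) -> Scenario:
    """Map answers to the two yes/no questions onto one of the four futures."""
    if ai_is_conscious:
        if society_believes_conscious:
            return Scenario.TRUE_POSITIVE
        return Scenario.FALSE_NEGATIVE
    if society_believes_conscious:
        return Scenario.FALSE_POSITIVE
    return Scenario.TRUE_NEGATIVE


for conscious in (True, False):
    for believed in (True, False):
        print(f"conscious={conscious}, believed={believed}: {classify(conscious, believed).value}")
```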

Read the rest of the series below:

- Introduction and Background: Key concepts, frameworks, and the case for caring about AI consciousness

- AI consciousness and public perceptions: four futures

- Current status of each axis

- Recommended interventions (this post) and clearing the record on the case for conscious AI

- Executive Summary (posting later this week)

This paper was written as part of the Supervised Program for Alignment Research in Spring 2024. We are posting it on the EA Forum as part of AI Welfare Debate Week as a way to obtain feedback before official publication.

[1]

These 4 criteria partially coincide with 3 major risk aversion strategies from decision theory applied in animal sentience research (Fischer 2024). (1) Worst-case aversion prioritises minimising the potential for highly negative outcomes. Options are ranked based on perceived severity of the worst possible outcome rather than solely on expected value maximisation. This strategy optimises for security & safety, & may coincide with a focus on beneficence. (2) Expected value maximisation prioritises interventions that are more likely to produce concrete, measurable positive results. This approach optimises for measurability & effectiveness, & may coincide with a focus on feasibility. (3) Ambiguity aversion prioritises interventions that rely the least upon uncertain information. Options are ranked based on estimated proportion of knowledge vs. ignorance of the key facts. This means that interventions that rely upon unlikely but known probabilities are preferred over interventions that rely upon assumptions with a wider probability distribution. This strategy optimises for clarity & confidence in decision-making processes, & may coincide with a focus on action-guiding & feasibility.
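A toy sketch of how the first two strategies can rank the same options differently, using the textbook definitions of worst-case (maximin) and expected-value rankings. The options and payoffs are hypothetical placeholders, not estimates for any real intervention, and ambiguity aversion is not modelled here.

```python
from typing import Callable

# Each option maps to a list of (probability, value) outcomes.
Outcomes = list[tuple[float, float]]

# Hypothetical placeholder payoffs, chosen only to show that the rules can disagree.
options: dict[str, Outcomes] = {
    "safe option": [(1.0, 2.0)],
    "risky option": [(0.9, 5.0), (0.1, -10.0)],
}


def expected_value(outcomes: Outcomes) -> float:
    """Probability-weighted average value (expected value maximisation)."""
    return sum(p * v for p, v in outcomes)


def worst_case(outcomes: Outcomes) -> float:
    """Value of the worst outcome with non-zero probability (worst-case aversion)."""
    return min(v for p, v in outcomes if p > 0)


def best_by(rule: Callable[[Outcomes], float], opts: dict[str, Outcomes]) -> str:
    """Name of the option that scores highest under the given ranking rule."""
    return max(opts, key=lambda name: rule(opts[name]))


print(best_by(expected_value, options))  # risky option (3.5 vs 2.0)
print(best_by(worst_case, options))      # safe option (2.0 vs -10.0)
```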

[2]

Most interventions will require action by parties other than oneself. In such cases, feasibility should not presuppose their backing of the intervention. Rather, feasibility will also need to take into account the probability that the other necessary actors can be convinced to enact the desired intervention, in addition to the probability that, if enacted, the intervention will achieve positive results (Dung 2023a).

[3]

Consistent with a preventative ethics approach (Seth 2021), by avoiding building conscious AI, we avoid bringing vulnerable individuals into existence. At the same time, we also sidestep future moral dilemmas related to conscious AI, including such thorny issues as trade-offs between the welfare of humans & that of AIs, as well as how to live alongside morally autonomous AI (ibid). Conscious AI is a genie that, once unleashed, is difficult to put back into the bottle. That is to say, it will be difficult to revert to a state before conscious AI without committing some kind of violence.

[4]

The 2023 Artificial Intelligence, Morality, & Sentience (AIMS) survey found that 61.5% of US adults supported banning the development of sentient AI (an increase from 57.7% in 2021; Pauketat et al 2023).

[5]

In 2019, the High-Level Expert Group published the Ethics Guidelines for Trustworthy AI. However, Metzinger later criticised the guidelines (2019; 2021b). In his view, the guidelines were industry-dominated (suffering from "regulatory capture"), short-sighted (largely ignoring long-term risks, including those related to conscious AI & artificial general intelligence), & toothless (adherence is not compelled by enforceable measures).

[6]

Both aspects of this proposal can be dated back to the Effective Altruism Foundation’s 2015 policy paper on AI risks & opportunities, which Metzinger co-authored (Mannino et al 2015).

[7]

The overall pace of AI progress (whether or not related to conscious AI) is a major concern among experts (Grace et al 2024). Movements such as “pause AI” or “effective decelerationism” advocate for global restraints on AI progress. Having said that, these conservative movements are not without their critics (LeCun 2024). Outright global bans on all AI research are likely infeasible due to the economically & geopolitically strategic value of advanced AI capabilities, & due to the multilateral coordination that would be required for such a ban to be effective (Dung 2023).

[8]

Much like how people’s tendency to attribute gender to different individuals tells us something interesting about their underlying concepts of gender (e.g. relative importance of biological factors, psychological identity, social roles…), people’s tendency to attribute consciousness to different things (AIs, animals, plants, rocks…) also tells us something interesting about their underlying concepts of consciousness (the relative importance of material constitution, cognitive sophistication, behavioural complexity...).

[9]

In other words, the “scientific” & “manifest” images (Sellars 1963) of consciousness must be brought to terms with one another.

[10]

The uncanny valley effect (Mori 2012) refers to a sensation of discomfort or unease that individuals feel when confronted with an artificial thing that appears almost, but not quite human.

[11]

The object of interest here is perceived trustworthiness, not “actual” trustworthiness. Accordingly, explainability & interpretability are not relevant.
