tl;dr: We should value large expected impact[1] rather than large inputs, but should get especially excited about megaprojects anyway because they're a useful tool we're now unlocking.

tl;dr 2: It previously made sense for EAs to be especially excited about projects with very efficient expected impact (in terms of dollars and labour required). Now that we have more resources, we should probably be especially excited about projects with huge expected impact (especially but not only if they're very efficient). Those projects will often be megaprojects. But we should remember that really we're excited about capacity to achieve lots of impacts, not capacity to absorb lots of inputs.

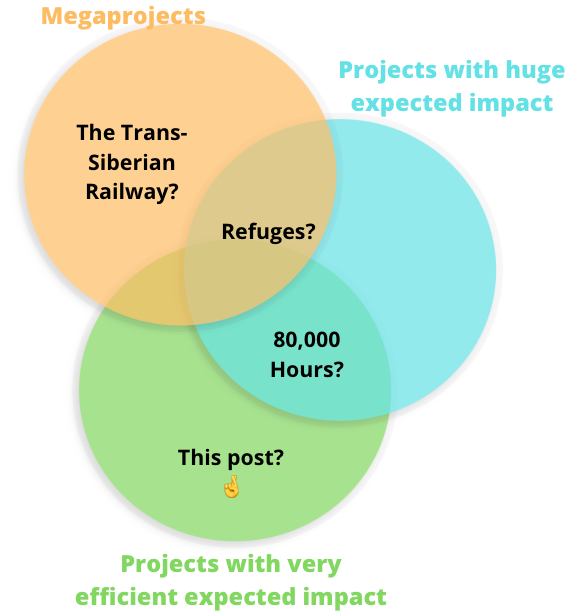

We should be excited about the blue and green circles, including but not limited to their overlaps with the orange circle. We should not be excited about the rest of the orange circle. Question marks are because estimating impact is hard, man. For more on refuges, see Concrete Biosecurity Projects (some of which could be big).

A lot of people are excited about EA-related megaprojects, and I agree that they should be. But we should remember that megaprojects are basically defined by the size of their inputs (e.g., "productively" using >$100 million per year), and that we don't intrinsically value the capacity to absorb those inputs. What we really care about is something like maximising the expected moral value of the world. Megaprojects are just one means to that end, and actually we should generally be even more excited about achieving the same impacts using fewer inputs & smaller projects (if there are ways to do that).

How can we reconcile these thoughts, and why should we in any case still get excited about - and pay special attention to - generating & executing megaproject ideas?

I suggest we think about this as follows:

- Think of a Venn diagram with circles for megaprojects, projects with huge expected impact, and projects with very efficient expected impact.

- Very roughly speaking, something like "maximising moral goodness" is the ultimate goal and always has been.

- Given that we have limited resources, we should therefore be especially excited about efficient expected impact, and that has indeed been a key focus of EA thus far.

- Projects like 80,000 Hours, FHI, and the book Superintelligence were each far smaller than megaprojects, but in my view probably had and still have huge expected impact, to an extent that'd potentially justify megaproject-level spending if that'd been necessary. That's great (it's basically even better than actual megaprojects!), and we'd still love more projects that can punch so far above their weight.

- But efficiency is just a proxy. Always choosing the most cost- or labour-efficient option is not likely to maximise expected impact; sometimes it's worth doing something that's less efficient in terms of one or more resources if it has a sufficiently large total impact.

- Meanwhile, and in contrast, larger projects (or large amounts of inputs) are also a useful proxy to have in mind when generating or considering project ideas, for three main reasons:

  - Many things that would achieve huge impact and are worth doing will be large, costly projects.

  - As a community and as individuals, we've often avoided generating, considering, or executing such ambitious ideas, due to our focus on efficiency and perhaps our insufficient ambition/confidence. This leaves "megaprojects" as a fairly untapped area of potentially worthwhile ideas.

  - Coming up with, prioritizing among, and (especially) executing large projects involves some relatively distinct and generalisable skills, so the more we do that, the more we unlock the ability to do more of it in future.

    - This is basically a matter of people/teams/communities testing fit and building career capital.

    - This is one reason to sometimes pursue large projects rather than smaller projects with similar/greater impact. But this still isn't a matter of valuing the size of projects as an end in itself.

- As we've gained and continue to gain more resources (especially money, human capital, political influence), efficiency is becoming a somewhat less useful proxy to focus on, "large projects" is becoming a somewhat more useful proxy to focus on, and we're sort-of "unlocking" an additional space of project options with huge expected impact.

- So we should now be explicitly focusing a decent chunk of our attention on coming up with, prioritizing among, and executing megaproject ideas with huge expected impact, and on building capacity to do those things. (If we don't make an explicit effort to do that, we'll continue neglecting it via inertia.)

- But we should remember that this is in addition to our community working toward smaller and/or more efficient projects. And we should remember that first and foremost we should be extremely ambitious in terms of impacts, and only as a means to that end also be willing to be extremely ambitious in terms of inputs absorbed.

Epistemic status: I spent ~30 mins writing a shortform version of this, then ~1 hour turning that into this post. I feel quite confident that what I'm trying to get across is basically true, but only moderately confident that I've gotten my message across clearly or that this is a very useful message to get across. The title feels kind-of misleading/off but was the best I could quickly come up with.

Acknowledgements: My thanks to Linch Zhang for conversations that informed my thinking here & for a useful comment on my shortform (though I imagine his thinking and framing would differ from mine at least a bit).

This post represents my personal views only.

[1] I'm using "expected impact" as a shorthand for "expected net-positive counterfactual moral impact".

Yeah, I agree with that.

Though I also think your comment could be read as implying that you think megaprojects won't themselves be cost-effective / labour-effective / in other senses efficient, relative to some bar like 80k or FHI or GiveWell's recommended charities or ACE's recommended charities. (Were you indeed thinking that?)

I think I disagree with that. That is, I'd guess that at least a few megaprojects that would be worth doing if we had the right founders will also clear the relevant efficiency bar. (I also think that at least a few won't clear the relevant efficiency bar. And, of course, most megaprojects that aren't worth doing will also not clear the relevant efficiency bar.)

I haven't attempted any relevant Fermi estimates or even really properly qualitatively thought about this before. My tentative disagreement is just based on the following fuzzy thoughts:

(But now that I've started to draft this reply, I realise that this might be an important question, that its answer isn't immediately obvious, and that I've hardly thought about it at all and I don't feel confident about my fuzzy thoughts on it. Also, in any case, this wouldn't mean I overall disagree with your comment and wouldn't change my views on what I said in the post itself.)