This is a linkpost for https://ealifestyles.substack.com/p/ea-origin-story-round-up

For every person who calls themselves an EA (or aspiring EA, or EA-adjacent), there’s a story. Some of those stories are more unusual than others. I put out a call on Twitter for people’s EA origin stories. Here are some of my favourites:

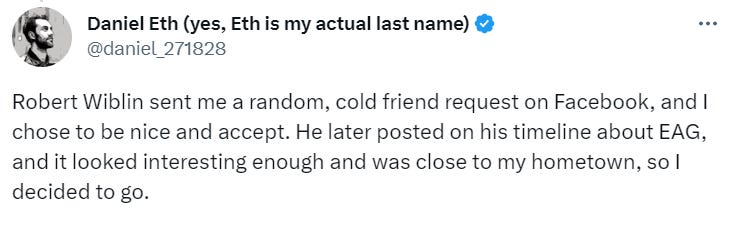

Rob Wiblin wants to be your friend

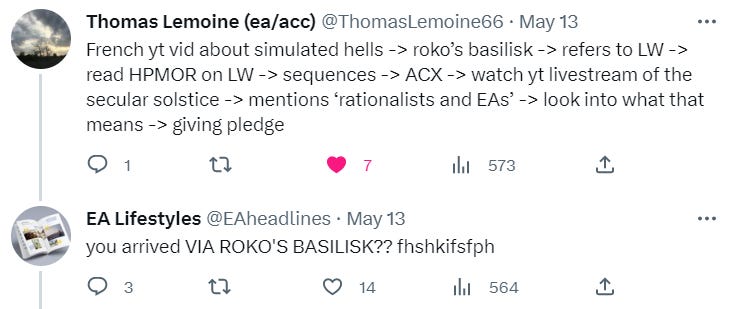

The infohazard to EA pipeline

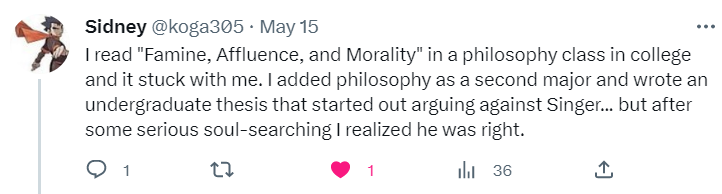

You come at the king, you best not miss

Flirt to convert ;)

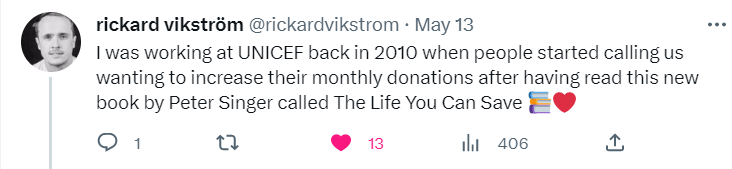

Wait, you’re calling to increase your donations??

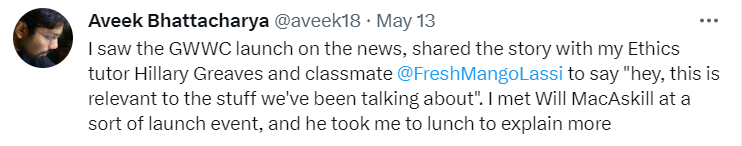

The OG origin story

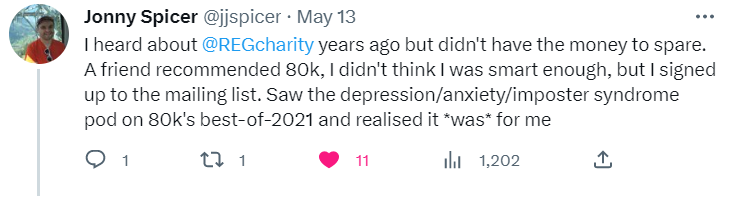

Yes, EA is for you :)

What’s your origin story? Post it below!

EA Lifestyles news: I'll be launching a paid version of the newsletter next week! In celebration, you'll get several emails showing you what's included in the paid version. Make sure to subscribe to get all the EA Lifestyles emails straight to your inbox.

Oh hey, I wrote a blog post (sorta) about this.

The TLDR: Since I was a teenager I've been looking for ways to give effectively, and once GiveWell appeared, doing so became a whole lot easier.

I was interested in most of the relevant cause areas in some form from childhood (the global poor, animal welfare, extinction risks), and independently formulated utilitarianism (not uncommon I’m told, both Bertrand Russell and Brian Tomasik apparently did the same), so I was a pretty easy sell.

I was assigned “Famine, Affluence, and Morality” and “All Animals are Equal” for a freshman philosophy course, and decided Peter Singer really got it and did philosophy in the way that seemed most important to me. Later, I revisited Singer while working on a long final paper about animal rights, and I ran into his TED talk on Effective Altruism.

At first I was sympathetic but not that involved. Gradually, though, I realized that EA was much more the style of ethics/activism I was interested in promoting than the other things on the table, or at least something to pursue on top of them. I founded my school’s Effective Altruism club while I still didn’t know all that much about the movement, and started learning more, especially after a friend (Chris Webster) recommended the 80,000 Hours podcast to me.

Around the same time, I read Reasons and Persons and met my friend and long-time collaborator Nicholas Kross, who introduced me to many rationalist ideas and thinkers. By the end of undergrad, I was basically a pretty doctrinaire, knowledgeable EA. Kind of a long story, but the whole thing happened in fits and starts, so I don’t know a great way to compress it.

Here's mine: My Effective Altruism Timeline (2013)

I just find it delightful that HPMOR is the start of so many people's EA origin stories, partly as a curiosity, since I took the opposite path from so many people (AMF > EA > LW > HPMOR).

Presumably there are many people alive today because of a chain of events that started with EY writing a fanfic, of all things.

That's kind of wonderful to think about - and I think it's hilarious that you have the opposite journey!