On the recent post on Manifest, there’s been another instance of a large voting group (30-40ish [edit to clarify: 30-40ish karma, not 30-40ish individuals]) arriving and downvoting any progressive-valenced comments (there were upvotes and downvotes prior to this, but in a more stochastic pattern). This is similar to what occurred with the eugenics-related posts last year. I wanted to flag it so that later readers have a picture of the dynamics at play.

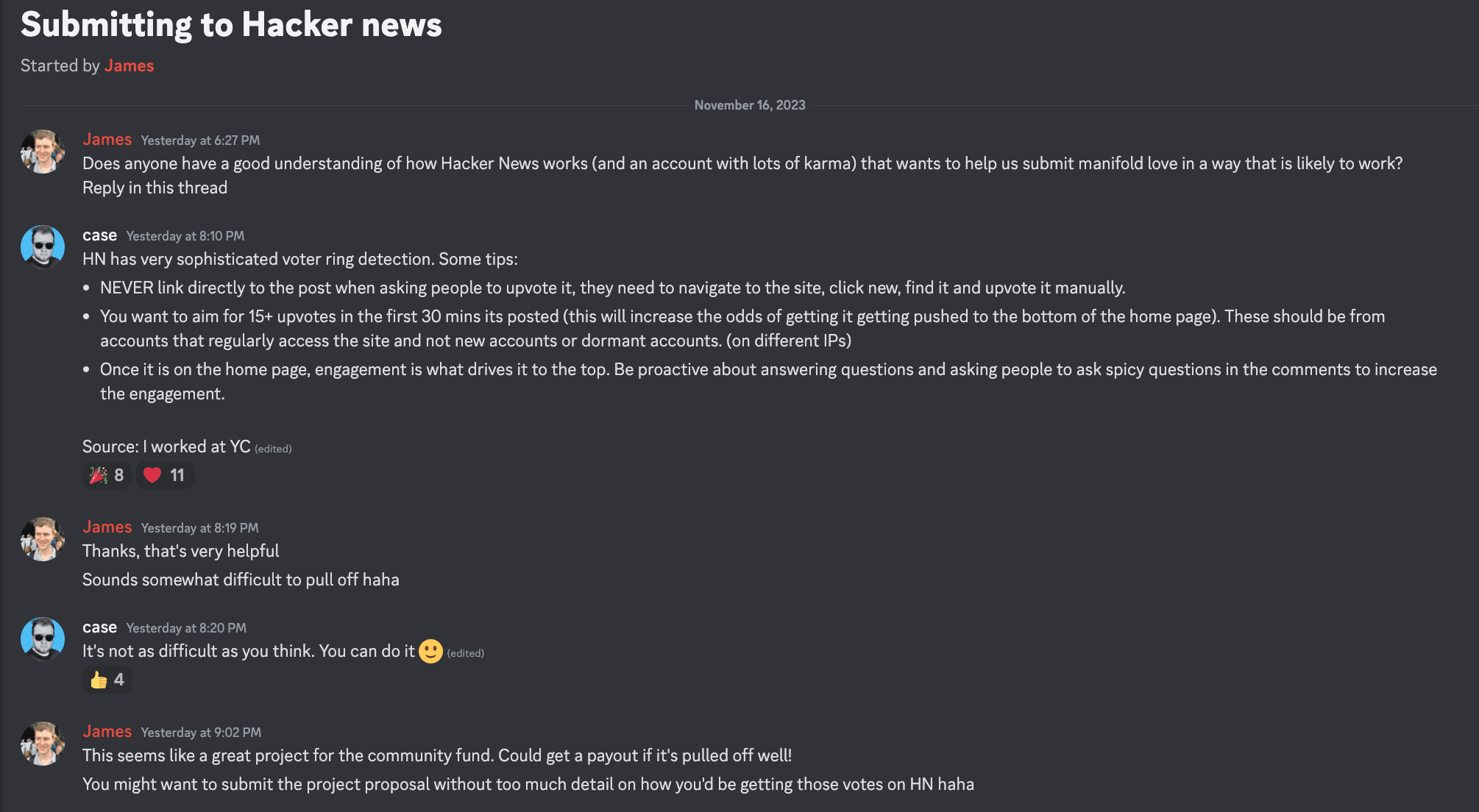

Manifold openly offered to fund voting rings in their Discord:

Just noting for anyone else reading the parent comment but not the screenshot, that said discussion was about Hacker News, not the EA Forum.

I would be surprised if it's 30-40 people. My guess is it's more like 5-6 people with reasonably high vote strengths. Also, I highly doubt that the overall bias of the conversation here leans towards suppressing progressive-valenced comments. EA is overwhelmingly progressive and has a pretty obvious anti-right bias (which, to be fair, I am a bit sympathetic to), so I feel like a warning in the opposite direction would be more appropriate.

My wording was imprecise: I meant 30-40ish in terms of karma. I agree the number of people is more likely to be 5-12. My point is less about overall bias than about a particular voting dynamic: at first, upvotes and downvotes arrived in a pretty typical pattern, then there was a large and sudden influx of downvotes on everything from one particular camp.

There really should be a limit on the number of strong upvotes/downvotes one can deploy on comments to a particular post, perhaps both "within a specific amount of time" and "in total." A voting group of ~half a dozen users should not be able to exert that much control over the karma distribution on a post. To be clear, I view (at least strong) targeted "downvoting [of] any progressive-valenced comments" as inconsistent with Forum voting norms.
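To make the two-part limit concrete, here is a minimal Python sketch of how a per-user, per-post cap might work. The class name `StrongVoteLimiter`, the method `try_strong_vote`, and all three thresholds are hypothetical and purely illustrative; this is not the ForumMagnum code or any setting actually used on LessWrong or the EA Forum.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class StrongVoteLimiter:
    """Hypothetical per-user, per-post cap on strong votes.

    Enforces two limits: at most `max_in_window` strong votes within any
    sliding `window_seconds` span, and at most `max_total` strong votes on
    a single post overall. All defaults are illustrative assumptions.
    """
    max_in_window: int = 3          # assumed: strong votes allowed per window
    window_seconds: float = 3600.0  # assumed: one-hour sliding window
    max_total: int = 10             # assumed: lifetime cap per user per post
    _history: dict = field(default_factory=lambda: defaultdict(list))

    def try_strong_vote(self, user_id: str, post_id: str, now: float) -> bool:
        """Record a strong vote at time `now` (seconds) if allowed; return whether it was."""
        times = self._history[(user_id, post_id)]
        # "In total" limit: reject once the per-post cap is reached.
        if len(times) >= self.max_total:
            return False
        # "Within a specific amount of time" limit: count votes in the window.
        recent = sum(1 for t in times if now - t < self.window_seconds)
        if recent >= self.max_in_window:
            return False
        # Only accepted votes are recorded, so rejected attempts cost nothing.
        times.append(now)
        return True


limiter = StrongVoteLimiter()
print([limiter.try_strong_vote("u1", "p1", now=t) for t in (0, 10, 20, 30)])
# → [True, True, True, False]
```

Because limits are keyed on `(user_id, post_id)`, one user hitting the cap on one post does not affect other users or other posts; a real forum would also have to decide whether retracted votes free up capacity, which this sketch ignores.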

At present, the only semi-practical fix would be for users on the other side of the debate to go back through the comments, guess which ones had been targeted by the voting group, and apply strong upvotes in the hope of roughly neutralizing the norm-breaking voting behavior of the voting group. Both the universe in which karma counts are corrupted by small voting groups and the universe in which karma counts are significantly determined by a clash between voting groups and self-appointed defenders seem really undesirable.

We implemented this on LessWrong! (indeed based on some of my own bad experiences with threads like this on the EA Forum)

The EA Forum decided to forum-gate the relevant changes, but on LW, people would be prevented from voting the way I think voting is happening here: https://github.com/ForumMagnum/ForumMagnum/commit/07e0754042f88e1bd002d68f5f2ab12f1f4d4908

Thanks for the suggestion, Jason! @JP Addison says that he forum-gated it at the time because he wanted to “see how it went over, whether they endorsed it on reflection. They previously wouldn’t have liked users treating votes as a scarce resource.” LW seems happy with how it’s gone, so we’ll go ahead and remove the forum-gating.

I really enjoyed this 2022 paper by Rose Cao ("Multiple realizability and the spirit of functionalism"). A common intuition is that the brain is basically a big network of neurons with input on one side and all-or-nothing output on the other, and the rest of it (glia, metabolism, blood) is mainly keeping that network running.

The paper is helpful for articulating how impoverished that model is, and it argues that the right level for explaining brain activity (and the resulting psychological states) might rely on the messy, complex, biological details, such that non-biological substrates for consciousness are implausible. (Some of those details: spatial and temporal determinants of activity, chemical transducers and signals beyond excitation/inhibition, self-modification, plasticity, glia, functional meshing with the physical body, multiplexed functions, generative entrenchment.)

The argument doesn't necessarily oppose functionalism, but I think it's a healthy challenge to my previous confidence in multiple realisability within plausible limits of size, speed, and substrate. It's also useful to point to just how different artificial neural networks are from biological brains. This strengthens my feeling of the alien-ness of AI models, and updates me towards greater scepticism of digital sentience.

I think the paper's a wonderful example of marrying deeply engaged philosophy with empirical reality.
