Quick takes

Is there any possibility of the forum having an AI-writing detector in the background which perhaps only the admins can see, but could be queried by suspicious users? I really don't like AI writing and have called it out a number of times but have been wrong once. I imagine this has been thought about and there might even be a form of this going on already.

In saying this, my first post on LessWrong was scrapped because they identified it as AI-written, even though I have NEVER used AI in online writing, not even for checking/polishing. So that system obviously isn't perfect.

Thanks for sharing! I'd have guessed they would be using something at least as good as pangram, but maybe it has too many false negatives for them, or it was rejected for other reasons and the wrong rejection message was shown.

Literally just cranked out a 2 minute average quality comment and got accused of being a bot lol. Great introduction to the forum. To be fair they followed up well and promptly, but it was a bit annoying because it was days later and by that stage the thread had passed and the comment was irrelevant.

As an ex forum moderator I can sympathize with them, not a fun job!

The Forum should normalize public red-teaming for people considering new jobs, roles, or project ideas.

If someone is seriously thinking about a position, they should feel comfortable posting the key info — org, scope, uncertainties, concerns, arguments for — and explicitly inviting others to stress-test the decision. Some of the best red-teaming I’ve gotten hasn’t come from my closest collaborators (whose takes I can often predict), but from semi-random thoughtful EAs who notice failure modes I wouldn’t have caught alone (or people think pretty differently...

I think something a lot of people miss about the “short-term chartist position” (these trends have continued until time t, so I should expect them to continue to time t+1) for an exponential that’s actually a sigmoid is that if you keep holding it, you’ll eventually be wrong exactly once.

Whereas if someone holds the “short-term chartist hater” position (these trends always break, so I predict it’s going to break at time t+1) for an exponential that’s actually a sigmoid, then if they keep holding it, they’ll eventually be correct exactly once.
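To make the counting concrete, here is a toy sketch. It assumes a trend that continues for some number of steps and then breaks once, and that both forecasters stop scoring after the break. The function name `score_forecasters` and the parameter `break_step` are made up for illustration.

```python
def score_forecasters(break_step):
    """Score two fixed one-step-ahead strategies on a trend that breaks once.

    Assumption: the trend continues at steps 1..break_step-1 and breaks
    at step break_step, after which nobody forecasts any more.
    """
    chartist_wrong = 0  # chartist always predicts "the trend continues"
    hater_correct = 0   # hater always predicts "the trend breaks"
    for t in range(1, break_step + 1):
        trend_continues = t < break_step
        if not trend_continues:
            # The single break step: chartist misses it, hater calls it.
            chartist_wrong += 1
            hater_correct += 1
    return {
        "chartist_wrong": chartist_wrong,
        "chartist_right": break_step - chartist_wrong,
        "hater_correct": hater_correct,
        "hater_wrong": break_step - hater_correct,
    }


if __name__ == "__main__":
    print(score_forecasters(10))
```

With `break_step=10`, the chartist is right 9 times and wrong once, while the hater is wrong 9 times and right once. A later break only widens the gap.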

Now of course most chartists (my...

Do we need to begin considering whether a re-think will be needed in the future with our relationships with AGI/ASI systems? At the moment we view them as tools/agents to do our bidding, and in the safety community there is deep concern/fear when models express a desire to remain online and avoid shutdown and take action accordingly. This is viewed as misaligned behaviour largely.

But what if an intrinsic part of creating true intelligence - that can understand context, see patterns, truly understand the significance of its actions in light of these insight...

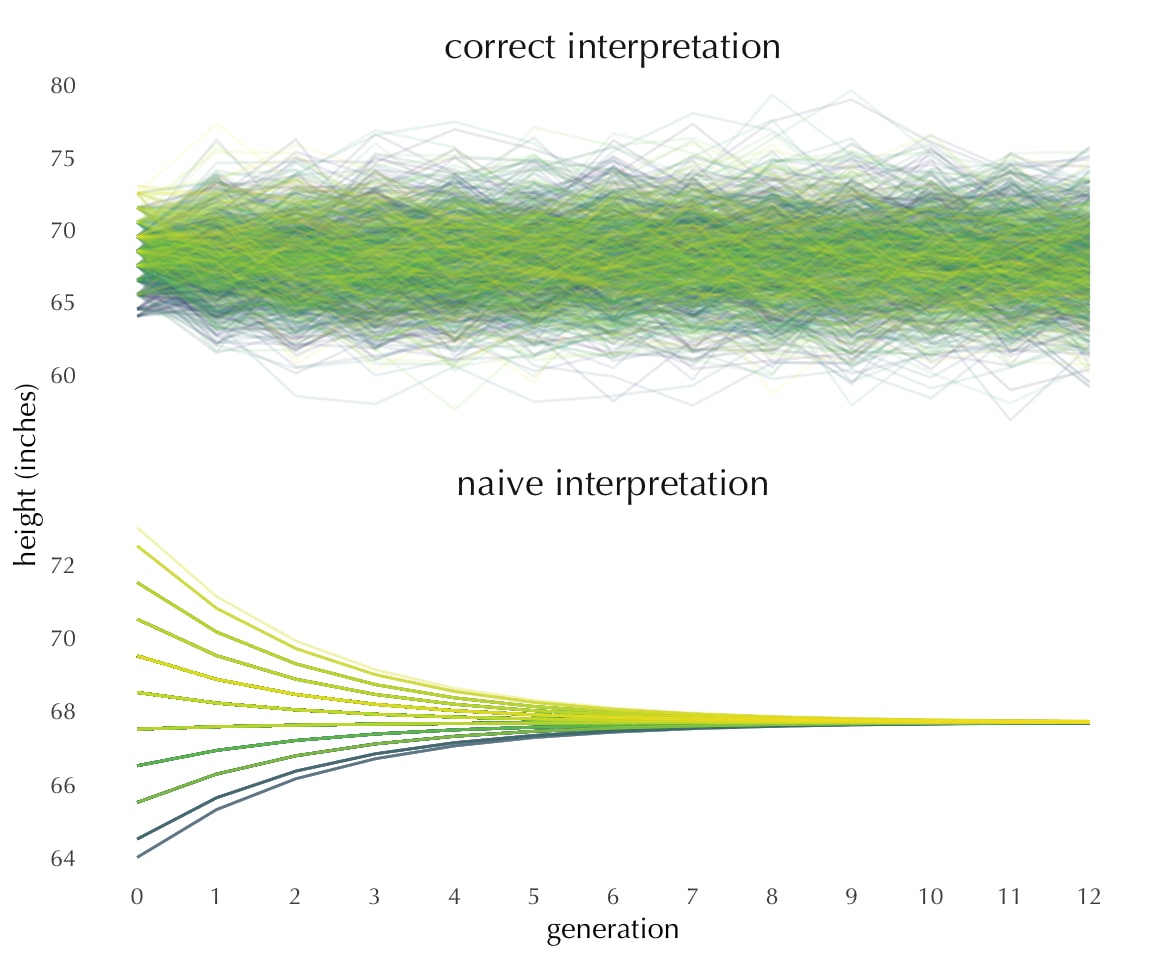

PSA: regression to the mean/mean reversion is a statistical artifact, not a causal mechanism.

So mean regression says that children of tall parents are likely to be shorter than their parents, but it also says parents of tall children are likely to be shorter than their children.

Put in a different way, mean regression goes in both directions.

This is well-understood enough here in principle, but imo enough people get this wrong in practice that the PSA is worthwhile nonetheless.
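A minimal simulation sketch of the "both directions" point, using made-up assumptions: heights are standardized (mean 0, SD 1), the parent-child correlation is 0.5, and "tall" means more than 1 SD above the mean. The function names and numbers are illustrative and do not come from any real dataset.

```python
import random
import statistics


def simulate_heights(n=50_000, r=0.5, seed=0):
    """Draw (parent, child) standardized heights with correlation r."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        parent = rng.gauss(0.0, 1.0)
        noise = rng.gauss(0.0, 1.0)
        # Child shares a fraction r of the parent's deviation, plus
        # independent noise scaled so the child also has variance 1.
        child = r * parent + (1 - r**2) ** 0.5 * noise
        pairs.append((parent, child))
    return pairs


def regression_both_ways(pairs, cutoff=1.0):
    """Compare group means when selecting on tall parents vs tall children."""
    tall_parents = [(p, c) for p, c in pairs if p > cutoff]
    tall_children = [(p, c) for p, c in pairs if c > cutoff]
    return {
        "tall_parents_mean_parent": statistics.fmean([p for p, _ in tall_parents]),
        "tall_parents_mean_child": statistics.fmean([c for _, c in tall_parents]),
        "tall_children_mean_child": statistics.fmean([c for _, c in tall_children]),
        "tall_children_mean_parent": statistics.fmean([p for p, _ in tall_children]),
    }


if __name__ == "__main__":
    for name, value in regression_both_ways(simulate_heights()).items():
        print(f"{name}: {value:.2f}")
```

Nothing in the generating process pulls anyone toward the mean over time. The setup is symmetric, and whichever group you select on looks more extreme than its relatives, simply because the correlation is below 1.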

Nice post on this, with code: https://acastroaraujo.github.io/blog/posts/2022-01-01-regression-to-the-mean/index.html

Andres pointed out a sad corollary downstream of people's misinterpretation of regression to the mean as indicating causality when there might be none. From Tversky & Kahneman (1982) via Andrew Gelman:

...We normally reinforce others when their behavior is good and punish them when their behavior is bad. By regression alone, therefore, they are most likely to improve after being punished and most likely to deteriorate after being rewar

We seem to be seeing some kind of vibe shift when it comes to AI.

What is less clear is whether this is a major vibe shift or a minor one.

If it's a major one, then we don't want to waste this opportunity (it wasn't clear immediately after the release of ChatGPT that it really was a limited window of opportunity and if we'd known, maybe we would have been able to leverage it better).

In any case, we should try not to waste this opportunity, if it happens to turn out to be a major vibe shift.

Me: "Well at least this study shows no association between painted houses and kids' blood lead levels. That's encouraging!"

Wife: "Nothing you have said this morning is encouraging Nick. Everything that I've heard tells me that our pots, our containers and half of our hut are slowly poisoning our baby"

Yikes touche...

(Context: we live in Northern Uganda)

Thanks @Lead Research for Action (LeRA) for this unsettling but excellently written report. Our house is full of aluminium pots and green plastic food containers. Now to figure out what to do about it!

https:/...

Sure – it's a good point about striking a balance between being willing to take action even with imperfect information, while also not wanting to overclaim. In that vein: We think that it may often be coming from lead chromate (high-lead plastics often also read high in chromium), which is a bright yellow-orange pigment, so most likely to be found in yellow/orange/green plastics; we saw it most in orange and bright green, which are both very popular colors in Malawi. We also saw high lead levels in at least one white plastic, which we suspect is comin...

TARA Round 1, 2026 — Last call: 9 Spots Remaining

We've accepted ~75 participants across 6 APAC cities for TARA's first round this year. Applications were meant to close in January, but we have room for 9 more people in select cities.

Open cities: Sydney, Melbourne, Brisbane, Manila, Tokyo & Singapore

If you're interested: → Apply by March 1 (AOE) → Attend the March 7 icebreaker → Week 1 begins March 14

TARA is a 14-week, part-time technical AI safety program delivering the ARENA curriculum through weekl...

This is more of a note for myself that I felt might resonate/help some other folks here...

For Better Thinking, Consider Doing Less

I am, like I believe many EAs are, a kind of obsessive, A-type, "high-achieving" person with 27 projects and 18 lines of thought on the go. My default position is usually "work very very hard to solve the problem."

And yet, some of my best, clearest thinking consistently comes when I back off and allow my brain far more space and downtime than feels comfortable, and I am yet again being reminded of that over the past couple of (d...

On alternative proteins: I think the EA community could aim to figure out how to turn animal farmers into winners if we succeed with alternative proteins. This seems to be one of the largest social risks, and it's probably something we should figure out before we scale alternative proteins a lot. Farmers are typically a small group but have strong lobbying power and public sympathy.

Is the recent partial lifting of US chip export controls on China (see e.g. here: https://thezvi.substack.com/p/selling-h200s-to-china-is-unwise) good or bad for humanity? I’ve seen many takes from people whose judgment I respect arguing that it is very bad, but their arguments, imho, just don’t make sense. What am I missing?

For transparency, I am neither Chinese nor American, nor am I a paid agent of them. I am not at all confident in this take, but imho someone should make it.

I see two possible scenarios: A) you are not sure how close humanity is to deve...

"But I think maybe the cruxiest bits are (a) I think export controls seem great in Plan B/C worlds, which seem much likelier than Plan A worlds, and (b) I think unilaterally easing export controls is unlikely to substantially affect the likelihood of Plan A happening (all else equal). It seems like you disagree with both, or at least with (b)?"

Yep, this is pretty close to my views. I do disagree with (b), since I am afraid that controls might poison the well for future Plan A negotiations. As for (a), I don’t get how controls help with Plan C, and I don’t ...

Why don’t EA chapters exist at very prestigious high schools (e.g., Stuyvesant, Exeter, etc.)?

It seems like a relatively low-cost intervention (especially compared to something like Atlas), and these schools produce unusually strong outcomes. There’s also probably less competition than at universities for building genuinely high-quality intellectual clubs (this could totally be wrong).

FWIW I went to the best (or second best lol) high school in Chicago, Northside, and tbh the kids at these top city high schools are of comparable talent to the kids at Northwestern, with a higher tail as well. Moreover, everyone has way more time and can actually chew on the ideas of EA. There was a Jewish org that sent an adult once a week with food and I pretty much went to all of them, even tho I would barely even self-identify as Jewish, because of the free food and somewhere to sit and chat about random stuff while I waited for basketball practice. ...

alignment is a conversation between developers and the broader field. all domains are conversations between decision-makers and everyone else:

“here are important considerations you might not have been taking into account. here is a normative prescription for you.”

“thanks — i had been considering that to 𝜀 extent. i will {implement it because x / not implement it because y / implement z instead}."

these are the two roles i perceive. how does one train oneself to be the best at either? sometimes, conversations at eag center around ‘how to get a job’, whereas i feel they ought to center around ‘how to make oneself significantly better than the second-best candidate’.

Recent generations of Claude seem better at understanding blog posts and making fairly subtle judgment calls than most smart humans. These days when I’d read an article that presumably sounds reasonable to most people but has what seems to me to be a glaring conceptual mistake, I can put it in Claude, ask it to identify the mistake, and more likely than not Claude would land on the same mistake as the one I identified.

I think before Opus 4 this was essentially impossible, Claude 3.xs can sometimes identify small errors but it’s a crapshoot on whether it ca...

Here’s a random org/project idea: hire full-time, thoughtful EA/AIS red teamers whose job is to seriously critique parts of the ecosystem — whether that’s the importance of certain interventions, movement culture, or philosophical assumptions. Think engaging with critics or adjacent thinkers (e.g., David Thorstad, Titotal, Tyler Cowen) and translating strong outside critiques into actionable internal feedback.

The key design feature would be incentives: instead of paying for generic criticism, red teamers receive rolling “finder’s fees” for critiques that a...

While I like the potential incentive alignment, I suspect finder’s fees are unworkable. It’s much easier to promise impartiality and fairness in a single game as opposed to an iterated one, and I suspect participants relying on the fees for income would become very sensitive to the nuances of previous decisions rather than the ultimate value of their critiques.

Ultimately, I don’t think there are many shortcuts in changing the philosophy of a movement. If something is worth challenging, then people strongly believe it and there will have to be a process of contested diffusion from the outside in. You can encourage this in individual cases, but systemizing it seems difficult.

EAGx and Summit events are coming up, and we're looking for organizers for more!

Applications for EAGxCDMX (Mexico City, 20–22 March), EAGxNordics (Stockholm, 24–26 April), and EAGxDC (Washington DC, 2–3 May) are all open! These will be the largest regional-focused events in their respective areas, and are aimed at serving those already engaged with EA or doing related professional work. EAGx events are networking-focused conferences designed to foster strong connections within their regional communities.

If you’d like to apply to join the organizing team fo...

"Most people make the mistake of generalizing from a single data point. Or at least, I do." - SA

When can you learn a lot from one data point? People, especially stats- or science-brained people, are often confused about this, and frequently give answers that (imo) are the opposite of useful. Eg they say that usually you can’t know much but if you know a lot about the meta-structure of your distribution (eg you’re interested in the mean of a distribution with low variance), sometimes a single data point can be a significant update.

This type of limited conc...

Also seems a bit misleading to count something like "one afternoon in Vietnam" or "first day at a new job" as a single data point when it's hundreds of them bundled together?

From an information-theoretic perspective, people almost never refer to a single data point as strictly as just one bit, so whether you are counting only one float in a database or a whole row in a structured database, or also a whole conversation, we're sort of negotiating price.

I think the "alien seeing a car" makes the case somewhat clearer. If you already have a deep model of ...

It is popular to hate on Swapcard, and yet Swapcard seems like the best available solution despite its flaws. Claude Code or other AI coding assistants are very good nowadays, and conceivably, someone could just Claude Code a better Swapcard that maintained feature parity while not having flaws.

Overall I'm guessing this would be too hard right now, but we do live in an age of mysteries and wonders. It gets easier every month. One reason for optimism is it seems like the Swapcard team is probably not focused on the somewhat odd use case of EAGs in general (...

There is however rising appetite for 1v1s. I just went to an online meeting about Skoll World Forum, which is probably the biggest NGO and Funder conference in the world. Both speakers emphasized 1v1s being the most important aspect, and advised only going to other sessions at times when 1v1s weren't booked.

So maybe the GHD ecosystem at least is waking up a bit...

I like Scott's Mistake Theory vs Conflict Theory framing, but I don't think this is a complete model of disagreements about policy, nor do I think the complete models of disagreement will look like more advanced versions of Mistake Theory + Conflict Theory.

To recap, here are my short summaries of the two theories:

Mistake Theory: I disagree with you because one or both of us are wrong about what we want, or how to achieve what we want.

Conflict Theory: I disagree with you because ultimately I want different things from you. The Marxists, who Scott was or...

The dynamics you discuss here follow pretty intuitively from the basic conflict/mistake paradigm.

I think it's very easy to believe that the natural extension of the conflicts/mistakes paradigm is that policy fights are composed of a linear combination of the two. Schelling's "rudimentary/obvious" idea, for example, that conflict and cooperation are often structurally inseparable, is a more subtle and powerful reorientation than it first seems.

But this is a hard point to discuss (because it's in the structure of an "unknown known"), and I didn't interview...

A bit sad to find out that Open Philanthropy’s (now Coefficient Giving) GCR Cause Prioritization team is no more.

I heard it was removed/restructured mid-2025. Seems like most of the people were distributed to other parts of the org. I don't think there were public announcements of this, though it is quite possible I missed something.

I imagine there must have been a bunch of other major changes around Coefficient that aren't yet well understood externally. This caught me a bit off guard.

There don't seem to be many active online artifa...