The original report can be found here - https://docs.google.com/document/d/1MLmzULVrksH8IwAmH7wBpOTyU_7BjBAVl6N63v2vvhM/edit?usp=sharing

Acknowledgements

I thank Iyngkarran Kumar, Oliver Guest, Holly Elmore, Lucy Farnik, Aidan Ewart, and Robert Reason for their discussions, pushback and feedback on this report. Any errors present are my sole responsibility. Cover image created by DALL-E 2.

This work was done under the Bristol AI Safety Centre (BASC).

Short Summary

This report aims to develop a forecast for an open question from the analysis ‘Prospects for AI safety agreements between countries’ (Guest, 2023): is there sufficient time to have a ‘risk awareness moment’ in both the US (along with its allies) and China before an international AI safety agreement can no longer meaningfully reduce extinction risks from AI?

Bottom line: My overall estimate/best guess is that there is at least a 40% chance there will be adequate time to implement a CHARTS agreement (the international AI safety agreement proposed by Guest, 2023) before it ceases to be relevant.

How much does public sentiment matter?

This report focuses solely on forecasting ‘risk awareness moments’ (RAMs) related to advanced AI amongst policy elites in the United States and China. It does not consider RAMs amongst the general public.

Some evidence suggests public opinion has limited direct influence on policy outcomes in America. One influential study found average citizens’ policy preferences had a “near-zero” impact when controlling for elites’ views. However, this finding remains debated, and the interplay between public attitudes and policy action is unclear. Given the lack of consensus, this report excludes general public sentiment.

In China’s authoritarian context, public opinion may drive some policy changes, often through concerns about regime legitimacy. However, available survey data provides little insight into Chinese public views on AI risks specifically.

Thus, this report will estimate RAM timelines considering only dynamics among governmental and technical elites shaping AI policy in both nations. Broader public risk awareness is complex to forecast and excluded from this report, given minimal directly relevant polling. The tentative focus is predicting when heightened concern about advanced AI risks emerges among key policymakers.

What is the likelihood that we have a RAM amongst policy elites in the US and China?

This report considers two scenarios for whether a sufficient "risk awareness moment" (RAM) regarding catastrophic risks from advanced AI has already occurred or may transpire in the near future among US and Chinese policy elites.

There is some evidence a RAM may be occurring now, like AI leaders voicing concern before Congress and China enacting regulations on harms from algorithms. However, factors like a lack of political will for extreme precautions in the US and incentives to compete in AI in China weigh against having already crossed the RAM threshold. I estimate a 44% chance of a current US RAM and a 57% chance for China.

Alternatively, a RAM could emerge within 2-3 years as more policymakers directly become aware of AI existential risks, "warning shot" incidents demonstrate concerning AI behaviours and major capability leaps occur. Reasons against this include AI safety becoming a partisan issue in the US and perceived benefits outweighing extinction risks. There seems to be a 60% chance of a RAM by 2026 under this scenario.

Conditional on there being a risk awareness moment (RAM), how long would negotiations for an AI safety agreement take, on average?

This report will rely on Oliver Guest's forecast that such an agreement could be negotiated approximately four years after a RAM among policy elites in the US and China (Guest, 2023).

As an initial estimate, I expect negotiations could conclude within 1-2 years of a RAM, especially if details are sorted out after an initial agreement in principle. However, Oliver Guest has conducted extensive research on timelines for this specific context. Oliver’s 4-year estimate seems a reasonable preliminary guideline to follow.

How long does it take for an agreement to come into force and then the actual regulations to take place?

Oliver estimates a 70% chance that if an AI development oversight agreement is reached, it could come into legal force within six months. However, uncertainty remains around timelines to establish verification provisions in practice once an agreement is enacted.

Previous arms control treaties have seen verification bodies created rapidly, like the OPCW for the Chemical Weapons Convention. However, the first on-site inspections under new AI oversight could still take around six months as rules are interpreted and initial audits arranged.

Some factors like voluntary industry collaboration enabling monitoring ahead of mandates may help accelerate subsequent oversight implementation. With preparation, the timeline from enactment to proper enforcement of oversight through an agreement like CHARTS could potentially be shortened to 1-3 months.

How long will CHARTS remain a useful agreement to push for?

This report examines when negotiating agreements like CHARTS to reduce AI risks could become infeasible before a definitive "point of no return" is reached. Three scenarios could make CHARTS obsolete:

- AI surpasses hardware limitations and becomes unconstrained by available computation. I estimate a 20-40% chance of this occurring around the emergence of transformative AI (TAI), which would render chip supply controls pointless.

- Rapid TAI political takeover leaving humanity unable to regulate AI's growth. I expect a >80% chance of this within one year of TAI.

- Irreversible entanglement with increasingly capable AI systems, which could happen ~2 years pre-TAI. I estimate a 60-80% chance of this.

1. Key terms

1.1 What is Transformative AI?

This report defines "transformative artificial intelligence" (TAI) as computer software possessing sufficient intelligence that its widespread deployment would profoundly transform the world, accelerating economic growth on the scale of the Industrial Revolution. Specifically, TAI refers to software that could drive global economic growth from ~2-3% currently to over 20% per year if utilised in all applications where it would add economic value. This implies the world economy would more than double within four years of TAI's emergence (Cotra, 2020).
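As a quick check of the doubling claim, the sketch below simply compounds the 20% lower bound over four years (the comparison with a ~3% baseline is added for illustration):

$$
(1 + 0.20)^4 = 1.2^4 \approx 2.07 > 2, \qquad \text{doubling time} = \frac{\ln 2}{\ln 1.2} \approx 3.8 \text{ years},
$$

whereas at today's ~3% growth the same four years give only $1.03^4 \approx 1.13$.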

1.2 What is a risk awareness moment?

This report employs the concept of a 'risk awareness moment' or RAM as conceptualised by Guest (2023), who defines a RAM as "a point in time, after which concern about extreme risks from AI is so high among the relevant audiences that extreme measures to reduce these risks become possible, though not inevitable."

In other words, a RAM refers to a threshold point where concern about catastrophic or existential threats from advanced AI becomes widespread enough to potentially motivate major policy changes or interventions to reduce these risks. This does not mean such actions become guaranteed after a RAM, but they shift from inconceivable to plausibly on the table for consideration. This conception of the RAM concept informs the present analysis regarding the likelihood that such a threshold may be reached.

1.3 What type of international safety agreement?

Oliver Guest’s report (2023) describes a proposed agreement called the 'Collaborative Handling of Artificial Intelligence Risks with Training Standards' (CHARTS). The key features of CHARTS are:

- Prohibiting governments and companies from performing large-scale training runs that are deemed likely to produce powerful but misaligned AI systems. The riskiness of training runs would be determined based on proxies such as total training compute usage or the use of techniques like reinforcement learning.

- Requiring extensive verification of compliance through mechanisms like on-chip activity logging, on-site inspections of data centres, and a degree of mechanistic interpretability.

- Cooperating to prevent exports of AI-relevant compute hardware to non-member countries to avoid dangerous training runs being conducted in non-participating jurisdictions.

This report does not directly evaluate whether the proposed CHARTS agreement would successfully address key governance challenges surrounding transformative AI or appraise its specific strengths and limitations. At first glance, the agreement’s core features appear potentially advantageous for reducing extinction risks from artificial intelligence in the near term. One perspective is that if the agreement enabled an independent governing body to effectively (a) ensure chip manufacturers implement chips capable of recording and storing risky or large-scale training runs, (b) impose sanctions for unauthorised risky training, (c) enforce limits on compute provision to individual labs or governments, and (d) proactively fulfil its oversight duties, I estimate a 75% probability (confidence: medium) that this could significantly delay timelines for transformative AI.

However, fully evaluating the proposal's merits and weaknesses would necessitate a more in-depth analysis, which I may undertake eventually.

2. How much does public sentiment matter?

This report's tentative approach will solely focus on forecasting ‘risk awareness moments’ (RAMs) occurring amongst policy elites in the United States and China. It will not consider RAMs amongst the general public.

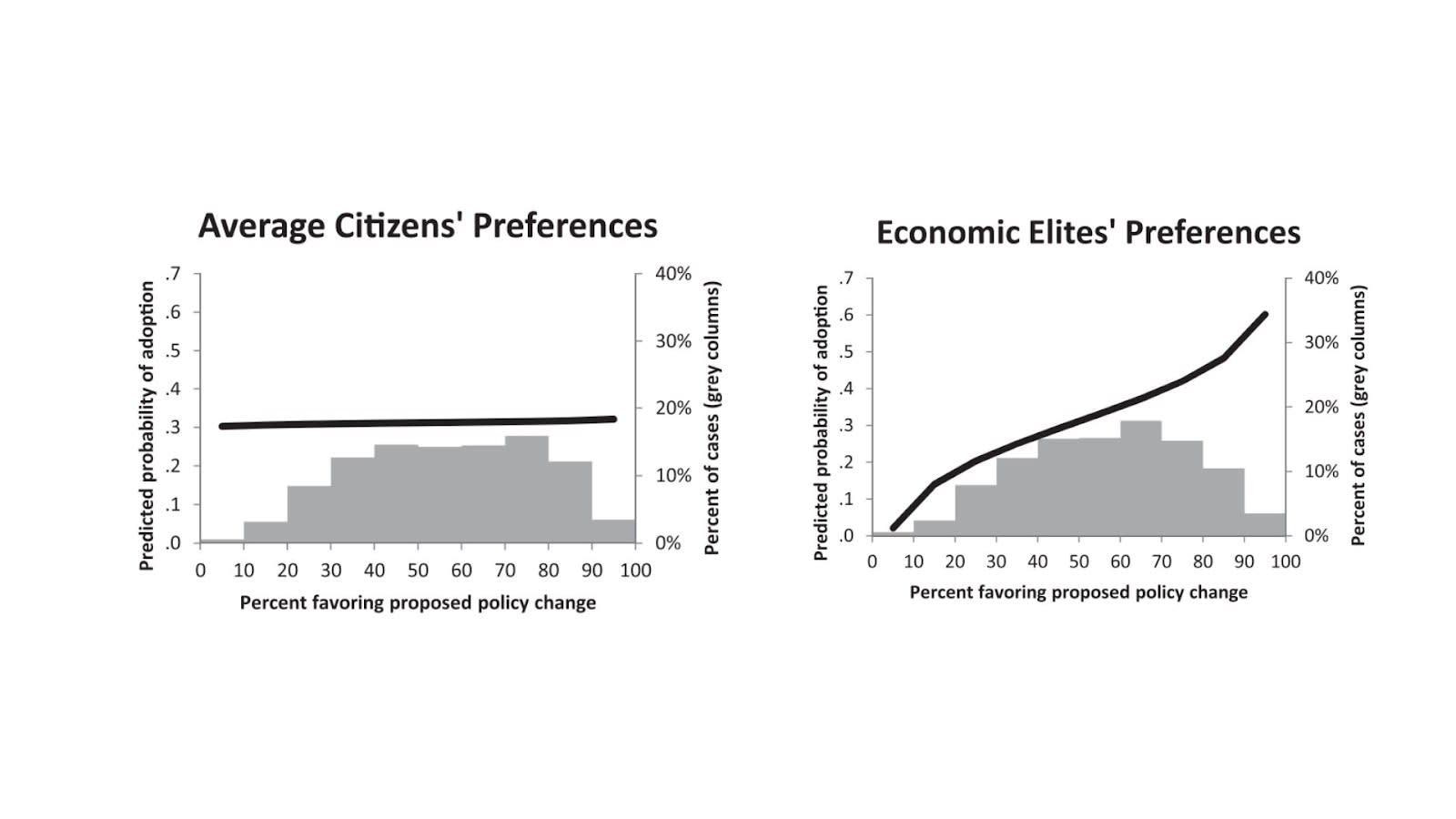

Some empirical evidence challenges the notion that broad public sentiment substantially influences actual policy outcomes in America. A widely-discussed study analysing 1,779 policy issues found that the preferences of average citizens had minimal independent impact on policy when controlling for the preferences of economic elites and business interest groups. The study concluded that average citizens' preferences had a "near-zero, statistically non-significant" effect on policy outcomes (Gilens & Page, 2014, p. 575).

However, the finding that public opinion has little influence on US policymaking has been critiqued. Re-analysis of the original data showed that on issues where preferences diverge, policy outcomes largely reflect middle-class preferences, not just elites. Another re-examination found enactment rates are similar when either median-income Americans or elites strongly support a policy change (Enns, 2015; Bashir, 2015). The complex interplay between public views and resultant policy action remains an open question, with analyses arriving at differing conclusions. Given the significant unknowns and lack of consensus surrounding public opinion's policy impact, this report will refrain from considering it as a factor.

Figure 1. From Gilens & Page (2014).

What about China? Contrary to initial assumptions that public opinion may play a minimal role in policy decisions under China's authoritarian setup, some evidence suggests it does impact policy to a degree, often through concerns about regime legitimacy. In authoritarian contexts like China's, procedural legitimacy derived from democratic processes is weak, amplifying the regime's need for performance legitimacy based on popular living standards (Li, 2022).

Prior research indicates China's government actively tracks and responds to public opinion, particularly online sentiment, due to its relevance for performance legitimacy. However, divergences can emerge between state narratives and evolving public attitudes. For example, Chinese public opinion towards the United States deteriorated dramatically from 2016 to 2020. This shift likely reflects reactions to external events rather than just alignment with state propaganda. Further evidence of divergent public attitudes is that negative views towards Australia and the UK were lower than towards the US around this time, despite China’s tensions with those countries (Fang et al., 2022, p. 43).

Rigorous public polling on AI risks is unfortunately scarce in China. One 2021 survey showed that 56% of Chinese respondents viewed AI as ‘beneficial’ and only 13% as ‘harmful’. ‘Harmful’ here did not appear to cover risks of human extinction (The Lloyd's Register Foundation, 2021). This single data point seems too thin to warrant much weight.

Given the lack of data on public views specifically regarding AI risks, this report will not consider the likelihood of a general RAM in China. It will focus solely on policy elites.

3. What is the likelihood that we have a RAM amongst policy elites in the US and China?

For a CHARTS agreement to come into place, both the US and China must experience a RAM. I can imagine two scenarios bearing on this question:

3.1 We might be experiencing a RAM right now.

Let us consider whether the world has already reached a risk awareness moment (RAM) regarding extreme risks from advanced AI systems.

United States

Several recent developments provide evidence that we may currently be experiencing a RAM:

- Powerful new LLM systems like GPT-4 and Claude are raising concerns among members of Congress and other legislators about existential risks from such systems.

- Respected leaders in the field of AI, like Geoffrey Hinton (New York Times, 2023) and Max Tegmark (Wall Street Journal, 2023), have publicly expressed worries about existential threats, which appear to be holding sway over US legislators.

- Heads of AI labs like Dario Amodei (Washington Post, 2023) and Sam Altman (New York Times, 2023) have expressed concerns before a Senate committee.

- [Minor] The ‘Statement on AI Risk’ (Center for AI Safety, 2023) helped draw significant attention to AI safety.

- More senators seem to be voicing their concerns about risks from AI (Bloomberg, 2023).

- [Minor] The prospect of American jobs being automated away by LLMs may spur willingness to implement regulations.

However, some reasons against having reached a sufficient RAM threshold:

- Concern may not be sufficiently high about worst-case AI risks like human extinction.

- There is not enough political willingness for more extreme precautionary measures, such as those proposed in CHARTS.

- Scepticism likely remains about AI existential risks due to their unintuitive nature.

- On the other side of Hinton and Tegmark, figures like Yann LeCun are far less convinced by the idea of existential risks from AI (Munk Debate, 2023).

- We may be overoptimistic or naive in expecting US politicians, on both the Republican and Democratic sides, to perceive risks from AI rationally.

Given all of this, I think there is a 44% chance that a RAM is occurring amongst policy elites in the United States at the moment (CI: 35% to 53%).

You can play around with the Guesstimate model here [Link] and place different weights on my listed considerations.

China

There are some indications that a risk awareness moment regarding AI may be emerging among Chinese policy elites:

- A Chinese diplomat, Zhang Jun, gave a UN speech emphasising human control over AI and establishing risk response mechanisms. This suggests awareness and concern about AI risks among Chinese foreign policy officials (Concordia AI, 2023).

- Expert advisor Zeng Yi separately warned the UN about existential risks from advanced AI. As a key advisor to China's Ministry of Science and Technology, this likely reflects awareness among influential scientific experts advising the government (ibid).

- China has already enacted vertical regulations targeting algorithm harms like misinformation, demonstrating a willingness to constrain perceived AI risks (Carnegie Endowment for International Peace, 2023).

- The ‘Interim Measures on Generative AI’ can be read here [Link].

- It might also be the case that China's restrictions aimed at ensuring LLMs do not generate content against state interests could end up slowing down Chinese AI lab capabilities.

- As a totalitarian regime, China may have a lower threshold to justify new regulations constraining AI systems, unlike the US, where policymakers may be more heavily influenced by industry lobbying.

Factors potentially weighing against a sufficient RAM threshold being reached currently:

- China still lags behind leading Western nations in advanced AI capabilities (Foreign Affairs, 2023). This may reduce incentives to prioritise speculative future risks over near-term technological competitiveness.

- There may not yet be enough concern specifically about catastrophic or existential AI risks among Chinese officials, even if general AI risks are increasingly discussed.

- China may have incentives to avoid externally imposed regulations on its domestic chip production capabilities, especially regulations that enable oversight by foreign entities. Specifically, China may want to keep its domestic chip fabrication unconstrained in order to pursue strategic interests such as a potential invasion of Taiwan, which is a dominant player in cutting-edge semiconductor manufacturing.

Given all of this, I think there is a 57% chance that a RAM is occurring amongst policy elites in China at the moment (CI: 45% to 68%).

Once again, you can play around with this forecast in Guesstimate [Link].

3.2 A RAM might be two or three years away.

Another kind of world I will briefly consider is one where we are two or three years from a ‘risk awareness moment’. Concerns about human extinction from advanced AI may not yet be salient enough among policymakers to constitute a RAM. AI safety is a relatively novel issue that is not yet well understood, which likely prevents the sense of urgency needed to prompt major interventions.

The composition of US policy elites and those surrounding them will likely change to include more people who know about AI safety. As AI capabilities continue advancing and AI safety advocates enter governmental positions (maybe through different fellowships being offered [Link]) and climb the ladder, I estimate a 60% probability that a RAM will occur by 2026 (CI: 48% to 72%) through pathways such as the following:

- In the US, senior legislators are directly advised about AI existential risks and have vivid intuitions about extinction pathways.

- Serious incidents (‘warning shots’ (Kokotajlo, 2020)) clearly demonstrate AI systems gaining agency and being able to cause harm or deceive humans (for example, further incidents like those ARC Evals has published (Alignment Research Centre, 2023), or agentic systems like AutoGPT).

- Major capability leaps occur, such as the release of GPT-5 or GPT-6, or a more powerful LLM from another AI lab.

- More influential figures endorse calls for urgent measures like moratoriums on advanced AI.

Some of these trends could spur a RAM in China on a similar timescale.

Reasons against this intuition:

- AI safety becomes a partisan issue, delaying a RAM.

- Building TAI is seen as so valuable that even if there is a non-zero chance of extinction, governments will still push hard to build it. An analogy can be drawn to the Trinity test, which went ahead despite earlier concerns that a nuclear detonation might ignite the atmosphere.

Play around with the Guesstimate here [Link].

4. Conditional on there being a risk awareness moment (RAM), how long would negotiations for an AI safety agreement take, on average?

This report will rely on Oliver Guest's forecast that an agreement between the United States (and its allies) and China limiting advanced AI development could be negotiated approximately four years after a RAM occurs (Guest, 2023).

As an initial estimate, I would expect countries to act more quickly to establish an agreement if concerns about potentially catastrophic AI risks reach the RAM threshold, perhaps reaching an agreement within 1-2 years. There also appears to be a possibility of an initial agreement being made in principle within this accelerated timeframe, with details sorted out later.

However, Oliver Guest has conducted more extensive research specifically focused on estimating timelines for reaching a CHARTS agreement. Given this, Oliver’s estimate of approximately four years post-RAM seems a reasonable preliminary estimate to rely on. This will remain open to updating if additional evidence emerges suggesting faster or slower timelines are more probable after a RAM takes place.

5. How long does it take for an agreement to come into force and then the actual regulations to take place?

Oliver estimates a 70% chance that, if an international agreement is reached, it would come into force within six months of negotiations concluding. However, less information is provided on timelines before key oversight provisions are established in practice.

For instance, implementing verification of chip manufacturers producing chips capable of recording large training runs would require creating a body able to externally confirm this manufacturing is occurring and that has methods for documenting and storing records of production. How long starting up and initiating enforcement from such an oversight organisation might take is uncertain.

Certain international agreements have seen verification bodies and activities commence almost immediately after signing or entry into force. The Chemical Weapons Convention (CWC) was signed in January 1993 and entered into force in April 1997. The Organisation for the Prohibition of Chemical Weapons (OPCW), tasked with verifying CWC compliance, began verification work soon after the treaty took effect (OPCW). Several other verification bodies follow a similar pattern. The Comprehensive Nuclear-Test-Ban Treaty (CTBT) was signed in 1996, and the CTBTO Preparatory Commission, which is building the treaty's verification regime, was established the same year, even though the CTBT itself has not yet entered into force (UNODA). The Treaty on Open Skies was signed in 1992, and the Open Skies Consultative Commission was established the same year (Federation of American Scientists).

However, the establishment of a verification body does not necessarily mean verification systems would be operational immediately. Based on intuition, the first audit of relevant facilities could take approximately six months to occur after such a body is set up, as creating a new organisation to independently verify labs and states, getting access, and adapting hardware would involve months of preparation and likely roadblocks.

It is also worth noting that a verification body can predate an agreement: the International Atomic Energy Agency was established over a decade before the Treaty on the Non-Proliferation of Nuclear Weapons (IAEA). Voluntary industry collaboration could potentially help expedite subsequent government oversight by enabling tracking ahead of regulations. For instance, NVIDIA could opt to voluntarily enable compute monitoring before mandates. Early multi-stakeholder standards bodies and best practices could also ease eventual compliance, though regulations would likely still be required for comprehensive verification.

Overall, while these precedents show that verification can begin promptly after agreements are enacted, my rough estimate is that fully implementing oversight in practice could still take 6-12 months. Industry goodwill and preparatory work may help accelerate enforcement once formal regulations are in place, potentially shortening the time to begin proper enforcement through CHARTS to 1-3 months.
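To make the cumulative timeline from sections 4 and 5 explicit, the stages can be summed as below. This is a minimal sketch, not the author's model: the stage ranges are taken from the estimates above, while treating Guest's ~4-year negotiation estimate as a fixed value and reading "within six months" as a 0-6 month range are assumptions of the sketch.

```python
from typing import Tuple

# Stage durations in months as (low, high) ranges, taken from sections 4 and 5.
# Assumption: Guest's ~4-year negotiation estimate is treated as a fixed value.
NEGOTIATION = (48, 48)       # ~4 years from RAM to agreement (Guest, 2023)
ENTRY_INTO_FORCE = (0, 6)    # "within six months" (70% chance per Guest)
OVERSIGHT = {
    "baseline": (6, 12),     # fully implementing oversight without preparation
    "prepared": (1, 3),      # with industry goodwill and preparatory work
}


def months_to_enforcement(scenario: str) -> Tuple[int, int]:
    """Return (low, high) months from a RAM to working CHARTS enforcement."""
    stages = [NEGOTIATION, ENTRY_INTO_FORCE, OVERSIGHT[scenario]]
    low = sum(stage[0] for stage in stages)
    high = sum(stage[1] for stage in stages)
    return low, high


if __name__ == "__main__":
    for scenario in OVERSIGHT:
        low, high = months_to_enforcement(scenario)
        print(f"{scenario}: {low}-{high} months ({low / 12:.1f}-{high / 12:.1f} years)")
```

Under these assumptions, the baseline path sums to 54-66 months (roughly four and a half to five and a half years after a RAM), and preparatory work trims this to 49-57 months. Negotiation time dominates either way.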

6. How long will CHARTS remain a useful agreement to push for?

The initial open question posed by Oliver centred on determining timelines for a "point of no return" (PONR), defined as when humanity loses the ability to meaningfully reduce risks from advanced AI systems (Kokotajlo, 2020). It seems plausible that negotiating a CHARTS agreement or other international AI cooperation could become infeasible even before a definitive PONR is reached. At the same time, some risk reduction levers may remain viable for a period after that point. A better framing may be to ask when pursuing a CHARTS agreement stops being useful.

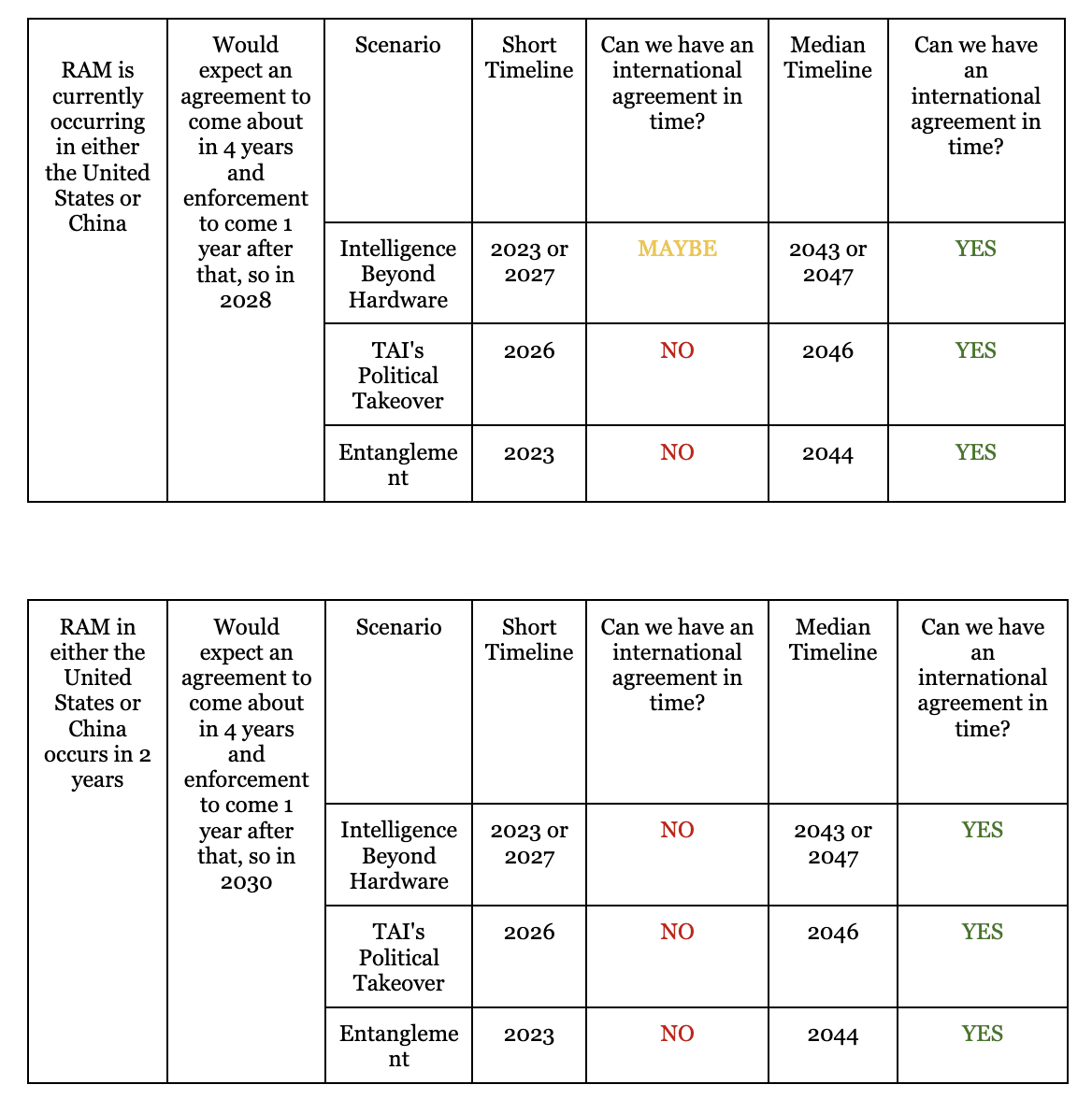

Three potential scenarios could prevent a CHARTS agreement from being useful:[1]

- Intelligence Beyond Hardware: AI evolves such that its capabilities surpass hardware limitations. One pathway is an AI system finding efficiency improvements so large that its capabilities are no longer determined by available computation. Controlling chip manufacturers or regulating compute through CHARTS would then become irrelevant. I estimate the likelihood of this occurring before the other scenarios to be low, around 20-40%, as I expect producing such systems will still require massive quantities of chips, keeping chip regulation a viable deterrent. If this did occur, I would expect it within roughly two years either side of the onset of transformative AI (TAI).

- TAI's Political Takeover: Within a short timeframe, misaligned and power-driven TAI infiltrates and dominates political systems. This rapid dominance leaves humanity unable to regulate or control AI's growth, exacerbating existential risks. I would expect a political takeover within one year of TAI's emergence.

- Irreversible Entanglement: The global economy becomes intricately intertwined with AI such that disentangling or regulating AI poses substantial economic risks. I place a high chance (60-80%) on this irreversible entanglement happening before TAI, as increasingly intelligent systems are embedded throughout society. I estimate this could occur around two years pre-TAI as highly capable, but not yet transformative, AI proliferates.

Predicting when any of these scenarios may occur seems highly challenging. The estimates provided are my best guesses, given current knowledge.

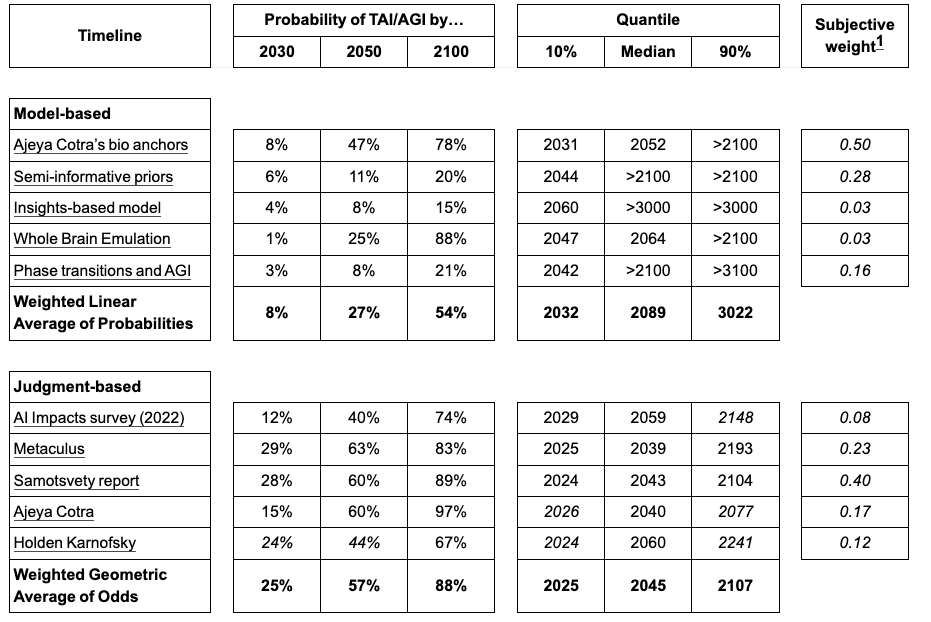

A recent literature review on TAI timelines (Wynroe et al., 2023) found the 10th, 50th, and 90th percentile estimates for TAI emergence were 2025, 2045, and post-2070.[2] This report does not consider the long timelines at the end of that distribution, given the speculation involved.

Figure 2. TAI Timelines aggregated. From Wynroe et al. (2023).

7. Will there be time to implement an agreement before CHARTS is no longer useful?

With the considerations above, my overall estimate/best guess is that there is at least a 40% chance there will be adequate time to implement a CHARTS agreement before it ceases to be relevant. I am sceptical that we are in a short-timeline world for transformative AI, and I tentatively believe median timeline scenarios are more likely. Given this, it seems plausible that CHARTS could be negotiated and enforced before becoming obsolete.[3]
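The reasoning about short versus median timelines can be illustrated with a simple deterministic check. This is a sketch under stated assumptions, not a reproduction of the 40% estimate. It assumes the earliest obsolescence point is irreversible entanglement ~2 years before TAI, uses the upper end of the RAM-to-enforcement lag (4 years + 6 months + 12 months), and treats "a RAM now" as 2023 (a hypothetical reading of the report's date). The TAI years are the 10th and 50th percentiles from Wynroe et al. (2023).

```python
# Assumed earliest point at which CHARTS stops being useful, relative to TAI:
# "irreversible entanglement" roughly 2 years before TAI (section 6).
OBSOLESCENCE_OFFSET_YEARS = -2.0

# Assumed upper end of the RAM-to-enforcement lag: ~4 years of negotiation,
# up to 6 months to entry into force, up to 12 months to working oversight.
LAG_YEARS = 4.0 + 0.5 + 1.0


def enforced_in_time(ram_year: float, tai_year: float, lag_years: float = LAG_YEARS) -> bool:
    """True if CHARTS enforcement would begin no later than the assumed deadline."""
    enforcement_year = ram_year + lag_years
    deadline = tai_year + OBSOLESCENCE_OFFSET_YEARS
    return enforcement_year <= deadline


def latest_viable_ram(tai_year: float, lag_years: float = LAG_YEARS) -> float:
    """Latest RAM year that still leaves time to enforce CHARTS before the deadline."""
    return tai_year + OBSOLESCENCE_OFFSET_YEARS - lag_years


if __name__ == "__main__":
    # RAM years: a hypothetical RAM now (2023) or a RAM by 2026 (section 3).
    for tai_year in (2025, 2045):
        for ram_year in (2023, 2026):
            print(f"TAI {tai_year}, RAM {ram_year}: in time = {enforced_in_time(ram_year, tai_year)}")
        print(f"  latest viable RAM for TAI {tai_year}: {latest_viable_ram(tai_year):.1f}")
```

In a short-timeline world (TAI around 2025), no plausible RAM year leaves enough time. At the median (TAI around 2045), a RAM as late as the late 2030s would still suffice. The overall probability therefore hinges largely on how much weight one places on short timelines.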

However, I am less certain whether CHARTS specifically would be the most beneficial agreement to prioritise in the long run. Regulating computation could prove valuable in the near term, but more promising opportunities for cooperation may emerge that we would not want to inadvertently impede by over-focusing on CHARTS.

Bibliography

Can be found in the original report.

[1] Of course, these are far from the only scenarios that could play out, and there is a lot of speculation on my part as to their likelihood.

[2] Note that this does not consider the newer report ‘What a compute-centric framework says about takeoff speeds’ (Davidson, 2023).

[3] This needs an explanation that I have omitted here. I hope to write something about my own timelines soon.